How to improve container observability with a service mesh

Observability is a measure of how well you can infer a system's internal state from its external outputs.

In control theory, the observability and controllability of a linear system are mathematical duals: results about one translate directly into results about the other. In Kubernetes, the platform's basic control facilities can help you determine certain states (readiness, health, and so on) of your workloads, which lets you act on the collected data to bring a workload to its declared desired state. However, these tools are narrowly focused.

To achieve a higher level of deterministic and declarative state control and management, you need a higher volume, variety, and velocity of data representing the workload and platform throughout their lifecycle. This information feeds an analytical engine that can estimate or derive different state characteristics of the platform. In Kubernetes, workload and platform data comes as logs, metrics, traces, and so on. These are the external outputs from which you infer internal state and answer the key question: Are the workloads performing as expected compared to the declared state?

To implement better observability and workload management for telecom using service mesh, it's important to look at the fine details of Kubernetes networking, including pods, namespaces, processes, and the use of iptables. This information helps you understand your telco workload to optimize it for observability and management.

What is a service mesh?

A Kubernetes Service allows different applications to interact without tight coupling. For example, Application-A can interact with Application-B over Application-B's Service, where Service represents the interaction boundaries.

In the typical Kubernetes workload blueprint, multiple services talk to each other and deliver a complete solution that serves the end consumer. A consumer can be a person or another system (as with machine-to-machine communication). A service mesh exists to observe how well this grid of services performs and to manage it. Istio is a popular open source, platform-independent service mesh.

Container communication

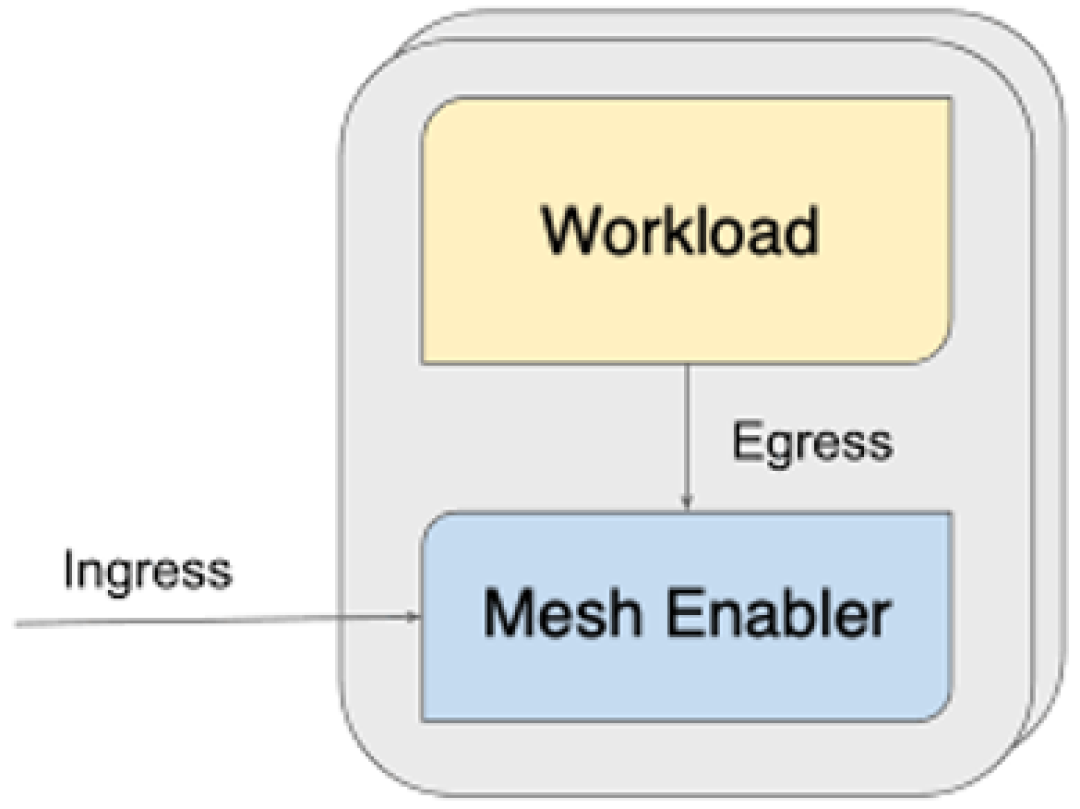

With a service mesh enabled, a pod might hold two containers: your application container and a sidecar container. A pod in Kubernetes is a sandbox built on Linux namespaces that can hold multiple, logically grouped containers sharing infrastructure (for example, networking).

Containers inside a pod have different mount points (they have unique root filesystems) and Unix Time-Sharing (UTS) namespaces; however, they can share other namespaces, which allows them to see each other's processes. Because they share the same network namespace, all containers in a pod have the same Internet Protocol (IP) address. Istio service mesh sets up iptables rules inside this shared network namespace to intercept traffic dynamically.
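One way to check which namespaces two containers actually share is to compare the namespace links Linux exposes under /proc/<pid>/ns. The sketch below is illustrative only: the identifiers in the example are made up, and the helper names are hypothetical rather than part of any Kubernetes tooling.

```python
import os

# A subset of the namespace types exposed under /proc/<pid>/ns on Linux.
NAMESPACE_TYPES = ("net", "ipc", "pid", "mnt")


def read_namespaces(pid):
    """Return {namespace type: identifier} for a process, read from /proc.

    Each entry under /proc/<pid>/ns is a symlink whose target looks like
    'net:[4026531992]'. Two processes share a namespace when the targets match.
    """
    return {t: os.readlink(f"/proc/{pid}/ns/{t}") for t in NAMESPACE_TYPES}


def shared_namespaces(ns_a, ns_b):
    """Return the sorted namespace types whose identifiers match in both maps."""
    return sorted(t for t in ns_a if t in ns_b and ns_a[t] == ns_b[t])


# Hypothetical identifiers for an application container and its sidecar.
app = {"net": "net:[4026532001]", "pid": "pid:[4026532012]",
       "mnt": "mnt:[4026532011]"}
sidecar = {"net": "net:[4026532001]", "pid": "pid:[4026532022]",
           "mnt": "mnt:[4026532021]"}

print(shared_namespaces(app, sidecar))  # prints ['net']
```

On a Linux node, you could pass the result of read_namespaces() for each container's main process instead of the hard-coded maps. Reading another process's namespace links usually requires elevated privileges.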

Multus CNI

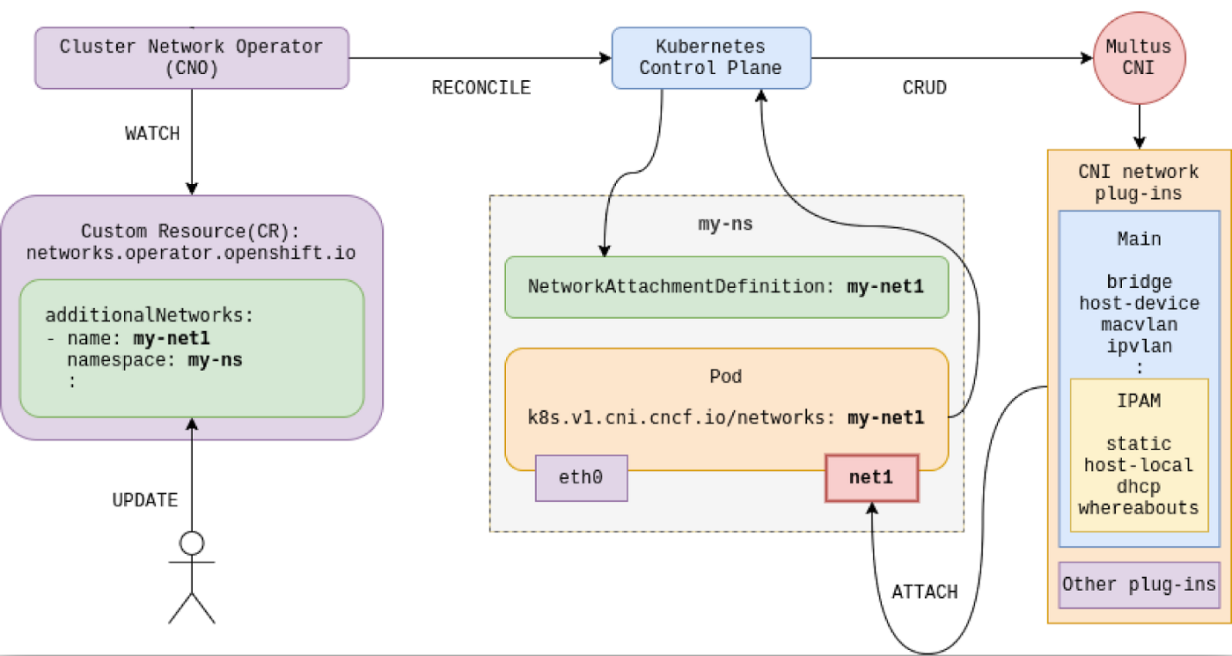

In the original Kubernetes network fabric design, workloads were expected to interact with each other through a single network interface. However, the inherent complexity of the telecom solution stacks defined by ETSI, 3GPP, and other standards bodies demands multiple interfaces in and out of each container or pod. The Multus Container Network Interface (CNI) was developed to address this. If you're not familiar with CNIs, read Kedar Vijay Kulkarni's A brief overview of the Container Network Interface (CNI) in Kubernetes.

Multus CNI can create and use multiple interfaces within Kubernetes pods. With Multus CNI and the NetworkAttachmentDefinition specification, the original network interface (typically, eth0) remains in place as the default network, providing the pod-to-pod connectivity required by the Kubernetes specification.

What is the CNI specification?

The CNI specification provides a pluggable application programming interface (API) to configure network interfaces in Linux containers. Multus CNI is such a plugin and is also considered a meta plugin: a CNI plugin that can run other CNI plugins. It works like a wrapper that calls other CNI plugins for attaching multiple network interfaces to pods.
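To make the wrapper idea concrete, here is a conceptual sketch of how a meta plugin could plan one ADD call per delegate plugin. It is not Multus source code. The CNI_* environment variable names come from the CNI specification, and net1, net2, and so on follow the naming Multus gives additional interfaces; the function name, container ID, namespace path, and network configs are assumptions for illustration.

```python
import json


def plan_delegate_calls(delegates, container_id, netns_path):
    """Build one ADD invocation per delegate plugin config.

    The first delegate keeps the default interface name (eth0); the rest
    get net1, net2, and so on.
    """
    calls = []
    for index, config in enumerate(delegates):
        ifname = "eth0" if index == 0 else f"net{index}"
        calls.append({
            "plugin": config["type"],  # name of the plugin binary to execute
            "env": {
                "CNI_COMMAND": "ADD",
                "CNI_CONTAINERID": container_id,
                "CNI_NETNS": netns_path,
                "CNI_IFNAME": ifname,
            },
            "stdin": json.dumps(config),  # network config is passed on stdin
        })
    return calls


# Hypothetical delegate configs: a default network plus one extra attachment.
delegates = [
    {"cniVersion": "0.3.1", "name": "cluster-default", "type": "bridge"},
    {"cniVersion": "0.3.1", "name": "signaling", "type": "macvlan"},
]

for call in plan_delegate_calls(delegates, "example-id", "/var/run/netns/example"):
    print(call["plugin"], call["env"]["CNI_IFNAME"])
```

The sketch only plans the calls. A real meta plugin would execute each delegate binary with that environment and merge the results it returns.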

Multus CNI is the reference implementation for the Network Plumbing Working Group, which defines the specification for the NetworkAttachmentDefinition, a Kubernetes custom resource used to express which networks pods should be attached to.
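As a rough illustration of what such a resource looks like, the sketch below builds a NetworkAttachmentDefinition manifest as JSON, which kubectl and oc accept alongside YAML. The resource name, the macvlan settings, the parent interface, and the subnet are all assumptions chosen for the example, not values from our tests.

```python
import json


def network_attachment_definition(name, namespace, cni_config):
    """Return a NetworkAttachmentDefinition manifest as a plain dict."""
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name, "namespace": namespace},
        # spec.config holds the CNI configuration as a JSON-encoded string.
        "spec": {"config": json.dumps(cni_config)},
    }


# Hypothetical macvlan network on a secondary host interface.
cni_config = {
    "cniVersion": "0.3.1",
    "type": "macvlan",
    "master": "ens4",   # assumed parent interface on the node
    "mode": "bridge",
    "ipam": {"type": "host-local", "subnet": "192.168.10.0/24"},
}

manifest = network_attachment_definition(
    "telco-signaling", "openshift-multus", cni_config)
print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file and applying it with your usual client would create the definition, assuming the Multus custom resource definition is already installed in the cluster.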

Advanced Kubernetes networking

To leverage multiple networks with containers (sitting in the same pod), you must plan, design, and then implement your networking fabric. Later, you refer to those network parameters inside the Kubernetes network definitions.

You can create and own your network attachment definitions within your tenant namespace, or you can refer to a common network attachment definition defined under the default or the openshift-multus namespace owned by the cluster administrator.
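A pod asks for extra networks through the k8s.v1.cni.cncf.io/networks annotation, which can name a definition in another namespace as namespace/name. The sketch below shows one way to build that annotation. The pod name, image, and attachment names are hypothetical, and whether a cross-namespace reference is allowed depends on how Multus is configured in your cluster.

```python
import json


def networks_annotation(attachments):
    """Build the pod annotation from (namespace, name) attachment pairs."""
    value = ",".join(f"{namespace}/{name}" for namespace, name in attachments)
    return {"k8s.v1.cni.cncf.io/networks": value}


# Hypothetical pod that references two shared definitions owned by the
# cluster administrator.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {
        "name": "telco-app",
        "annotations": networks_annotation([
            ("openshift-multus", "telco-signaling"),
            ("openshift-multus", "telco-media"),
        ]),
    },
    "spec": {
        "containers": [
            {"name": "app", "image": "registry.example.com/telco-app:latest"},
        ],
    },
}

print(json.dumps(pod, indent=2))
```

Each listed attachment becomes an additional interface in the pod, while eth0 stays on the default network.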

In our tests, we used network attachment definitions from the openshift-multus namespace.

Key findings

Here's what we've discovered so far:

- Any traffic from any interface within a pod's network namespace is subject to packet processing through the same iptables rules within that particular pod network namespace.

- Any incoming TCP traffic from any interface goes to the service mesh sidecar container first, which then passes it to the application container. Return traffic follows the same path in reverse.

- Any incoming non-TCP traffic (SCTP, for example) from any interface goes directly to the application container.
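These findings can be summarized as a small decision model. The sketch below is a toy restatement of what we observed, not Istio's implementation: the function name and hop labels are made up, and the interface argument exists only to show that the outcome does not depend on which interface the packet arrived on.

```python
def steer_inbound(interface, protocol):
    """Return the ordered hops an inbound packet takes inside the pod.

    Every packet is evaluated by the same iptables rules in the pod's
    network namespace, whichever interface it arrived on. Only TCP is
    redirected through the sidecar.
    """
    if protocol.upper() == "TCP":
        return ["iptables", "sidecar", "application"]
    return ["iptables", "application"]


# Hypothetical interfaces: eth0 is the default network, net1 a Multus attachment.
for interface, protocol in [("eth0", "TCP"), ("net1", "TCP"), ("net1", "SCTP")]:
    print(interface, protocol, " -> ".join(steer_inbound(interface, protocol)))
```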

Advantages

In a service mesh with Multus, the service mesh sidecar container intercepts all inbound and outbound TCP traffic for all pod interfaces. This gives you observability into workload traffic and the ability to manage (steer) it.

The main application container does not need to do anything extra to benefit from a service mesh, even with multiple interfaces delivered with Multus CNI.

Disadvantages

A service mesh sidecar container receives all TCP traffic, regardless of whether it can do anything useful with that traffic. For instance, Diameter over TCP passes through the sidecar container without any benefit, because Istio does not support Diameter.

Sidecar container injection adds latency to a packet's round-trip time (RTT). This might be insignificant for telco control-plane traffic, but it can be noticeable for user-plane traffic and degrade the user experience, especially in real-time communication services.

Wrap up

If you're looking for greater flexibility for pod networking in Kubernetes, consider Multus CNI. As with many solutions, there are advantages and potential drawbacks, so test it on your systems and see whether it provides something useful. We've found it to be an effective way to broaden the scope and level of control over the way pods and containers communicate within a cluster.

This article is adapted from a Medium post and published with the authors' permission.

Doug Smith

Doug Smith is a principal software engineer and technical lead for the OpenShift Network Plumbing Team. Doug's focus is integrating telco and performance networking technologies with container-based systems such as OpenShift.

Fatih Nar

Fatih (aka The Cloudified Turk) has been involved over several years in Linux, OpenStack, and Kubernetes communities, influencing development and ecosystem cultivation, including for workloads specific to telecom, media, and ...

Vikas Grover

Vikas Grover is a senior leader in the Telco vertical at Red Hat. In his role, he focuses on assisting customers with 5G deployments, intelligent self-configuring systems, service mesh, and O-RAN using Red Hat technologies.
