Introduction

When designing a Containerfile/Dockerfile, there is an option to specify the User ID (UID) that will be used to execute the application inside the Container. When the Container is running, there is an internal UID (the one perceived from within the Container) and there is the host-level UID running the process that represents the Container.

When a Pod is deployed to a project, by default, a unique UID is allocated and used to execute the Pod. In addition to this behavior, the Kubernetes Pod definition provides the ability to specify the UID under which the Pod should run.

This document describes the behavior and significance of User IDs (UIDs) from the Namespace perspective, from the Pod perspective, and from the perspective of the workload executing inside a Container.

User ID (UID) and Namespaces

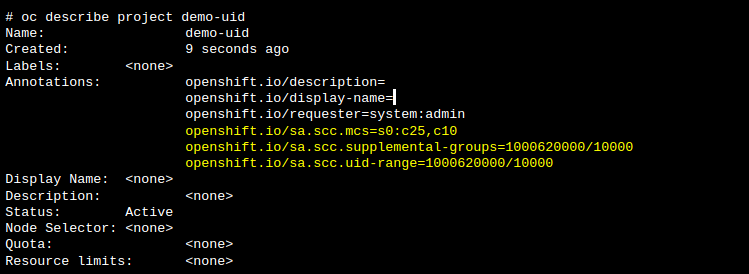

During the creation of a project or namespace, OpenShift assigns a User ID (UID) range, a supplemental group ID (GID) range, and unique SELinux MCS labels to the project or namespace. No range is explicitly defined for fsGroup by default; instead, fsGroup defaults to the minimum value of the “openshift.io/sa.scc.supplemental-groups” annotation.

The supplemental group IDs are used to control access to shared storage such as NFS and GlusterFS, while fsGroup is used to control access to block storage such as Ceph RBD, iSCSI, and some cloud storage.

The range of UIDs, GIDs, and SELinux MCS labels is unique to the project, and it does not overlap with the UIDs or GIDs assigned to other projects.

When a Pod is deployed into the namespace, OpenShift by default uses the first UID and the first GID from these ranges to run the Pod. Any attempt by a Pod definition to specify a UID outside the assigned range fails unless the Pod is granted special privileges.

The supplemental group IDs are regular Linux group IDs (GIDs). A GID is combined with the UID to form the UID + GID pair used to run a Linux process, in this case the Container.

Unless overridden by the Pod definition, each Pod in the same namespace also uses the same SELinux MCS values. This enables SELinux to enforce multitenancy such that, by default, Pods from one namespace cannot access files created by Pods in another namespace or by host processes unrelated to the running Pod. Because Pods in the same namespace share the same SELinux MCS labels, they can share artifacts if they choose to do so.

The UID and GID ranges follow the format <first_id>/<id_pool_size> or <first_id>-<last_id>, and the assigned ranges can be seen with the “describe” command, which lists them as annotations on the namespace.

In the namespace used throughout this document, the supplemental-groups range starts at 1000620000, so fsGroup is equal to “1000620000”.
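To make the two range formats and the fsGroup default concrete, the following Python sketch parses annotation values of that form. The annotation names openshift.io/sa.scc.uid-range and openshift.io/sa.scc.supplemental-groups are the ones OpenShift sets on a namespace. The sample values (including the pool size of 10000) and the helper names are hypothetical, and the support for several comma-separated blocks is an assumption.

```python
# Sketch: interpret OpenShift namespace ID-range annotation values.
# Assumption: the sample values are illustrative; the pool size of 10000
# is not taken from this document's cluster.
from typing import Tuple


def parse_id_range(value: str) -> Tuple[int, int]:
    """Return (first_id, last_id) for '<first>/<size>' or '<first>-<last>'."""
    value = value.strip()
    if "/" in value:
        first, size = (int(part) for part in value.split("/", 1))
        return first, first + size - 1
    if "-" in value:
        first, last = (int(part) for part in value.split("-", 1))
        return first, last
    raise ValueError(f"unrecognized range format: {value!r}")


def default_fs_group(supplemental_groups: str) -> int:
    """fsGroup defaults to the lowest ID in the supplemental-groups annotation.

    Assumption: the annotation may hold several comma-separated blocks.
    """
    blocks = [parse_id_range(block) for block in supplemental_groups.split(",")]
    return min(first for first, _ in blocks)


def uid_in_range(uid: int, uid_range: str) -> bool:
    """True when `uid` falls inside the namespace UID range."""
    first, last = parse_id_range(uid_range)
    return first <= uid <= last


if __name__ == "__main__":
    annotations = {  # hypothetical values for a namespace like the one above
        "openshift.io/sa.scc.uid-range": "1000620000/10000",
        "openshift.io/sa.scc.supplemental-groups": "1000620000/10000",
    }
    uid_range = annotations["openshift.io/sa.scc.uid-range"]
    print(parse_id_range(uid_range))  # (1000620000, 1000629999)
    print(default_fs_group(annotations["openshift.io/sa.scc.supplemental-groups"]))
    print(uid_in_range(1000620001, uid_range), uid_in_range(0, uid_range))
```

Checking a requested UID against the range mirrors why a Pod asking for UID 0 is rejected under the default settings.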

UIDs and GIDs in Action (Namespace perspective)

To demonstrate the behavior, we can deploy two unprivileged applications and validate their UID, GID, and SELinux labels.

Application 1: unprivileged Python application.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp
  labels:
    app: myapp
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: podcool
        image: quay.io/wcaban/podcool
        ports:
        - containerPort: 8080
```

Application 2: unprivileged network tools container.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: toolbox
  labels:
    app: toolbox
spec:
  replicas: 3
  selector:
    matchLabels:
      app: toolbox
  template:
    metadata:
      labels:
        app: toolbox
    spec:
      containers:
      - name: net-toolbox
        image: quay.io/wcaban/net-toolbox
        ports:
        - containerPort: 2000
```

As can be seen, these Deployment definitions do not specify a runAsUser, fsGroup or SELinux labels to use.

Validate that the Pods are in the Running state:

```
# oc get pods
NAME                       READY   STATUS    RESTARTS   AGE
myapp-5bbb7468d5-pcvnl     1/1     Running   0          56m
myapp-5bbb7468d5-wl49t     1/1     Running   0          56m
myapp-5bbb7468d5-zsqbx     1/1     Running   0          56m
toolbox-5d7955557f-5vlx9   1/1     Running   0          4m39s
toolbox-5d7955557f-gw7rq   1/1     Running   0          4m39s
toolbox-5d7955557f-lxn7t   1/1     Running   0          4m40s
```

Check the runAsUser, fsGroup and SELinux Labels reported by the running Pods:

```
# oc get pod -o jsonpath='{range .items[*]}{@.metadata.name}{" runAsUser: "}{@.spec.containers[*].securityContext.runAsUser}{" fsGroup: "}{@.spec.securityContext.fsGroup}{" seLinuxOptions: "}{@.spec.securityContext.seLinuxOptions.level}{"\n"}{end}'
myapp-5bbb7468d5-pcvnl runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
myapp-5bbb7468d5-wl49t runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
myapp-5bbb7468d5-zsqbx runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
toolbox-5d7955557f-5vlx9 runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
toolbox-5d7955557f-gw7rq runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
toolbox-5d7955557f-lxn7t runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
```

As the previous output shows, all the Pods in the same namespace run with the same UID, fsGroup, and SELinux labels. Notice that these are unprivileged Pods running with an unprivileged UID and GID.
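The same comparison can be scripted. This Python sketch parses text in the layout printed by the jsonpath command and groups Pods by their (runAsUser, fsGroup, SELinux level) tuple; a single group means every Pod shares the same settings. It assumes one container per Pod and exactly this output layout, and the function name is hypothetical.

```python
# Sketch: group Pods by the security settings printed by the jsonpath query.
# Assumption: one container per Pod, one Pod per line, layout as shown above.
import re
from typing import Dict, Set, Tuple

LINE = re.compile(
    r"^(?P<pod>\S+) runAsUser: (?P<uid>\S*) fsGroup: (?P<fsgroup>\S*)"
    r" seLinuxOptions: (?P<level>\S*)$"
)


def security_profiles(output: str) -> Dict[Tuple[str, str, str], Set[str]]:
    """Map each (runAsUser, fsGroup, SELinux level) tuple to its Pod names."""
    groups: Dict[Tuple[str, str, str], Set[str]] = {}
    for line in output.splitlines():
        match = LINE.match(line.strip())
        if not match:
            continue  # skip blank or unexpected lines
        key = (match["uid"], match["fsgroup"], match["level"])
        groups.setdefault(key, set()).add(match["pod"])
    return groups


if __name__ == "__main__":
    sample = """\
myapp-5bbb7468d5-pcvnl runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
toolbox-5d7955557f-5vlx9 runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
"""
    for profile, pods in security_profiles(sample).items():
        print(profile, sorted(pods))
```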

Application 3: unprivileged application with UID & supplementalGroups in range.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: toolbox2
  labels:
    app: toolbox2
spec:
  replicas: 3
  selector:
    matchLabels:
      app: toolbox2
  template:
    metadata:
      labels:
        app: toolbox2
    spec:
      securityContext:
        supplementalGroups: [1000620001]
        seLinuxOptions:
          level: s0:c25,c10
      containers:
      - name: net-toolbox
        image: quay.io/wcaban/net-toolbox
        ports:
        - containerPort: 2000
        securityContext:
          runAsUser: 1000620001
```

Checking the Pods again after deploying Application 3:

```
# oc get pod -o jsonpath='{range .items[*]}{@.metadata.name}{" runAsUser: "}{@.spec.containers[*].securityContext.runAsUser}{" fsGroup: "}{@.spec.securityContext.fsGroup}{" seLinuxOptions: "}{@.spec.securityContext.seLinuxOptions.level}{"\n"}{end}'
myapp-5bbb7468d5-pcvnl runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
myapp-5bbb7468d5-wl49t runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
myapp-5bbb7468d5-zsqbx runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
toolbox-5d7955557f-5vlx9 runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
toolbox-5d7955557f-gw7rq runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
toolbox-5d7955557f-lxn7t runAsUser: 1000620000 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
toolbox2-767f8456d9-72k95 runAsUser: 1000620001 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
toolbox2-767f8456d9-8b4h5 runAsUser: 1000620001 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
toolbox2-767f8456d9-rr554 runAsUser: 1000620001 fsGroup: 1000620000 seLinuxOptions: s0:c25,c10
```

When using a UID and supplemental GIDs within the namespace range, no special privileges are needed.

Using arbitrary UIDs and GIDs

In OpenShift, Security Context Constraints (SCCs) are used to manage and control the permissions and capabilities granted to a Pod. There are eight (8) pre-defined SCCs in an OpenShift 4.4 cluster and, by default, each namespace is created with three (3) ServiceAccounts.

```
# oc get scc
NAME               AGE
anyuid             32d
hostaccess         32d
hostmount-anyuid   32d
hostnetwork        32d
node-exporter      32d
nonroot            32d
privileged         32d
restricted         32d

# oc get sa
NAME       SECRETS   AGE
builder    2         10h
default    2         10h
deployer   2         10h
```

When a ServiceAccount is not specified in the Pod definition, OpenShift uses the “default” ServiceAccount to deploy the Pod. The “default” ServiceAccount uses the “restricted” SCC.

Running a Pod with a UID outside the range of the Namespace requires granting the “anyuid” SCC to the ServiceAccount used to deploy the Pod.

```
# oc get scc anyuid -o yaml
...<snip>...
runAsUser:
  type: RunAsAny
seLinuxContext:
  type: MustRunAs
supplementalGroups:
  type: RunAsAny
...<snip>...
```

Upon closer inspection of the “anyuid” SCC, it is clear that a Pod launched by a ServiceAccount with access to the “anyuid” SCC can use any user and any group. The “RunAsAny” strategy effectively skips the default OpenShift restrictions, allowing the Pod to choose any ID.

The same SCC definition shows that the Pod is still restricted to the SELinux labels assigned to the Namespace (the seLinuxContext strategy is “MustRunAs”).

This means that even when the Pod runs with the UID and GID of root, the SELinux MCS label restrictions remain in place.
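The practical difference between these SCCs comes down to their runAsUser strategy. The following Python sketch is a simplified, hypothetical model of how each strategy treats a requested UID; it is not OpenShift's admission code and ignores SCC prioritization. Only the strategy names come from the SCC definitions: MustRunAsRange (“restricted”), MustRunAsNonRoot (“nonroot”), and RunAsAny (“anyuid”).

```python
# Simplified model of SCC runAsUser strategies (illustration only).
# The function and its parameters are hypothetical.
from typing import Optional


def admit_run_as_user(strategy: str, requested_uid: Optional[int],
                      ns_first_uid: int, ns_last_uid: int) -> Optional[int]:
    """Return the UID the Pod runs as (None = the image's USER), or raise."""
    if strategy == "MustRunAsRange":  # e.g. the "restricted" SCC
        if requested_uid is None:
            return ns_first_uid  # default: first UID of the namespace range
        if ns_first_uid <= requested_uid <= ns_last_uid:
            return requested_uid
        raise PermissionError(f"UID {requested_uid} is outside the namespace range")
    if strategy == "MustRunAsNonRoot":  # e.g. the "nonroot" SCC
        if requested_uid == 0:
            raise PermissionError("UID 0 is not allowed")
        return requested_uid  # None: the image USER must be a non-root UID
    if strategy == "RunAsAny":  # e.g. the "anyuid" SCC
        return requested_uid  # any UID, including 0
    raise ValueError(f"unknown strategy: {strategy}")


if __name__ == "__main__":
    first, last = 1000620000, 1000629999
    print(admit_run_as_user("MustRunAsRange", None, first, last))
    print(admit_run_as_user("RunAsAny", 0, first, last))
    try:
        admit_run_as_user("MustRunAsRange", 0, first, last)
    except PermissionError as err:
        print("rejected:", err)
```

Under this model, the demo Deployment that requests runAsUser 0 only succeeds because its ServiceAccount can use the RunAsAny strategy.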

```
# Creating ServiceAccount with “anyuid” SCC
$ oc create sa sa-with-anyuid
$ oc adm policy add-scc-to-user anyuid -z sa-with-anyuid
securitycontextconstraints.security.openshift.io/anyuid added to: ["system:serviceaccount:demo-uid:sa-with-anyuid"]
```

```yaml
# Creating Deployment using the ServiceAccount
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-anyuid
  labels:
    app: demo-anyuid
spec:
  replicas: 1
  selector:
    matchLabels:
      app: demo-anyuid
  template:
    metadata:
      labels:
        app: demo-anyuid
    spec:
      securityContext:
        fsGroup: 0
        supplementalGroups: [1000620001]
        seLinuxOptions:
          level: s0:c25,c10
      serviceAccount: sa-with-anyuid
      serviceAccountName: sa-with-anyuid
      containers:
      - name: net-toolbox
        image: quay.io/wcaban/net-toolbox
        ports:
        - containerPort: 2000
        securityContext:
          runAsUser: 0
```

User ID (UID) and Containers

The UID/GID pair and the SELinux labels assigned to the Container are the ones used as the effective identity to execute the Entrypoint command defined by the Container. Notice that a “User” definition in the Containerfile/Dockerfile does not modify this behavior when the Container runs in a Pod under OpenShift's default “restricted” SCC.

The following output explores some of these concepts on a Container that has not specified a “User” and that uses the default UID generation mechanism.

Step 1: Create a file in one of the Containers in a Pod.

```
[root@bastion ~]# oc exec -ti toolbox-5d7955557f-gw7rq -- touch /tmp/foo.txt
```

Step 2: Find the Container ID and the Node running the Pod.

```
# The leading characters of the Container ID (after "cri-o://") are
# enough to identify the running Container; the steps below use 13.
[root@bastion ~]# oc get pod toolbox-5d7955557f-gw7rq -o jsonpath='{"Node: "}{.spec.nodeName}{"\nContainerID: "}{.status.containerStatuses[*].containerID}{"\n"}'
Node: worker-2
ContainerID: cri-o://9845e16781c85f79d74b18658ee73c9b1d36ae6a5ba11a9521a451b24cf66afb
```
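The containerID reported in the Pod status carries a runtime prefix (cri-o:// here). The Python sketch below strips that prefix and returns the short ID used with crictl in the following steps. The default length of 13 simply mirrors the ID used in this example, and the helper name is hypothetical.

```python
# Sketch: derive the short Container ID passed to crictl from the Pod status.
# Assumption: a 13-character prefix, matching the ID used in this walkthrough.
HEX_DIGITS = set("0123456789abcdef")


def short_container_id(container_id: str, length: int = 13) -> str:
    """Strip the '<runtime>://' scheme and return the first `length` hex chars."""
    scheme, sep, raw = container_id.strip().partition("://")
    if not sep:  # no "<runtime>://" prefix: use the value as-is
        raw = scheme
    raw = raw.strip()
    if len(raw) < length or any(c not in HEX_DIGITS for c in raw):
        raise ValueError(f"unexpected container ID: {container_id!r}")
    return raw[:length]


if __name__ == "__main__":
    full = "cri-o://9845e16781c85f79d74b18658ee73c9b1d36ae6a5ba11a9521a451b24cf66afb"
    print(short_container_id(full))  # 9845e16781c85
```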

Step 3: Log in to the node.

```
# Option 1: using ssh, then becoming root
[root@bastion ~]# ssh core@worker-2
[core@worker-2 ~]$ sudo -i

# Option 2: using "oc debug" with an account that has cluster-admin privileges
[root@bastion ~]# oc debug node/worker-2
Starting pod/worker-2-debug ...
To use host binaries, run `chroot /host`
Pod IP: 198.18.100.17
If you don't see a command prompt, try pressing enter.
sh-4.2#
sh-4.2# chroot /host
sh-4.4#
```

Step 4: Identify the PID of the Container.

```
# Host PID of the Container's main process; it is used below
# to reach the Container's namespaces
[root@worker-2]# crictl inspect 9845e16781c85 -o json | jq '.pid'
216375
```

Step 5: Identify the UID executing this PID.

```
[root@worker-2]# head /proc/216375/status
Name: sleep
Umask: 0022
State: S (sleeping)
Tgid: 216375
Ngid: 0
Pid: 216375
PPid: 216362
TracerPid: 0
Uid: 1000620000 1000620000 1000620000 1000620000
Gid: 0 0 0 0
```
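The four columns on the Uid and Gid lines are the real, effective, saved set, and filesystem IDs. The following Python sketch extracts them (plus the supplementary Groups line) from /proc/<pid>/status text. It reads the current process by default, so it would need to run as root on the node to inspect another user's process; the helper names are hypothetical.

```python
# Sketch: extract the ID columns from /proc/<pid>/status (Linux only).
import os
from typing import Any, Dict

ID_COLUMNS = ("real", "effective", "saved", "filesystem")


def parse_status_ids(status_text: str) -> Dict[str, Any]:
    """Return {'Uid': {...}, 'Gid': {...}, 'Groups': [...]} from status text."""
    result: Dict[str, Any] = {}
    for line in status_text.splitlines():
        key, _, value = line.partition(":")
        if key in ("Uid", "Gid"):
            result[key] = dict(zip(ID_COLUMNS, (int(v) for v in value.split())))
        elif key == "Groups":
            result[key] = [int(v) for v in value.split()]  # supplementary GIDs
    return result


def read_process_ids(pid: str = "self") -> Dict[str, Any]:
    """Read and parse /proc/<pid>/status for the given PID."""
    with open(f"/proc/{pid}/status", encoding="utf-8") as fh:
        return parse_status_ids(fh.read())


if __name__ == "__main__":
    if os.path.exists("/proc/self/status"):
        print(read_process_ids())
```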

Step 6: Use “nsenter” to enter the Container's mount namespace and list the file that was created.

```
# Observe the owner UID and the SELinux labels. The owner should match the
# UID executing the Container; because this Containerfile does not define a
# User, it is the Namespace UID.
[root@worker-2]# nsenter -t 216375 -m ls -lZ /tmp
total 4
-rw-r--r--. 1 1000620000 root system_u:object_r:container_file_t:s0:c10,c25 0 Jun 27 23:11 foo.txt
```

Step 7: Identify the Pod UID.

```
[root@worker-2 ~]# crictl inspect 9845e16781c85 -o json | jq '.status.labels."io.kubernetes.pod.uid"'
"8dbcb82c-fd6c-4378-80db-a36e60364f7c"
```

Step 8: List the Pod path and observe the SELinux labels in use.

```
[root@worker-2 ~]# ls -lZ /var/lib/kubelet/pods/8dbcb82c-fd6c-4378-80db-a36e60364f7c
total 4
drwxr-x---. 3 root root system_u:object_r:container_var_lib_t:s0 25 Jun 27 05:42 containers
-rw-r--r--. 1 root root system_u:object_r:container_file_t:s0:c10,c25 220 Jun 27 05:42 etc-hosts
drwxr-x---. 3 root root system_u:object_r:var_lib_t:s0 37 Jun 27 05:42 plugins
drwxr-x---. 3 root root system_u:object_r:var_lib_t:s0 34 Jun 27 05:42 volumes

[root@worker-2 ~]# ls -lZ /var/lib/kubelet/pods/8dbcb82c-fd6c-4378-80db-a36e60364f7c/volumes/kubernetes.io~secret/
total 0
drwxrwsrwt. 3 root 1000620000 system_u:object_r:container_file_t:s0:c10,c25 160 Jun 27 05:42 default-token-8zjpn
```
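The file labels read s0:c10,c25 while the Pods report s0:c25,c10. SELinux treats MCS categories as a set, so both strings describe the same level; the order only reflects how each tool prints it. The Python sketch below, with hypothetical helper names, normalizes a level (including cN.cM category ranges) to make that comparison explicit. It assumes a single level, not a low-high range.

```python
# Sketch: compare SELinux MCS levels as (sensitivity, category set).
# Assumption: single levels such as "s0:c25,c10", not "s0-s0:c0.c1023" ranges.
from typing import FrozenSet, Set, Tuple


def parse_level(level: str) -> Tuple[str, FrozenSet[int]]:
    """Split 'sN:cA,cB,cX.cY' into the sensitivity and a set of category numbers."""
    sensitivity, _, cats = level.partition(":")
    categories: Set[int] = set()
    for item in filter(None, cats.split(",")):
        if "." in item:  # category range such as c0.c3
            low, high = item.split(".")
            categories.update(range(int(low[1:]), int(high[1:]) + 1))
        else:
            categories.add(int(item[1:]))
    return sensitivity, frozenset(categories)


def level_from_context(context: str) -> str:
    """Return the level part of 'user:role:type:level' (the level may contain ':')."""
    parts = context.split(":", 3)
    if len(parts) != 4:
        raise ValueError(f"unexpected SELinux context: {context!r}")
    return parts[3]


def same_mcs(level_a: str, level_b: str) -> bool:
    """True when both levels have the same sensitivity and categories."""
    return parse_level(level_a) == parse_level(level_b)


if __name__ == "__main__":
    file_ctx = "system_u:object_r:container_file_t:s0:c10,c25"
    print(same_mcs(level_from_context(file_ctx), "s0:c25,c10"))  # True
```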

UIDs and GIDs in Action (Container perspective)

Sample network toolbox Containerfile/Dockerfile without explicit User definition:

```dockerfile
# quay.io/wcaban/net-toolbox:latest
FROM registry.access.redhat.com/ubi8/ubi:latest

LABEL io.k8s.display-name="Network Tools" \
      io.k8s.description="Networking toolset for connectivity and performance tests" \
      io.openshift.tags="nettools"

# NOTE: Using the UBI image with some of these packages requires
# building on a RHEL node with valid subscriptions
RUN dnf install --nodocs -y \
        iproute procps-ng bind-utils iputils \
        net-tools traceroute \
        mtr iperf3 tcpdump \
        lksctp-tools \
        less tree jq && \
    dnf clean all && \
    rm -rf /var/cache/dnf

ENTRYPOINT /bin/bash -c "sleep infinity"
```

When running a Pod with this Container, the user is a regular unprivileged UID.

```
# oc exec -ti toolbox-5d7955557f-5vlx9 -- /bin/bash
bash-4.4$ whoami
1000620000
bash-4.4$ id
uid=1000620000(1000620000) gid=0(root) groups=0(root),1000620000
bash-4.4$
```

Notice the Container is using the UID from the Namespace. An important detail to notice is that the user in the Container always has GID=0, which is the root group.

NOTE: In OpenShift 4.x the UID is automatically appended to the /etc/passwd file of the Container.
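Legacy tools that look up the current user (for example through getpwuid()) fail when the UID has no passwd entry, which is why the appended entry matters. The following Python sketch uses the standard pwd module to report the entry for a UID and falls back to the numeric UID when none exists; in the session above, the appended entry's name is the numeric UID itself. The helper name is hypothetical.

```python
# Sketch: look up the passwd entry for a UID, as legacy tools typically do.
import os
import pwd


def user_name_for(uid: int) -> str:
    """Return the passwd name for `uid`, or the numeric UID when there is none.

    Tools that call getpwuid() without such a fallback fail in the missing case.
    """
    try:
        return pwd.getpwuid(uid).pw_name
    except KeyError:  # no /etc/passwd entry for this UID
        return str(uid)


if __name__ == "__main__":
    uid = os.getuid()
    print(f"uid={uid} name={user_name_for(uid)}")
```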

Traditional Applications and UIDs

The Container user is always a member of the root group, so it can read or write files accessible by GID=0. Any command invoked by the Entrypoint is executed with this unprivileged UID and GID pair. In other words, an unprivileged user executes the commands, and the UID used during execution is not known in advance. From a design perspective, this means that directories and files that processes in the Container may write to should be owned by the root group and be readable/writable by GID=0. Files to be executed should also have group execute permissions.
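One way to audit an image against this guidance is to check each path's group owner and group permission bits. The Python sketch below is a hypothetical helper for that: it only inspects stat() results and does not change ownership. Beyond the read/write rule above, it assumes directories also need group execute (search) permission, which is how Unix directory permissions work.

```python
# Sketch: check paths against the "root group, group read/write" guidance.
import os
import stat
import sys


def supports_arbitrary_uid(st_mode: int, st_gid: int, executable: bool = False) -> bool:
    """True when GID 0 owns the path and the group has the bits it needs.

    Directories (and files meant to be executed) also need group execute.
    """
    if st_gid != 0:
        return False
    needed = stat.S_IRGRP | stat.S_IWGRP
    if stat.S_ISDIR(st_mode) or executable:
        needed |= stat.S_IXGRP
    return (st_mode & needed) == needed


def check_path(path: str, executable: bool = False) -> bool:
    """Apply the check to an existing path."""
    st = os.stat(path)
    return supports_arbitrary_uid(st.st_mode, st.st_gid, executable)


if __name__ == "__main__":
    for p in sys.argv[1:]:  # e.g. python check.py /opt/app /opt/app/data
        print(p, check_path(p))
```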

This should be enough for most modern applications. Unfortunately, some traditional applications make assumptions that are not compatible with this approach. Some applications were designed to expect a specific UID under which they will be running. Others require the directories and files to be owned by the UID executing them.

- By default, OpenShift 4.x appends the effective UID to the /etc/passwd file of the Container during the creation of the Pod.
  - Note: This was a manual step when deploying applications to OCP 3.x, which required the UID to exist in the passwd file of the Container.
- For cases where a specific UID needs to be defined in /etc/passwd, there are two possible approaches:
  - The corresponding User is set in the Containerfile/Dockerfile definition and added to the /etc/passwd file at Container build time.
  - When the application also needs to be executed under a specific custom UID, the Pod definition needs to use a ServiceAccount with the RunAsAny privilege, and the Pod needs to use runAsUser to set the UID to the hardcoded value. NOTE: Using a hardcoded UID is NOT recommended. Among other issues, this approach is prone to UID collisions with system UIDs, or with UIDs of the same or a different application running in a different Namespace that expects to use the same UID.
    - For the Pod to be able to specify the UID to use during execution, in OpenShift 4.4 the ServiceAccount must have at least the “nonroot” or “anyuid” SCC.
- For cases where the files and directories need to be owned by the UID executing the application:
  - One approach is to make the files and directories writable by GID=0 and then use a script or wrapper as the Entrypoint to dynamically modify the ownership of the files and directories to the Namespace UID allocated to the Pod.
- Other legacy applications were designed to listen on ports < 1024 inside the Container. When the UID is not root, the application cannot bind to ports < 1024. There are two approaches for cases where the application cannot be modified to listen on a different port:
  - The Containers need to run with the UID set to 0 for those applications to work. SELinux will maintain the isolation between the Container executed with UID 0 and the rest of the system.

    NOTE: If SELinux is disabled on the Node, this approach should be avoided, as the Containers will be running as root without the same protection for the system.

Future Enhancements

The Unix system was designed with the assumption that only a system administrator could allocate ports < 1024. Because of this, many Unix services and organizational security policies still treat this notion as one of the building blocks of their security mechanisms.

Kernel security mechanisms like SELinux have evolved to provide security that is far more granular than ports or file permissions. Requiring that only a privileged user can use these ports might no longer be the right assumption or requirement.

In a different context, container orchestrators like Kubernetes give each Pod its own IP address, and as a good practice, Pods should run as unprivileged users. Containers are still Linux processes, and the traditional Unix assumption that using a port < 1024 requires privileged access is not practical for a Pod. A Pod listening on a “privileged” port should not have any more privileges than a Pod listening on an “unprivileged” port.

With modern Linux kernels, it is possible to expand what is considered the “unprivileged port range” to include any port. An example of how to achieve this on Linux systems using sysctl is as follows:

```
sysctl -w net.ipv4.ip_unprivileged_port_start=0
```

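After applying this setting, a process can bind to any port without the NET_BIND_SERVICE capability, which is only required for ports below ip_unprivileged_port_start. Unfortunately, this does not work for Pods in Kubernetes: the setting applies per network namespace, so changing it on the node does not change the value inside each Pod's own network namespace.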
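The rule the kernel applies when a process binds a port can be modeled in a few lines. This Python sketch is a simplified model, not kernel code: port 0 (an ephemeral port) and ports at or above ip_unprivileged_port_start (1024 by default) need no capability, while lower ports need CAP_NET_BIND_SERVICE. The helper names are hypothetical; inside a Pod, reading /proc/sys/net/ipv4/ip_unprivileged_port_start shows the value for the Pod's own network namespace.

```python
# Simplified model of the bind() permission check for low ports (illustration only).
from pathlib import Path

DEFAULT_UNPRIVILEGED_PORT_START = 1024


def bind_allowed(port: int, unprivileged_port_start: int,
                 has_net_bind_service: bool) -> bool:
    """Ports below the threshold require CAP_NET_BIND_SERVICE; others do not."""
    if port == 0 or port >= unprivileged_port_start:
        return True  # ephemeral port or "unprivileged" port
    return has_net_bind_service


def current_unprivileged_port_start() -> int:
    """Read the value for the current network namespace (Linux only)."""
    path = Path("/proc/sys/net/ipv4/ip_unprivileged_port_start")
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return DEFAULT_UNPRIVILEGED_PORT_START  # assumption: fall back to the default


if __name__ == "__main__":
    start = current_unprivileged_port_start()
    print(f"ip_unprivileged_port_start={start}")
    print("port 80 without capability:", bind_allowed(80, start, False))
```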

Granting the NET_BIND_SERVICE capability to a Pod through the Security Context Constraint (SCC) or the Pod Security Policy (PSP), and extending the “unprivileged port range”, does not make this technique work in Kubernetes either. As can be seen in this issue, open in the upstream repository since 2017, Kubernetes would need to be updated for this to be supported.

Summary

Understanding the default behavior of the User ID (UID) and Group IDs (GID) in Kubernetes helps with the understanding and troubleshooting of workloads of traditional applications running on Pods.

It is still common to find workloads that were designed using traditional application patterns and assumptions, which developers now try to reuse “as is” in containerized environments. In these scenarios, developers of traditional applications expect these containers to behave like a VM appliance (e.g. static IPs, prescriptive ports, prescriptive UIDs and GIDs, prescriptive naming, etc.). When onboarding these containers as Pods to a Kubernetes environment, developers try to misuse Kubernetes constructs to overcome limitations in the original application design.

Using Pod specification attributes to force prescribed UIDs, either to match a UID the application recognizes or to allow the use of prescribed ports, is one of the Kubernetes constructs most prone to being misunderstood and used incorrectly by developers new to Kubernetes. This blog attempts to describe the default behavior of UIDs and GIDs in OpenShift in order to promote application patterns that are more friendly to Kubernetes.
