Provisioning bare metal Red Hat OpenShift clusters has become incrementally easier, especially with the introduction of the agent-based installer and the zero-touch provisioning (ZTP) approach.

Yet not all of the provisioning steps are automated. For example, building the BareMetalHost (BMH) inventory always requires manual intervention, and bootstrapping GitOps and applying the Day 2 configuration may also require separate manual actions.

Imagine a process by which servers are purchased and delivered to the data center. They may come already racked and stacked or they may get racked and stacked after they arrive. Then, we power them up and connect them to the network. Can we have an automated process that takes it from there and provisions bare metal OpenShift clusters or that uses the new servers to add nodes to an existing cluster?

This article explores an approach for automating the creation of brand new OpenShift clusters, or the addition of hosts to an existing cluster, using newly provisioned bare metal hosts.

Objectives

First, let’s describe the objectives that we are looking to achieve:

- When new servers are connected, they are automatically discovered, and necessary configurations are performed (such as DNS and DHCP). These servers are added as BMHs to a Red Hat Advanced Cluster Management for Kubernetes infrastructure environment. This will optionally include network manager configurations (via NMState operator manifests) for the BMHs.

- Bare metal OpenShift clusters can be created declaratively (i.e., a commit against a GitOps repository) using servers from the previously created Red Hat Advanced Cluster Management infrastructure environment.

- When an OpenShift cluster is created, it is automatically registered to Red Hat Advanced Cluster Management, and the GitOps configuration needed to kickstart the Day 2 configuration process is automatically applied.

- Finally, BMHs available within an infrastructure environment can be used to expand existing OpenShift clusters. Again, this can be achieved in a declarative fashion.

Prerequisites

To implement the process described within this document, the following prerequisites are needed:

- A working hub cluster with Red Hat Advanced Cluster Management deployed

- Ability to configure the DNS

High-level architecture

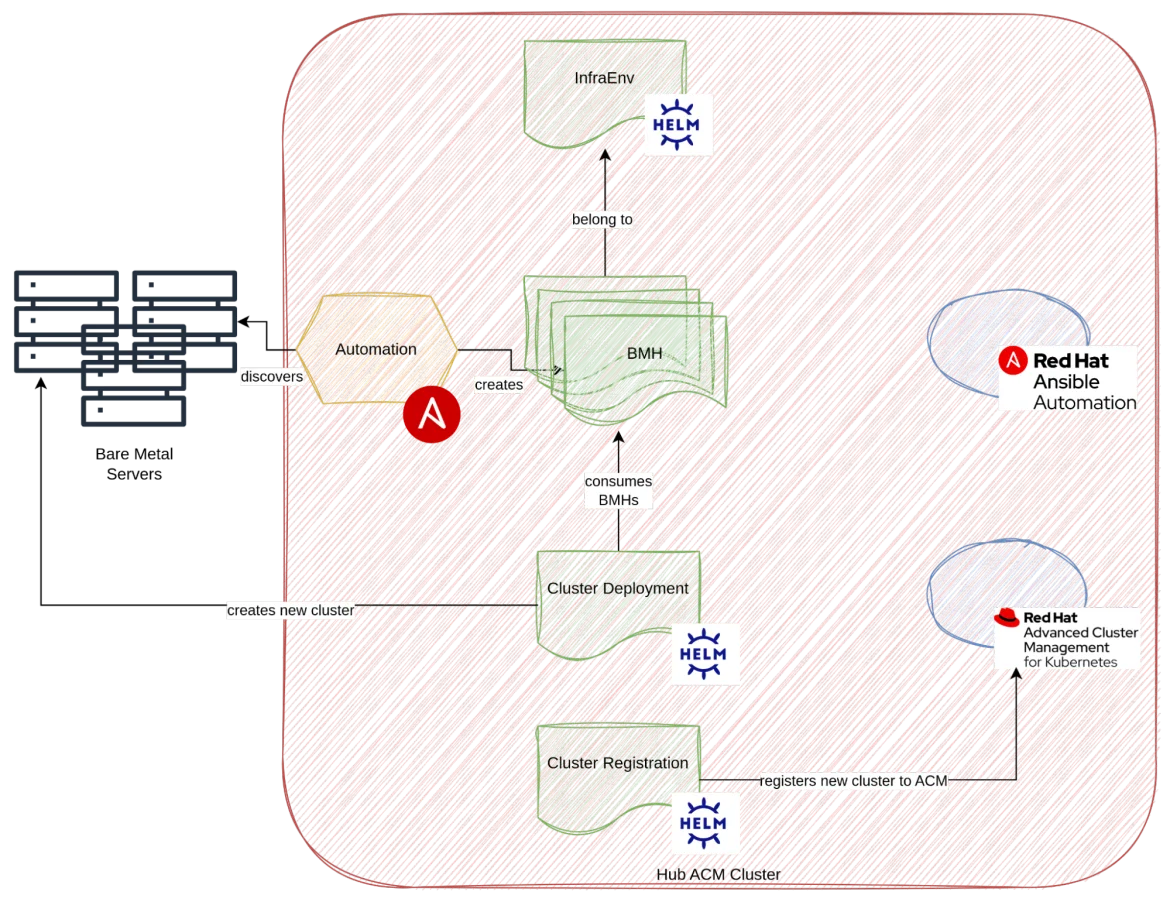

The diagram above depicts a single infrastructure environment defined within the Red Hat Advanced Cluster Management hub cluster.

An infrastructure environment represents a pool of bare metal hosts and provides a mechanism to serve bootable media to those hosts.

For our purposes, an infrastructure environment maps to a particular environment within a data center. This means we will have infrastructure environments with names such as DC1-Prod or DC2-Lab.

The diagram also depicts several bare metal servers. We assume that when servers are added to the network, automation is triggered which creates pull requests (PRs) containing the definitions of the bare metal hosts corresponding to the new servers.

In this case, the automation is written in Ansible and resides in Red Hat Ansible Automation Platform, which is deployed on the Red Hat Advanced Cluster Management cluster. However, it is not included in this article because the approach can vary depending on the hardware used for the bare metal servers.

Once the PR is merged, the BMHs become part of an infrastructure environment (based on a specific folder naming convention).
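The folder convention can be pictured with a small sketch. It assumes, based on the /infra-env/demo-infra path used later in this article, that the directory directly under infra-env/ names the infrastructure environment. The function name and the example file names are hypothetical and are not taken from the example repository.

```python
from pathlib import PurePosixPath
from typing import Optional


def infra_env_for(path: str, root: str = "infra-env") -> Optional[str]:
    """Return the infrastructure environment implied by a BMH file path.

    Assumed convention: <root>/<infra-env-name>/<bmh-file>.yaml
    Returns None when the path is not a file under <root>/<name>/.
    """
    parts = PurePosixPath(path.lstrip("/")).parts
    if len(parts) >= 3 and parts[0] == root:
        return parts[1]
    return None


# A BMH definition merged into this folder would belong to "demo-infra".
print(infra_env_for("/infra-env/demo-infra/server-01.yaml"))
# A file outside the convention is not associated with any environment.
print(infra_env_for("clusters/demo/values.yaml"))
```

Deriving the environment from the path keeps the pull request itself as the only input: whoever (or whatever automation) opens the PR decides the target environment simply by choosing the folder.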

At this point, another discovery process is started, the servers are booted, and additional information is collected and used to decorate the BMHs via annotations. After this discovery phase is successful, the BMHs are ready to be used.

In the bottom section of the diagram, we can see two more Helm charts: one for the creation of clusters and one for their registration to Red Hat Advanced Cluster Management. These charts can, of course, be deployed using GitOps in a declarative fashion.

For the cluster creation Helm chart, we need to specify the standard parameters (domain, VIPs, networking configuration, etc.) that are needed when creating OpenShift clusters plus the list of BMHs that are desired.

When this Helm chart is instantiated, Red Hat Advanced Cluster Management triggers a process similar to the agent-based installer installation process and brings up the cluster.

In the cluster registration Helm chart, we create the manifests needed for the actual registration to Red Hat Advanced Cluster Management (ManagedCluster and KlusterletConfig) and the CRs needed to kickstart GitOps-based Day 2 configurations on the newly created cluster. To that end, we need to complete three items:

- Deploy the GitOps operator.

- Deploy the infra Argo CD instance.

- Deploy the root application that implements the app of apps pattern.

This can be achieved in several different ways, but the implementation here makes use of a ManifestWork to instruct Red Hat Advanced Cluster Management to deploy those three configurations to the newly created cluster.
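As a rough illustration of the ManifestWork idea, the sketch below wraps three manifests (operator subscription, Argo CD instance, root application) in a single ManifestWork created in the managed cluster's namespace on the hub. It is not the chart from the example repository: the namespace infra-gitops, the repository URL, the path root, the cluster name demo-cluster, and the ArgoCD API version are all assumptions chosen for illustration.

```python
import json

# Hypothetical values, used only for illustration.
GITOPS_NAMESPACE = "infra-gitops"  # assumed to already exist on the new cluster
REPO_URL = "https://example.com/org/day2-config.git"

gitops_operator = {
    "apiVersion": "operators.coreos.com/v1alpha1",
    "kind": "Subscription",
    "metadata": {"name": "openshift-gitops-operator", "namespace": "openshift-operators"},
    "spec": {
        "name": "openshift-gitops-operator",
        "channel": "latest",
        "source": "redhat-operators",
        "sourceNamespace": "openshift-marketplace",
    },
}

infra_argocd = {
    # Assumption: recent operator versions serve v1beta1, older ones v1alpha1.
    "apiVersion": "argoproj.io/v1beta1",
    "kind": "ArgoCD",
    "metadata": {"name": "infra", "namespace": GITOPS_NAMESPACE},
    "spec": {},
}

root_application = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "Application",
    "metadata": {"name": "root", "namespace": GITOPS_NAMESPACE},
    "spec": {
        "project": "default",
        "source": {"repoURL": REPO_URL, "path": "root", "targetRevision": "main"},
        "destination": {"server": "https://kubernetes.default.svc", "namespace": GITOPS_NAMESPACE},
        "syncPolicy": {"automated": {}},
    },
}


def manifest_work(cluster_name: str, manifests: list) -> dict:
    """Wrap manifests in a ManifestWork placed in the cluster's namespace on the hub."""
    return {
        "apiVersion": "work.open-cluster-management.io/v1",
        "kind": "ManifestWork",
        "metadata": {"name": "gitops-bootstrap", "namespace": cluster_name},
        "spec": {"workload": {"manifests": manifests}},
    }


work = manifest_work("demo-cluster", [gitops_operator, infra_argocd, root_application])
print(json.dumps(work, indent=2))
```

Because the hub delivers the ManifestWork through the registration agent, nothing has to log in to the new cluster to bootstrap GitOps; registration and bootstrap stay in one declarative unit.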

Setting up this process — walkthrough

A GitHub repository is available with an example of how to accomplish this automation. Let’s walk through the content in detail.

Infrastructure environment preparation

First we need to prepare the infrastructure environment. An infrastructure environment captures the concept of a set of bare metal servers that will all be managed in a similar way. This is where we describe our spare capacity.

The first step is to deploy and configure the Red Hat Advanced Cluster Management operator. Next, we enable the management of infrastructure environments using this configuration.

Once Red Hat Advanced Cluster Management is fully configured, we can create an infrastructure environment. We will create a single one here (called demo-infra), but in a real scenario, there will be a variety of infrastructure environments representing different data centers and/or environments.

We used the infra-env chart to create the demo infrastructure environment. Notice how it creates an Argo CD application that references the /infra-env/demo-infra folder of the same repository.
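To make the relationship between the infrastructure environment and its folder concrete, here is a minimal sketch of the kind of Argo CD Application such a chart could render. It is an illustration under assumptions, not the chart's actual output: the repository URL, the openshift-gitops namespace, the application name suffix, and the choice of a destination namespace named after the infrastructure environment are all hypothetical.

```python
import json


def infra_env_application(infra_env: str, repo_url: str, revision: str = "main") -> dict:
    """Build an Argo CD Application that syncs the BMH folder of one infrastructure environment."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": f"{infra_env}-bmhs", "namespace": "openshift-gitops"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                # Folder convention from the article: /infra-env/<name>
                "path": f"infra-env/{infra_env}",
                "targetRevision": revision,
            },
            "destination": {
                "server": "https://kubernetes.default.svc",
                # Assumption: BMHs live in a namespace named after the environment.
                "namespace": infra_env,
            },
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


app = infra_env_application("demo-infra", "https://example.com/org/bare-metal-gitops.git")
print(json.dumps(app, indent=2))
```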

This folder will contain the BMH definitions. It is recommended that a different repository be created that contains the BMHs of a given infrastructure environment. In order to simplify the configuration within this demonstration, only a single repository will be used.

Populating the infrastructure environment with BMHs

At this point, we can populate the aforementioned /infra-env/demo-infra folder with BMHs.

This can be done manually or through automation as described previously. In our example we didn’t define that automation, and instead added three BMHs manually, which is enough to create a three-node cluster.
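For readers who have not written a BMH definition before, the sketch below builds three of them as plain dictionaries. The label and annotations are the ones the assisted-install tooling conventionally uses to tie a host to an infrastructure environment, but the host names, BMC addresses, MAC addresses, secret names, and the assumption that the namespace matches the infrastructure environment name are placeholders and not values from the example repository.

```python
import json


def bare_metal_host(hostname, infra_env, bmc_address, boot_mac, credentials_secret):
    """Build a BareMetalHost manifest bound to an infrastructure environment."""
    return {
        "apiVersion": "metal3.io/v1alpha1",
        "kind": "BareMetalHost",
        "metadata": {
            "name": hostname,
            "namespace": infra_env,  # assumption: namespace named after the environment
            "labels": {"infraenvs.agent-install.openshift.io": infra_env},
            "annotations": {
                "inspect.metal3.io": "disabled",
                "bmac.agent-install.openshift.io/hostname": hostname,
            },
        },
        "spec": {
            "online": True,
            "bootMACAddress": boot_mac,
            "automatedCleaningMode": "disabled",
            "bmc": {"address": bmc_address, "credentialsName": credentials_secret},
        },
    }


# Three placeholder hosts, enough for a three-node cluster.
hosts = [
    bare_metal_host(
        hostname=f"server-{i:02d}",
        infra_env="demo-infra",
        bmc_address=f"redfish-virtualmedia://192.0.2.{10 + i}/redfish/v1/Systems/1",
        boot_mac=f"52:54:00:00:00:{i:02x}",
        credentials_secret=f"server-{i:02d}-bmc",
    )
    for i in range(1, 4)
]
print(json.dumps(hosts, indent=2))
```

Each dictionary would be serialized to its own YAML file in the /infra-env/demo-infra folder, together with the Secret that holds the BMC credentials.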

Creating the cluster

One final step before the cluster can be created is to configure the DNS with the VIPs (API and ingress) for the new cluster. If your environment supports it, the external-dns operator can be used to automate this step, but it was not used in this demonstration.
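The two records in question follow the usual OpenShift naming: api.<cluster>.<base domain> points at the API VIP and *.apps.<cluster>.<base domain> points at the ingress VIP. The sketch below prints them in zone-file style; the cluster name, domain, and addresses are placeholders.

```python
import ipaddress


def vip_dns_records(cluster: str, base_domain: str, api_vip: str, ingress_vip: str) -> list:
    """Return zone-file style records for the API and ingress VIPs of a cluster."""
    records = [
        (f"api.{cluster}.{base_domain}.", api_vip),
        (f"*.apps.{cluster}.{base_domain}.", ingress_vip),
    ]
    lines = []
    for name, address in records:
        # Validate the address and pick the record type from the IP version.
        record_type = "A" if ipaddress.ip_address(address).version == 4 else "AAAA"
        lines.append(f"{name} IN {record_type} {address}")
    return lines


for line in vip_dns_records("demo", "example.com", "192.0.2.10", "192.0.2.11"):
    print(line)
```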

The configuration for the new cluster is defined here.

If you inspect the kustomization file, you will see references to two Helm charts:

- The cluster-deployment Helm chart is responsible for the actual provisioning of the cluster. This Helm chart makes use of a feature called late binding, by which BMHs can be added to an infrastructure environment and later be bound to a cluster. Unfortunately, this feature is designed to work mostly from the user interface (UI). We used the patch-operator to work around this limitation and perform the same actions that would be performed by the Red Hat Advanced Cluster Management UI.

- The cluster-registration Helm chart is responsible for registering the newly created cluster in Red Hat Advanced Cluster Management and deploying OpenShift GitOps, which will apply Day 2 configurations.

Adding nodes to an existing cluster

Now that we have demonstrated how to create a new OpenShift cluster, what if one of the clusters needs additional capacity? Assuming there is spare capacity in the form of unused BMHs, we can follow the same approach: create a pull request that adds the new hostnames to the list of hostnames passed to the cluster creation Helm chart, and the new hosts will be added as nodes.
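The change carried by such a pull request is small. The sketch below shows it as an edit to a values dictionary; the key name bmhs and the overall values layout are hypothetical, since the actual schema is defined by the chart in the example repository.

```python
def add_hosts(values: dict, new_hosts: list, key: str = "bmhs") -> dict:
    """Return a copy of the chart values with new hostnames appended.

    Hosts that are already listed are skipped, so applying the same
    change twice leaves the list unchanged.
    """
    hosts = list(values.get(key, []))
    for host in new_hosts:
        if host not in hosts:
            hosts.append(host)
    return {**values, key: hosts}


# Hypothetical values for an existing three-node cluster.
current = {"clusterName": "demo", "bmhs": ["server-01", "server-02", "server-03"]}
updated = add_hosts(current, ["server-04", "server-02"])
print(updated["bmhs"])
```

Keeping the operation idempotent matters in a GitOps flow, where the same desired state may be reconciled many times.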

Wrap up

In this article, we demonstrated how to automate the end-to-end provisioning of bare metal clusters using declarative configurations and GitOps. With these processes streamlined, when new capacity enters the data center in the form of servers, automation can fully configure them to become nodes in a bare metal OpenShift cluster.

About the author

Raffaele is a full-stack enterprise architect with 20+ years of experience. Raffaele started his career in Italy as a Java Architect then gradually moved to Integration Architect and then Enterprise Architect. Later he moved to the United States to eventually become an OpenShift Architect for Red Hat consulting services, acquiring, in the process, knowledge of the infrastructure side of IT.

Currently, Raffaele holds a consulting position as a cross-portfolio application architect with a focus on OpenShift. For most of his career, Raffaele has worked with large financial institutions, which has given him an understanding of the enterprise processes and the security and compliance requirements of large enterprise customers.

Raffaele has become part of the CNCF TAG Storage and contributed to the Cloud Native Disaster Recovery whitepaper.

Recently Raffaele has been focusing on how to improve the developer experience by implementing internal development platforms (IDP).
