In our previous article, we experimented with the vdpa_sim device simulator. We now move on to the vp_vdpa vDPA driver (parent).

Introduction

vp_vdpa is a vDPA driver for virtio-pci devices: it bridges a virtio-pci device to the vDPA bus. It is especially useful for prototyping and testing future features.

vp_vdpa can also serve purposes other than testing, for example as a reference implementation. Some functionality, such as masking features to achieve virtual machine (VM) passthrough, can be separated out from a pure virtio device implementation. Compared with vdpa_sim, vp_vdpa has one drawback: it can only be used with a virtio-pci device.

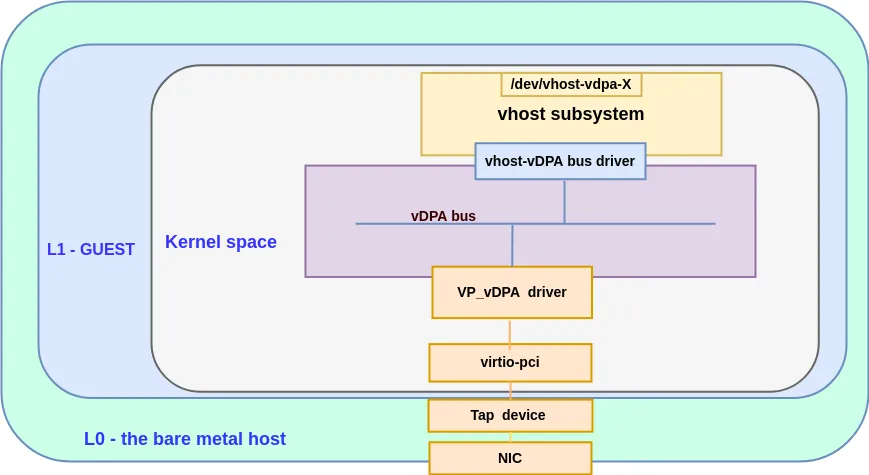

The following diagram shows the building blocks of a vp_vdpa device:

Figure 1: abstraction for vp_vdpa

Here we provide hands-on instructions for two use cases:

- Use case #3: vhost_vdpa + vp_vdpa works as a network backend for a VM with a guest kernel virtio-net driver
- Use case #4: vp_vdpa + the virtio_vdpa bus works as a virtio device (for container workloads)

General requirements

In order to run the vDPA setups, the following are required:

- A computer (physical machine, VM or container) running a Linux distribution. This guide focuses on Fedora 36, but the commands should not change significantly for other Linux distros
- A user with `sudo` permissions
- About 50GB of free space in your home directory
- At least 8GB of RAM
- A CPU that supports virtualization technology (this is not required for vdpa_sim)
- Nested virtualization enabled in KVM (you can get more information here)

Verify that your CPU supports virtualization technology

```
[user@L0 ~]# grep -E -o '(vmx|svm)' /proc/cpuinfo | sort | uniq
vmx
```

Note: VMX is the Intel processor flag and SVM is the AMD flag. If you get a match, then your CPU supports virtualization extensions — you can get more information here.

Enable nested in KVM

```
[user@L0 ~]# cat /sys/module/kvm_intel/parameters/nested
Y
```
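The check above covers Intel hosts only. As a minimal sketch, the following shell functions look at whichever of kvm_intel or kvm_amd is loaded. The function names and the `SYSFS_ROOT` override are hypothetical, added only so the helper can be tried against a scratch directory. kvm_intel reports the parameter as Y/N, while kvm_amd has traditionally reported 1/0, so both spellings are accepted.

```shell
#!/bin/sh
# Hypothetical helper: does a KVM "nested" parameter value mean enabled?
# kvm_intel prints Y/N; kvm_amd has traditionally printed 1/0.
nested_value_enabled() {
    case "$1" in
        Y|y|1) return 0 ;;
        *)     return 1 ;;
    esac
}

# Hypothetical helper: report nested status for the loaded KVM vendor module.
# SYSFS_ROOT is an assumption for testing only; it defaults to the real /sys.
check_nested() {
    root="${SYSFS_ROOT:-/sys}"
    for mod in kvm_intel kvm_amd; do
        param="$root/module/$mod/parameters/nested"
        if [ -r "$param" ]; then
            if nested_value_enabled "$(cat "$param")"; then
                echo "$mod: nested enabled"
                return 0
            fi
            echo "$mod: nested disabled"
            return 1
        fi
    done
    echo "no KVM vendor module loaded"
    return 2
}
```

On the L0 host, run `check_nested`. If it reports that nesting is disabled, it is commonly enabled persistently with a modprobe option such as `options kvm_intel nested=1` (or `options kvm_amd nested=1`) in a file under /etc/modprobe.d/, followed by reloading the module.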

Please note that when describing nested guests, we use the following terminology:

- L0 – the bare metal host, running KVM
- L1 – a VM running on L0; also called the "guest hypervisor" as it is itself capable of running KVM
- L2 – a VM running on L1, also called the "nested guest"

Use case #3: Experimenting with vhost_vdpa + vp_vdpa

Overview of the datapath

For a vp_vdpa device, we will use a virtio-net-pci device to simulate the real hardware. You can use it with real traffic as opposed to loopback traffic with vdpa_sim.

This is used in a nested environment, so we start by setting that up.

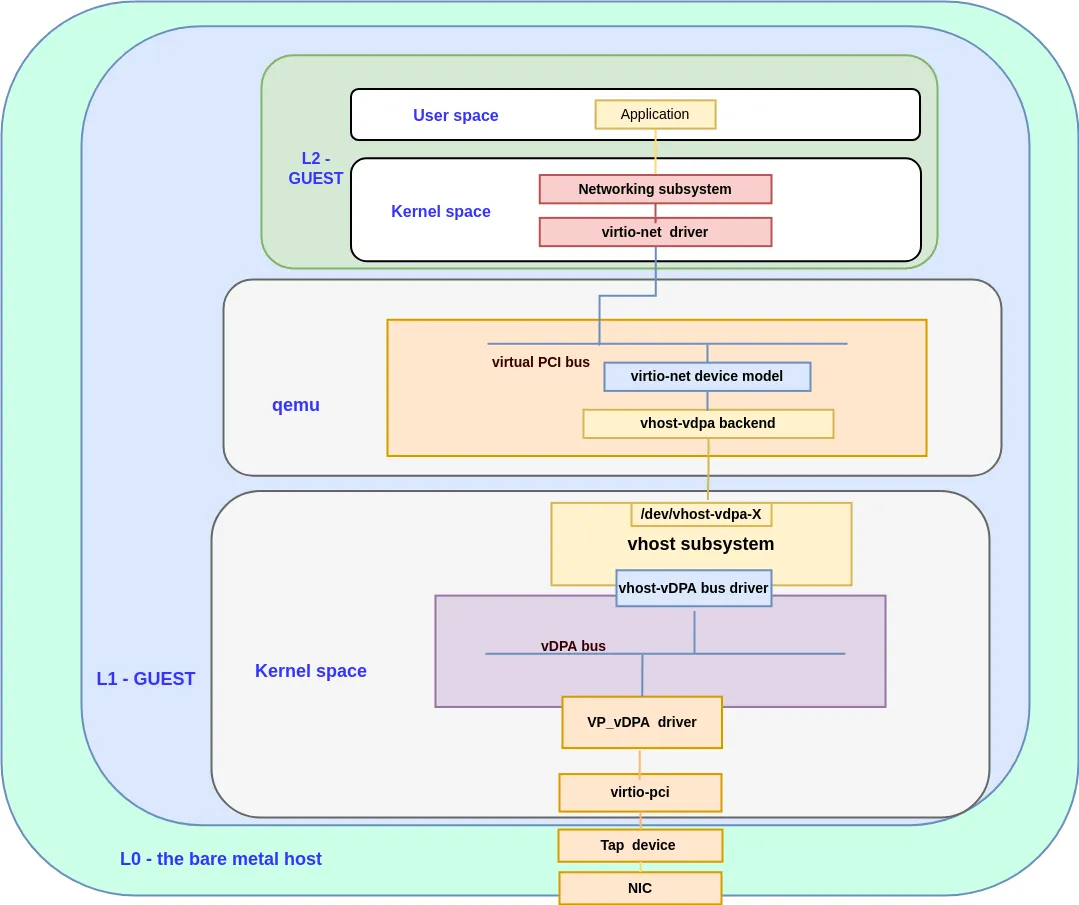

The following diagram shows the datapath for vhost-vDPA using a vp_vdpa device:

Figure 2: the datapath for the vp_vdpa with vhost_vdpa

Create the L1 VM

Start by creating the L1.qcow2.

These steps are the same as in our previous article, so we will only briefly list the commands:

```
[user@L0 ~]# sudo yum install libguestfs-tools guestfs-tools
[user@L0 ~]# sudo virt-sysprep --root-password password:changeme --uninstall cloud-init --network --install ethtool,pciutils,kernel-modules-internal --selinux-relabel -a Fedora-Cloud-Base-36-1.5.x86_64.qcow2
[user@L0 ~]# qemu-img create -f qcow2 -o preallocation=metadata L1.qcow2 50G
Formatting 'L1.qcow2', fmt=qcow2 cluster_size=65536 extended_l2=off preallocation=metadata compression_type=zlib size=53687091200 lazy_refcounts=off refcount_bits=16
[user@L0 ~]# virt-resize --resize /dev/sda2=+500M --expand /dev/sda5 Fedora-Cloud-Base-36-1.5.x86_64.qcow2 L1.qcow2
```

You can create the L2.qcow2 using the same steps.

Load the L1 guest

We provide two methods to load the VM: a QEMU command line and the libvirt API. Both are valid.

Method 1: Load L1 with QEMU

The virtio-net-pci device is the vDPA device's backend. We will create the vDPA device based on it:

```
-device intel-iommu,snoop-control=on \
-device virtio-net-pci,netdev=net0,disable-legacy=on,disable-modern=off,iommu_platform=on \
-netdev tap,id=net0,script=no,downscript=no \
```

The sample QEMU full command line is as follows:

```
[user@L0 ~]# sudo qemu-kvm \
    -drive file=/home/test/L1.qcow2,media=disk,if=virtio \
    -nographic \
    -m 8G \
    -enable-kvm \
    -M q35 \
    -net nic,model=virtio \
    -net user,hostfwd=tcp::2222-:22 \
    -device intel-iommu,snoop-control=on \
    -device virtio-net-pci,netdev=net0,disable-legacy=on,disable-modern=off,iommu_platform=on,bus=pcie.0,addr=0x4 \
    -netdev tap,id=net0,script=no,downscript=no \
    -smp 4 \
    -cpu host \
    2>&1 | tee vm.log
```

Method 2: Load L1 with libvirt

The following is a sample vp_vdpa_backend.xml. Make sure `<driver name='qemu' iommu='on'/>` is included in this XML file:

```
<interface type='network'>
  <mac address='02:ca:fe:fa:ce:02'/>
  <source network='default'/>
  <model type='virtio'/>
  <driver name='qemu' iommu='on'/>
  <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
</interface>
```

The `-device intel-iommu,snoop-control=on` option is not yet supported in libvirt, so we'll use `--qemu-commandline=` to add it.

As before, we use virt-install to create the L1 guest:

```
[user@L0 ~]# virt-install -n L1 \
    --check path_in_use=off \
    --ram 4096 \
    --vcpus 4 \
    --nographics \
    --virt-type kvm \
    --disk path=/home/test/L1.qcow2,bus=virtio \
    --noautoconsole \
    --import \
    --os-variant fedora36
Starting install...
Creating domain...                                          |    0 B  00:00:00
Domain creation completed.
```

Attach the backend tap device for vDPA:

```
[user@L0 ~]# cat vp_vdpa_backend.xml
<interface type='network'>
  <mac address='02:ca:fe:fa:ce:02'/>
  <source network='default'/>
  <model type='virtio'/>
  <driver name='qemu' iommu='on'/>
  <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
</interface>
[user@L0 ~]# virsh attach-device --config L1 vp_vdpa_backend.xml
Device attached successfully

[user@L0 ~]# virt-xml L1 --edit --confirm --qemu-commandline="-device intel-iommu,snoop-control=on"
--- Original XML
+++ Altered XML
@@ -157,4 +157,8 @@
       <driver caching_mode="on"/>
     </iommu>
   </devices>
+  <qemu:commandline xmlns:qemu="http://libvirt.org/schemas/domain/qemu/1.0">
+    <qemu:arg value="-device"/>
+    <qemu:arg value="intel-iommu,snoop-control=on"/>
+  </qemu:commandline>
 </domain>

Define 'L1' with the changed XML? (y/n): y
Domain 'L1' defined successfully.
```

Start the L1 guest:

```
[user@L0 ~]# virsh start L1
Domain 'L1' started

[user@L0 ~]# virsh console L1
Connected to domain 'L1'
Escape character is ^] (Ctrl + ])
```

Enter the L1 guest and prepare the environment

Update to the latest kernel (if required)

The latest vp_vdpa code was merged in kernel v5.19-rc2. If your kernel is this version or newer, you can skip these steps.
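To decide whether the kernel build below is needed, a minimal sketch like the following compares `uname -r` against a minimum version. It is a shortcut under stated assumptions: only the dotted numeric prefix of the release string is compared (so `5.19.0-rc2` counts as 5.19.0 and any 5.19 release candidate passes), and it relies on `sort -V` from GNU coreutils. `kernel_at_least` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: succeed if kernel release $1 is at least version $2.
kernel_at_least() {
    have="${1%%-*}"   # strip suffixes such as "-rc2" or "-200.fc36.x86_64"
    want="$2"
    # sort -V orders version strings; the smaller one is printed first.
    lowest=$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n 1)
    [ "$lowest" = "$want" ]
}
```

For example, `kernel_at_least "$(uname -r)" 5.19 && echo "kernel is new enough, skip the build"`.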

Below are the steps to compile the Linux kernel. For more information, see how to compile Linux kernel.

```
[user@L1 ~]# git clone git://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git
[user@L1 ~]# cd linux
[user@L1 ~]# git checkout v5.19-rc2
[user@L1 ~]# cp /boot/config-$(uname -r) .config
[user@L1 ~]# vim .config            # enable the vDPA kernel framework, see Note 1
[user@L1 ~]# make olddefconfig
[user@L1 ~]# make -j$(nproc)
[user@L1 ~]# sudo make modules_install
[user@L1 ~]# sudo make install
```

Now enable IOMMU (if required):

```
[user@L1 ~]# sudo grubby --update-kernel=/boot/vmlinuz-newly-installed --args="iommu=pt intel_iommu=on"
```

Replace /boot/vmlinuz-newly-installed with the path of the kernel you just installed, then reboot into it.

Note 1: We need to set the following lines in the .config file to enable vDPA kernel framework:

```
CONFIG_VIRTIO_VDPA=m
CONFIG_VDPA=m
CONFIG_VP_VDPA=m
CONFIG_VHOST_VDPA=m
```

Note 2: If you prefer to use `make menuconfig`, as of kernel 5.19 these options are found under Device Drivers, in the vDPA drivers, Virtio drivers and VHOST drivers menus.

Note 3: Here is a sample .config based on Fedora 36. This file is for reference only. The actual .config file may be different.
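Before building, a quick check of the four options from Note 1 can save a rebuild. The following is a sketch (the function name is hypothetical) that treats an option as present when it is set to `y` or `m` in the given .config file, and returns non-zero if any of them is missing.

```shell
#!/bin/sh
# Hypothetical helper: report vDPA-related options missing from a .config.
check_vdpa_config() {
    config="$1"
    missing=0
    for opt in CONFIG_VDPA CONFIG_VP_VDPA CONFIG_VHOST_VDPA CONFIG_VIRTIO_VDPA; do
        # Built in (=y) or built as a module (=m) both count as present.
        if grep -Eq "^${opt}=(y|m)$" "$config"; then
            echo "ok $opt"
        else
            echo "missing $opt"
            missing=1
        fi
    done
    return "$missing"
}
```

Run `check_vdpa_config .config` in the kernel source tree. The kernel tree also ships `scripts/config`, which can set an option without an editor (for example `scripts/config --module VDPA`); running `make olddefconfig` afterwards resolves dependencies.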

Update QEMU and vDPA tool (if required)

The latest support for vhost-vdpa backend was merged in v7.0.0-rc4, so you should use that version or above. Also we suggest you update the vDPA tool to v5.15.0 or above.

```
[user@L1 ~]# git clone https://github.com/qemu/qemu.git
```

Now build QEMU with the following commands (see this for more information):

```
[user@L1 ~]# cd qemu/
[user@L1 ~]# git checkout v7.0.0-rc4
[user@L1 ~]# ./configure --enable-vhost-vdpa --target-list=x86_64-softmmu
[user@L1 ~]# make && sudo make install
```

Build the vDPA tool, which is part of iproute2. First install its build dependency:

```
[user@L1 ~]# sudo dnf install libmnl-devel
```

Then clone, build and install the vDPA tool:

```
[user@L1 ~]# git clone git://git.kernel.org/pub/scm/network/iproute2/iproute2-next.git
[user@L1 ~]# cd iproute2-next
[user@L1 ~]# ./configure
[user@L1 ~]# make
[user@L1 ~]# sudo make install
```

Prepare the L2 guest image

This step is the same as creating the L1.qcow2 image.

Create the vdpa device in L1 guest

Load all the related kernel modules:

```
[user@L1 ~]# sudo modprobe vdpa
[user@L1 ~]# sudo modprobe vhost_vdpa
[user@L1 ~]# sudo modprobe vp_vdpa
```
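To confirm that the modprobe calls took effect, one option is to look the modules up in /proc/modules. This sketch uses hypothetical names (`modules_loaded`, plus a `MODULES_FILE` override for testing) and assumes the options were built as modules (`=m`), since built-in code does not appear in /proc/modules.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if every named module is listed in a
# /proc/modules style file (the module name is the first column).
modules_loaded() {
    file="${MODULES_FILE:-/proc/modules}"
    for m in "$@"; do
        if ! awk -v m="$m" '$1 == m { found = 1 } END { exit !found }' "$file"; then
            echo "not loaded: $m"
            return 1
        fi
    done
}
```

For example, `modules_loaded vdpa vhost_vdpa vp_vdpa && echo ready`. For use case #4, check `virtio_vdpa vp_vdpa` instead.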

Next, find the virtio-net device that is backed by the tap device in L0; this is the Ethernet device we will use. Its PCI address should be the same as the one you configured in QEMU or libvirt.

Here, 00:04.0 is the PCI address we added in vp_vdpa_backend.xml. You can confirm the address and the device ID by running the lspci command:

```
[user@L1 ~]# lspci | grep Ethernet
00:04.0 Ethernet controller: Red Hat, Inc. Virtio network device (rev 01)
[user@L1 ~]# lspci -n | grep 00:04.0
00:04.0 0200: 1af4:1041 (rev 01)
```

Unbind the device from the virtio-pci driver and bind it to the vp_vdpa driver, using the vendor and device IDs reported by `lspci -n`. Note that `sudo echo ... > file` does not work, because the redirection is performed by the unprivileged shell; piping into `sudo tee` performs the write as root:

```
[user@L1 ~]# echo 0000:00:04.0 | sudo tee /sys/bus/pci/drivers/virtio-pci/unbind
[user@L1 ~]# echo "1af4 1041" | sudo tee /sys/bus/pci/drivers/vp-vdpa/new_id
```
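The two sysfs writes can be wrapped in a small helper. This is a sketch under stated assumptions: the function names are hypothetical, the IDs are parsed from one line of `lspci -n` output in the format shown above, and `SYSFS_ROOT` is a hypothetical override so the helper can be exercised against a scratch directory instead of the real /sys.

```shell
#!/bin/sh
# Hypothetical helper: extract "vendor device" from one `lspci -n` line,
# e.g. "00:04.0 0200: 1af4:1041 (rev 01)" -> "1af4 1041".
ids_from_lspci_n() {
    echo "$1" | awk '{ split($3, id, ":"); print id[1], id[2] }'
}

# Hypothetical helper: move a virtio-pci device over to the vp-vdpa driver.
rebind_to_vp_vdpa() {
    addr="$1"   # full PCI address, e.g. 0000:00:04.0
    ids="$2"    # "vendor device", e.g. "1af4 1041"
    pci="${SYSFS_ROOT:-/sys}/bus/pci"
    # Detach the device from the generic virtio-pci driver ...
    echo "$addr" > "$pci/drivers/virtio-pci/unbind"
    # ... then add the IDs to vp-vdpa; the kernel then probes matching
    # devices that are not bound to a driver.
    echo "$ids" > "$pci/drivers/vp-vdpa/new_id"
}
```

Run it as root, for example `rebind_to_vp_vdpa 0000:00:04.0 "$(ids_from_lspci_n "$(lspci -n -s 00:04.0)")"`.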

Create the vDPA device using the vDPA tool:

```
[user@L1 ~]# vdpa mgmtdev show
pci/0000:00:04.0:
  supported_classes net
  max_supported_vqs 3
  dev_features CSUM GUEST_CSUM CTRL_GUEST_OFFLOADS MAC GUEST_TSO4 GUEST_TSO6 GUEST_ECN GUEST_UFO HOST_TSO4 HOST_TSO6 HOST_ECN HOST_UFO MRG_RXBUF STATUS CTRL_VQ CTRL_M
[user@L1 ~]# vdpa dev add name vdpa0 mgmtdev pci/0000:00:04.0
[user@L1 ~]# vdpa dev show -jp
{
    "dev": {
        "vdpa0": {
            "type": "network",
            "mgmtdev": "pci/0000:00:04.0",
            "vendor_id": 6900,
            "max_vqs": 3,
            "max_vq_size": 256
        }
    }
}
```
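The `vendor_id` field is printed in decimal. Converting it to hexadecimal, as in this tiny sketch (the function name is hypothetical), shows that 6900 is 0x1af4, the same vendor ID (Red Hat, Inc.) that `lspci -n` reported for 00:04.0. Likewise, a `max_vqs` of 3 corresponds to one receive queue, one transmit queue and the control virtqueue advertised by CTRL_VQ.

```shell
#!/bin/sh
# Hypothetical helper: print a decimal vendor ID (as shown by
# `vdpa dev show`) as a 4-digit hexadecimal PCI vendor ID.
vendor_hex() {
    printf '%04x\n' "$1"
}

vendor_hex 6900   # prints 1af4
```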

Verify that vhost_vdpa is loaded correctly, and ensure the bus driver is indeed vhost_vdpa using the following commands:

```
[user@L1 ~]# ls -l /sys/bus/vdpa/devices/vdpa0/driver
lrwxrwxrwx. 1 root root 0 Jun 13 05:28 /sys/bus/vdpa/devices/vdpa0/driver -> ../../../../../bus/vdpa/drivers/vhost_vdpa
[user@L1 ~]# ls -l /dev/ | grep vdpa
crw-------. 1 root root 236, 0 Jun 13 05:28 vhost-vdpa-0
[user@L1 ~]# driverctl -b vdpa list-devices
vdpa0 vhost_vdpa
```
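The same check can be scripted by resolving the `driver` symlink shown above. In this sketch the function name and the `SYSFS_ROOT` override are hypothetical; the expected result is `vhost_vdpa` here and `virtio_vdpa` in use case #4.

```shell
#!/bin/sh
# Hypothetical helper: print the vDPA bus driver a device is bound to,
# or "unbound" if the device has no driver symlink.
vdpa_bus_driver() {
    link="${SYSFS_ROOT:-/sys}/bus/vdpa/devices/$1/driver"
    if [ -L "$link" ]; then
        # The link target ends in .../bus/vdpa/drivers/<driver name>.
        basename "$(readlink "$link")"
    else
        echo "unbound"
    fi
}
```

For example, `[ "$(vdpa_bus_driver vdpa0)" = vhost_vdpa ] && echo "vdpa0 is ready for QEMU"`.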

Launch the L2 guest

As in the previous blog, we provide two methods for this: QEMU or libvirt.

Method 1: Load L2 guest with QEMU

Set `ulimit -l unlimited`. `ulimit -l` sets the maximum size of memory that may be locked into RAM. vhost_vdpa needs to lock (pin) the guest's pages so that the hardware's direct memory access (DMA) works correctly. Here we set it to unlimited, but you can choose the size you need.

```
[user@L1 ~]# ulimit -l unlimited
```

If you forget to set this, you may get the following error message from QEMU:

```
[  471.157898] DMAR: DRHD: handling fault status reg 2
[  471.157920] DMAR: [DMA Read NO_PASID] Request device [00:04.0] fault addr 0x40ae000 [fault reason 0x06] PTE Read access is not set
qemu-system-x86_64: failed to write, fd=12, errno=14 (Bad address)
qemu-system-x86_64: vhost vdpa map fail!
qemu-system-x86_64: vhost-vdpa: DMA mapping failed, unable to continue
```
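To catch this before launching QEMU, the following sketch compares the value printed by `ulimit -l` (in KiB, or `unlimited`) with the guest's RAM size in MiB. The function name is hypothetical, and treating the guest RAM size as the minimum amount to lock is an assumption: QEMU may pin somewhat more, so a pass is a necessary rather than a sufficient condition.

```shell
#!/bin/sh
# Hypothetical helper: can a memlock limit ("unlimited" or KiB, as printed
# by `ulimit -l`) cover a guest of $2 MiB?
memlock_covers_guest() {
    limit="$1"
    guest_mib="$2"
    if [ "$limit" = "unlimited" ]; then
        return 0
    fi
    need_kib=$((guest_mib * 1024))
    [ "$limit" -ge "$need_kib" ]
}
```

For the L2 guest launched below with `-m 4G`, run `memlock_covers_guest "$(ulimit -l)" 4096`.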

Launch the L2 guest VM.

The device /dev/vhost-vdpa-0 is the vDPA device we can use. The following is a simple example of using QEMU to launch a VM with vhost_vdpa:

```
[user@L1 ~]# sudo qemu-kvm \
    -nographic \
    -m 4G \
    -enable-kvm \
    -M q35 \
    -drive file=/home/test/L2.qcow2,media=disk,if=virtio \
    -netdev type=vhost-vdpa,vhostdev=/dev/vhost-vdpa-0,id=vhost-vdpa0 \
    -device virtio-net-pci,netdev=vhost-vdpa0,bus=pcie.0,addr=0x7 \
    -smp 4 \
    -cpu host \
    2>&1 | tee vm.log
```

Method 2: Load L2 guest with libvirt

Create the L2 guest VM:

```
[user@L1 ~]# virt-install -n L2 \
    --ram 2048 \
    --vcpus 2 \
    --nographics \
    --virt-type kvm \
    --disk path=/home/test/L2.qcow2,bus=virtio \
    --noautoconsole \
    --import \
    --os-variant fedora36
```

Use virsh edit to add the vDPA device.

Here is a sample vDPA device definition (you can get more information here):

```
<devices>
  <interface type='vdpa'>
    <source dev='/dev/vhost-vdpa-0'/>
  </interface>
</devices>
```

Attach the vDPA device to the L2 guest (alternatively, use virsh edit to edit the L2 domain XML):

```
[user@L1 ~]# cat vdpa.xml
<interface type='vdpa'>
  <source dev='/dev/vhost-vdpa-0'/>
  <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/>
</interface>
[user@L1 ~]# virsh attach-device --config L2 vdpa.xml
Device attached successfully
```

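With libvirt, the guest's memory must also be locked (the equivalent of `ulimit -l` in Method 1). As a workaround, enable memory locking in the domain definition:

```
[user@L1 ~]# virt-xml L2 --edit --memorybacking locked=on
Domain 'L2' defined successfully.
```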
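To confirm that the change was saved, you can look for the `<locked/>` element in the domain XML. The sketch below (hypothetical function name) reads XML on stdin and uses a simple pattern match rather than a real XML parser. That is enough for a quick check, but it assumes the element is written as `<locked/>` inside `<memoryBacking>`.

```shell
#!/bin/sh
# Hypothetical check: does the domain XML on stdin lock guest memory?
# Newlines are removed first so the pattern can span several lines.
has_locked_memory() {
    tr -d '\n' | grep -q '<memoryBacking>.*<locked/>.*</memoryBacking>'
}
```

For example, `virsh dumpxml L2 | has_locked_memory && echo "guest memory will be locked"`.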

Start L2 guest:

```
[user@L1 ~]# virsh start L2
Domain 'L2' started

[user@L1 ~]# virsh console L2
Connected to domain 'L2'
Escape character is ^] (Ctrl + ])
```

After the guest boots up we can log into it. We will find a new virtio-net port in the L2 guest. You can verify it by checking the PCI address:

```
[user@L2 ~]# ethtool -i eth1
driver: virtio_net
version: 1.0.0
firmware-version:
expansion-rom-version:
bus-info: 0000:00:07.0
supports-statistics: yes
supports-test: no
supports-eeprom-access: no
supports-register-dump: no
supports-priv-flags: no
[user@L2 ~]# lspci | grep Eth
...
00:07.0 Ethernet controller: Red Hat, Inc. Virtio network device (rev 01)
```

Verify running traffic with vhost_vdpa in L2 guest

Now that we have a vDPA port, it can work like a real NIC. You can verify this with a simple ping.

Enter the L0 and set the tap device’s IP address. This is the backend to which vDPA port in L2 guest connects:

```
[user@L0 ~]# ip addr add 111.1.1.1/24 dev tap0
[user@L0 ~]# ip addr show tap0
12: tap0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UNKNOWN group default qlen 1000
    link/ether ca:12:db:61:50:71 brd ff:ff:ff:ff:ff:ff
    inet 111.1.1.1/24 brd 111.1.1.255 scope global tap0
       valid_lft forever preferred_lft forever
    inet6 fe80::c812:dbff:fe61:5071/64 scope link
       valid_lft forever preferred_lft forever
[user@L0 ~]# ip -s link show tap0
12: tap0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UNKNOWN mode DEFAULT group default qlen 1000
    link/ether ca:12:db:61:50:71 brd ff:ff:ff:ff:ff:ff
    RX:  bytes packets errors dropped  missed   mcast
          3612      26      0     114       0       0
    TX:  bytes packets errors dropped carrier collsns
          1174      15      0       0       0       0
```

Enter the L2 guest and set the vDPA port's IP address:

```
[user@L2 ~]# ip addr add 111.1.1.2/24 dev eth1
[user@L2 ~]# ip -s link show eth1
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT gr0
    link/ether 52:54:00:12:34:57 brd ff:ff:ff:ff:ff:ff
    RX:  bytes packets errors dropped  missed   mcast
          9724      78      0       0       0       0
    TX:  bytes packets errors dropped carrier collsns
         11960      86      0       0       0       0
```

Now the vDPA port can ping the tap port in L0:

```
[user@L2 ~]# ping 111.1.1.1 -w 10
PING 111.1.1.1 (111.1.1.1) 56(84) bytes of data.
64 bytes from 111.1.1.1: icmp_seq=1 ttl=64 time=6.69 ms
64 bytes from 111.1.1.1: icmp_seq=2 ttl=64 time=1.03 ms
64 bytes from 111.1.1.1: icmp_seq=3 ttl=64 time=1.97 ms
64 bytes from 111.1.1.1: icmp_seq=4 ttl=64 time=2.28 ms
64 bytes from 111.1.1.1: icmp_seq=5 ttl=64 time=0.973 ms
64 bytes from 111.1.1.1: icmp_seq=6 ttl=64 time=2.00 ms
64 bytes from 111.1.1.1: icmp_seq=7 ttl=64 time=1.89 ms
64 bytes from 111.1.1.1: icmp_seq=8 ttl=64 time=1.17 ms
64 bytes from 111.1.1.1: icmp_seq=9 ttl=64 time=0.923 ms
64 bytes from 111.1.1.1: icmp_seq=10 ttl=64 time=1.99 ms

--- 111.1.1.1 ping statistics ---
10 packets transmitted, 10 received, 0% packet loss, time 9038ms
rtt min/avg/max/mdev = 0.923/2.091/6.685/1.606 ms
[user@L2 ~]# ip -s link show eth1
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group defaul0
    link/ether 52:54:00:12:34:57 brd ff:ff:ff:ff:ff:ff
    RX:  bytes packets errors dropped  missed   mcast
          1064      12      0       0       0       0
    TX:  bytes packets errors dropped carrier collsns
         12228      88      0       0       0       0
```

You can see the traffic passing from the L2 guest to L0. You can also attach the tap device to a bridge that includes a physical Ethernet interface; the vDPA port then carries real traffic from L2 to the outside network.
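If you want to script this connectivity check, the packet-loss figure can be extracted from ping's summary line. The sketch below (hypothetical function name) reads ping output on stdin and prints the loss percentage; the sample line is taken from the output above.

```shell
#!/bin/sh
# Hypothetical helper: print the packet-loss percentage from ping output.
ping_loss_pct() {
    sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}

echo "10 packets transmitted, 10 received, 0% packet loss, time 9038ms" | ping_loss_pct
```

For example, `[ "$(ping -c 10 111.1.1.1 | ping_loss_pct)" = 0 ] && echo "vDPA port is passing traffic"`.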

Use case #4: Experimenting with virtio_vdpa + vp_vdpa

Overview of the datapath

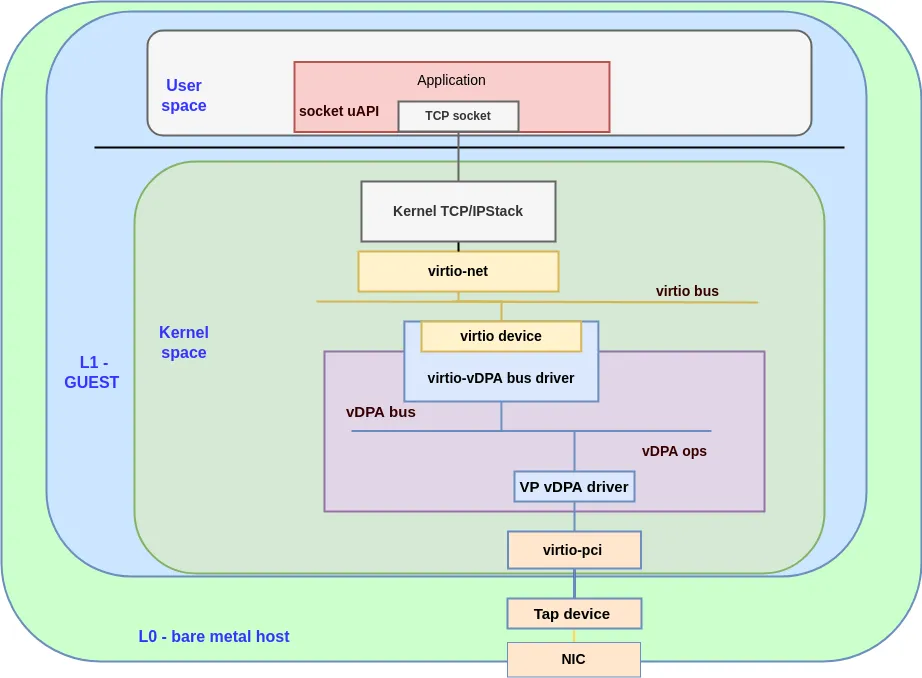

For the virtio_vdpa part, we will use a virtio-net-pci device to simulate the vDPA hardware,

virtio_vdpa will create a virtio device in the virtio bus. For more information on the virtio_vdpa bus see vDPA kernel framework part 3: usage for VMs and containers.

Figure 3: the datapath for the vp_vdpa with vritio_vdpa

Setting up your environment

Load the L1 guest

This part is the same as in use case #3: vhost_vdpa + vp_vdpa.

Enter the L1 guest and prepare the environment

Update the kernel to v5.19-rc2 or later and the vDPA tool to v5.15.0 or later. This part is also the same as in use case #3.

Create the vDPA device

Load the modules:

```
[user@L1 ~]# sudo modprobe virtio_vdpa
[user@L1 ~]# sudo modprobe vp_vdpa
```

Create the vDPA device. As before, you can find the PCI device by running the lspci command:

```
[user@L1 ~]# lspci | grep Ethernet
00:04.0 Ethernet controller: Red Hat, Inc. Virtio network device (rev 01)
[user@L1 ~]# lspci -n | grep 00:04.0
00:04.0 0200: 1af4:1041 (rev 01)
[user@L1 ~]# echo 0000:00:04.0 | sudo tee /sys/bus/pci/drivers/virtio-pci/unbind
[user@L1 ~]# echo "1af4 1041" | sudo tee /sys/bus/pci/drivers/vp-vdpa/new_id
[user@L1 ~]# vdpa mgmtdev show
pci/0000:00:04.0:
  supported_classes net
  max_supported_vqs 3
  dev_features CSUM GUEST_CSUM CTRL_GUEST_OFFLOADS MAC GUEST_TSO4 GUEST_TSO6 GUEST_ECN GUEST_UFO HOST_TSO4M
[user@L1 ~]# vdpa dev add name vdpa0 mgmtdev pci/0000:00:04.0
[user@L1 ~]# vdpa dev show -jp
{
    "dev": {
        "vdpa0": {
            "type": "network",
            "mgmtdev": "pci/0000:00:04.0",
            "vendor_id": 6900,
            "max_vqs": 3,
            "max_vq_size": 256
        }
    }
}
```

Verify that the device is bound to virtio_vdpa correctly:

```
[user@L1 ~]# ethtool -i eth1
driver: virtio_net
version: 1.0.0
firmware-version:
expansion-rom-version:
bus-info: vdpa0
supports-statistics: yes
supports-test: no
supports-eeprom-access: no
supports-register-dump: no
supports-priv-flags: no
```

Note: the bus-info field here should point to the vDPA bus device (vdpa0) rather than to a PCI address.
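When several virtio-net interfaces exist, it may not be obvious which one is backed by vdpa0. As a sketch with hypothetical function names, the bus-info field can be parsed from `ethtool -i` output and matched against the vDPA device name. `netdev_on_bus` assumes ethtool is installed and simply tries every interface listed in /sys/class/net.

```shell
#!/bin/sh
# Hypothetical helper: print the bus-info field from `ethtool -i` output (stdin).
ethtool_bus_info() {
    awk -F': ' '$1 == "bus-info" { print $2 }'
}

# Hypothetical helper: list interfaces whose bus-info equals $1 (e.g. vdpa0).
netdev_on_bus() {
    for path in /sys/class/net/*; do
        ifname="${path##*/}"
        if [ "$(ethtool -i "$ifname" 2>/dev/null | ethtool_bus_info)" = "$1" ]; then
            echo "$ifname"
        fi
    done
}
```

In the setup above, `netdev_on_bus vdpa0` would be expected to print eth1.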

Running traffic on the virtio_vdpa

Now that we have a vp_vdpa port, we can run traffic the same way as in use case #3.

Set the tap device’s address in L0:

```
[user@L0 ~]# ip addr add 111.1.1.1/24 dev tap0
[user@L0 ~]# ip addr show tap0
12: tap0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UNKNOWN group default qlen 1000
    link/ether ca:12:db:61:50:71 brd ff:ff:ff:ff:ff:ff
    inet 111.1.1.1/24 brd 111.1.1.255 scope global tap0
       valid_lft forever preferred_lft forever
    inet6 fe80::c812:dbff:fe61:5071/64 scope link
       valid_lft forever preferred_lft forever
[user@L0 ~]# ip -s link show tap0
12: tap0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UNKNOWN mode DEFAULT group default qlen 1000
    link/ether ca:12:db:61:50:71 brd ff:ff:ff:ff:ff:ff
    RX:  bytes packets errors dropped  missed   mcast
         26446     181      0     114       0       0
    TX:  bytes packets errors dropped carrier collsns
          2378      29      0       0       0       0
```

Set the vDPA port's IP address in L1, and ping the tap device in L0:

```
[user@L1 ~]# ip addr add 111.1.1.2/24 dev eth1
[user@L1 ~]# ip -s link show eth2
7: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
    link/ether 52:54:00:12:34:58 brd ff:ff:ff:ff:ff:ff
    RX:  bytes packets errors dropped  missed   mcast
          4766      52      0       0       0       0
    TX:  bytes packets errors dropped carrier collsns
         45891     309      0       0       0       0
    altname enp0s4
[user@L1 ~]# ping 111.1.1.1 -w10
PING 111.1.1.1 (111.1.1.1) 56(84) bytes of data.
64 bytes from 111.1.1.1: icmp_seq=1 ttl=64 time=1.11 ms
64 bytes from 111.1.1.1: icmp_seq=2 ttl=64 time=1.04 ms
64 bytes from 111.1.1.1: icmp_seq=3 ttl=64 time=1.06 ms
64 bytes from 111.1.1.1: icmp_seq=4 ttl=64 time=0.901 ms
64 bytes from 111.1.1.1: icmp_seq=5 ttl=64 time=0.709 ms
64 bytes from 111.1.1.1: icmp_seq=6 ttl=64 time=0.696 ms
64 bytes from 111.1.1.1: icmp_seq=7 ttl=64 time=0.778 ms
64 bytes from 111.1.1.1: icmp_seq=8 ttl=64 time=0.650 ms
64 bytes from 111.1.1.1: icmp_seq=9 ttl=64 time=1.06 ms
64 bytes from 111.1.1.1: icmp_seq=10 ttl=64 time=0.856 ms

--- 111.1.1.1 ping statistics ---
10 packets transmitted, 10 received, 0% packet loss, time 9015ms
rtt min/avg/max/mdev = 0.650/0.885/1.106/0.164 ms
[user@L1 ~]# ip -s link show eth1
4: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group defaul0
    link/ether 52:54:00:12:34:57 brd ff:ff:ff:ff:ff:ff
    RX:  bytes packets errors dropped  missed   mcast
          1064      12      0       0       0       0
    TX:  bytes packets errors dropped carrier collsns
         12328      88      0       0       0       0
```

You can see the traffic passing from the L1 guest to L0. You can also attach the tap device to a bridge that includes a physical Ethernet interface; the vDPA port then carries real traffic from L1 to the outside network.
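Instead of reading counters off `ip -s link show`, you can snapshot them from sysfs before and after the ping and compare. This is a sketch with hypothetical function names and a hypothetical `SYSFS_ROOT` override for testing; it reads the standard rx_packets and tx_packets statistics files of the interface.

```shell
#!/bin/sh
# Hypothetical helper: print "rx_packets tx_packets" for interface $1.
pkt_counters() {
    stats="${SYSFS_ROOT:-/sys}/class/net/$1/statistics"
    echo "$(cat "$stats/rx_packets") $(cat "$stats/tx_packets")"
}

# Hypothetical helper: succeed if both counters grew between two snapshots.
counters_grew() {
    set -- $1 $2   # intentionally unquoted: split "rx tx" "rx tx" into 4 args
    [ "$3" -gt "$1" ] && [ "$4" -gt "$2" ]
}
```

For example: `before=$(pkt_counters eth1); ping -c 10 111.1.1.1 > /dev/null; after=$(pkt_counters eth1); counters_grew "$before" "$after" && echo "traffic went through eth1"`.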

Summary

In this (and the previous) article, we’ve guided you through the steps of setting up a vDPA testing environment without using vDPA hardware. We’ve covered use cases that interact with the virtio_vdpa bus and the vhost_vdpa bus.

We hope this helps clarify concepts mentioned in previous vDPA blogs and makes things more concrete by grounding them in actual Linux tooling.

I would like to thank the virtio-networking team for helping review this blog — they gave me a lot of help. I also need to thank Lei Yang from the KVM-QE team — he verified the whole process, step by step. I really appreciate all your help.

To keep up with the virtio-networking community we invite you to sign up to the virtio-networking mailing list. This is a public mailing list, and you can register through the Mailman page.

About the authors

Cindy is a Senior Software Engineer with Red Hat, specializing in Virtualization and Networking.

Experienced Senior Software Engineer working for Red Hat with a demonstrated history of working in the computer software industry. Maintainer of qemu networking subsystem. Co-maintainer of Linux virtio, vhost and vdpa driver.
