Edge computing: How to architect distributed scalable 5G with observability

Telecommunication, media, and entertainment (TME) solutions are a multiverse of application catalogs that work effectively and efficiently in harmony. Organizations deploy TME solutions to provide high availability (with redundancy to cover possible disaster scenarios), accuracy, and low latency (for a better user experience and higher satisfaction) and to meet regulatory requirements.

In order to achieve these goals, TME solutions usually include their own operational support systems (OSS) and business support systems (BSS). OSS includes an all-seeing eye that observes the running systems and generates insights for proactive and predictive operations. BSS ties the running systems into business workflows.

Recent developments with microservices in application development and delivery have created scalability and complexity issues with the design, implementation, and maintenance of solutions like 5G Core platforms. (See Figure 1 for the mesh of 5G services interacting to deliver 5G connectivity.) As a result, different organizations have brought various approaches to market.

Our group's previous articles presented the 5G Open HyperCore architecture, using Kubernetes for edge applications, improving container observability with a service mesh, comparing init containers in Istio CNI, and a working solution for a self-scaling 5G core implementation.

This article offers additional details on 5G at the edge, including:

- Collecting and analyzing requirements from geodistributed 5G core solutions across various infrastructures, including on-premises and multicloud.

- Revisiting possible solution options for observability and traffic management using different technology stacks.

- Presenting pros and cons of each approach.

- Sharing experience-driven ideas for the future.

Background and definitions

A typical hyperscaler's infrastructure contains one or more regions with separate availability zones inside which compute, network, and storage services are presented with distributed global network connectivity.

Hyperscalers aim to match their service and product catalog offerings across all of their regions and zones. However, there can be significant variations in availability, accessibility, and affordability among regions, which may limit a tenant workload's portability. This solution aims to avoid such discrepancies with infrastructure-neutral platform components such as Red Hat OpenShift, Red Hat Advanced Cluster Management, Red Hat Advanced Cluster Security, and Red Hat OpenShift Service Mesh.

This scope is centered on fulfilling the basic needs and requirements of a 5G Core deployment using real-world examples, such as having multiple interfaces available to 5G Core microservices, enabling service discovery between them, creating performant networking across all services and numerous cluster footprints and geographies, and complying with local telco service provider security requirements.

Here are some acronyms to know:

- 3GPP: 3rd Generation Partnership Project (the body that develops mobile telecommunications standards)

- AMF: Access and mobility management function

- Cell_id: An identification number for each base transceiver station

- CNF: Cloud-native network functions

- DNN: Data network name

- gNB: Next-generation NodeB (the base station in 5G networks)

- GTP: General packet radio service (GPRS) tunneling protocol (IP-based protocol that carries GPRS data within mobile networks)

- IMEI: International Mobile Equipment Identity (each device's unique ID number)

- NRF: Network repository function

- RAN: Radio access network

- SMF: Session management function

- TAC: Tracking area code (for selecting the UPF based on the user's location)

- UPF: User plane function

- UE: User equipment

- ZTP: Zero-touch provisioning (allows automatic device configuration)

5G Core standards

It is important to understand how the 3GPP standard envisions a distributed 5G Core, especially for user-plane function placement and selection with respect to the user's or consumer's location.

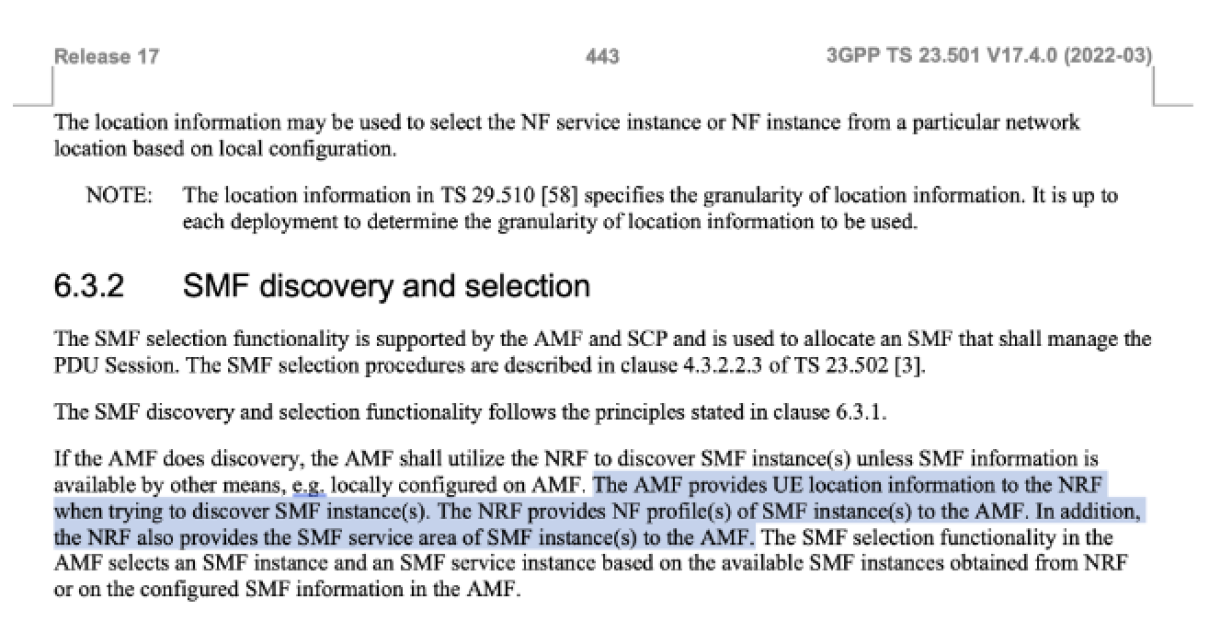

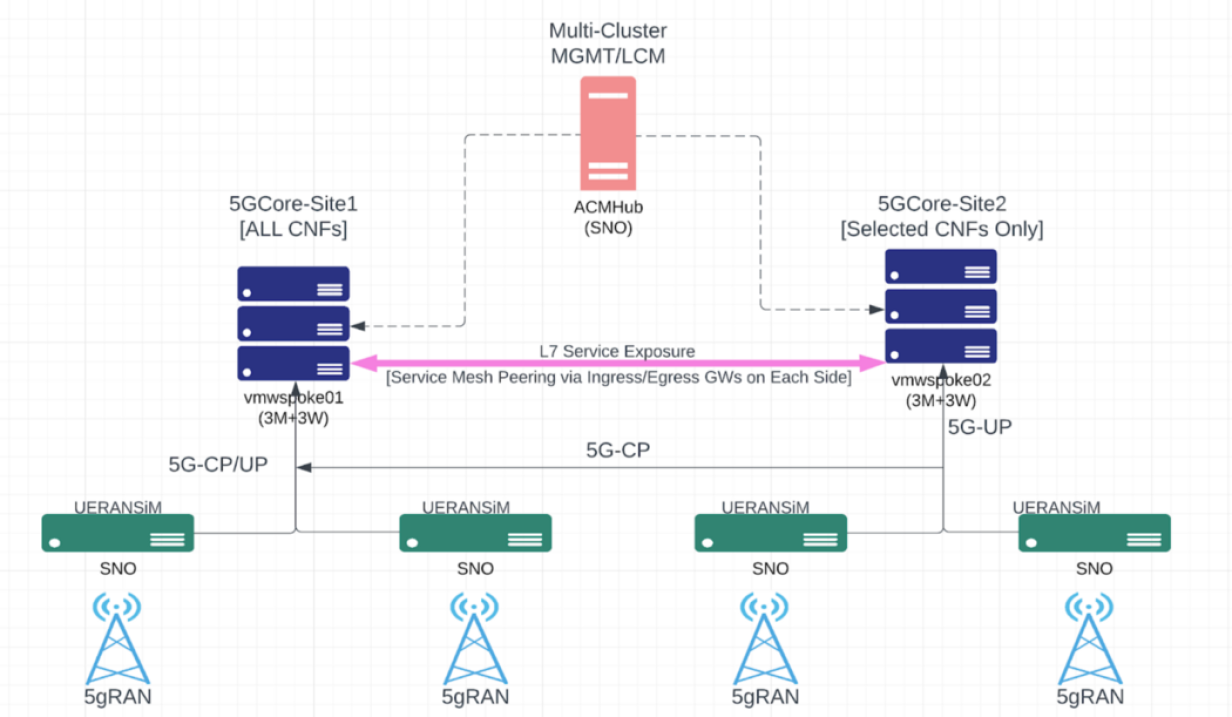

As Figure 3 shows, 3GPP allows the AMF to select a nearby SMF based on UE location information, using service discovery through an NRF query. In this approach, SMF and UPF must be deployed together as distributed 5G Core CNF bundles.

As Figure 4 shows, SMF can be allowed (or disallowed) to control multiple distributed UPF instances based on the 5G Core vendor's implementation or how the solution is configured and deployed in the field. In this approach, only the UPF's CNF must be distributed across different locations.

These two approaches differ significantly, and each comes with its own challenges. In our testbed setup, we configured our central site SMF to access multiple UPFs (central and remote). Therefore, only the UPF CNF is distributed across different clusters or locations.

The correct UPF instance can be determined by numerous variables, including the DNN, TAC (the example in Figure 5), and cell_id, that have been received from the gNB or UE by AMF or SMF.
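
The selection logic described above can be sketched as a small rule matcher. This is only an illustration, not how any particular SMF implements it: the rule structure, the "most specific rule wins" policy, and the names upf-central and upf-remote are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class UpfRule:
    """One selection rule; a field left as None matches any value."""
    upf: str
    dnn: Optional[str] = None
    tac: Optional[int] = None
    cell_id: Optional[int] = None

    def matches(self, dnn: str, tac: int, cell_id: int) -> bool:
        return (
            self.dnn in (None, dnn)
            and self.tac in (None, tac)
            and self.cell_id in (None, cell_id)
        )

    def specificity(self) -> int:
        # Number of fields this rule actually constrains.
        return sum(v is not None for v in (self.dnn, self.tac, self.cell_id))


def select_upf(rules, dnn, tac, cell_id):
    """Return the UPF of the most specific matching rule, or None."""
    candidates = [r for r in rules if r.matches(dnn, tac, cell_id)]
    if not candidates:
        return None
    return max(candidates, key=UpfRule.specificity).upf


# Hypothetical rules: a catch-all central UPF and a remote UPF for TAC 200.
RULES = [
    UpfRule(upf="upf-central"),
    UpfRule(upf="upf-remote", dnn="internet", tac=200),
]

if __name__ == "__main__":
    print(select_upf(RULES, dnn="internet", tac=200, cell_id=1))
    print(select_upf(RULES, dnn="internet", tac=100, cell_id=1))
```

With these hypothetical rules, a UE attached in tracking area 200 is steered to the remote UPF for local breakout, while every other session falls back to the central UPF.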

One last stop before delving into solution options: We want to emphasize the importance of the automation that fuels the speed of delivering agile solution deployments across different infrastructures. We have been working diligently on designing, testing, and deploying autonomously provisioned 5G Core solutions from the bottom (Infrastructure-as-a-Service) and top (Software-as-a-Service) layers.

ZTP leverages GitOps patterns to deliver a consistent, drift-free software delivery experience. Combining ZTP with a healthy operational control loop that collects data, analyzes it, and triggers the necessary actions on existing deployments helps prevent vulnerabilities and brings the cloud era's elasticity and efficient resource consumption into our deployments.
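
As a rough illustration of such a control loop, the sketch below compares a desired state (as it might be rendered from Git) with the observed state of a cluster and plans corrective actions. The state layout, the action names, and the CNF entries are assumptions for the example; real GitOps tooling performs this reconciliation against Kubernetes resources.

```python
def plan_actions(desired, observed):
    """Compare desired and observed state and list the actions that remove drift.

    Both arguments map a component name to its spec (any comparable value).
    """
    actions = []
    for name, spec in desired.items():
        if name not in observed:
            actions.append(("create", name))
        elif observed[name] != spec:
            actions.append(("update", name))
    for name in observed:
        if name not in desired:
            actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    # Hypothetical states for one spoke cluster.
    desired = {"upf": {"replicas": 2}, "smf": {"replicas": 1}}
    observed = {"upf": {"replicas": 3}, "amf": {"replicas": 1}}
    for action, name in plan_actions(desired, observed):
        print(action, name)
```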

Three main network fabrics connect the distributed 5G Core deployments:

- Cluster management fabric (hub to spokes)

- Inter-cluster connect (spoke to spoke, repeated across all the spoke clusters that exist)

- Private access to the enabler's fabric (hub or spokes to enablers-access-gateway or hyperscaler-internal-api)
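
To get a feel for how the inter-cluster fabric grows, the sketch below enumerates spoke-to-spoke links under the assumption of a full mesh (every spoke connected to every other spoke). The cluster names are examples only; prod3-5gcore is hypothetical.

```python
from itertools import combinations


def spoke_links(spokes):
    """Return every unordered spoke pair, assuming a full mesh of spokes."""
    return list(combinations(sorted(spokes), 2))


if __name__ == "__main__":
    spokes = ["prod1-5gcore", "prod2-5gcore", "prod3-5gcore"]
    for a, b in spoke_links(spokes):
        print(f"{a} <-> {b}")
```

Under that assumption, n spokes need n(n-1)/2 interconnects, so the link count grows quadratically as sites are added.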

Three possible approaches

We have identified three possible solution approaches (see the OSI layer architecture model for descriptions of L2, L3, and L7):

- Service mesh federation: Service mesh peering-driven interconnect traffic observability and steering

- Layer 2/Layer 3 integrated clusters with Submariner: L2/L3 interconnectivity-driven traffic observability and steering

- Layer 7 integrated and observed clusters: L7 mesh connectivity with or without agents

Each solution is covered below.

1. Service mesh federation

Federation is a deployment model that lets you share services and workloads between separate meshes managed in distinct administrative domains. Service mesh federation assumes each mesh is managed individually and retains its own administrator. The default behavior is that no communication is permitted and no information is shared between meshes. Information sharing between meshes is on an explicit opt-in basis (aligned with cloud-era least-privilege operations). Nothing is shared in a federated mesh unless it has been configured for sharing.

You can configure the ServiceMeshControlPlane on each service mesh to create ingress and egress gateways specifically for the federation and specify the mesh's trust domain. Federation also involves creating additional federation files. Use the following resources to configure the federation between two or more meshes:

- A ServiceMeshPeer resource declares the federation between a pair of service meshes.

- An ExportedServiceSet resource declares that one or more services from the mesh are available for use by a peer mesh. An example is a UPF exported from 5GCore-Site2 for a geolocal breakout for UE.

- An ImportedServiceSet resource declares which services exported by a peer mesh will be imported into the mesh. An example is a UPF imported to 5GCore-Site1 to include it for registered gNBs from that particular geolocation based on the TAC or another element.
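
A minimal sketch of what the export and import declarations might look like, built as Python dictionaries and printed as JSON (which kubectl and oc also accept). The API version and field names reflect our understanding of the OpenShift Service Mesh 2.x federation API and should be verified against the product documentation. The mesh, namespace, and service names are hypothetical.

```python
import json

# Assumption: API group/version used by OpenShift Service Mesh 2.x federation.
API_VERSION = "federation.maistra.io/v1"


def exported_service_set(peer, mesh_ns, svc_ns, svc_name):
    """Declare one service as available to the named peer mesh."""
    return {
        "apiVersion": API_VERSION,
        "kind": "ExportedServiceSet",
        # Assumption: the resource is named after the ServiceMeshPeer it targets.
        "metadata": {"name": peer, "namespace": mesh_ns},
        "spec": {"exportRules": [{
            "type": "NameSelector",
            "nameSelector": {"namespace": svc_ns, "name": svc_name},
        }]},
    }


def imported_service_set(peer, mesh_ns, svc_ns, svc_name):
    """Declare that one service exported by the peer mesh is imported locally."""
    return {
        "apiVersion": API_VERSION,
        "kind": "ImportedServiceSet",
        "metadata": {"name": peer, "namespace": mesh_ns},
        "spec": {"importRules": [{
            "type": "NameSelector",
            "importAsLocal": False,
            "nameSelector": {"namespace": svc_ns, "name": svc_name},
        }]},
    }


if __name__ == "__main__":
    # Site 2 exports its UPF N4 service to site 1; site 1 imports it.
    exp = exported_service_set("site1-mesh", "istio-system", "prod2-5gcore", "upf-n4")
    imp = imported_service_set("site2-mesh", "istio-system", "prod2-5gcore", "upf-n4")
    print(json.dumps(exp, indent=2))
    print(json.dumps(imp, indent=2))
```

Nothing is shared until both sides declare it: the export lives in the remote site's mesh, and the matching import lives in the central site's mesh.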

UPF has three primary network interfaces: N3, N4, and N6. Each has a special purpose:

- N3 is the interface where the GTP tunnel is established to carry user-plane traffic. In our testbed setup, this is a host device (net/tun) mapped using admin privileges to the UPF container.

- N4 is UPF's control plane interface where SMF supervises UPF on establishing an incoming user-plane session. This is the interface that the 5G Core central site-hosted SMF needs to access; it is exported from the remote site to the central site and included in the central site's SMF configuration.

- N6 is the breakout to the internet over a remote site internet uplink.

Service mesh federation inherits native Istio virtual service-routing capabilities such as traffic mirroring or load balancing based on various approaches (including weight and label or path selectors). However, this is abstracted from how 3GPP envisioned UPF selection, so Istio virtual service's HTTP routing capabilities would have no value here. Instead, we use the imported service's alias local name as the UPF association in SMF configuration (Figure 8) for the remote site's local breakout.
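
To make the alias idea concrete, the sketch below derives the local hostname of an imported service and places it in an SMF-style configuration fragment. The hostname pattern is our recollection of how OpenShift Service Mesh names imported services and should be verified before use. The configuration keys and all names are hypothetical and do not correspond to any specific SMF product.

```python
def imported_service_host(name, namespace, peer):
    """Build the local hostname of a service imported from a peer mesh.

    Assumption: imported services are exposed as
    <name>.<namespace>.svc.<peer>-imports.local.
    """
    return f"{name}.{namespace}.svc.{peer}-imports.local"


def smf_upf_association(host, tac):
    """Return a hypothetical SMF config fragment tying a UPF host to a TAC."""
    return {"upf_associations": [{"host": host, "tac": tac}]}


if __name__ == "__main__":
    host = imported_service_host("upf-n4", "prod2-5gcore", "site2-mesh")
    print(smf_upf_association(host, tac=200))
```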

2. L2/L3 integrated clusters with Submariner

Submariner enables direct networking between pods and services in different Kubernetes clusters, either on-premises or in the cloud.

This approach does not require exporting or importing services or maintaining extra configuration. It simply ties multiple clusters together and lets SMF reach the remote site UPF directly over service IP or pod IP addresses. Red Hat Advanced Cluster Management (RH-ACM) makes Submariner setup straightforward, with no manual configuration needed on the clusters.
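
One practical prerequisite for this kind of direct pod and service reachability is that the connected clusters use non-overlapping pod and service CIDRs (Submariner generally expects this unless its Globalnet feature is enabled). The sketch below is a simple pre-flight check under that assumption; the cluster names and address ranges are examples only.

```python
import ipaddress
from itertools import combinations


def find_overlaps(cluster_cidrs):
    """Return (cluster_a, cidr_a, cluster_b, cidr_b) for every cross-cluster overlap.

    cluster_cidrs maps a cluster name to a list of CIDR strings
    (pod and service networks).
    """
    flat = [
        (name, ipaddress.ip_network(cidr))
        for name, cidrs in cluster_cidrs.items()
        for cidr in cidrs
    ]
    return [
        (a_name, str(a_net), b_name, str(b_net))
        for (a_name, a_net), (b_name, b_net) in combinations(flat, 2)
        if a_name != b_name and a_net.overlaps(b_net)
    ]


if __name__ == "__main__":
    # Hypothetical addressing plan for two spoke clusters.
    plan = {
        "prod1-5gcore": ["10.128.0.0/14", "172.30.0.0/16"],
        "prod2-5gcore": ["10.132.0.0/14", "172.31.0.0/16"],
    }
    print(find_overlaps(plan) or "no overlapping CIDRs")
```

If two clusters were installed with the same default ranges, the check would report the conflict before SMF-to-UPF traffic is routed between sites.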

Enabling network accessibility across different 5G Core sites can open the door for central observability and management solutions for 5G CNFs, such as NetObserv Operator and Red Hat Advanced Cluster Security (RH-ACS), described in the next section.

3. L7 integrated and observed clusters

Various market-ready OSS, BSS, and Software-as-a-Service (SaaS) products are available for Kubernetes stacks and containerized applications. However, very few of them can address the needs of TME application stacks, especially the complex microservices architecture of 5G Core. These OSS and BSS solutions usually assume that network connectivity is already in place between where the solution is deployed and where its probes, agents, and collectors for metrics, logs, and traces are placed.

Security-centric observability

In this approach, the main focus is usually on network policies and how current network configurations behave at the pod, namespace, and cluster levels. Such solutions are typically based on installing add-on probes or agents on cluster nodes or on ambassador or sidecar containers.

We used Red Hat Advanced Cluster Security (RH-ACS) as a platform deployed on a hub cluster that connects to the remote 5G Core central and 5G-RAN remote sites and presents the collected data in a form that people can understand and act on.

RH-ACS can present network flows per cluster and per time period, with detailed flow breakdowns at the pod and namespace levels.

RH-ACS can evaluate collected findings against well-defined policies (either out of the box or custom-tailored), with drift analysis and suggested remediations.

Network fabric-centric observability

Red Hat OpenShift Container Platform (OCP) includes monitoring capabilities from the start. You can view monitoring dashboards and manage metrics and alerts. Network Observability was introduced in Preview mode with the OCP 4.10 release. It provides the ability to export, collect, enrich, and store NetFlow data as a new telemetry data source. A frontend also integrates with the OpenShift web console to visualize these flows and to sort, filter, and export this information.

In our testbed setup, we have created an observability backend (Loki) on the ACM Hub cluster. We plugged in spoke clusters (where 5G Core CNFs are distributed over two production sites: prod1-5gcore and prod2-5gcore) to the Loki backend on the ACM Hub cluster.

Collecting and storing network data opens up a wide range of possibilities that can aid in troubleshooting networking issues, determining bandwidth usage, planning capacity, validating policies, and identifying anomalies and security issues. This data helps answer questions such as:

- How much 5G user and control traffic flows between any two 5G CNF pods?

- What percentage of the overall traffic is from gNB for control plane (SCTP) vs. user plane (GTP)?

- What are the peak times when there is the highest amount of traffic for UPF for user plane?

- How many bytes per second are coming in and out of a single UPF pod?

- How much traffic is handled by a particular SMF instance?

- Is any traffic using insecure protocols, such as HTTP, FTP, or telnet?
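
Several of these questions reduce to simple aggregations over flow records. The sketch below shows the idea on a handful of hand-written records. The record fields (src_pod, dst_pod, proto, dst_port, bytes) are hypothetical and do not follow the NetObserv or Loki schema, and the insecure-protocol check is only a port-based heuristic. SCTP is IP protocol 132, and GTP-U uses UDP port 2152.

```python
from collections import defaultdict

SCTP_PROTO = 132   # IP protocol number for SCTP (gNB control plane)
UDP_PROTO = 17
TCP_PROTO = 6
GTPU_PORT = 2152   # UDP port for GTP-U (user plane)
INSECURE_TCP_PORTS = {21: "FTP", 23: "telnet", 80: "HTTP"}


def classify(flow):
    """Label a flow as control plane, user plane, or other."""
    if flow["proto"] == SCTP_PROTO:
        return "control (SCTP)"
    if flow["proto"] == UDP_PROTO and flow["dst_port"] == GTPU_PORT:
        return "user (GTP-U)"
    return "other"


def traffic_share(flows):
    """Return the percentage of total bytes per traffic class."""
    totals = defaultdict(int)
    for flow in flows:
        totals[classify(flow)] += flow["bytes"]
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {label: 100 * count / grand_total for label, count in totals.items()}


def bytes_per_pod(flows):
    """Return bytes received ("in") and sent ("out") for each pod."""
    totals = defaultdict(lambda: {"in": 0, "out": 0})
    for flow in flows:
        totals[flow["src_pod"]]["out"] += flow["bytes"]
        totals[flow["dst_pod"]]["in"] += flow["bytes"]
    return {pod: dict(counts) for pod, counts in totals.items()}


def insecure_flows(flows):
    """Return flows whose TCP destination port suggests a cleartext protocol."""
    return [
        flow for flow in flows
        if flow["proto"] == TCP_PROTO and flow["dst_port"] in INSECURE_TCP_PORTS
    ]


# Hypothetical sample records.
SAMPLE_FLOWS = [
    {"src_pod": "gnb", "dst_pod": "amf", "proto": 132, "dst_port": 38412, "bytes": 1000},
    {"src_pod": "gnb", "dst_pod": "upf", "proto": 17, "dst_port": 2152, "bytes": 8000},
    {"src_pod": "smf", "dst_pod": "nrf", "proto": 6, "dst_port": 80, "bytes": 1000},
]

if __name__ == "__main__":
    print(traffic_share(SAMPLE_FLOWS))
    print(bytes_per_pod(SAMPLE_FLOWS)["upf"])
    print(insecure_flows(SAMPLE_FLOWS))
```

Dividing the per-pod byte counts by the length of the collection window gives the bytes-per-second figures, and grouping the same records by time bucket gives the peak periods.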

Choosing a solution

In summary, 5G Core needs to be distributed to scale effectively where user demand occurs. The way to achieve that is to have multiple 5G Core deployments. Some may have all CNFs, and some only a few (such as UPF for local breakout, as we presented from our testbed).

When an application domain (a 5G Core solution) spans multiple network boundaries, the key challenge for observability lies in how you connect the observability solution to the leaf and spoke locations.

In this study, we explored options from Layer 2/Layer 3 (Submariner) to Layer 7 (federated mesh), each of which comes with pros and cons. Based on our experience from past and present 5G projects, we foresee that the observability solution that is the easiest to install, use, and scale to the company's 5G Core deployment will win the day. A federated mesh roadmap that offers a central Istio control plane may sound like an ideal solution. However, the 3GPP-driven 5G solution architectures implemented so far do not point to that outcome, because they rely on 5G-NRF for service discovery rather than on Istio.

Unless we find a way to marry Istio service discovery and 5G-NRF, it looks like the best possible solutions will be based on either:

- External agents or probes on host levels with privileged access to network namespaces (or underlying virtual switching).

- Pulling data natively from underlying network fabric constructs (such as the NetObserv operator discussed in the "Network fabric-centric observability" section above).

We favor option two for security and performance. It does not introduce additional attack surface, and it avoids the overhead of hosting and maintaining additional compute-intensive components (such as agents and probes).

Wrap up

We hope 3GPP 5G architecture working groups and open source service mesh communities will collaborate to address the complex needs of TME solution stacks for the future.

We would like to see NetObserv Operator officially reach the general availability stage with multicluster support (which we used in our testbed in this study) and actionable insights generated against defined network policy drifts, as RH-ACS offers. Features like network data pull security with authentication and authorization (per namespace) would also be helpful.

This article is adapted from the authors' Medium post Distributed scalable 5G with observability and is published with permission.

Fatih Nar

Fatih (aka The Cloudified Turk) has been involved over several years in Linux, OpenStack, and Kubernetes communities, influencing development and ecosystem cultivation, including for workloads specific to telecom, media, and …

Eric Lajoie

Eric Lajoie is a Telco Solution Architect for Red Hat, EMEA. In his current capacity, Eric is responsible for end-to-end solution design, be it OpenShift, OpenStack, networking, 5G core, or edge designs.

Steven Lee

Steven Lee is a Sr Principal Software Engineer who leads the network observability initiative at Red Hat.
