gRPC is a compelling technology for communication between a source and target over the network. It's fast, efficient, and because it runs on HTTP/2, gRPC supports both typical request/response interactions and long-running streaming communication.

This version of the Remote Procedure Call (RPC) architectural style has been around since 2015, when Google first released it. Over the years, it's gained a lot of traction. Many companies are putting gRPC to good use in a variety of interesting ways, including the four real-world examples presented here.

Using gRPC in the Kubernetes CRI

Kubernetes' architecture requires massive amounts of internal message-exchange activity. One key area where gRPC is used is supporting container management for each node in a Kubernetes cluster.

Kubernetes creates containers in a cluster of computers using a program called kubelet. A kubelet instance lives on every worker machine in a Kubernetes cluster. When Kubernetes wants to create a container, its main controller sends a message to the kubelet instance on an identified machine, telling it to create the particular container in an abstract organization unit called a pod.

At that point, things get interesting because Kubernetes supports a number of container runtimes. (A container runtime does the work of managing the state and activity of containers running on a given machine.) Kubelet never talks to the container runtime directly. Rather, it talks to a mechanism called a Container Runtime Interface (CRI), and the CRI talks to the container runtime. This conversation between kubelet and the CRI is conducted via gRPC. (See Figure 1 below.)
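To make the layering concrete, here is a minimal Python sketch of the hand-off between kubelet and a container runtime. It is not the real CRI, which is defined as gRPC services in protocol buffers. The method names below only mirror the CRI's `RunPodSandbox`, `CreateContainer`, and `StartContainer` calls; `FakeRuntime` and `launch_container` are hypothetical names, and plain method calls stand in for gRPC calls.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Protocol


class RuntimeService(Protocol):
    """The narrow interface kubelet depends on (stands in for the CRI)."""

    def run_pod_sandbox(self, pod_name: str) -> str: ...
    def create_container(self, sandbox_id: str, image: str) -> str: ...
    def start_container(self, container_id: str) -> None: ...


@dataclass
class FakeRuntime:
    """Hypothetical in-memory runtime; a real one would sit behind gRPC."""

    _ids: count = field(default_factory=lambda: count(1))
    sandboxes: dict = field(default_factory=dict)
    containers: dict = field(default_factory=dict)

    def run_pod_sandbox(self, pod_name: str) -> str:
        sandbox_id = f"sandbox-{next(self._ids)}"
        self.sandboxes[sandbox_id] = pod_name
        return sandbox_id

    def create_container(self, sandbox_id: str, image: str) -> str:
        if sandbox_id not in self.sandboxes:
            raise KeyError(f"unknown sandbox: {sandbox_id}")
        container_id = f"container-{next(self._ids)}"
        self.containers[container_id] = {"image": image, "state": "created"}
        return container_id

    def start_container(self, container_id: str) -> None:
        self.containers[container_id]["state"] = "running"


def launch_container(runtime: RuntimeService, pod_name: str, image: str) -> str:
    """What kubelet does, in outline: it only ever calls the interface."""
    sandbox_id = runtime.run_pod_sandbox(pod_name)
    container_id = runtime.create_container(sandbox_id, image)
    runtime.start_container(container_id)
    return container_id
```

Because `launch_container` depends only on the interface, any runtime that implements the same three calls can be swapped in without changing the caller, which is the point of putting the CRI between kubelet and the runtime.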

It's not unusual for a Kubernetes cluster to have hundreds, if not thousands, of nodes. Each of those nodes might support hundreds, if not thousands, of containers on a dynamic basis. (There are many cases in which containers will be created and destroyed based on an application's demand and failure state.) Containers come and go constantly, and the messages that drive this activity between kubelet and the CRI are sent and received via gRPC.

gRPC is fast and efficient. According to API management platform maker DreamFactory, gRPC connections can perform seven to 10 times faster than REST API connections. This type of performance makes gRPC well suited for conducting communication between kubelet and the CRI at web scale. In short, gRPC is an essential part of the internals of Kubernetes. Kubernetes couldn't run without it.

Unifying workflow activities written in several programming languages

Temporal is an open source platform that enables enterprise architects to maintain execution resiliency for business processes in the event of an infrastructure failure. For example, a supply-chain architecture needs to ensure that all purchase requests execute regardless of a momentary failure. Imagine that a company named CoolCo regularly buys widgets from another company called AcmeWidgets. Suppose AcmeWidgets' fulfillment service goes offline. Using Temporal technology, developers at CoolCo can make it so that any purchase request to AcmeWidgets happens no matter what. CoolCo always gets its widgets.

Temporal's benefit is that CoolCo developers can implement purchasing activities easily and in a variety of languages. Temporal does the heavy lifting of ensuring execution in the face of failures, such as a momentary network outage or an unexpected slowdown in disk access.

Where it gets interesting is that gRPC is the communication technology that unifies all the developer-defined workflow activities, written in a variety of programming languages, into a common data exchange architecture. (See Figure 2 below.)

Maxim Fateev, the CEO and founder of Temporal, explained the details of the technology during an interview at KubeCon 2021. In Fateev's example, one developer might write some activity code in PHP while another one writes an activity in Go. Each activity sends its mission-critical data to a gRPC client that forwards it to a gRPC server. The gRPC server then passes all that data from those language-specific tasks on to the backend Temporal service. The backend Temporal service carries out the work at hand.

What started as data generated in PHP or Go gets transformed into a common data format, which happens to be protocol buffers, the default serialization format for gRPC. The gRPC server does the work of making the data consumable by the backend Temporal services. Using gRPC at web scale to support data communication generated in various languages is a compelling use case for gRPC.
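To see why a shared binary format makes the sender's language irrelevant, consider this hand-rolled sketch of the protocol buffers wire encoding for a tiny message. It is for illustration only: real code would use classes generated by `protoc` from a `.proto` file, and the `encode_purchase` message with its two field numbers is a hypothetical example, not part of Temporal's API.

```python
def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf base-128 varint."""
    if value < 0:
        raise ValueError("this sketch handles non-negative integers only")
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low7)
            return bytes(out)


def encode_int_field(field_number: int, value: int) -> bytes:
    """Wire type 0 (varint): tag, then value."""
    return encode_varint((field_number << 3) | 0) + encode_varint(value)


def encode_string_field(field_number: int, text: str) -> bytes:
    """Wire type 2 (length-delimited): tag, byte length, then UTF-8 bytes."""
    payload = text.encode("utf-8")
    tag = encode_varint((field_number << 3) | 2)
    return tag + encode_varint(len(payload)) + payload


def encode_purchase(item: str, quantity: int) -> bytes:
    """Hypothetical message: field 1 = item (string), field 2 = quantity (int)."""
    return encode_string_field(1, item) + encode_int_field(2, quantity)


print(encode_purchase("widget", 150).hex())  # prints 0a06776964676574109601
```

A PHP or Go worker that follows the same encoding rules produces the same 11 bytes for the same message, so the receiving side never needs to know which language generated the data.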

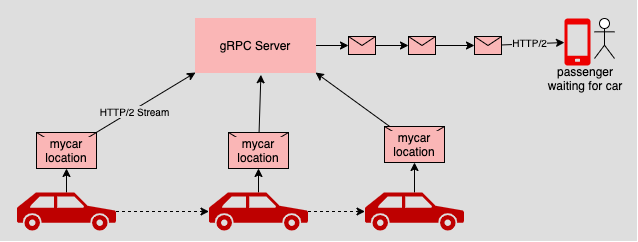

Implementing gRPC streaming to report vehicle location

One of the interesting features of gRPC is that it supports continuous bidirectional streaming under HTTP/2. This makes gRPC well suited for ride-share applications.

One problem that ride-sharing poses is how to inform riders about the location of the car that's coming their way. In the early days of ride-sharing, some cellphone apps called back to a server periodically—for example, every second—to get the car's location. This meant creating a network connection to the server, making the request for the location of the car, getting a response, and then shutting down the network connection. This stateless form of network communication is fundamental to HTTP/1.1. It does the job, but the inefficiency is apparent.

Lyft decided to take a different approach, which Michael Rebello, an iOS developer at Lyft, described in his 2018 presentation to the Swift User Group. Instead of using polling to get the location of a vehicle, Lyft uses gRPC to transmit the location of a vehicle in a continuous stream of gRPC messages. (See Figure 3 below.)

It works because the mobile application connects to a gRPC server and then leaves the connection open. The gRPC server then pushes messages continuously through the open connection on to the mobile application. Each message describes the location of the vehicle as it approaches the customer requesting a ride. The mobile application decodes the gRPC messages serialized in protocol buffers and then renders the information on the mobile app's screen.
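The difference between the two approaches can be sketched without any networking at all. In the Python example below, `FakeLocationServer` is a hypothetical in-memory stand-in that simply counts how many connections are opened to it; it is not Lyft's code and does not use the gRPC library. The generator plays the role of a server-streaming call, whose responses the client consumes by iterating.

```python
from typing import Iterator, List, Tuple

Location = Tuple[float, float]


class FakeLocationServer:
    """Hypothetical in-memory server that counts connections opened to it."""

    def __init__(self, route: List[Location]) -> None:
        self.route = route
        self.connections_opened = 0

    def get_location(self, step: int) -> Location:
        """Polling style: every call pays for a fresh connection."""
        self.connections_opened += 1
        return self.route[step]

    def stream_locations(self) -> Iterator[Location]:
        """Streaming style: one connection, then the server pushes updates."""
        self.connections_opened += 1
        for location in self.route:
            yield location


def track_by_polling(server: FakeLocationServer) -> List[Location]:
    """Ask for each position separately, as early ride-share apps did."""
    return [server.get_location(step) for step in range(len(server.route))]


def track_by_streaming(server: FakeLocationServer) -> List[Location]:
    """Open one stream and read positions as they arrive."""
    return list(server.stream_locations())
```

Both functions return the same list of positions, but for a route with N updates the polling version opens N connections while the streaming version opens one.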

Using gRPC streaming is a clever approach to reporting vehicle location, but it puts the onus on the mobile application to support HTTP/2 and to decode messages serialized in the protocol buffers format. Today, there is wide support for HTTP/2, and the tools for encoding and decoding protocol buffers messages have matured. At the time, however, Lyft's implementation of gRPC was a transformational approach to supporting real-time communication among mobile devices.

Using gRPC in a front-end web client through a proxy

Using gRPC in the front end of a browser-based web application is difficult. While all the popular browsers support the HTTP/2 protocol upon which gRPC runs, full-fledged support for gRPC itself is lacking. For example, gRPC conducts communication between a client and a server using serialized binary data, but browser-based web clients typically work with text-based data such as JSON. Also, implementing streaming, which is foundational to gRPC, can be tricky from within the browser. These problems are not trivial.

Nonetheless, harnessing the benefits of gRPC from within a browser is an attractive idea for many architects who are designing high-performance streaming applications that need to work reliably. However, it's not going to happen out of the box. Some sort of alternative is required. Fortunately, one exists. The solution involves using a web proxy to mediate between the browser and the gRPC server.

Software company Torq takes this approach. According to Joshua Thorngren, vice president at Torq, the company offers a no-code approach to web-scale threat prevention that is geared toward security professionals. Configuration and automation activities are conducted via a web browser in conjunction with gRPC on the server side.

To make its web pages interoperable with the gRPC backend, Torq uses the open source grpc-web framework. Under grpc-web, the browser connects to a proxy on the internet. The proxy, in turn, communicates with the gRPC server. (See Figure 4 below.) Envoy is the preferred proxy used by many implementations of grpc-web.

The way it works is that an infrastructure engineer configures the Envoy proxy to listen for HTTP/1.1 traffic coming in from a web browser on a specific port, for example, 8080. The proxy then translates that traffic into a gRPC-compatible form and forwards it to the gRPC server listening on another port, such as 9090. The gRPC server's responses are routed back to the browser through the Envoy proxy.
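One small piece of that translation can be sketched in Python. A gRPC message travels with a 5-byte prefix (a 1-byte compression flag and a 4-byte big-endian length), and grpc-web's text mode (`application/grpc-web-text`) carries those bytes as base64 so the browser can treat the body as text. The function names below are hypothetical, and the sketch covers only the message body; a real proxy such as Envoy also handles headers, trailers, and streaming.

```python
import base64
import struct


def frame_message(payload: bytes) -> bytes:
    """Wrap a serialized message in gRPC's 5-byte length prefix."""
    # 1-byte compression flag (0 = uncompressed) + 4-byte big-endian length.
    return struct.pack(">BI", 0, len(payload)) + payload


def unframe_message(frame: bytes) -> bytes:
    """Strip the 5-byte prefix and return the serialized message."""
    compressed, length = struct.unpack(">BI", frame[:5])
    if compressed:
        raise ValueError("this sketch does not handle compressed messages")
    payload = frame[5:5 + length]
    if len(payload) != length:
        raise ValueError("frame is shorter than its declared length")
    return payload


def browser_body_to_grpc_frame(text_body: str) -> bytes:
    """Hypothetical proxy step: base64 text from the browser -> binary frame."""
    return base64.b64decode(text_body)


def grpc_frame_to_browser_body(frame: bytes) -> str:
    """Hypothetical proxy step: binary frame from the server -> base64 text."""
    return base64.b64encode(frame).decode("ascii")
```

A round trip through `grpc_frame_to_browser_body` and `browser_body_to_grpc_frame` returns the original frame unchanged, which is why neither the browser code nor the gRPC server has to know that the other side uses a different representation.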

The important thing to understand about the process is that the browser doesn't have to interact with the gRPC server directly. The proxy handles that accommodation: the browser talks to the proxy, the proxy talks to the gRPC server, and responses travel back the same way. Using a proxy to get a browser to interact with a gRPC server is a clever solution to a vexing problem for front-end developers.

Putting it all together

gRPC is a compelling technology. It's fast, efficient, and it continues to grow in popularity. But, as with any technology, you need to be judicious when implementing it in an enterprise architecture. gRPC has a lot going for it, but it's complex.

Fortunately, gRPC has a history of implementations that can serve as an instructional roadmap for architects who intend to use the framework. There's a lot to be said for creating novel uses of gRPC by standing on the shoulders of others who've previously worked with the technology.

Hopefully, these use cases provide insights into approaches for using gRPC that you can adapt to meet your architectural needs.

About the author

Bob Reselman is a nationally known software developer, system architect, industry analyst, and technical writer/journalist. Over a career that spans 30 years, Bob has worked for companies such as Gateway, Cap Gemini, The Los Angeles Weekly, Edmunds.com and the Academy of Recording Arts and Sciences, to name a few. He has held roles with significant responsibility, including but not limited to, Platform Architect (Consumer) at Gateway, Principal Consultant with Cap Gemini and CTO at the international trade finance company, ItFex.
