With the rise of portable devices, IoT, and other distributed small form factor devices, edge computing has become a key domain for many enterprises. Edge computing brings application infrastructure from centralized data centers out to the network edge, as close to the consumer as possible, to provide low latency and near real-time processing. This use case stretches beyond telecommunications into healthcare, energy, retail, remote offices, and more.

Red Hat OpenStack Platform 13 introduced an edge solution called distributed compute nodes (DCN). This allows customers to deploy computing resources (compute nodes) close to consumer devices, while centralizing the control plane in a more traditional datacenter, such as a national or regional site.

With the release of Red Hat OpenStack Services on OpenShift, there is a major change in design and architecture: OpenStack control services now run natively on top of Red Hat OpenShift. This article explores how DCN, and particularly storage, have been redesigned to fit into this new paradigm.

Introducing distributed compute nodes

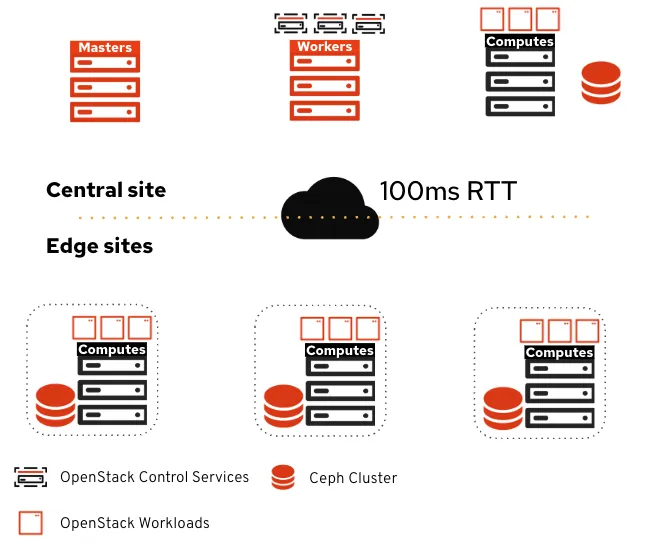

DCN is one of the ways Red Hat OpenStack Services on OpenShift addresses edge computing use cases. The OpenStack control plane runs on top of OpenShift hosted in a regular datacenter, while compute nodes are distributed across multiple remote sites.

At the top is the central site, which hosts:

- OpenShift cluster used to run OpenStack control plane services

- Compute nodes (optional)

- Ceph cluster

At the bottom are remote edge sites. These sites include only compute nodes and an optional Ceph cluster. Each site is independent of the others and isolated in its own availability zone, with a dedicated Ceph cluster if its workloads require persistent storage.

What changes with Red Hat OpenStack Services on OpenShift?

While the fundamental approach remains the same, running OpenStack control services on top of OpenShift changes the way you deploy and schedule services, especially the storage ones dedicated to the edge sites.

This article focuses on the changes between the legacy director-based DCN deployment and the new OpenShift-driven one. If you're unfamiliar with DCN principles, you can read Introduction to OpenStack's Distributed Compute Nodes. The core principles remain the same; only the deployment method and service placement change.

There are three main components that manage storage access and consumption at the edge:

- Ceph: Provides storage at the edge

- Cinder: Manages persistent storage through volumes

- Glance: Serves images

Storing data at the edge with Red Hat Ceph Storage

The Ceph approach doesn’t change from the previous DCN versions. There's still one dedicated Ceph cluster for each site, including the central site. Ceph clusters at the edge are optional, so some sites can include a Ceph cluster while others are compute-only. Each Ceph cluster backs Nova ephemeral volumes, Cinder volumes, and Glance images for the site it's bound to.

The compute nodes in each site are configured to connect to their local Ceph cluster. The installer takes care of configuring Nova and the ceph.conf file, and of adding the appropriate Cephx keys.

We still support external and hyperconverged deployments. However, unlike director, the OpenStack Services on OpenShift installer doesn't feature an option to deploy Ceph clusters. Ceph clusters must be deployed separately from OpenStack Services on OpenShift.

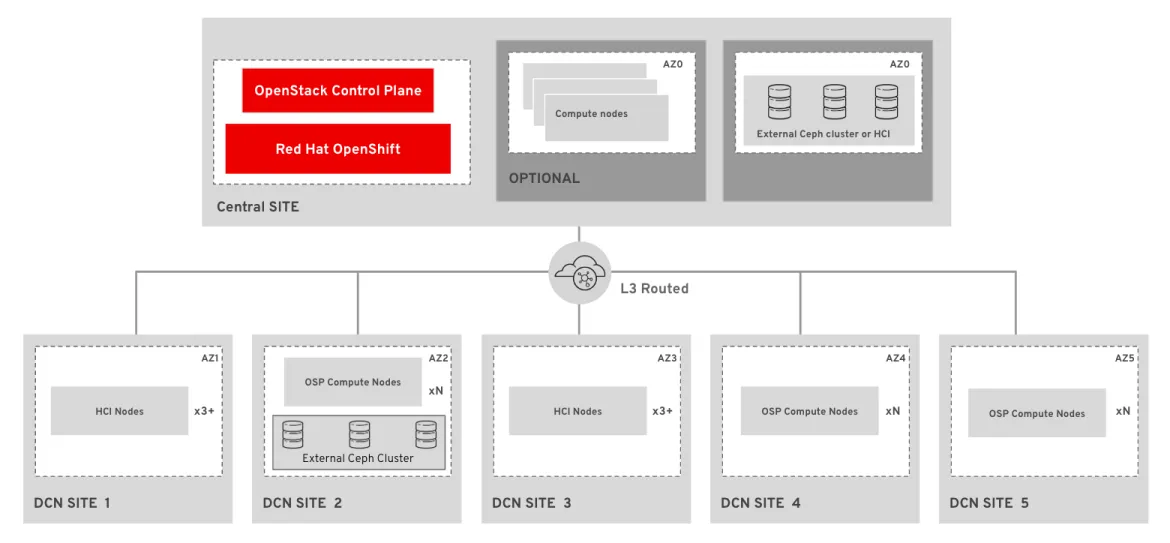

It is possible to define a custom storage topology for each edge site. For example, in the diagram above, sites 1 and 3 use a hyperconverged (HCI) Ceph cluster, site 2 includes an external Ceph cluster, and sites 4 and 5 are compute-only.

Persistent volumes at the edge with Cinder

One of the main reasons for deploying storage backends at the edge is to offer persistent volumes (either boot volumes or data volumes) to the workloads. This also includes features such as snapshots, data encryption, or backups.

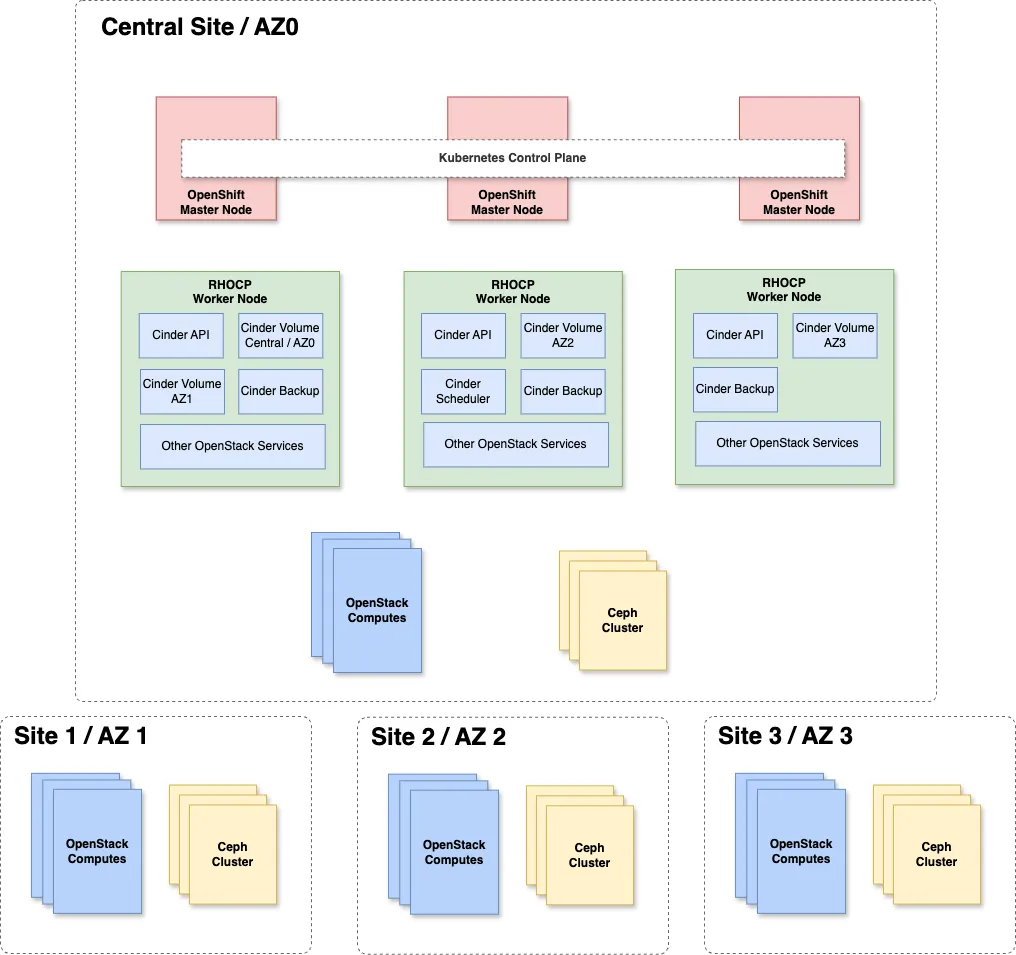

The fundamental approach does not change. You're still dedicating a Cinder volume service and an availability zone for each edge site. However, the way the services are deployed is different.

Before OpenStack Services on OpenShift, director limitations meant that the Cinder volume service ran as Active/Active on the edge compute nodes. Running the service as Active/Active also required adding a distributed lock manager (etcd) to ensure multi-access consistency. With OpenStack Services on OpenShift, the Cinder volume services run in the central site, and the edge compute nodes no longer run any storage control services. There is no need to run Cinder volume as Active/Active because OpenShift manages high availability. It's worth noting that with the RADOS block device (RBD) driver, the Cinder volume service is not part of the data path for operations such as creating volumes from images.

This diagram shows a high-level DCN architecture with one central site plus three edge sites, as well as the placement of the Cinder services. The three green boxes (in the second row from the top) represent the OpenShift workers on top of which the OpenStack control services are running.

The Cinder services are deployed as follows:

- 3 Cinder API service replicas

- 1 Cinder scheduler

- 3 Cinder backup service replicas

- 1 Cinder volume service dedicated to the central site

- 1 Cinder volume service dedicated to each edge site

With this new approach, compute nodes don't host Cinder volume services or etcd any more, which reduces resource usage and deployment complexity at the edge.
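One way to check this layout from the command line is to count the Cinder volume services reported for each availability zone. The helper below is a minimal sketch rather than part of the product: the function name is hypothetical, and it assumes the `Binary` and `Zone` columns printed by `openstack volume service list`.

```shell
# Count cinder-volume services per availability zone.
# Input (stdin): "Binary Zone" pairs, one per line, for example from:
#   openstack volume service list -f value -c Binary -c Zone
# Output: one "<zone> <count>" line per zone, sorted by zone name.
count_volume_services_per_az() {
    awk '$1 == "cinder-volume" { n[$2]++ }
         END { for (z in n) print z, n[z] }' | sort
}
```

With the placement described above, piping the service list into `count_volume_services_per_az` should report one Cinder volume service for the central site's zone and one for each edge site's zone.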

User experience remains the same. Each site is assigned to a Cinder Availability Zone (AZ), and a user specifies the AZ when creating a volume. This allows the user to choose which site (that is, which Ceph cluster) the volume is created on. The same applies when creating a virtual machine (VM). The user specifies the AZ upon which a VM boots. By default, cross-AZ volume attachments are disabled, so a VM running on site 1 cannot attach a volume on site 2.
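Because cross-AZ attachments are disabled by default, it can be useful to compare the availability zones of a volume and a VM before trying to attach one to the other. The following is a hedged sketch: the function name is hypothetical, and the `OS-EXT-AZ:availability_zone` column name is an assumption that may differ depending on your client version.

```shell
# Succeed only when the volume ($1) and the server ($2) report the same AZ.
# Assumes the openstack client is installed and credentials are loaded.
same_az() {
    vol_az=$(openstack volume show -f value -c availability_zone "$1")
    srv_az=$(openstack server show -f value -c OS-EXT-AZ:availability_zone "$2")
    # An empty volume AZ means the lookup failed, so treat it as a mismatch.
    [ -n "$vol_az" ] && [ "$vol_az" = "$srv_az" ]
}
```

For example, `same_az my-volume my-vm && openstack server add volume my-vm my-volume` attaches the volume only when both resources live in the same site (the resource names here are placeholders).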

Serving images at the edge with Glance

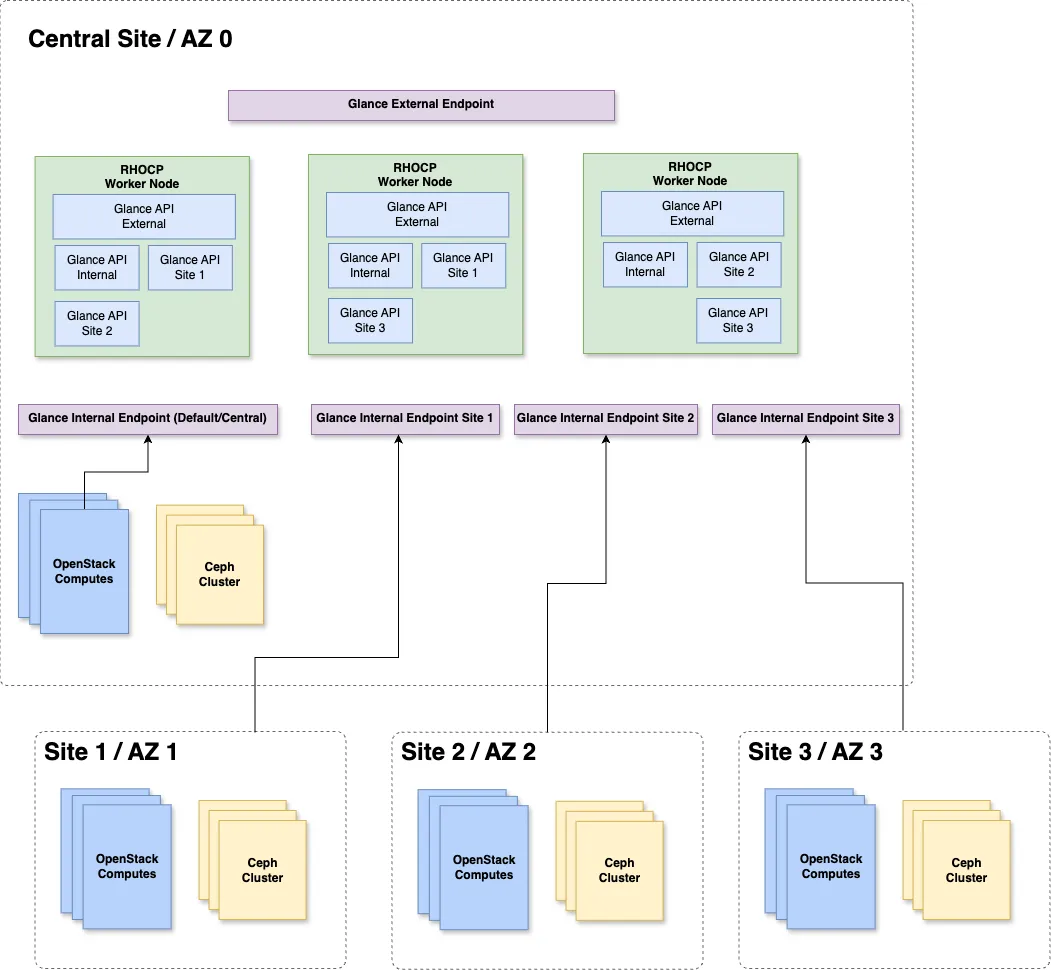

The core OpenStack design does not change from previous versions. Glance multi-store is still used to distribute images across sites. Each site containing a Ceph cluster has an images RBD pool bound to a dedicated Glance store. The central site also includes a dedicated store.

As with Cinder, what changes in OpenStack Services on OpenShift is the way you deploy Glance. In previous versions, the Glance API was deployed on three edge compute nodes (the same ones that ran Cinder Active/Active). An HAProxy instance was also deployed on the edge compute nodes to load balance requests. These Glance API services were dedicated to the site, and used a local Ceph cluster for image storage. Hosting images at the edge site allowed features such as fast booting and copy-on-write clones for Nova ephemeral and Cinder volumes.

With Glance API, we're taking the same approach as Cinder volume by moving the Glance API services from the edge computes to the control plane in the central site.

The general topology remains the same. We're dedicating Glance API services to each edge site, and the images are still stored in the images pool of the local-to-site Ceph cluster. The default number of Glance API services for each site is now set to 2, and it's up to you to increase this value. Thanks to OpenShift, the number of replicas can be adjusted at any time.

This diagram shows a high-level DCN architecture with one central site plus three edge sites, as well as the placement of the Glance services. The three green boxes (in the top row) represent OpenShift workers on top of which the OpenStack control services are running.

The Glance services are deployed as follows:

- 3 Glance External API to serve external requests

- 3 Glance Internal API to serve central and default (no store specified) requests

- 2 Glance API for each edge site to serve store-specific requests

Each Glance API set exposes a unique endpoint (an OpenShift service) used to access underlying pods. In the diagram above, you can see a dedicated endpoint for each site represented by a purple box.

The Cinder volume service for each site is configured to use its corresponding Glance endpoint, and the same goes for Nova compute. This allows the Nova and Cinder services of each site to reach their dedicated Glance API service.

With this design, each site has its own dedicated Glance API service backed by a local-to-site Ceph pool. This also brings more flexibility to you as an administrator, because you can scale the number of API services for each site. When you add a site, you must also add a new set of Glance API services.

Creating workloads with distributed compute nodes

Now that we have reviewed the internal design of the Distributed Compute Nodes architecture, let's see how users interact with it by looking at how to start workloads at the edge.

Suppose a user wants to boot a VM from a volume on site 1. First, upload a Glance image for the VM to use when booting:

$ glance image-create-via-import \
    --disk-format qcow2 --container-format bare --name cirros \
    --uri http://download.cirros-cloud.net/0.6.3/cirros-0.6.3-x86_64-disk.img \
    --import-method web-download \
    --stores central,site1

This instructs Glance to create a Cirros image in the central and site 1 stores. You can use the --all-stores true option to upload it to all stores. Glance converts the image to the raw format before copying it to Ceph.
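To confirm where an image ended up, you can inspect its list of stores. The helpers below are a minimal sketch with hypothetical function names. They assume that `glance image-show` prints a table containing a `stores` row with a comma-separated value such as `central,site1`.

```shell
# Extract the value of the "stores" row from `glance image-show` table
# output read on stdin. The key is matched exactly so that other
# properties whose names merely contain "stores" are ignored.
parse_stores() {
    awk -F'|' '{ key = $2; val = $3; gsub(/ /, "", key); gsub(/ /, "", val) }
               key == "stores" { print val }'
}

# Succeed when store "$2" appears in the comma-separated store list "$1".
image_in_store() {
    case ",$1," in
        *",$2,"*) return 0 ;;
        *)        return 1 ;;
    esac
}
```

For example, `stores=$(glance image-show "$IMG_ID" | parse_stores)` followed by `image_in_store "$stores" site1` succeeds once the image has been imported into the site 1 store, where `IMG_ID` holds the image ID.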

It's also possible to copy an existing image to another site. For example, to copy the previously uploaded Cirros image to site 2:

$ IMG_ID=$(openstack image show cirros -c id -f value)
$ glance image-import $IMG_ID \
    --stores site2 \
    --import-method copy-image

Now that the image is registered in Glance, create a volume from this image on site 1:

$ IMG_ID=$(openstack image show cirros -c id -f value)
$ openstack volume create --size 10 --availability-zone site1 cirros-vol-site1 --image $IMG_ID

The --availability-zone site1 option instructs Cinder to create the volume in the site1 availability zone, which is served by a dedicated Cinder volume configured to use the Ceph cluster hosted in site 1. Cinder finds the image from its dedicated Glance API endpoint.
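Volume creation is asynchronous, so in a script you may want to wait until the volume reports the `available` status before booting from it. Here is a hedged sketch of such a wait loop. The function name and the default retry count and delay are assumptions made for illustration.

```shell
# Poll a volume until it reaches "available".
# Fails if the volume enters an error state or the retries run out.
#   $1: volume name or ID
#   $2: number of polls (assumed default: 30)
#   $3: seconds between polls (assumed default: 5)
wait_for_volume() {
    vol=$1
    tries=${2:-30}
    delay=${3:-5}
    while [ "$tries" -gt 0 ]; do
        status=$(openstack volume show -f value -c status "$vol")
        case "$status" in
            available) return 0 ;;
            error*)    echo "volume $vol is in status $status" >&2; return 1 ;;
        esac
        tries=$((tries - 1))
        sleep "$delay"
    done
    echo "timed out waiting for volume $vol" >&2
    return 1
}
```

For example, `wait_for_volume cirros-vol-site1` returns once the volume created above can be used.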

The volume is now ready to use! Tell Nova to boot a VM from that volume on site 1:

$ VOL_ID=$(openstack volume show \
    -f value -c id cirros-vol-site1)
$ openstack server create --flavor tiny \
    --key-name mykey --network site1-network-0 \
    --security-group basic \
    --availability-zone site1 \
    --volume $VOL_ID cirros-vm-site1

The VM is now booted on site 1 from the previously created volume. The --availability-zone site1 option tells Nova to boot the VM on site 1.

More advanced actions are possible, such as copying snapshots, migrating volumes offline, backing up edge volumes, and so on.

Build a better edge

Red Hat has moved to a more modern OpenShift-based deployment model with OpenStack Services on OpenShift, and edge use cases remain essential. This new deployment model offers more flexibility in the way you can schedule control services.

OpenStack edge storage integration takes advantage of these new possibilities. It moves site-specific OpenStack storage services from the edge to the central site. It removes the need for edge compute nodes to host control services, and gives you more control over service placement and scale.

It doesn't stop here. We're looking at improving this solution in the future by adding third-party backends and Manila support at the edge!

Interested in the implementation details? Have a look at our distributed compute nodes deployment guide.

About the author

Gregory Charot is a Senior Principal Technical Product Manager at Red Hat covering OpenStack Storage, Ceph integration as well as OpenShift core storage and cloud providers integrations. His primary mission is to define product strategy and design features based on customers and market demands, as well as driving the overall productization to deliver production-ready solutions to the market. Open source and Linux-passionate since 1998, Charot worked as a production engineer and system architect for eight years before joining Red Hat in 2014, first as an Architect, then as a Field Product Manager prior to his current role as a Technical Product Manager for the Hybrid Platforms Business Unit.
