In Kubernetes (K8s), a pod is recreated by the ReplicaSet or Deployment controller, depending on how the pod was created. The controller continuously monitors the desired state and the actual state of the pod. When the actual state deviates from the desired state, for example when a pod is deleted, the controller detects the change and creates a new pod based on the configuration specified in the deployment.
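The reconciliation idea described above can be pictured with a small Python sketch. This is a simplified model, not the actual controller code: the `reconcile` function and the `app-N` pod names are hypothetical and only illustrate how desired and actual state are compared.

```python
from itertools import count

def reconcile(desired_replicas, running_pods, name_source):
    """Return the pod list after one reconciliation pass (simplified model)."""
    pods = list(running_pods)
    # Too few pods: create replacements until the desired count is reached.
    while len(pods) < desired_replicas:
        pods.append(f"app-{next(name_source)}")
    # Too many pods: remove the surplus.
    while len(pods) > desired_replicas:
        pods.pop()
    return pods

names = count(1)
pods = reconcile(3, [], names)     # initial rollout: app-1, app-2, app-3
pods.remove("app-2")               # simulate a deleted pod
pods = reconcile(3, pods, names)   # the controller restores the desired state
print(pods)                        # prints ['app-1', 'app-3', 'app-4']
```

Note that the replacement is a new pod with a new name; nothing of the deleted pod is carried over, which is why state needs special care.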

But what happens when our pod hosts a stateful application?

In general, stateful systems tend to be more complex and harder to scale, since they need to keep track of and manage state across multiple interactions.

K8s cannot know for sure whether the original pod is still running, so if a new pod is started we can end up with a "split-brain" condition that can lead to data corruption, which is the worst possible outcome for anyone responsible for the application. Take, for example, a situation where the cluster node that hosts our application stops responding for any reason. To prevent the "split-brain" case, K8s waits until the original node returns. As long as it does not return, the pod is not recreated and our application is not available! Alternatively, we can delete the pod manually and deal with the consequences ourselves.

This is not acceptable: our application may be critical and must tolerate the failure of a single node or a single network connection. Let's see how to get it running elsewhere.

Here is an interesting case that I experienced during a Telco project I led. In this blog, we will walk through a demo that simulates a 3-node bare-metal cluster architecture suitable for an edge cluster solution. This cluster is unique in that each node has both the control plane and worker roles, and is also a member of OpenShift Data Foundation (ODF), which serves as the storage solution. The Telco workloads can be VMs inside pods (stateful applications) run by OpenShift Virtualization, and can provide network functions such as a router, firewall, or load balancer.

In this demo, the VM will be a Linux server.

The following diagram shows the environment setup.

NOTE: An OpenShift cluster with the ODF and OpenShift Virtualization operators installed is a prerequisite.

Install NodeHealthCheck Operator

Log in to your cluster’s web console as a cluster administrator → OperatorHub → search Node Health Check → install

The Node Health Check (NHC) operator identifies unhealthy nodes and uses the Self Node Remediation (SNR) operator to remediate them.

The SNR operator deletes the pods of the unhealthy node from the API, which triggers the scheduler to reschedule the workload. The fencing part (i.e., making sure that the stateful workload is no longer running on the node) is done by automatically rebooting the unhealthy node. This remediation strategy minimizes downtime for stateful applications and ReadWriteOnce (RWO) volumes.
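The order of these steps matters: fence first, reschedule second. The following Python sketch models that ordering under simplifying assumptions. It is not SNR code: the `Node` class, the `remediate` function, the node and pod names, and the "least loaded node" placement rule are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    name: str
    fenced: bool = False                      # True once the node is known to be stopped
    pods: list = field(default_factory=list)

def remediate(unhealthy, healthy_nodes):
    """Fence the unhealthy node, then move its pods to a healthy node."""
    # Step 1: fencing. A reboot is modelled here as setting a flag.
    unhealthy.fenced = True
    # Step 2: only after fencing, delete the pods from the (simulated) API.
    evicted, unhealthy.pods = unhealthy.pods, []
    # Step 3: the (simulated) scheduler places them on the least loaded node.
    target = min(healthy_nodes, key=lambda node: len(node.pods))
    target.pods.extend(evicted)
    return target

failed = Node("node-a", pods=["vm-pod"])
others = [Node("node-b", pods=["web"]), Node("node-c")]
new_host = remediate(failed, others)
print(new_host.name, new_host.pods)   # prints node-c ['vm-pod']
```

Because the pod is removed only after the node is fenced, there is never a moment in this model where two copies of the workload could write to the same RWO volume.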

The SNR operator is installed automatically once the NHC operator is installed.

When the NHC operator detects an unhealthy node, it creates a remediation custom resource (CR) that triggers the remediation provider. For example, the node health check triggers the SNR operator to remediate the unhealthy node.

Check the Installed operators

oc get pod -n openshift-operators

NOTE: The latest version of the SNR operator, adapted to OpenShift 4.12, also supports nodes with the control-plane role.

NodeHealthCheck Configuration

Navigate to Operators → Installed Operators → NodeHealthCheck → NodeHealthChecks, and configure the NHC operator.

An example of NHC configuration:

apiVersion: remediation.medik8s.io/v1alpha1
kind: NodeHealthCheck
metadata:
  name: nhc-master-test
spec:
  minHealthy: 51%
  remediationTemplate:
    apiVersion: self-node-remediation.medik8s.io/v1alpha1
    kind: SelfNodeRemediationTemplate
    name: self-node-remediation-resource-deletion-template
    namespace: openshift-operators
  selector:
    matchExpressions:
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
  unhealthyConditions:
    - duration: 150s
      status: 'False'
      type: Ready
    - duration: 150s
      status: Unknown
      type: Ready
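To make the `minHealthy` and `unhealthyConditions` fields more concrete, here is a minimal Python sketch of how such a check could be evaluated for the 3-node cluster in this demo. It is an illustration, not the operator's implementation: the function names and node names are hypothetical, and rounding the percentage up to a whole node count is an assumption.

```python
import math

# Mirrors the unhealthyConditions in the example configuration (durations in seconds).
UNHEALTHY_CONDITIONS = [
    {"type": "Ready", "status": "False", "duration": 150},
    {"type": "Ready", "status": "Unknown", "duration": 150},
]

def is_unhealthy(node_conditions, rules=UNHEALTHY_CONDITIONS):
    """node_conditions maps a condition type to (status, seconds in that status)."""
    for rule in rules:
        status, seconds = node_conditions.get(rule["type"], (None, 0))
        if status == rule["status"] and seconds >= rule["duration"]:
            return True
    return False

def min_healthy_nodes(percent, total_nodes):
    # Assumption: the percentage is rounded up to a whole number of nodes.
    return math.ceil(total_nodes * percent / 100)

def may_remediate(nodes, percent=51):
    """Remediation is allowed only while enough nodes remain healthy."""
    healthy = sum(1 for cond in nodes.values() if not is_unhealthy(cond))
    return healthy >= min_healthy_nodes(percent, len(nodes))

cluster = {
    "node-0": {"Ready": ("Unknown", 200)},   # unreachable for more than 150s
    "node-1": {"Ready": ("True", 9000)},
    "node-2": {"Ready": ("True", 9000)},
}
print(min_healthy_nodes(51, 3))   # prints 2
print(may_remediate(cluster))     # prints True
```

With 51% on three nodes, at least two nodes must be healthy. One failed node is therefore remediated, while a second simultaneous failure would block remediation in this model, which guards against acting on a cluster-wide problem.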

NOTE: NHC version 2.5.23 added a UI option for creating this configuration.

Create VM with OpenShift Virtualization

Once the OpenShift Virtualization operator is installed, the Virtualization option appears and virtual machines can be created.

In this case, I chose the RHEL 8 operating system from the template catalog.

After a few minutes, the machine will be ready with the status “Running”.

VM access by the console

Create a test file in our VM for the next steps

Check which node hosts the VM

oc get vmi -A

NOTE: We can see the node master0-0 hosts the VM

Self Node Remediation (SNR) Operator

We can see that the operator currently has no ‘Self Node Remediation’ instances.

Disaster in Master0-0! The Node That Hosts the VM

Let us shut down the node (master0-0) that hosts the VM to simulate a disaster. NOTE: How the node is shut down depends on the infrastructure, for example through a virtual interface or on the bare-metal (BM) host.

oc get node

Node Health Check (NHC) detects an unhealthy node

NHC automatically detects the unhealthy node (NotReady status) and changes its status to ‘NotReady,SchedulingDisabled’.

NHC creates a remediation CR that triggers the remediation provider.

Triggers the SNR operator

The SNR operator remediates the unhealthy node. A Self Node Remediation resource is created automatically, named after the unhealthy node (master-0-0).

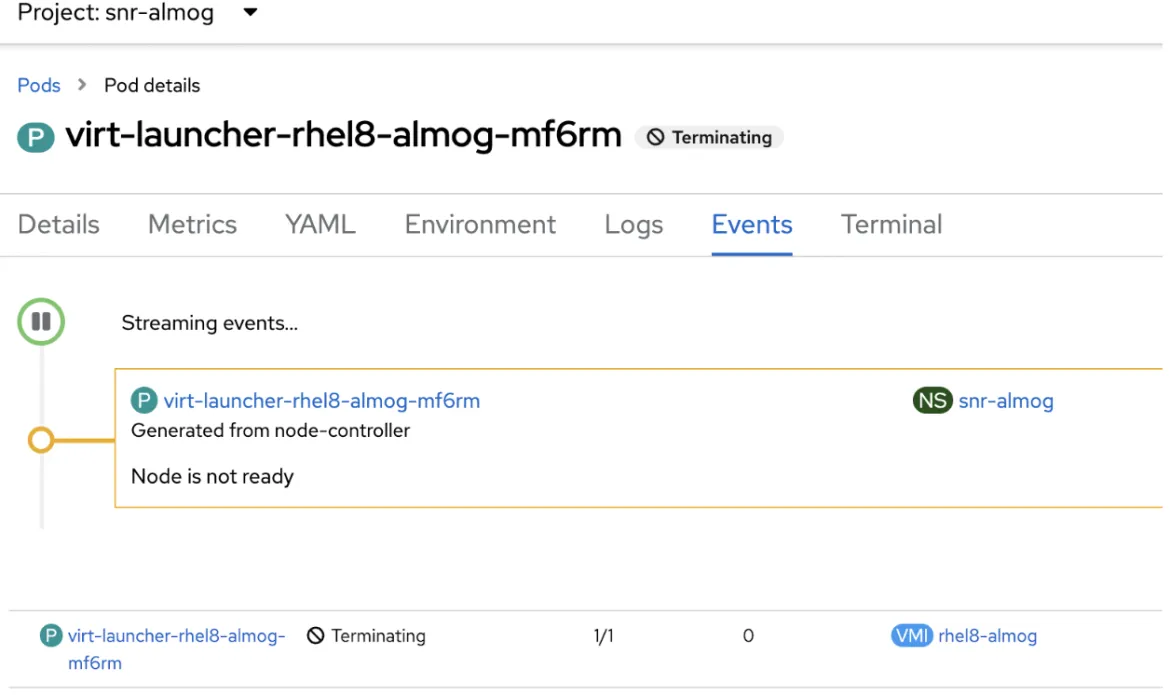
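The step above, where a remediation CR named after the unhealthy node is created from the configured template, can be sketched in Python as follows. This is an illustration only: `build_remediation_cr` is a hypothetical helper, and deriving the CR kind by dropping the "Template" suffix and reusing the template's namespace are assumptions. The template values are taken from the example NHC configuration in this post.

```python
def build_remediation_cr(node_name, template_ref):
    """Build a remediation CR (as a plain dict) for one unhealthy node."""
    kind = template_ref["kind"]
    # Assumption: the CR kind is the template kind without the "Template" suffix.
    if kind.endswith("Template"):
        kind = kind[: -len("Template")]
    return {
        "apiVersion": template_ref["apiVersion"],
        "kind": kind,
        "metadata": {
            "name": node_name,                       # named after the unhealthy node
            "namespace": template_ref["namespace"],  # assumption: same namespace as the template
        },
    }

template_ref = {
    "apiVersion": "self-node-remediation.medik8s.io/v1alpha1",
    "kind": "SelfNodeRemediationTemplate",
    "name": "self-node-remediation-resource-deletion-template",
    "namespace": "openshift-operators",
}
cr = build_remediation_cr("master-0-0", template_ref)
print(cr["kind"], cr["metadata"]["name"])   # prints SelfNodeRemediation master-0-0
```

Naming the CR after the node makes it easy to see at a glance which node is currently under remediation.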

Check the Virtual Machine Status

The virt-launcher pod runs libvirt and QEMU, which implement the virtual machine. SNR now deletes it so that the scheduler can recreate it elsewhere. This is safe because SNR has already made sure that the workload is no longer running on the non-responsive node.

While the virt-launcher pod status is ‘ContainerCreating’, the VM status is ‘Starting’.

Once the virt-launcher pod status is ‘Running’, the VM is ‘Running’ on another node.

NOTE: We can see the node master-0-1 hosts the VM

Simulate Transferring Data to the New Host

Let's verify that the file we created in the virtual machine exists

Conclusion

Ensuring minimal downtime for our applications is essential, regardless of whether they are stateful, and regardless of whether they run on a worker node, a master node (when it is schedulable), or a 3-node edge cluster.

We were able to assure OpenShift that the disconnected node was definitely not active, even though the node had the control plane role. Thanks to this guarantee, our stateful application (VM) was automatically recreated on another node in the cluster.

About the author

Almog Elfassy is a highly skilled Cloud Architect at Red Hat, leading strategic projects across diverse sectors. He brings a wealth of experience in designing and implementing cutting-edge distributed cloud services. His expertise spans a cloud-native approach, hands-on experience with various cloud services, network configurations, AI, data management, Kubernetes and more. Almog is particularly interested in understanding how businesses leverage Red Hat OpenShift and technologies to solve complex problems.
