MultiPath TCP (MPTCP) gives you a way to bundle multiple paths between systems to increase bandwidth and resilience against failures. Here we show you how to configure a basic setup so you can try out MPTCP, using two virtual guests. We’ll then look at the details of a real-world scenario, measuring bandwidth and latency when single links disappear.

What is Multipath TCP?

Red Hat Enterprise Linux 9 (RHEL 9) and later include the MultiPath TCP daemon (mptcpd) for configuring MPTCP. But what exactly is MPTCP, and how can it help us?

Multipath TCP enables a transport connection to operate across multiple paths simultaneously. It was first published as an experimental specification in 2013 (RFC 6824), which was replaced in 2020 by the MPTCP v1 specification, RFC 8684.

Let’s consider a simple network connection. A client starts a browser and accesses a website hosted on a server. For this, the client establishes a TCP socket to the server and uses that channel for communication.

There are many possible causes for network failures:

- A firewall anywhere between client and server can terminate the connection
- The connection can slow down somewhere along the path of the packets
- Both client and server use a network interface for communication, and if that interface goes down, the whole TCP connection becomes unusable

MPTCP can help us mitigate the effects of some of these events. With MPTCP, we establish an MPTCP socket between client and server instead of a TCP socket. This allows additional MPTCP “subflows” to be established.

These subflows can be thought of as separate channels our system can use to communicate with the destination. These can use different resources, different routes to the destination system and even different media such as ethernet, 5G and so on.

As long as one of the subflows stays usable, the application can communicate via the network. In addition to improving resilience, MPTCP can also help us to combine the throughput of multiple subflows into a single MPTCP connection.

You may ask, “Isn’t that what bonding/teaming were created for?” Bonding and teaming operate at the interface/link layer, and most modes restrict you to a single medium. Also, even if you run bonding in an aggregation mode, most modes only increase bandwidth across multiple TCP sessions; a single TCP session remains bound to the throughput of a single interface.

How is MPTCP useful in the real world?

MPTCP is useful in situations where you want to combine connections in order to increase resilience from network problems or to combine network throughput.

Here we’ll use MPTCP in a real-world scenario with two laptops running RHEL 9, connected over two media. One connection is wired: 1 Gbit ethernet with low latency. The second connection is wireless: higher latency, but it makes the laptop portable so it can be used anywhere in the house.

MPTCP allows us to establish subflows over both of these connections, so applications can become independent from the underlying medium. Of course, latency and throughput of the subflows are different, but both provide network connectivity.

I used RHEL 9 for the steps in this article, but other distributions such as Fedora 36 or CentOS Stream 9 can be used as well.

A simple demo of MPTCP in action

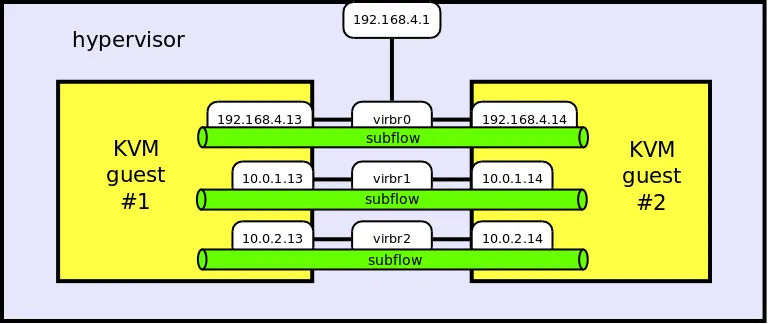

To familiarize ourselves with MPTCP, we’ll first look at a simple setup of a hypervisor (running Fedora 35) and two KVM guests with RHEL 9 on top. Each of the guests has three network interfaces:

- 1st KVM guest: 192.168.4.13/24, 10.0.1.13/24, 10.0.2.13/24
- 2nd KVM guest: 192.168.4.14/24, 10.0.1.14/24, 10.0.2.14/24
- Hypervisor: 192.168.4.1/24

Next, we will enable MPTCP via sysctl on both guests, and install packages:

```
# echo "net.mptcp.enabled=1" > /etc/sysctl.d/90-enable-MPTCP.conf
# sysctl -p /etc/sysctl.d/90-enable-MPTCP.conf
# yum -y install pcp-zeroconf mptcpd iperf3 nc
```
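To confirm that the sysctl took effect, you can run a quick check like the following on each guest. This is a minimal sketch. It assumes the setting is exposed as /proc/sys/net/mptcp/enabled (the file behind `sysctl net.mptcp.enabled`), and the function name mptcp_status is only illustrative.

```shell
#!/bin/sh
# Illustrative helper (hypothetical name): report MPTCP availability.
# The sysctl file exists only if the running kernel has MPTCP support.
mptcp_status() {
    f=/proc/sys/net/mptcp/enabled
    if [ ! -r "$f" ]; then
        echo unsupported
    elif [ "$(cat "$f")" = 1 ]; then
        echo enabled
    else
        echo disabled
    fi
}

mptcp_status
```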

With that, we can now start to monitor the traffic for the guests’ network interfaces. Open a terminal session to each of the two guests, start the following in both terminals, and keep the command running:

```
# pmrep network.interface.in.bytes -t2
```

Now we are ready to run iperf3 in server mode on the first guest, which will start to listen on a port and wait for incoming connections. On the second guest we run iperf3 in client mode, which will connect to the server and do bandwidth measurements.

By default, iperf3 opens its sockets with protocol IPPROTO_IP (as can be seen with strace). That is protocol 0, which selects plain TCP for stream sockets. We want iperf3 to use the MPTCP protocol instead and open IPPROTO_MPTCP sockets. For that, we can either change the source code and recompile the software, or use the mptcpize tool to change the requested protocol:

```
1st guest:> mptcpize run iperf3 -s
2nd guest:> mptcpize run iperf3 -c 10.0.1.13 -t3
```

The mptcpize tool allows existing, unmodified TCP applications to use the MPTCP protocol. It changes the protocol of the sockets they create on the fly, via libcall hijacking.

At this point, we can run the ss tool on the first guest to verify that the iperf3 server is listening on an MPTCP socket. The 'tcp-ulp-mptcp' tag marks the socket as using the MPTCP ULP (Upper Layer Protocol). This means that the socket is actually an MPTCP subflow, in this case an MPTCP listener.

```
1st guest:> ss -santi | grep -A1 5201
LISTEN 0      4096       *:5201       *:*
         cubic cwnd:10 tcp-ulp-mptcp flags:m token:0000(id:0)/0000(id:0) [..]
```
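When several connections are open, counting the tcp-ulp-mptcp tags is a quick way to see how many sockets ss reports as MPTCP. This is a minimal sketch. count_mptcp_sockets is a hypothetical helper that reads ss output on stdin. It assumes, as in the output above, that ss prints the ULP tag on each socket's info line, which requires the -i option.

```shell
#!/bin/sh
# Hypothetical helper: count sockets that ss tags with the MPTCP ULP.
# Reads ss output on stdin, so saved output can be fed to it as well.
count_mptcp_sockets() {
    # grep -c prints 0 but exits 1 when nothing matches; keep the 0
    grep -c 'tcp-ulp-mptcp' || true
}

# All TCP sockets (-t -a), numeric (-n), with internal info (-i)
ss -tani | count_mptcp_sockets
```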

The terminals running pmrep show that only one interface on each guest is used.

We can now configure MPTCP. By default, each MPTCP connection uses a single subflow. Each subflow is similar to a plain TCP connection, so the MPTCP default behavior is comparable to plain TCP. To use the benefits like bundling of throughput or resilience from network outage, you must configure MPTCP to use multiple subflows on the involved systems.

Our next command tells the MPTCP protocol that each MPTCP connection can have up to two subflows in addition to the first, so three in total:

```
2nd guest:> ip mptcp limits set subflow 2
```

When and why should MPTCP create additional subflows? There are multiple mechanisms. In this example we will configure the server to ask the client to create additional subflows towards different server addresses, specified by the server itself.

The next command tells the system running the client to accept up to two of these subflow creation requests for each connection:

```
2nd guest:> ip mptcp limits set add_addr_accepted 2
```
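The two client-side limits do not have to be set separately. As a sketch, assuming an iproute2 version with ip mptcp support and root privileges, you can combine them into one call and then check the result:

```shell
# On the 2nd guest (client): allow two additional subflows per connection
# and accept up to two address announcements from the peer
ip mptcp limits set subflow 2 add_addr_accepted 2

# Print the limits currently in effect
ip mptcp limits show
```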

Now we configure the first guest, which runs the server, to also handle up to two additional subflows, using the same limits set subflow 2 command. With the two endpoint commands that follow, we configure additional IP addresses to be announced to our peer. The peer then creates the two additional subflows:

```
1st guest:> ip mptcp limits set subflow 2
1st guest:> ip mptcp endpoint add 10.0.2.13 dev enp3s0 signal
1st guest:> ip mptcp endpoint add 192.168.4.13 dev enp1s0 signal
```
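When repeating this setup, it can be handy to wrap the server-side steps in a script. The following is only a sketch. The run and mptcp_server_setup functions and the DRY_RUN variable are hypothetical names and are not part of iproute2 or mptcpd. With DRY_RUN=1, the script prints the ip commands instead of executing them. It finishes with `ip mptcp endpoint show` to list the configured endpoints.

```shell
#!/bin/sh
# Hypothetical helpers, not part of iproute2 or mptcpd.
# run: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: mptcp_server_setup SUBFLOWS ADDR DEV [ADDR DEV ...]
mptcp_server_setup() {
    run ip mptcp limits set subflow "$1"
    shift
    # Each ADDR/DEV pair becomes an endpoint announced to the peer
    while [ "$#" -ge 2 ]; do
        run ip mptcp endpoint add "$1" dev "$2" signal
        shift 2
    done
    # List the resulting endpoints
    run ip mptcp endpoint show
}
```

On the first guest, running `mptcp_server_setup 2 10.0.2.13 enp3s0 192.168.4.13 enp1s0` as root would issue the three commands shown above, followed by the endpoint listing.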

Now, if we run mptcpize run iperf3 [..] on the second guest again, pmrep shows traffic on all three interfaces:

```
  n.i.i.bytes  n.i.i.bytes  n.i.i.bytes  n.i.i.bytes
           lo       enp1s0       enp2s0       enp3s0
       byte/s       byte/s       byte/s       byte/s
          N/A          N/A          N/A          N/A
        0.000       91.858     1194.155       25.960
[..]
        0.000       92.010       26.003       26.003
        0.000    91364.627    97349.484    93799.789
        0.000  1521881.761  1400594.700  1319123.660
        0.000  1440797.789  1305233.465  1310615.121
        0.000  1220597.939  1201782.379  1149378.747
        0.000  1221377.909  1252225.282  1229209.781
        0.000  1232520.776  1244593.380  1280007.121
        0.000      671.831      727.317      337.415
        0.000       59.001      377.005       26.000
```

Congrats! Here we see traffic from one application-layer channel being distributed over multiple subflows. Note that our two VMs are running on the same hypervisor, so our throughput is most likely limited by the CPU. With multiple subflows, we will probably not see increased throughput here, but we can see multiple channels being used.
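To put the pmrep byte rates in perspective, you can sum the per-interface columns and convert them to Mbit/s. This is a minimal awk-based sketch. total_mbits is a hypothetical helper. It assumes pmrep's default layout, as in the output above, where data rows contain only numbers and header rows do not.

```shell
#!/bin/sh
# Hypothetical helper: sum each pmrep data row (all interface columns)
# and print the total receive rate in Mbit/s. Header rows such as
# "n.i.i.bytes", "byte/s" or "N/A" do not start with a number and are skipped.
total_mbits() {
    awk '$1 ~ /^[0-9.]+$/ {
        s = 0
        for (i = 1; i <= NF; i++) s += $i
        printf "%.1f\n", s * 8 / 1000000
    }'
}
```

You can pipe saved pmrep output through it. For the row `0.000 1521881.761 1400594.700 1319123.660` from the sample above, it prints 33.9. In that interval, roughly 34 Mbit/s arrived, split over three interfaces.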

If you are up for a further test, you can run:

```
1st guest:> mptcpize run ncat -k -l 0.0.0.0 4321 >/dev/null
2nd guest:> mptcpize run ncat 10.0.1.13 4321 </dev/zero
```

From the application's point of view, this establishes a single channel and transmits data in one direction, so we can see that a single channel also gets distributed. We can then take down network interfaces on the two guests and see how the traffic changes again.
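Here is a sketch of such a test, assuming root on the 2nd guest. The interface enp3s0 is just one of the three interfaces from the setup above, and any of them can be used.

```shell
# On the 2nd guest, while the ncat transfer is running:
# take one path away; pmrep should show its traffic moving elsewhere
ip link set dev enp3s0 down

# Restore the interface afterwards
ip link set dev enp3s0 up
```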

Using MPTCP in the real world

Now that we have a simple setup running, let’s look at a more practical setup. At Red Hat we have recurring requests from customers to enable applications to switch media transparently, possibly also combining the bandwidth of such media. We can use MPTCP as a working solution for such scenarios. The following setup uses two ThinkPads with RHEL 9 and an MPTCP configuration that uses subflows over ethernet and WLAN.

Each medium has its own characteristics:

- Ethernet: high bandwidth, low latency, but the system is bound to the cable
- Wireless: lower bandwidth, higher latency, but the system can be moved around more freely

So, will MPTCP help us to survive disconnects from ethernet, keeping network communication up? It does. In the screenshot below we see a Grafana visualization of the traffic, recorded with Performance Co-Pilot (PCP), with WLAN traffic in blue and ethernet in yellow.

At the beginning, an application maxes out the available bandwidth of both media, here the download of a Fedora installation image. Then, when we disconnect ethernet and move the ThinkPad out to the garden, the network connectivity stays up. Eventually we move back into the house, reconnect ethernet and get full bandwidth again.

Typically, these ethernet interfaces would run at 1 Gbit/second. To make this more comparable to the bandwidth available over wireless, I have restricted the interfaces here to 100 Mbit/second.
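The article does not say how the links were restricted. One common way is to limit what the NIC advertises during autonegotiation with ethtool. Here is a sketch, assuming root and a hypothetical interface name enp0s31f6:

```shell
# Advertise only 100baseT/Full (mask 0x008), keeping autonegotiation on
ethtool -s enp0s31f6 advertise 0x008

# Check the speed that was negotiated
ethtool enp0s31f6 | grep -i speed
```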

What did the application experience? The console output shows that iperf3 had degraded bandwidth for some time, but always had network connectivity:

```
# mptcpize run iperf3 -c 192.168.0.5 -t3000 -i 5
Connecting to host 192.168.0.5, port 5201
[  5] local 192.168.0.4 port 50796 connected to 192.168.0.5 port 5201
[ ID] Interval           Transfer     Bitrate         Retr  Cwnd
[  5]   0.00-5.00   sec  78.3 MBytes   131 Mbits/sec  271   21.2 KBytes
[..]
[  5] 255.00-260.00 sec  80.0 MBytes   134 Mbits/sec  219   29.7 KBytes
[  5] 260.00-265.00 sec  80.2 MBytes   134 Mbits/sec  258   29.7 KBytes
[  5] 265.00-270.00 sec  79.5 MBytes   133 Mbits/sec  258   32.5 KBytes
[  5] 270.00-275.00 sec  56.8 MBytes  95.2 Mbits/sec  231   32.5 KBytes
[  5] 275.00-280.00 sec  22.7 MBytes  38.1 Mbits/sec  244   28.3 KBytes
[..]
[  5] 360.00-365.00 sec  20.6 MBytes  34.6 Mbits/sec  212   19.8 KBytes
[  5] 365.00-370.00 sec  32.9 MBytes  55.2 Mbits/sec  219   22.6 KBytes
[  5] 370.00-375.00 sec  79.4 MBytes   133 Mbits/sec  245   24.0 KBytes
[  5] 375.00-380.00 sec  78.9 MBytes   132 Mbits/sec  254   24.0 KBytes
[  5] 380.00-385.00 sec  79.1 MBytes   133 Mbits/sec  244   24.0 KBytes
[  5] 385.00-390.00 sec  79.2 MBytes   133 Mbits/sec  219   22.6 KBytes
[..]
```
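In a long run like this, it can help to pull out only the degraded intervals. This is a sketch. slow_intervals is a hypothetical helper that reads iperf3 client output on stdin. It prints the interval and bitrate of every line whose bitrate falls below a threshold given in Mbit/s. It only understands rates printed as Mbits/sec; iperf3's -f m option forces that unit.

```shell
#!/bin/sh
# Hypothetical helper: print "interval bitrate" for every iperf3 line
# whose bitrate is below $1 Mbit/s. Only Mbits/sec values are considered.
slow_intervals() {
    awk -v min="$1" '{
        iv = ""; rate = ""
        for (i = 2; i <= NF; i++) {
            if ($i == "sec") iv = $(i - 1)          # e.g. 270.00-275.00
            if ($i == "Mbits/sec") rate = $(i - 1)  # e.g. 95.2
        }
        if (iv != "" && rate != "" && rate + 0 < min + 0) print iv, rate
    }'
}
```

For example, you can save the client output with `-f m > iperf3.log` and then run `slow_intervals 100 < iperf3.log`. For the excerpt above, it would list the intervals 270.00-275.00, 275.00-280.00, 360.00-365.00 and 365.00-370.00, which is presumably the period without ethernet.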

Staying connected works well, but we should remember that both sides need to speak MPTCP. So:

- Either all applications are MPTCP-enabled, natively or with a tool such as mptcpize,
- Or one system serves as an access gateway, running a transparent TCP-to-MPTCP proxy. A second system on the internet then acts as its counterpart, running a matching MPTCP-to-TCP reverse proxy. Others also use proxy software to tunnel plain TCP over MPTCP.

Summary, and more details

In the past, we saw a number of attempts to solve the problem of transparent bundling of network connections. The first approaches used simple, normal TCP channels at the base, but as these suffered from downsides, MPTCP was developed.

If MPTCP becomes established as the standard way to bundle bandwidth and survive uplink outages, more applications are likely to implement MPTCP sockets natively. Other Linux-based devices, such as Android mobile phones, are also likely to follow that trend.

In RHEL 8 we added MPTCP support as a Technology Preview, at that time without the userspace component mptcpd, which was added in RHEL 9. Everything in this blog post was done without mptcpd. When mptcpd is running, it provides an API for adding and removing subflows and similar tasks, which we did by hand in this article.

Learn more

- Davide's DevConf 2021 talk, a great MPTCP introduction: video, slides
- Our RHEL 9 product docs around MPTCP are a good resource
- Paolo Abeni has written a high-level MPTCP introduction
- MPTCP packet layer details from Davide Caratti
- The RHEL 8 MPTCP docs show a further way to convert TCP sockets to MPTCP, using SystemTap
- Performance Co-Pilot 5.2 and later offer MPTCP metrics for reading, recording, etc.

About the author

Christian Horn is a Senior Technical Account Manager at Red Hat. After working with customers and partners since 2011 at Red Hat Germany, he moved to Japan, focusing on mission critical environments. Virtualization, debugging, performance monitoring and tuning are among the returning topics of his daily work. He also enjoys diving into new technical topics, and sharing the findings via documentation, presentations or articles.
