Artificial intelligence (AI) drives innovation by helping organizations make better use of their data and gain valuable insights. Enterprises are rapidly adopting AI applications, but developing, training and managing AI workloads at scale remains a challenge. Model-as-a-Service (MaaS) helps address this challenge by enabling enterprises to operationalize AI models as scalable services.

What is Model-as-a-Service (MaaS)?

Model-as-a-Service (MaaS) provides pre-trained AI models through an API gateway on a hybrid cloud AI platform. This helps organizations accelerate time to value and deliver results more quickly.

MaaS can be developed and deployed by specialized internal teams and made available to the rest of the organization. Other teams can then access these pre-trained models through APIs, which lets them work faster and focus on more strategically important work. Alternatively, organizations can get MaaS from a trusted provider, which removes the need to build a dedicated MaaS team. Beyond efficiency and cost savings, MaaS also gives your organization greater control over data privacy and how your data is used.

When does MaaS make sense?

Managing GPUs and the underlying AI infrastructure requires skilled people, and so does developing, training and managing AI models on top of it. An organization could simply offer AI Infrastructure-as-a-Service (IaaS), but that leaves every consuming team to build this expertise itself. Instead, it can commit a small number of skilled experts to develop, train and deploy AI models that anyone in the organization can use.

As demand for model inference calls increases, the underlying serving framework and GPU resources can scale more easily to meet it. MaaS providers also handle all infrastructure maintenance and monitoring tasks, such as updates, performance monitoring and security.
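The sketch below shows roughly how a serving layer can scale with inference demand. It applies the replica formula used by the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), to an assumed metric: in-flight inference requests per model replica. The function name, the min/max bounds and the choice of metric are hypothetical. How OpenShift AI actually autoscales depends on how the model serving runtime is configured.

```python
import math


def desired_replicas(current_replicas: int,
                     current_load_per_replica: float,
                     target_load_per_replica: float,
                     min_replicas: int = 1,
                     max_replicas: int = 8,
                     tolerance: float = 0.1) -> int:
    """Return the replica count needed to bring per-replica load back to target.

    Follows the Kubernetes HPA rule:
        desired = ceil(current_replicas * current_metric / target_metric)
    The metric (in-flight requests per replica) and the bounds are
    illustrative assumptions, not OpenShift AI defaults.
    """
    ratio = current_load_per_replica / target_load_per_replica
    # Skip scaling when load is already close to target, to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured range so a traffic spike cannot exhaust the GPU pool.
    return max(min_replicas, min(max_replicas, desired))


# Two replicas each handling 12 concurrent requests against a target of 4:
# ceil(2 * 3.0) = 6 replicas.
print(desired_replicas(2, 12, 4))  # 6
```

The upper bound matters in a shared MaaS setting. GPUs are a finite pool, so one busy model should not be able to claim all of them.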

GPUs are also expensive, and using them inefficiently can quickly drive up costs. With MaaS, models and GPUs are shared through a central service instead of being provisioned separately by each team. This reduces the need for heavy infrastructure investment and helps businesses save on upfront costs.

Time to value also matters. Developing and training a model takes a lot of time. Because MaaS makes models readily available to the teams that want to use them, it shortens time to value and accelerates an organization's return on investment (ROI).

Understanding MaaS

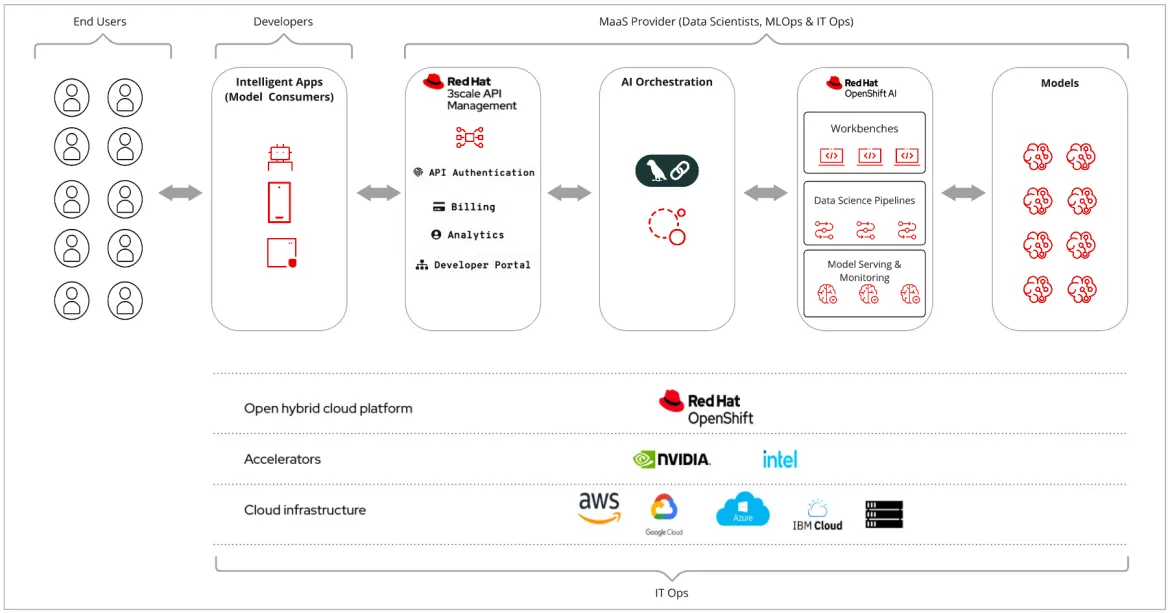

Let’s look at MaaS and its core components through an opinionated high-level design. The key components of MaaS are the models, a scalable AI platform, an AI orchestration system and robust API management.

When it comes to MaaS, choosing an AI model that actually addresses your business use case is just one piece of the puzzle. Much more surrounds the model, such as data collection, verification, resource management and the infrastructure needed to deploy and monitor it.

In the world of AI applications, these activities are automated through machine learning operations (MLOps). MLOps covers the complete AI project lifecycle and applies DevOps-like responsibilities within a cross-functional team. The MaaS lifecycle works the same way. The MaaS provider has a cross-functional team of subject matter experts, including data scientists, ML engineers and IT operations staff, who work together to deliver and manage the MaaS offering.

Models

The MaaS provider is responsible for developing the model catalog by incorporating open source, third-party or its own models. Depending on the organization's needs, the MaaS provider may customize models with tuning techniques such as fine-tuning. It can also offer a better user experience with retrieval-augmented generation (RAG) or retrieval-augmented fine-tuning (RAFT). Once tuned, the model is saved in the data store and its metadata is stored in the model registry, making it ready for serving. The MaaS provider can also document the catalog of available models, along with the exposed APIs, in a developer portal.
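The following in-memory sketch shows the split between the data store and the model registry. It assumes a registry only needs to record each model's name, version, source, tuning technique and artifact location. `ModelVersion`, `ModelRegistry` and the `s3://` paths are hypothetical names for illustration, not the API of the OpenShift AI model registry.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ModelVersion:
    """Metadata kept in the registry; the model weights themselves live in the data store."""
    name: str
    version: str
    source: str        # e.g. "open source", "third party" or "in-house"
    tuning: str        # e.g. "none", "fine-tuning" or "RAFT"
    artifact_uri: str  # hypothetical location of the saved model in the data store


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry that could back a developer-facing catalog."""
    _entries: dict = field(default_factory=dict)

    def register(self, model: ModelVersion) -> None:
        key = (model.name, model.version)
        if key in self._entries:
            raise ValueError(f"{model.name}:{model.version} is already registered")
        self._entries[key] = model

    def latest(self, name: str) -> ModelVersion:
        versions = [m for (n, _), m in self._entries.items() if n == name]
        if not versions:
            raise KeyError(name)
        # Compare versions as integer tuples so that "1.10" sorts after "1.9".
        return max(versions, key=lambda m: tuple(int(p) for p in m.version.split(".")))

    def catalog(self) -> list:
        """One line per model version, as a developer portal might list them."""
        return sorted(f"{m.name}:{m.version} ({m.tuning})" for m in self._entries.values())


registry = ModelRegistry()
registry.register(ModelVersion("support-llm", "1.9", "open source", "fine-tuning",
                               "s3://models/support-llm/1.9"))
registry.register(ModelVersion("support-llm", "1.10", "open source", "RAFT",
                               "s3://models/support-llm/1.10"))
print(registry.latest("support-llm").version)  # 1.10
```

The registry stores only metadata. Serving components can therefore look up what to load without scanning the data store.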

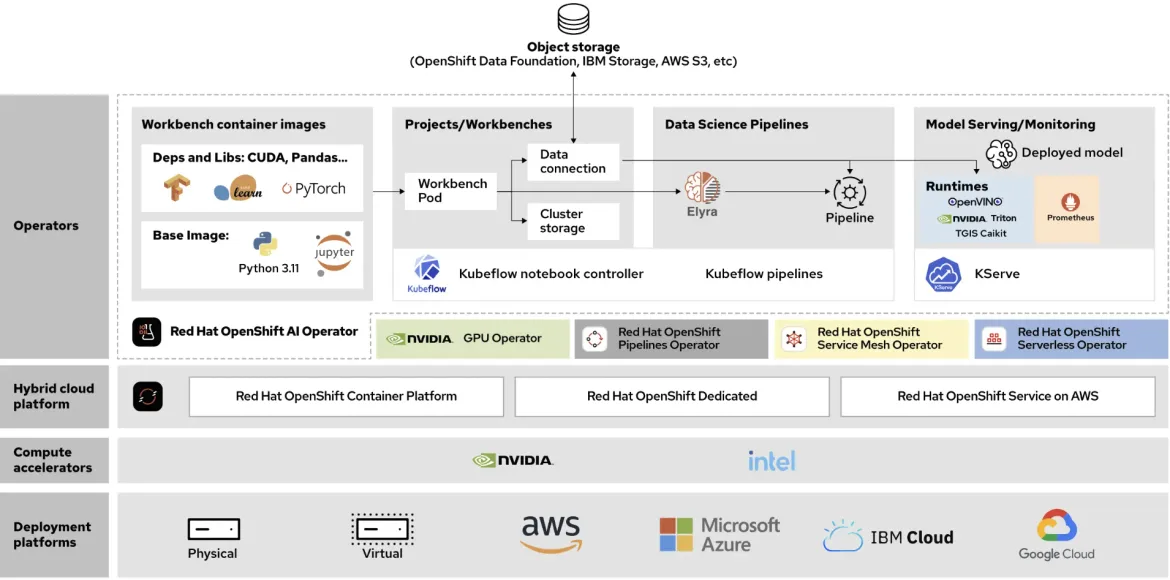

Red Hat OpenShift AI

The foundation of MaaS is the AI platform used to tune, serve and monitor the models. The MaaS provider is responsible for setting up this platform with appropriate observability tools for monitoring.

The MaaS provider needs to serve models efficiently, handling multiple tenants, monitoring for and mitigating security threats and integrating with various data sources.

In our opinionated design, we're using Red Hat OpenShift AI as our AI platform as it addresses the needs of MaaS, offering features like multi-tenancy support, a strong security posture for model serving and integration with data services. OpenShift AI streamlines the workflows of data ingestion, model training, model serving and observability, and enables seamless collaboration between teams.

Benefits of Red Hat OpenShift AI

OpenShift AI offers a number of benefits for anyone looking to set up a MaaS system, including:

- The ability to efficiently scale to handle the demands of larger AI workloads

- The ability to run AI workloads across the hybrid cloud, including edge and disconnected environments

- Built-in authentication and role-based access control (RBAC)

- A variety of controls that help address security and compliance challenges

Furthermore, OpenShift AI is built to be modular, allowing your MaaS team to build a customized AI/ML stack, plugging in partner or other open source technologies as needed.
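Conceptually, role-based access control decides whether a user may perform an action on the models in a given project. The sketch below assumes roles are bound per namespace, loosely mirroring how Kubernetes RoleBindings are scoped. The role names, the `Action` values and the `is_allowed` helper are hypothetical. They do not describe how OpenShift AI stores or evaluates its policies.

```python
from enum import Enum


class Action(Enum):
    INVOKE = "invoke"              # call a served model's inference endpoint
    DEPLOY = "deploy"              # roll out a new model version
    VIEW_METRICS = "view_metrics"  # read serving and usage metrics


# Hypothetical role definitions for a MaaS team. A real deployment maps these
# onto the platform's own RBAC objects rather than a Python dict.
ROLE_PERMISSIONS = {
    "app-developer": {Action.INVOKE},
    "ml-engineer": {Action.INVOKE, Action.DEPLOY, Action.VIEW_METRICS},
    "it-operations": {Action.VIEW_METRICS},
}


def is_allowed(bindings: dict, user: str, namespace: str, action: Action) -> bool:
    """Allow the action only if a role bound to the user in this namespace grants it."""
    roles = bindings.get((user, namespace), set())
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)


# Role bindings are scoped per (user, namespace), which keeps tenants apart.
bindings = {
    ("alice", "team-a"): {"app-developer"},
    ("bob", "team-a"): {"ml-engineer"},
}
print(is_allowed(bindings, "alice", "team-a", Action.INVOKE))  # True
print(is_allowed(bindings, "alice", "team-b", Action.INVOKE))  # False
```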

AI orchestration

Red Hat OpenShift AI also provides AI orchestration capabilities that give MaaS providers the ability to experiment with and better control different versions of the same model or even different models for a particular use case. One of the main purposes of AI orchestration is to route the API requests to the right model instance. This layer may also include additional components to manage various model tuning techniques.
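Here is a minimal sketch of the routing step. It assumes the orchestration layer keeps a table that maps each use case to weighted model versions, for example a stable version plus a canary under evaluation. `ROUTES`, `route_request` and the model names are hypothetical. In production this decision is usually delegated to the serving platform or a gateway rather than written in application code.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str
    version: str
    weight: int  # relative share of traffic, e.g. 90 for stable and 10 for canary


# Hypothetical routing table: each use case maps to one or more model versions.
ROUTES = {
    "summarize": [Route("summarizer", "v1", 90), Route("summarizer", "v2", 10)],
    "classify": [Route("intent-classifier", "v3", 100)],
}


def route_request(use_case: str, request_key: str) -> Route:
    """Pick the model version that should serve a request.

    Hashing a stable key (for example a user or session ID) sends the same
    caller to the same version for the duration of an experiment.
    """
    routes = ROUTES.get(use_case)
    if not routes:
        raise KeyError(f"no model is published for use case {use_case!r}")
    total = sum(r.weight for r in routes)
    # SHA-256 is stable across processes, unlike Python's built-in hash() for strings.
    bucket = int(hashlib.sha256(request_key.encode()).hexdigest(), 16) % total
    for r in routes:
        if bucket < r.weight:
            return r
        bucket -= r.weight
    raise AssertionError("unreachable: the bucket always falls inside the total weight")
```

Hash-based assignment keeps each user's experience consistent while roughly 10% of traffic goes to the canary. A per-request random choice would also hit the target split, but the same user could see different model versions from one call to the next.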

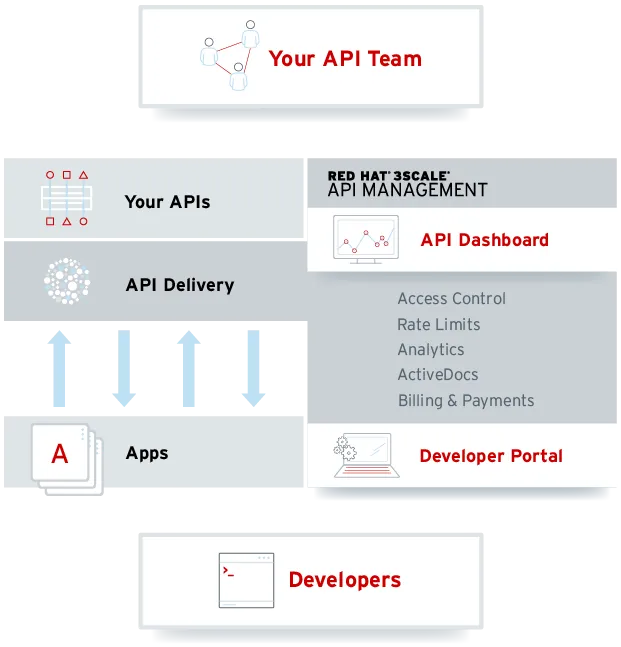

API management

API management is one of the most important components in MaaS design. MaaS providers must be able to manage access, onboard applications, and provide analytics and chargeback so customers can manage and observe their apps and effectively measure ROI. The API management component also supports extensive onboarding and usage policies. It provides detailed analytics on the use, overuse, underuse and potential abuse of the published APIs.
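Chargeback and usage analytics reduce to aggregating per-application usage records and comparing them against rates and quotas. The sketch below assumes usage is metered in tokens per API call, with a flat internal price per 1,000 tokens and a per-application token quota. `UsageRecord`, `chargeback`, the rates and the 10% underuse threshold are all hypothetical. Real pricing models vary.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    app_id: str
    model: str
    tokens: int  # tokens processed by one API call (assumed metering unit)


def chargeback(records, price_per_1k_tokens: float, quota_tokens: dict) -> dict:
    """Summarize usage per application and flag overuse and underuse.

    price_per_1k_tokens, quota_tokens and the 10% underuse threshold are
    hypothetical internal policies set by the MaaS provider.
    """
    totals = defaultdict(int)
    for r in records:
        totals[r.app_id] += r.tokens
    report = {}
    for app_id, tokens in totals.items():
        quota = quota_tokens.get(app_id, 0)
        if tokens > quota:
            status = "over quota"
        elif tokens < 0.1 * quota:
            status = "underused"
        else:
            status = "ok"
        report[app_id] = {
            "tokens": tokens,
            "cost": round(tokens / 1000 * price_per_1k_tokens, 2),
            "status": status,
        }
    return report


records = [
    UsageRecord("chatbot", "summarizer", 120_000),
    UsageRecord("chatbot", "summarizer", 30_000),
    UsageRecord("portal", "intent-classifier", 2_000),
]
report = chargeback(records, price_per_1k_tokens=0.02,
                    quota_tokens={"chatbot": 100_000, "portal": 50_000})
print(report["chatbot"]["status"], report["portal"]["status"])  # over quota underused
```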

The API management component provides support for high availability (HA), traffic control, API authentication, integration with third-party identity providers, analytics, access control, monetization and developer workflows.
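Two of these duties can be sketched together: authenticating the caller's API key and applying a per-application traffic limit. The example below uses a standard token-bucket rate limiter. The `Gateway` class, the key-to-application mapping and the status codes returned are assumptions for illustration. A real API management product implements these as configurable policies.

```python
import hmac
import time


class TokenBucket:
    """Token-bucket limiter: refills `rate` tokens per second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock  # injectable so tests can control time
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gateway:
    """Hypothetical gateway check: authenticate the API key, then apply the app's limit."""

    def __init__(self, api_keys: dict, limits: dict):
        self.api_keys = api_keys  # api_key -> app_id (illustrative; store hashes in practice)
        self.limits = limits      # app_id -> TokenBucket

    def check(self, api_key: str) -> int:
        """Return an HTTP-style status: 200 allowed, 401 unknown key, 429 throttled."""
        app_id = None
        for known_key, candidate in self.api_keys.items():
            # Constant-time comparison avoids leaking key contents through timing.
            if hmac.compare_digest(known_key, api_key):
                app_id = candidate
        if app_id is None:
            return 401
        return 200 if self.limits[app_id].allow() else 429
```

A token bucket allows short bursts up to `capacity` while holding the long-run rate to `rate` requests per second. That fits interactive applications whose traffic is spiky.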

Intelligent applications

Consumer applications, such as chatbots, mobile applications or portals, are the final component in this architecture. The main stakeholders here are developers who want to integrate the available AI models into their intelligent applications through the APIs published by the MaaS provider. Developers should be able to onboard their apps and use the API management features through a dedicated developer portal.

Developers can then serve end users with intelligent applications that integrate with the models through their APIs. This lets developers focus on solving business problems with ready-made model APIs, while the MaaS provider takes care of the models, MLOps and the underlying infrastructure.
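From the developer's side, calling a published model is an authenticated HTTP request. The sketch below assumes the MaaS provider exposes an OpenAI-compatible chat completions endpoint, as some model servers such as vLLM do, and that the gateway accepts a bearer token. The base URL, model name and key are placeholders that would come from the developer portal. `ask` needs a live endpoint, so it is shown but not exercised here.

```python
import json
import urllib.request

# Hypothetical values: the real base URL, model name and API key come from the
# MaaS provider's developer portal after the application is onboarded.
BASE_URL = "https://maas.example.com/v1"
API_KEY = "replace-with-your-key"


def build_chat_request(prompt: str, model: str = "summarizer") -> urllib.request.Request:
    """Build a POST request for an (assumed) OpenAI-compatible chat completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the text of the first completion choice."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=30) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Keeping request construction separate from sending makes it easy to check headers and payloads without calling the service.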

Conclusion

Because MaaS abstracts away infrastructure and data science complexity, organizations can use it to deliver AI solutions more quickly and efficiently while keeping control over costs and the complexity of MLOps.

With AI adoption growing day by day, MaaS is a great approach to accelerating AI development and time-to-market. Start exploring Red Hat OpenShift AI’s capabilities to see how we can help you build your MaaS offering and unlock the full potential of your AI investments.

About the author

Muhammad Bilal Ashraf (preferred name: Bilal) is a Senior Architect at Red Hat, where he empowers organizations to harness the transformative potential of open-source innovation. Since joining Red Hat in 2021 as a Cloud Native Architect, Bilal has advanced to his current role, collaborating closely with customers and partners to deliver strategic value through cloud-native solutions, AI/ML, and cutting-edge technologies. His expertise lies in architecting scalable, open-source-driven solutions to accelerate digital transformation, optimize operations, and future-proof businesses in an evolving technological landscape. Bilal’s work bridges the gap between enterprise challenges and open-source opportunities, driving customer success through tailored AI and cloud-native strategies.
