Overview of F5 solutions for the 5G ecosystem

When designing 5G architecture, a modern service provider is likely to turn first to cloud-native deployment. There are many benefits to building architecture with cloud-native methodologies, including increased flexibility, greater resource efficiency, and more agility in function, deployment, and alteration.

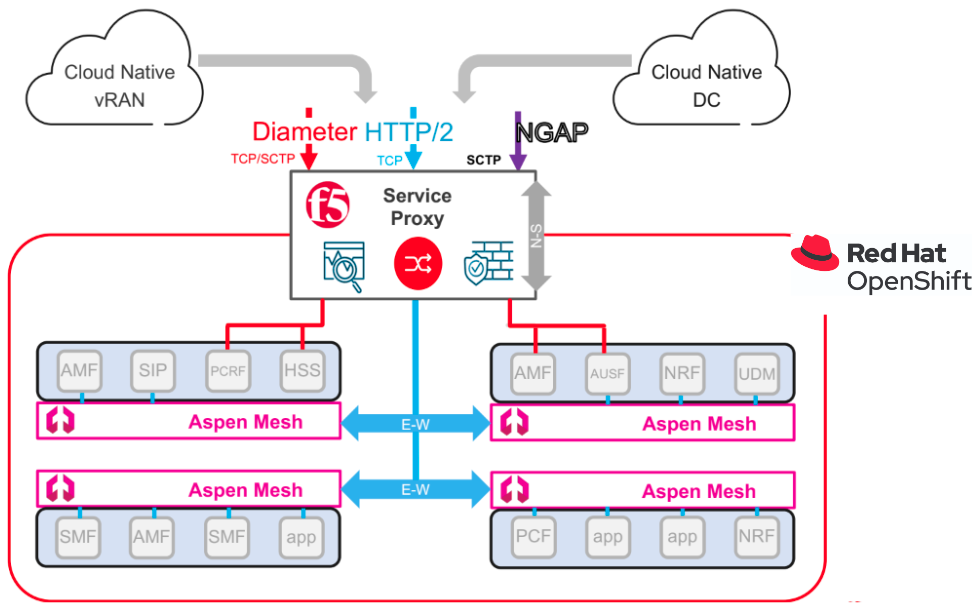

Red Hat OpenShift unlocks these key benefits, providing the service provider with uniform orchestration, a variety of existing tooling and pattern designs, and horizontal scaling flexibility from core to edge. However, there are challenges using unified cloud-native platforms to implement 5G core CNFs, including:

- Difficulty with the management of network protocols (NGAP/SCTP, HTTP/2, Diameter, GTP, SIP, lawful intercept, and so on)

- Lack of routing integration with service provider networks

- Limited egress capabilities

- Limited security controls and integration with overall security policies

- Lack of visibility and revenue controls

Implementing a 5G core using OpenShift and F5 products together reduces:

- Operational complexity: Enables complex IP address management

- Security complexity: Ensures each new CNF has its own additional security policies

- Architectural complexity: Exposes internal complexity and failures

To achieve this, F5 adds two networking components to OpenShift: Service Proxy for Kubernetes (SPK) and Carrier-Grade Aspen Mesh (CGAM).

- Service Proxy for Kubernetes (SPK) is a cloud-native application traffic management solution designed for service providers’ 5G networks. SPK integrates F5’s containerized Traffic Management Microkernel (TMM), Ingress Controller, and Custom Resource Definitions (CRDs) into the OpenShift container platform to proxy and load balance low-latency 5G

- F5’s Carrier-Grade Aspen Mesh (CGAM), based on the Cloud Native Computing Foundation’s Istio project, enables service providers to migrate from 4G virtualized network functions (VNF) to the service-based architecture (SBA) of 5G, which relies on a microservice infrastructure

As shown in the diagram below, in the absence of SPK each CNF requires a Multus underlay as an additional interface to communicate with services and nodes external to the cluster. Operationally, in terms of provisioning interfaces and IP addresses to every CNF replica, this can be more challenging and prone to error. Additionally, exposing all individual CNF pods to external networks can be a real security risk.

Both SPK and CGAM work independently of each other, solving similar but separate problems, since CGAM is used for East-West traffic, and SPK is focused on North-South traffic. In this way, the service provider can select the most appropriate technology depending on their needs. This traffic pattern and the location of both F5 solutions in an OpenShift cluster can be found in the following diagram:

SPK Architecture

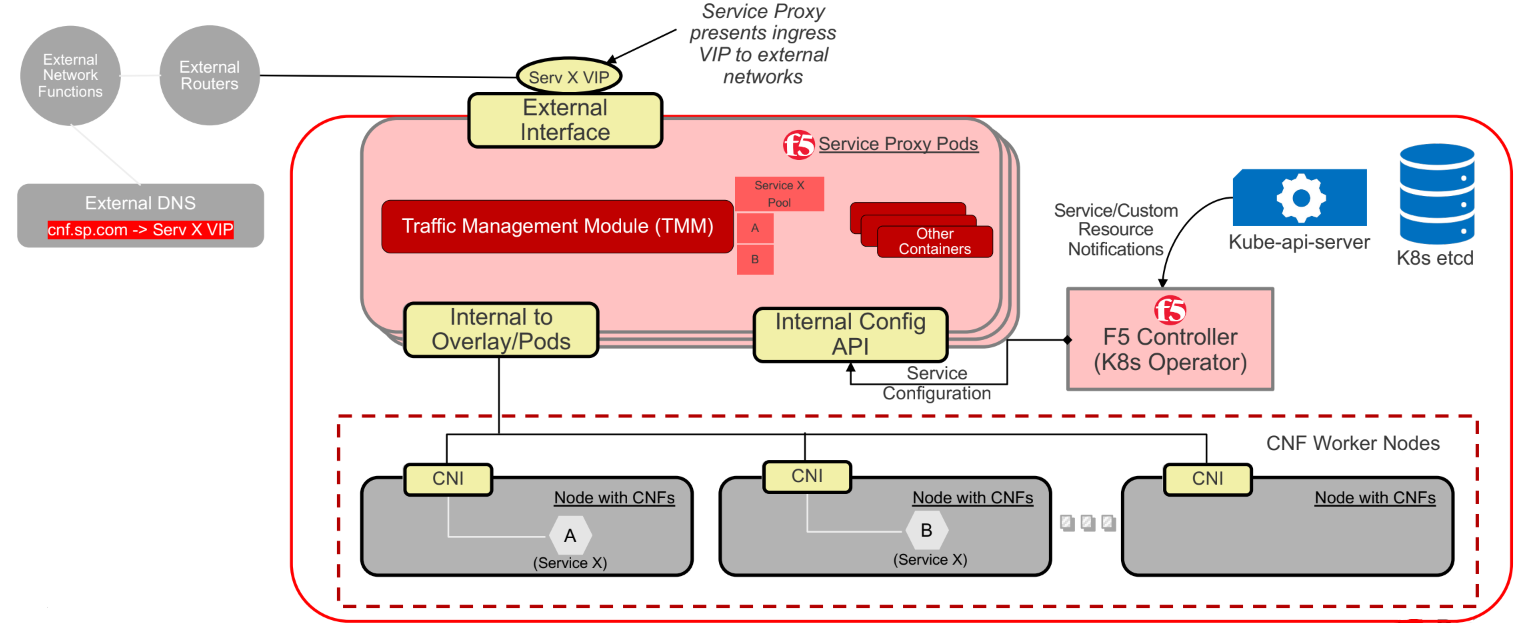

Each SPK deployment serves a single NF namespace. An SPK instance includes two components: a control plane pod that serves as the ingress controller (F5 Controller) and a data plane pod that handles traffic (TMM).

Each TMM has two network interfaces. The external interface faces the outside, and the internal interface faces the cluster using CNI. SPK comes with various Custom Resource Definitions (CRD), which you can use to create a Configuration Resource object (CR) as needed.

For example, if the CNF requires its Kubernetes service (Service X) to be exposed to an external network so that external clients can talk to Service X, then an F5-SPK-IngressTCP CR can be deployed. This creates a listening Virtual Service (VS) address (Service X VIP) with a defined port in SPK on the external network. This CR is mapped to the CNF's Service X and its endpoints. When an external client needs to talk to this CNF service, it sends traffic to the Service X VIP of the F5-SPK-IngressTCP CR in SPK, and SPK load-balances the traffic across Service X’s endpoints using the load-balancing method also defined in that CR.
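The relationship between the VIP and the service endpoints can be pictured with a small toy model. This is only a sketch, not SPK code: the `VirtualService` class, the addresses, and the round-robin choice are all assumptions made for illustration, since the actual load-balancing method is whatever the CR defines.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import List


@dataclass
class VirtualService:
    """Hypothetical stand-in for the listener created by an ingress CR."""
    vip: str                                   # Service X VIP on the external network
    port: int                                  # listening port on the VIP
    endpoints: List[str] = field(default_factory=list)  # Service X pod addresses
    _next: count = field(default_factory=count, repr=False)

    def pick_endpoint(self) -> str:
        """Return the next endpoint; round-robin is assumed for this sketch."""
        if not self.endpoints:
            raise RuntimeError("no endpoints available for this service")
        return self.endpoints[next(self._next) % len(self.endpoints)]


# Illustrative addresses only (documentation and private ranges).
vs = VirtualService(vip="192.0.2.10", port=80,
                    endpoints=["10.128.0.5:8080", "10.128.0.6:8080"])
picks = [vs.pick_endpoint() for _ in range(4)]
```

Here four consecutive client connections to the VIP alternate between the two endpoints.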

The F5 Controller continuously monitors changes within the namespace and informs the TMM. The TMM maintains the Kubernetes service and endpoints corresponding to its F5-SPK-IngressTCP CR information, so when an endpoint or pod is added or removed, the TMM makes all necessary changes to the IP service pool based on the update provided by the F5 Controller.
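As a rough illustration of this update step, the pool can be treated as a set that is reconciled against the endpoints most recently observed. The function name and the addresses below are hypothetical, and the real controller-to-TMM protocol is not described in this article.

```python
from typing import Set, Tuple


def reconcile_pool(pool: Set[str], observed: Set[str]) -> Tuple[Set[str], Set[str]]:
    """Update `pool` in place to match `observed`; return (added, removed)."""
    added = observed - pool      # endpoints that appeared since the last update
    removed = pool - observed    # endpoints that disappeared
    pool -= removed
    pool |= added
    return added, removed


# Illustrative data: one pod is replaced by another.
pool = {"10.128.0.5", "10.128.0.6"}
added, removed = reconcile_pool(pool, {"10.128.0.6", "10.128.0.7"})
```

After the call, `pool` holds only the endpoints that currently exist, and the return values show what changed.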

Carrier-Grade Aspen Mesh (CGAM) description

CGAM enables service providers to migrate from 4G virtualized network functions (VNF) to the service-based architecture of 5G, which relies on a microservice infrastructure. Some key functions provided by CGAM:

- Communication: mTLS for any node-to-node communication, encrypting East-West or North-South traffic

- Packet Inspector: per-subscriber and per-service traffic visibility allows traceability for compliance tracking, troubleshooting, and billing purposes

- Control: DNS Controller, Ingress/Egress GWs, dashboards and other features allowing operators to control and monitor the traffic

Collaboration between F5 and Red Hat

F5 and Red Hat are collaborating in different initiatives, including work with Red Hat Enterprise Linux, Red Hat OpenStack Platform, Red Hat OpenShift and Red Hat Ansible Automation Platform to offer joint services, solutions, and platform integrations that simplify and accelerate the process of developing, deploying, and protecting enterprise applications.

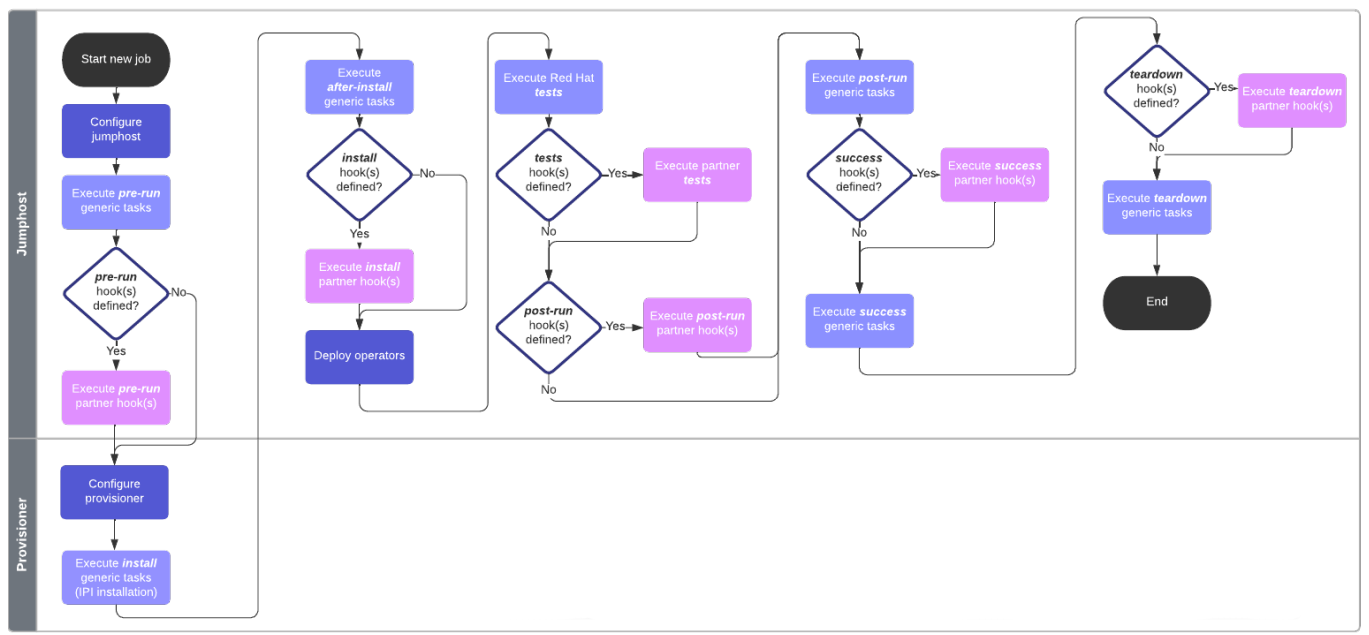

To integrate and test F5 SPK and CGAM with different OpenShift versions, the proposed solution is a custom, pre-packaged continuous integration process managed by Red Hat. Deployments are driven by pipelines, which chain the components and configurations used to trigger the Ansible playbooks that automate the deployment. These CI workflows are implemented with the Red Hat Distributed CI (DCI) tool.

By joining a wide community with over 10,000 CI jobs launched for different partner use cases, F5 is able to continuously test, validate, and pre-certify their products on OpenShift, while Red Hat can act on this feedback faster to fix bugs.

Enabling continuous integration workflows for F5 with Red Hat Distributed CI

The following diagram presents the main building blocks of DCI:

A full explanation of DCI and how it works is out of scope for this article (see the official docs or this article for more information), but generally one thing DCI can do is track resources deployed. These are referred to as components, which may refer to any artifact (file, package, URL) used in the deployment. A component can even refer to the RHOCP version used to build a cluster, or to the F5 product version deployed in a cluster.

The tracking of this information is mainly handled by Red Hat through DCI. F5’s responsibility in this scenario is to manage the hardware (bare metal or virtualized nodes) and to deploy RHOCP on it through an Ansible-based agent driven by pipeline logic. Several options are available to deploy RHOCP, such as IPI or the Assisted Installer.

In particular, the scenario covered for F5 testing and validation of SPK and CGAM consists of some Red Hat labs, accessible by F5, with the following configuration:

- Multiple RHOCP clusters, with 3 worker and 3 master nodes, which can be created and used simultaneously on demand

- The clusters are based on a disconnected environment and IPI deployment, using a jumphost machine to centralize access to external resources and to run the DCI automation, and a provisioner machine for each cluster to support the RHOCP installation

- Source code containing all pipeline configurations and Ansible playbooks that are launched to set up the desired scenario

The following diagram represents the description provided above:

You may be wondering how Ansible can help achieve the desired automation in this kind of scenario. An agent running in the partner's lab is used for this purpose, and DCI currently supports two types of agents:

- dci-openshift-agent: Install any RHOCP version using different deployments supported by Red Hat

- dci-openshift-app-agent: Deploy workloads on top of RHOCP, run tests, and certify them following Red Hat certification programs

Specific playbooks and roles are provided with each agent to support the execution. Apart from standard Ansible modules, they use some resources that make the automation journey easier:

- DCI imports Ansible projects to cover the different types of RHOCP deployments supported by Red Hat. For example, F5 testing and validation uses the IPI installation, which is publicly available through the openshift-kni/baremetal-deploy repository

- To interact with the RHOCP resources to be used in a deployment, Ansible's Kubernetes collection can help define specific Ansible tasks to control the lifecycle of RHOCP

The agents follow a well-defined workflow that separates the Ansible tasks to be performed on each part of the infrastructure. Below is the workflow for dci-openshift-agent, the agent used by F5 to run the DCI automation. It distinguishes between tasks that run on the jumphost (almost all stages) and tasks that run on the provisioner (only its own configuration and the RHOCP installation with the selected method):

Each stage (pre-run, install, tests, post-run, success and teardown) is composed of generic tasks, which are provided directly by the agent, and partner hooks, which are optional code that partners can provide to run custom deployments and configurations. In this case, partner hooks are used to deploy, configure, and test SPK and CGAM.
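The split between generic tasks and optional partner hooks can be sketched as a simple stage runner. This is a simplified model, not the agent's implementation: the function name is hypothetical, running the generic task before the hook within a stage is an assumption, and failure handling (for example, when the success or teardown stages actually run) is ignored.

```python
from typing import Callable, Dict, List

# Stage names as listed in the article.
STAGES = ["pre-run", "install", "tests", "post-run", "success", "teardown"]

Task = Callable[[], None]


def run_stages(generic_tasks: Dict[str, Task], partner_hooks: Dict[str, Task]) -> List[str]:
    """Run every stage in order and return a trace of what was executed."""
    executed: List[str] = []
    for stage in STAGES:
        if stage in generic_tasks:          # tasks shipped with the agent
            generic_tasks[stage]()
            executed.append(f"{stage}:agent")
        if stage in partner_hooks:          # optional partner-provided code
            partner_hooks[stage]()
            executed.append(f"{stage}:hook")
    return executed


def noop() -> None:
    """Placeholder for real work such as running a playbook."""


generic = {stage: noop for stage in STAGES}
hooks = {"install": noop, "tests": noop}    # e.g. deploy and test SPK or CGAM
trace = run_stages(generic, hooks)
```

Stages without a hook simply run the generic task, which is why hooks can stay optional.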

The execution of the proper tasks for each stage is controlled by the definition of the previously mentioned pipelines, which ultimately automate the deployment of the different jobs with the desired configuration. Pipelines are, in the end, YAML files containing all parameters and configuration needed for the CI system to achieve the desired scenario, such as:

- Location of Ansible configuration (agent playbooks, hooks playbooks)

- Network configuration

- RHOCP operators to activate (or not)

- Components that will be used (for both RHOCP and F5 or other partners)
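To make the list above concrete, a pipeline can be thought of as a mapping with one section per item. The sketch below models that as a Python dictionary with a small completeness check. All key names and values are hypothetical placeholders chosen for illustration; they are not the actual DCI pipeline schema.

```python
from typing import Any, Dict, Set

# Hypothetical section names mirroring the four items listed above.
REQUIRED_SECTIONS = {"playbooks", "network", "operators", "components"}


def missing_sections(pipeline: Dict[str, Any]) -> Set[str]:
    """Return the required sections that the pipeline does not define."""
    return REQUIRED_SECTIONS - set(pipeline)


# Placeholder values only; real pipelines are YAML files with their own keys.
pipeline = {
    "playbooks": {"agent": "agent-playbook.yml", "hooks": "hooks/"},
    "network": {"ip_stack": "ipv4"},
    "operators": {"example-operator": False},   # operators to activate (or not)
    "components": ["ocp-<version>", "spk-<version>"],
}

incomplete = missing_sections({"network": {}})
complete = missing_sections(pipeline)
```

A check like this would catch an incomplete pipeline before any job is launched.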

With all these tools in place, a successful run of all the pieces together results in:

- The availability of an OpenShift cluster with the desired configuration

- The execution of tests to evaluate the applied configuration

- A graphical report of the execution

You can visualize multiple reports, including the execution of a given deployment, and analytics with F5’s component coverage, which shows the percentage of successful jobs for each SPK version tested on DCI. Below you can see the component coverage for the SPK component in debug DCI jobs (not for production environments) using RHOCP 4.12.
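The coverage figure itself is a simple ratio: successful jobs divided by total jobs, per component version. A minimal sketch of that calculation follows. The function name, version labels, status strings, and job data are invented for illustration and do not reflect real DCI results.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def success_rate_by_version(jobs: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Return the percentage of successful jobs for each component version."""
    totals: Dict[str, int] = defaultdict(int)
    successes: Dict[str, int] = defaultdict(int)
    for version, status in jobs:
        totals[version] += 1
        if status == "success":
            successes[version] += 1
    return {v: 100.0 * successes[v] / totals[v] for v in totals}


# Invented sample data: (component version, job status).
jobs = [
    ("spk-a", "success"), ("spk-a", "failure"),
    ("spk-a", "failure"), ("spk-a", "failure"),
    ("spk-b", "success"), ("spk-b", "failure"),
]
rates = success_rate_by_version(jobs)
```

With this sample, one of four jobs succeeds for the first version and one of two for the second.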

SPK deployment steps and test cases

This is a summarized list of tasks that are executed for the SPK installation and configuration during the install stage, all implemented in F5 partner’s hooks using Ansible. This configuration is based on SPK v1.8.2, executed in RHOCP 4.14:

- Apply configuration variables from pipelines and F5 SPK custom CRD

- Label RHOCP nodes

- Create webhook configuration

- Create CoreDNS configuration

- Create SPK Cert Manager configuration

- Create SPK Fluentd configuration

- Create SPK DSSM configuration

- Create SPK CWC configuration

- Create SPK instances: SPK DNS46 and SPK Data

- Create SPK Conversion Webhook

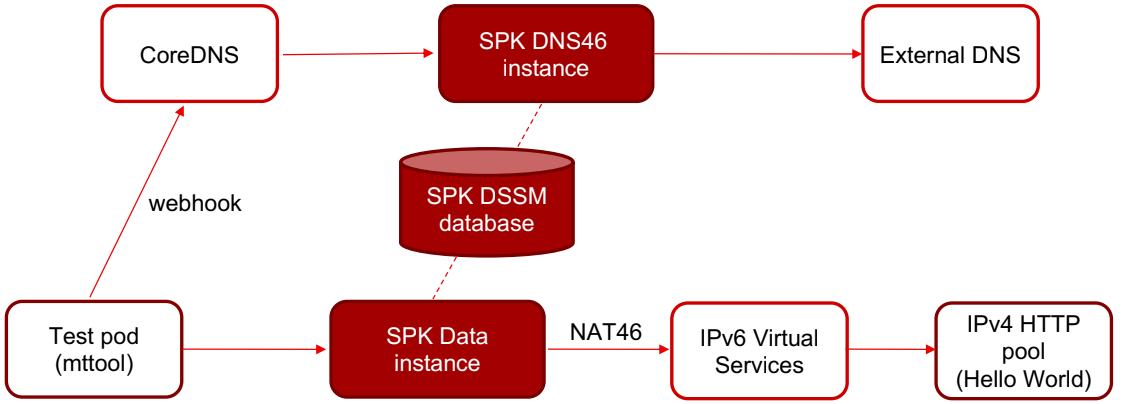

In an example test environment with an RHOCP cluster that is single-stack IPv4, a test pod (mttool) is IPv4 only. However, the “Hello World” FQDN is an AAAA record with an IPv6 address in the External DNS. So in this case, a separate SPK (SPK DNS46) is also in the flow to provide the DNS46 service:

In the tests hook, a test pod client (mttool) is created. Through the webhook, this pod forwards all its external DNS queries to CoreDNS. The CoreDNS pods egress all queries to SPK DNS46. The client pod needs to talk to Hello World, so it sends a DNS A query for Hello World. SPK DNS46 passes the A query to External DNS. However, as noted above, External DNS holds only AAAA records for Hello World with IPv6.

So the External DNS responds with an empty answer. SPK DNS46 resends the DNS query for Hello World as an AAAA query. The External DNS responds with an IPv6 address.

Using the DNS46 function, SPK DNS46 creates an IPv6-to-IPv4 mapping in the SPK DSSM database and passes the corresponding IPv4 address from the mapping to the client.

The client test pod starts sending TCP packets to port 80 of that IPv4 address towards the SPK Data instance.

SPK Data uses its NAT46 functionality to find the corresponding IPv6 address from the mapping in DSSM created by SPK DNS46 above.

SPK Data forwards the TCP packet to port 80 of the IPv6 Virtual Service, which is forwarded to the IPv4 HTTP pod.
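The DNS46 and NAT46 steps above can be simulated with a shared mapping table standing in for DSSM. This is a toy model under stated assumptions: the function names, the FQDN, the addresses, and the idea of drawing the IPv4 address from a small pool are all hypothetical, since the article does not describe how SPK chooses the mapped address.

```python
import ipaddress
from typing import Dict, Iterator, Optional


def dns46_resolve(name: str,
                  external_dns: Dict[str, Dict[str, str]],
                  dssm: Dict[str, str],
                  pool: Iterator) -> Optional[str]:
    """Answer an A query, translating an AAAA-only name to a mapped IPv4."""
    records = external_dns.get(name, {})
    if "A" in records:                 # native IPv4 answer, nothing to translate
        return records["A"]
    ipv6 = records.get("AAAA")         # empty A answer: retry as an AAAA query
    if ipv6 is None:
        return None
    for ipv4, mapped in dssm.items():  # reuse an existing mapping if present
        if mapped == ipv6:
            return ipv4
    ipv4 = str(next(pool))             # assumed allocation from a local pool
    dssm[ipv4] = ipv6                  # store the mapping (DSSM stand-in)
    return ipv4


def nat46_lookup(ipv4: str, dssm: Dict[str, str]) -> str:
    """Find the IPv6 destination for traffic sent to a mapped IPv4 address."""
    return dssm[ipv4]


# Illustrative data using documentation address ranges.
external_dns = {"hello-world.example.com": {"AAAA": "2001:db8::80"}}
dssm: Dict[str, str] = {}
pool = iter(ipaddress.ip_network("198.51.100.0/29").hosts())

client_ipv4 = dns46_resolve("hello-world.example.com", external_dns, dssm, pool)
target_ipv6 = nat46_lookup(client_ipv4, dssm)
```

The key point is that both functions read the same table: the DNS side writes the mapping, and the data side reads it when the client's TCP traffic arrives.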

Carrier-Grade Aspen Mesh deployment steps and test cases

Similar to SPK, several tasks have been implemented as F5 partner’s hooks to run the CGAM automation. The list below is based on CGAM 1.14.6-am5 running on RHOCP 4.14:

- Apply configuration variables from pipelines

- Extract Aspen Mesh installer and generate certificates

- Deploy istio base chart in istio-system namespace

- Verify CRDs are in place

- Deploy istio-cni chart in kube-system namespace

- Deploy istiod chart in istio-system namespace

- Deploy istio-ingress chart in istio-system namespace

- Install dnscontroller and citadel add-ons if required

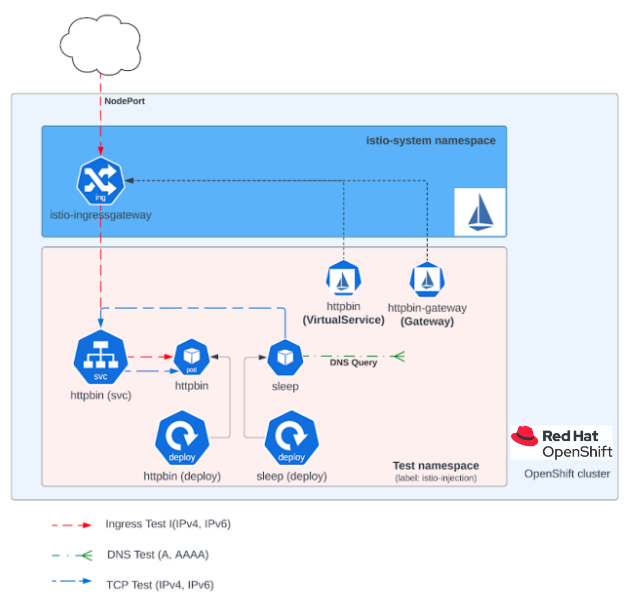

The testing scenario for the CGAM validation is presented in the following diagram:

Three types of test cases are run in this scenario:

- Gateway verify test: Verify that a pod configured with Istio is running the sidecar container from Aspen Mesh. The communication is verified by using the host IP of the pod and the NodePort of the gateway service

- Inter-namespace verify test: Verify communication between the namespaces under test

- Dual stack test: Finally, validate whether control and data planes are prepared for dual stack, verifying dual-stack DNS and TCP workflows from different points of the network
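As a small illustration of one ingredient of a dual-stack check, the helper below reports whether a set of addresses covers both IP families. It is only a sketch with a hypothetical function name and example addresses. The actual tests verify DNS and TCP workflows, which this snippet does not attempt.

```python
import ipaddress
from typing import Iterable


def is_dual_stack(addresses: Iterable[str]) -> bool:
    """Return True when the addresses include both IPv4 and IPv6."""
    versions = {ipaddress.ip_address(addr).version for addr in addresses}
    return versions == {4, 6}


# Illustrative addresses from private and documentation ranges.
dual = is_dual_stack(["10.0.0.1", "2001:db8::1"])
single = is_dual_stack(["10.0.0.1", "10.0.0.2"])
```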

Overcoming challenges and conclusions

To sum up, the platform automation built on the continuous integration process enabled by Red Hat Distributed CI has delivered the configuration and automation capabilities we drafted at the beginning of our joint work. This effort is helping Red Hat and F5 to move forward faster than ever before, tackling some major challenges including:

- Validating F5 solutions on different OpenShift versions, and verifying the latest available

- Parameterizing certain configurations (not only for 5G components, but also for OpenShift operators, network capabilities, common utilities, and so on) to customize each deployment

- Easily introducing new developments and fixes, to be tested in subsequent deployments

It is true that this achievement required some time to reach the desired configuration for the proposed platform automation. This explains why, in Figure 7, the reported success rate for the DCI jobs deployed with each SPK component is less than 50%.

We have such a considerable number of failed tests because we are in a testing and validation process. In these negative cases, DCI detects issues early in the deployment of RHOCP or F5 solutions, generating useful logs and feedback for Red Hat and F5 engineers. This information is used to propose a fix for the issue and to improve the pipelines and the automation, and this is where the time is spent. While this work is ongoing, the solution is retested and keeps producing negative results until the issue is eventually resolved.

So, in the end, this low success rate reflects ongoing work between Red Hat and F5 to improve their solutions through troubleshooting and testing activities such as quickly checking new releases, detecting bugs, and fixing them as soon as possible, so that we eventually achieve an automated deployment without errors. The next goals in this joint work are to minimize the time needed to reach a stable solution after an issue is detected, thus maximizing the success rate of F5 product components on DCI.

About the authors

Ramon Perez works at Red Hat as a Software Integration Engineer. He has a Ph.D. in Telematics Engineering, having expertise in virtualization and networking. He is passionate about technology and research, and always willing to learn new knowledge and make use of open-source technologies.

Manuel Rodriguez works at Red Hat as a Software Engineer. He has studied and worked with open source projects throughout his career in different domains such as security, administration, and automation. He likes learning new technologies and contributing to the community.

Over 20 years’ experience in SP technology. Started as Network Design Engineer in 2001, launched Sprint/T-Mobile’s 3G network on CDMA. Previously also worked for Nokia on LTE and IMS technologies.

Over 14 years of experience working with SP business, I worked as a Network Integration Engineer with Ericsson mainly focused on 3G and 4G LTE Packet Core. Thereafter, I worked as a Technical Marketing Engineer with Cisco SP business unit – Presales of 4G and 5G mobility products. Currently working as Solutions Engineer with F5 Networks mainly focused on Service Proxy for Kubernetes, cloud native applications, and functions.
