Support for Microsoft Windows-based applications, along with the technologies common to a Windows environment, continues to be a popular topic in both the Kubernetes and OpenShift ecosystems. As these types of workloads have become more readily available through the introduction of Windows Containers support in Kubernetes, providing a seamless integration has never been more important. A prior article, written for OpenShift 3, described how one could leverage a Kubernetes persistent storage plugin type called FlexVolumes to consume Common Internet File System (CIFS) shares, a common type of shared storage that is typically used by Windows workloads. A FlexVolume is essentially an executable that resides on the master and node instances and contains the logic needed to manage access to a storage backend for its primary operations, such as mounting and unmounting volumes.

Today, in OpenShift 4, FlexVolumes are still a supported storage provider, but the Kubernetes community emphasizes a standards-based approach for consuming storage. The Container Storage Interface (CSI) has become the de facto standard for exposing file and block storage to containerized workloads, as it leverages the Kubelet plugin registration mechanism to allow externalized storage to integrate with the platform. One of the key benefits of CSI is that it no longer requires modifying the Kubernetes codebase itself (in-tree), as was required previously. Ongoing work in the community aims to migrate all storage plugins to the CSI specification, including prior FlexVolume-based implementations for CIFS. The csi-driver-smb project within the Kubernetes CSI group has been working on a CSI-based solution for CIFS and recently announced its first release. With a new viable option available, the remainder of this article demonstrates how this project can be implemented in an OpenShift environment to consume a set of existing CIFS shares. For a deeper dive, the CSI developer documentation provides a wealth of information on the specification as a whole and how it can be leveraged.

As with any installation and configuration of persistent storage in a Kubernetes environment, elevated cluster access privileges are required. Be sure that you have the OpenShift command-line tools installed locally and are logged in to a cluster.
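A quick pre-flight check can confirm these prerequisites before continuing. The following is a minimal sketch: the function name check_cluster_access is hypothetical, and it assumes that being allowed to create PersistentVolumes is a reasonable proxy for the elevated privileges this walkthrough needs.

```shell
# Hypothetical helper: confirms the oc client is present, a session is active,
# and the current user may create cluster-scoped PersistentVolumes.
check_cluster_access() {
  if ! command -v oc >/dev/null 2>&1; then
    echo "oc client not found on PATH" >&2
    return 1
  fi
  if ! oc whoami >/dev/null 2>&1; then
    echo "not logged in to a cluster (run 'oc login' first)" >&2
    return 1
  fi
  # 'oc auth can-i' prints yes or no on standard output.
  if [ "$(oc auth can-i create persistentvolumes 2>/dev/null)" != "yes" ]; then
    echo "current user cannot create PersistentVolumes" >&2
    return 1
  fi
  echo "cluster access looks sufficient"
}

# Usage: check_cluster_access
```

Installing the driver itself may require further permissions, so a passing check is a starting point rather than a guarantee.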

The first step is to install the CSIDriver resource, which enables discovery of all defined CSI drivers along with any customizations needed for Kubernetes to interact with the driver. Execute the following command to add the resource to the cluster:

$ oc apply -f https://raw.githubusercontent.com/kubernetes-csi/csi-driver-smb/master/deploy/csi-smb-driver.yaml

With the CSI driver now defined, deploy a DaemonSet to the cluster to make the driver available to mount CIFS shares on all nodes. CSI drivers make use of several standard sidecar containers that aim to alleviate many of the common tasks needed during the lifecycle of a deployed driver. This allows the developer to focus on fulfilling the three primary functions of the CSI specification:

- Identity - Enables Kubernetes to identify the driver and the functionality that it supports

- Node - Runs on each node and handles the volume operations requested by the Kubelet, such as mounting and unmounting

- Controller - Responsible for managing the volume, such as attaching and detaching

Each of these components is packaged into a single container and deployed with the rest of the standard CSI sidecar containers in a DaemonSet.

Execute the following command to deploy the DaemonSet to the cluster:

$ oc apply -f https://raw.githubusercontent.com/kubernetes-csi/csi-driver-smb/master/deploy/csi-smb-node.yaml
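Before moving on, it can help to confirm that both manifests took effect. The sketch below is not part of the upstream project: verify_smb_driver is a hypothetical helper, and the DaemonSet name csi-smb-node and the kube-system namespace are assumptions about the upstream manifests that should be checked against the files you applied.

```shell
# Hypothetical helper: confirms the CSIDriver object exists and waits for the
# node DaemonSet to finish rolling out.
# Assumption: the manifests create a DaemonSet named csi-smb-node in the
# kube-system namespace; pass different values if yours differ.
verify_smb_driver() {
  local namespace="${1:-kube-system}"
  local daemonset="${2:-csi-smb-node}"
  oc get csidriver smb.csi.k8s.io || return 1
  oc rollout status "daemonset/${daemonset}" -n "${namespace}" --timeout=120s
}

# Usage: verify_smb_driver
```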

After the DaemonSet starts and the driver registers with the Kubelet, the driver is listed in the CSINode resource of each node in the cluster, which can be viewed by executing the following command:

$ oc get csinode

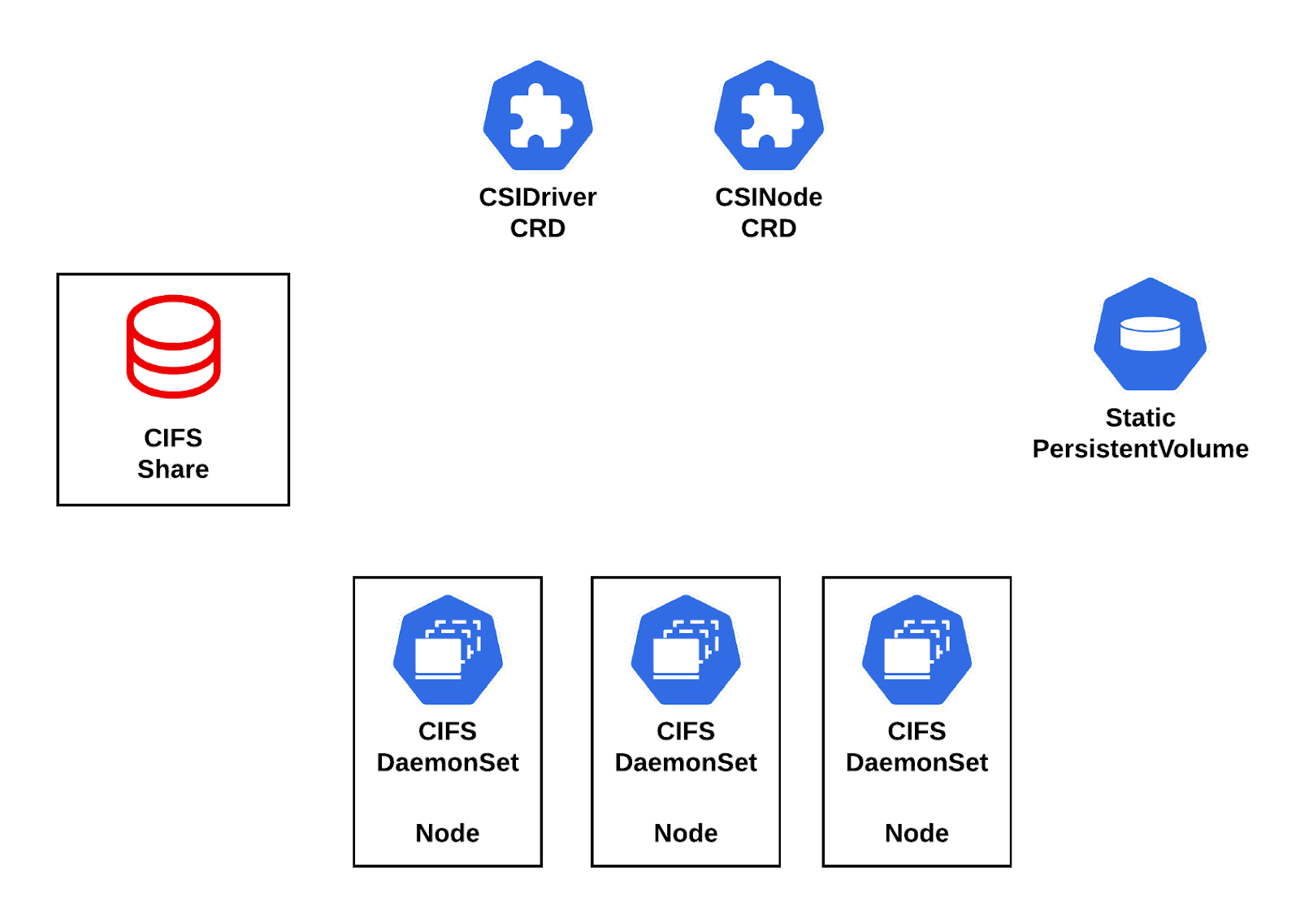
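To see at a glance which drivers each node reports, the same query can be narrowed with a JSONPath template. This is a small sketch, and the function name list_csi_drivers_per_node is hypothetical:

```shell
# Hypothetical helper: prints each node name followed by the CSI drivers
# registered on it, one node per line, separated by a tab.
list_csi_drivers_per_node() {
  oc get csinode \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.drivers[*].name}{"\n"}{end}'
}

# Usage: list_csi_drivers_per_node
```

Every node that should mount CIFS shares is expected to list smb.csi.k8s.io.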

With the CSI driver installed, CIFS shares can be used by OpenShift. The following diagram depicts the key components of the CIFS CSI driver architecture:

To demonstrate how OpenShift can use CIFS as a storage backend, let’s create a new project in which to deploy a sample application:

$ oc new-project cifs-csi-demo

The next step is to statically define PersistentVolumes to associate with any existing CIFS shares. CSI drivers, depending on their capabilities, can leverage either statically defined PersistentVolumes or dynamically provision PersistentVolumes at runtime. This CSI driver only supports the use of statically defined, pre-provisioned PersistentVolumes at this time. A statically defined PersistentVolume for a CIFS share takes the following format:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: <name>
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: <size>
  csi:
    driver: smb.csi.k8s.io
    volumeAttributes:
      source: //<host>/<share>
    volumeHandle: <unique_id>
    nodeStageSecretRef:
      name: <credentials_secret_name>
      namespace: <credentials_secret_namespace>
  mountOptions:
    - <array_mount_options>
  persistentVolumeReclaimPolicy: Retain
  volumeMode: Filesystem

With an understanding of the structure of the PersistentVolume, let’s create a secret that contains the credentials for accessing the share. A secret must be created even if the share can be accessed anonymously. Create a new secret called cifs-csi-credentials by specifying the username, password, and domain (an optional parameter) as secret key literals as shown below:

$ oc create secret generic cifs-csi-credentials --from-literal=username=<username> --from-literal=password=<password>
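The command above omits the optional domain key. The sketch below shows one way to include it only when it is needed. The function name create_cifs_secret is hypothetical, and it assumes the secret belongs in the cifs-csi-demo project created earlier.

```shell
# Hypothetical helper: creates the credentials secret, adding the optional
# domain key only when a third argument is supplied.
# Assumption: the secret lives in the cifs-csi-demo project created earlier.
create_cifs_secret() {
  local username="$1" password="$2" domain="${3:-}"
  # Rebuild the positional parameters as the list of literals to pass to oc.
  set -- --from-literal=username="${username}" --from-literal=password="${password}"
  if [ -n "${domain}" ]; then
    set -- "$@" --from-literal=domain="${domain}"
  fi
  oc create secret generic cifs-csi-credentials -n cifs-csi-demo "$@"
}

# Usage: create_cifs_secret '<username>' '<password>' '<domain>'
```

Naming the namespace explicitly keeps the secret in a known location regardless of the currently selected project, which matters because the PersistentVolume refers to the secret by both name and namespace.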

Once the secret has been created, the name of the secret along with the namespace can be added to the PersistentVolume under the nodeStageSecretRef field.

Next, specify a name for the PersistentVolume underneath the metadata section and provide a size that represents the amount of storage backing this volume. Kubernetes does not enforce quota limitations at a storage level, so any reasonable value can be provided.

Specify the host and share of the existing CIFS share in the source field under the volumeAttributes section.

The volumeHandle field represents a unique value for the share. It can be set to any value (for example, cifs-demo-share).

The final field that needs to be set is an array of mount options that will be used when mounting the share. At a minimum, the following mount options should be set:

mountOptions:
  - dir_mode=0777
  - file_mode=0777
  - vers=3.0

If the CIFS share should be accessed anonymously, without the need for a username and password, add guest as an additional mount option.
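As an illustration of how the two variants differ, the following sketch prints the mountOptions block with or without the guest entry. The function name render_mount_options and its "anonymous" argument are hypothetical, and the output still needs to be indented under spec when it is pasted into a PersistentVolume.

```shell
# Hypothetical helper: prints the mountOptions block for a PersistentVolume,
# appending the guest option when "anonymous" is passed as the first argument.
render_mount_options() {
  printf 'mountOptions:\n'
  # printf reuses the format string for each remaining argument.
  printf '  - %s\n' dir_mode=0777 file_mode=0777 vers=3.0
  if [ "${1:-}" = "anonymous" ]; then
    printf '  - guest\n'
  fi
}

# Usage: render_mount_options anonymous
```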

Once each of these fields has been set, the PersistentVolume resembles the following:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: cifs-csi-demo
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 10Gi
  csi:
    driver: smb.csi.k8s.io
    volumeAttributes:
      source: //<host>/<share>
    volumeHandle: cifs-demo-share
    nodeStageSecretRef:
      name: cifs-csi-credentials
      namespace: cifs-csi-demo
  mountOptions:
    - dir_mode=0777
    - file_mode=0777
    - vers=3.0
  persistentVolumeReclaimPolicy: Retain
  volumeMode: Filesystem

Save the contents to a file called cifs-csi-demo-pv.yaml and execute the following command to create the PersistentVolume:

$ oc create -f cifs-csi-demo-pv.yaml

Check the status of the PersistentVolume:

$ oc get pv cifs-csi-demo

NAME            CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
cifs-csi-demo   10Gi       RWX            Retain           Available                                   2s

Now, create a PersistentVolumeClaim to associate with the PersistentVolume created above:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cifs-csi-demo
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
  storageClassName: ""
  volumeMode: Filesystem
  volumeName: cifs-csi-demo

Add the contents of the PersistentVolumeClaim to a file called cifs-csi-demo-pvc.yaml and execute the following command to add the PersistentVolumeClaim to the cluster:

$ oc create -f cifs-csi-demo-pvc.yaml

After a few moments, verify that the PersistentVolumeClaim has been bound to the PersistentVolume previously created:

$ oc get pvc cifs-csi-demo

NAME            STATUS   VOLUME          CAPACITY   ACCESS MODES   STORAGECLASS   AGE
cifs-csi-demo   Bound    cifs-csi-demo   10Gi       RWX                           32s
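Rather than re-running the command until the status changes, the check can be scripted. The following is a minimal polling sketch: wait_for_phase is a hypothetical helper, and the default of 30 attempts with a two-second pause is an arbitrary assumption.

```shell
# Hypothetical helper: polls a resource until .status.phase matches the
# expected value or the number of attempts is exhausted.
wait_for_phase() {
  local resource="$1" expected="$2" attempts="${3:-30}"
  local phase=""
  while [ "${attempts}" -gt 0 ]; do
    phase="$(oc get "${resource}" -o jsonpath='{.status.phase}' 2>/dev/null)"
    if [ "${phase}" = "${expected}" ]; then
      echo "${resource} is ${phase}"
      return 0
    fi
    attempts=$((attempts - 1))
    sleep 2
  done
  echo "${resource} did not reach ${expected} (last phase: ${phase:-unknown})" >&2
  return 1
}

# Usage: wait_for_phase pvc/cifs-csi-demo Bound
```

The same helper works for the PersistentVolume, for example with pv/cifs-csi-demo as the resource, since both resources report their state in status.phase.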

Finally, let's deploy a simple application that will write the current date to a file located on the CIFS share.

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: cifs
  name: deployment-smb-secure
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cifs
  template:
    metadata:
      labels:
        app: cifs
      name: deployment-smb-secure
    spec:
      containers:
        - name: deployment-smb-secure
          image: registry.redhat.io/ubi8/ubi:latest
          command:
            - "/bin/sh"
            - "-c"
            - while true; do echo $(date) >> /mnt/smb/outfile; sleep 1; done
          volumeMounts:
            - name: smb
              mountPath: "/mnt/smb"
              readOnly: false
      volumes:
        - name: smb
          persistentVolumeClaim:
            claimName: cifs-csi-demo
  strategy:
    rollingUpdate:
      maxSurge: 0
      maxUnavailable: 1
    type: RollingUpdate

Save the contents to a file called cifs-csi-demo-deployment.yaml and execute the following command to add the deployment to the cluster:

$ oc create -f cifs-csi-demo-deployment.yaml

The CIFS share specified in the PersistentVolume is then mounted on the node where the pod associated with the deployment is scheduled.

Display the log file that is being appended to within the CIFS share by executing the following command:

$ oc rsh $(oc get pods -o jsonpath='{.items[*].metadata.name}') cat /mnt/smb/outfile

Wed Jul 1 02:35:02 UTC 2020
Wed Jul 1 02:35:03 UTC 2020
Wed Jul 1 02:35:04 UTC 2020
Wed Jul 1 02:35:05 UTC 2020
Wed Jul 1 02:35:06 UTC 2020
Wed Jul 1 02:35:07 UTC 2020
Wed Jul 1 02:35:08 UTC 2020
Wed Jul 1 02:35:09 UTC 2020
Wed Jul 1 02:35:10 UTC 2020
Wed Jul 1 02:35:11 UTC 2020
Wed Jul 1 02:35:12 UTC 2020
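The command above expands to every pod name in the project, so it only works while a single pod exists. As an alternative sketch, oc can be pointed at the deployment and left to choose one of its pods. The function name tail_cifs_outfile is hypothetical, and it assumes the deployment name used in this article.

```shell
# Hypothetical helper: prints the last lines of the file written by the demo
# deployment, letting oc pick a pod that belongs to it.
tail_cifs_outfile() {
  local lines="${1:-5}"
  oc exec deployment/deployment-smb-secure -- tail -n "${lines}" /mnt/smb/outfile
}

# Usage: tail_cifs_outfile 10
```

Because the file grows by one line per second, reading only the tail keeps the output manageable on a long-running deployment.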

Now that you have verified logs are being written to the CIFS share from within OpenShift, as an additional validation step, you can confirm that the file is being written to the CIFS share by directly accessing the share from outside of OpenShift.
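One way to perform that external check from a Linux machine is with the Samba smbclient tool. The sketch below assumes smbclient is installed and that the machine can reach the file server. The function name check_outfile_on_share is hypothetical, and the host, share, and username arguments are placeholders for your own values.

```shell
# Hypothetical helper: lists the outfile entry on the share from outside the
# cluster; smbclient prompts for the password of the given user.
check_outfile_on_share() {
  local host="$1" share="$2" username="$3"
  smbclient "//${host}/${share}" -U "${username}" -c 'ls outfile'
}

# Usage: check_outfile_on_share '<host>' '<share>' '<username>'
```

Running the check twice a few seconds apart should show the file size increasing while the deployment is running.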

While this scenario merely validated that applications running in OpenShift can communicate with persistent storage hosted on CIFS shares, it demonstrated that a standards-based approach to accessing storage backends through the Container Storage Interface (CSI) can unlock capabilities beyond the feature set included with Kubernetes and OpenShift and drive new workloads onto the platform. A future enhancement to the CIFS CSI driver could add support for managing PersistentVolumes dynamically, eliminating the need for the cluster administrator to manually provision volumes prior to their use.

About the author

Andrew Block is a Distinguished Architect at Red Hat, specializing in cloud technologies, enterprise integration and automation.
