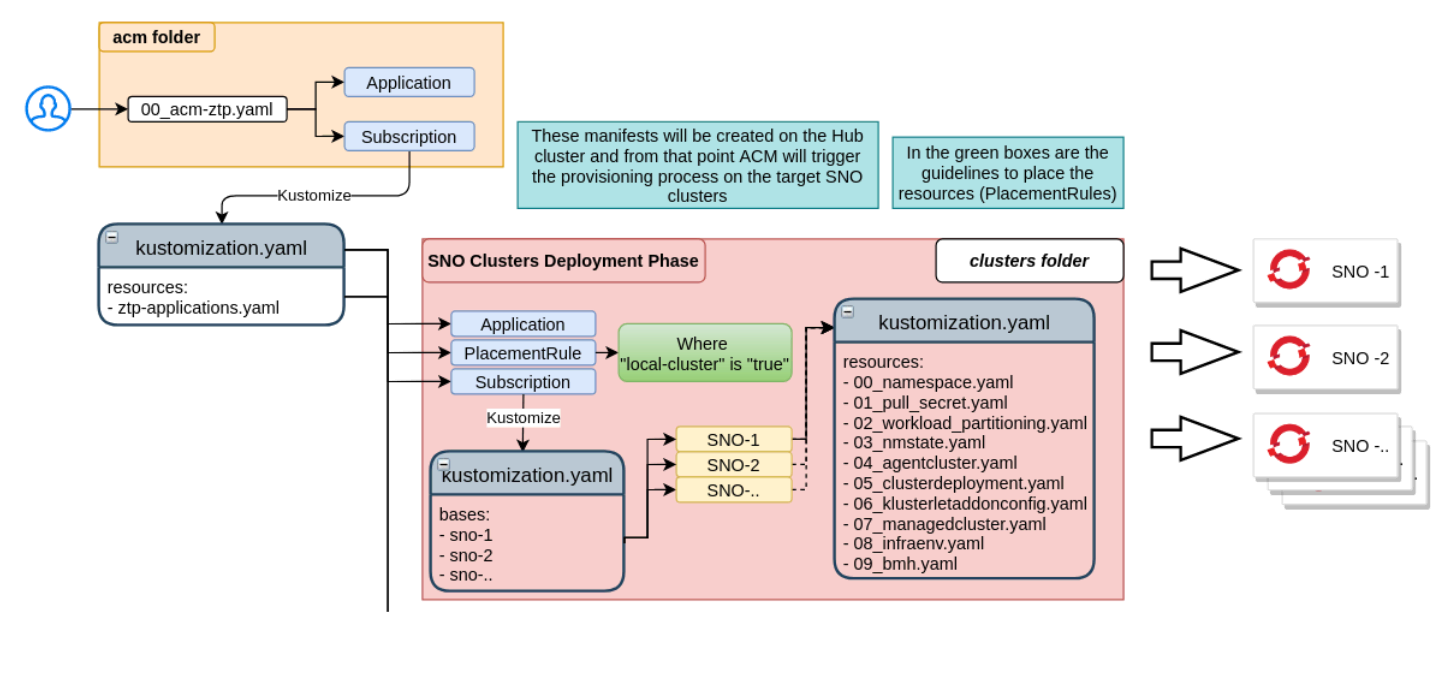

Zero touch provisioning (ZTP) is a technique that deploys new edge sites with declarative configurations of bare-metal equipment. Template or overlay configurations install Red Hat OpenShift features required for edge workloads. All configurations are declarative and managed with Red Hat Advanced Cluster Management for Kubernetes.

ZTP leverages Red Hat Advanced Cluster Management and GitOps approaches to manage edge sites remotely. The service is optimized for single-node OpenShift (SNO) and three-node OpenShift. I will deploy a SNO scenario for this example.

In Red Hat Advanced Cluster Management, configure channels, which hold the repository URLs and secrets used to connect to Git, and subscriptions, which reference Git paths, branches or tags. Kustomize generates the configuration by merging the base templates with the overlay for a specific site.
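As a rough illustration of the channel and subscription pair described above, the following sketch shows one possible shape. The names `ztp-git`, `ztp`, `git-credentials` and the repository URL are hypothetical placeholders, not values taken from my repository, and the Git path assumes the overlay layout used later in this article.

```yaml
---
# Channel: tells Red Hat Advanced Cluster Management where the Git repo lives.
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: ztp-git                 # hypothetical name
  namespace: ztp                # hypothetical namespace
spec:
  type: Git
  pathname: https://github.com/example-org/ztp.git   # placeholder URL
  secretRef:
    name: git-credentials       # hypothetical Secret with the Git credentials
---
# Subscription: selects a branch and path inside the channel's repository.
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: sno1
  namespace: ztp
  annotations:
    apps.open-cluster-management.io/git-branch: main
    apps.open-cluster-management.io/git-path: environments/overlays/sno1
spec:
  channel: ztp/ztp-git          # <channel namespace>/<channel name>
  placement:
    local: true                 # apply the manifests on the hub cluster itself
```

Setting `placement.local` to `true` is one way to have the hub apply the site manifests to itself, which is where the assisted installer resources need to exist.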

The base directory is used as a template and contains all global information. Inside the overlay folder is a subfolder for each SNO containing the specific configuration for the given site.
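A minimal sketch of how the two kustomization files could be wired together is shown below. It assumes the file names from the repository tree shown later in this article and assumes that each overlay file is a patch addressing a resource with the same kind and name as in the base. The actual files in my repository may be organized differently.

```yaml
---
# environments/base/kustomization.yaml (sketch)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - 00-namespace.yaml
  - 01-bmh.yaml
  - 02-pull-secret.yaml
  - 03-agentclusterinstall.yaml
  - 04-clusterdeployment.yaml
  - 05-klusterletaddonconfig.yaml
  - 06-managedcluster.yaml
  - 07-infraenv.yaml
---
# environments/overlays/sno1/kustomization.yaml (sketch)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base                  # pull in every global template
patches:
  # Assumption: each patch file matches a base resource by kind and name.
  - path: 01-bmh.yaml
  - path: 03-agentclusterinstall.yaml
  - path: 07-infraenv.yaml
```

Only the overlay files that differ from the base need to be listed as patches, which is what keeps site-specific data separate from the global templates.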

Use the files in my GitHub repository as examples and templates.

SNO installation steps

The assisted installer and Red Hat Advanced Cluster Management execute the following steps automatically. The list is for explanation only; the SNO installation requires no manual action:

- The assisted installer generates an ISO image used for discovery.

- The discovery ISO is mounted using the Redfish API.

- The host boots from the discovery ISO. DNS services resolve the URL exposed by the assisted installer (assisted-image-service route), and the rootfs image is downloaded.

- By default, the discovery network is configured via DHCP.

- The agent service on the host starts the discovery and registers with the Red Hat Advanced Cluster Management Hub. The AgentClusterInstall and ClusterDeployment CRs define install configurations like cluster version, name, networking, encryption, SSH and proxies.

- The cluster installation begins. When complete, the ManagedCluster and KlusterletAddonConfig CRs trigger the registration of the cluster to Red Hat Advanced Cluster Management.
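The cluster version mentioned in the steps above is not written directly into the AgentClusterInstall CR. Instead, the CR references a ClusterImageSet by name. The following sketch assumes the image set name used in the manifests later in this article; the release image tag is an assumption derived from that name.

```yaml
---
apiVersion: hive.openshift.io/v1
kind: ClusterImageSet
metadata:
  name: img4.12.14-x86-64-appsub   # name referenced by imageSetRef in the AgentClusterInstall
spec:
  # Assumed release image matching the 4.12.14 x86_64 name above
  releaseImage: quay.io/openshift-release-dev/ocp-release:4.12.14-x86_64
```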

Prerequisites

- Enable the Redfish API on the hardware at the remote location.

- At least one hub cluster must exist for management (Red Hat Advanced Cluster Management as a single pane of glass).

- Configure the network infrastructure (DNS, DHCP and NTP) and network firewall rules for connectivity.

- One or more Git repos containing manifests for the following:

  - Day 1 ZTP deployment (site config custom resources (CRs)).

  - Day 2 infrastructure configuration (governance policies).

  - Application-related manifests (using Red Hat Advanced Cluster Management application subscriptions or Red Hat GitOps).

- A vault for storing the secrets used by the External Secrets Operator (only needed if you manage secrets with that operator).
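If you choose the External Secrets Operator route, a resource along the following lines could pull the BMC credentials from the vault instead of storing them in Git. This is only a sketch: the `vault-backend` store name and the `ztp/sno1/bmc` vault path are hypothetical, and the `v1beta1` API version is an assumption that depends on the operator release you run.

```yaml
---
apiVersion: external-secrets.io/v1beta1   # assumed API version
kind: ExternalSecret
metadata:
  name: bmc-credentials
  namespace: sno1
spec:
  refreshInterval: 1h
  secretStoreRef:
    kind: ClusterSecretStore
    name: vault-backend          # hypothetical store pointing at the vault
  target:
    name: bmc-credentials        # Secret name the BareMetalHost refers to
  data:
    - secretKey: username
      remoteRef:
        key: ztp/sno1/bmc        # hypothetical vault path
        property: username
    - secretKey: password
      remoteRef:
        key: ztp/sno1/bmc
        property: password
```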

Git structure

The Git structure for SNO provisioning is divided into base and overlays, which lets kustomize keep common information in one place instead of duplicating it for every site. The directory tree in the example use case later in this article shows this layout.

Installing Red Hat Advanced Cluster Management

- Install the operator:

      ---
      apiVersion: v1
      kind: Namespace
      metadata:
        name: open-cluster-management
      ---
      apiVersion: operators.coreos.com/v1
      kind: OperatorGroup
      metadata:
        name: open-cluster-management
        namespace: open-cluster-management
      spec:
        targetNamespaces:
          - open-cluster-management
      ---
      apiVersion: operators.coreos.com/v1alpha1
      kind: Subscription
      metadata:
        name: advanced-cluster-management
        namespace: open-cluster-management
      spec:
        channel: release-2.7
        installPlanApproval: Automatic
        name: advanced-cluster-management
        source: redhat-operators
        sourceNamespace: openshift-marketplace
      ---
      apiVersion: operator.open-cluster-management.io/v1
      kind: MultiClusterHub
      metadata:
        name: multiclusterhub
        namespace: open-cluster-management
      spec: {}

- Define the AgentServiceConfig resource and the Metal3 provisioning server using the code below:

      ---
      apiVersion: agent-install.openshift.io/v1beta1
      kind: AgentServiceConfig
      metadata:
        name: agent
      spec:
        databaseStorage:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: <db_volume_size> # For example: 10Gi
        filesystemStorage:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: <fs_volume_size> # For example: 100Gi
        imageStorage:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: <image_volume_size> # For example: 50Gi

- Proceed with the configuration of the Metal3 provisioning server resource:

      apiVersion: metal3.io/v1alpha1
      kind: Provisioning
      metadata:
        name: metal3-provisioning
      spec:
        provisioningNetwork: Disabled # [ Managed | Unmanaged | Disabled ]
        virtualMediaViaExternalNetwork: true
        watchAllNamespaces: true

- Set the Red Hat Advanced Cluster Management role-based access control (RBAC) configuration:

      oc adm policy add-cluster-role-to-user --rolebinding-name=open-cluster-management:subscription-admin open-cluster-management:subscription-admin kube:admin
      oc adm policy add-cluster-role-to-user --rolebinding-name=open-cluster-management:subscription-admin open-cluster-management:subscription-admin system:admin

Example use case

    ztp/
    ├── README.md
    ├── environments
    │   ├── base
    │   │   ├── 00-namespace.yaml
    │   │   ├── 01-bmh.yaml
    │   │   ├── 02-pull-secret.yaml
    │   │   ├── 03-agentclusterinstall.yaml
    │   │   ├── 04-clusterdeployment.yaml
    │   │   ├── 05-klusterletaddonconfig.yaml
    │   │   ├── 06-managedcluster.yaml
    │   │   ├── 07-infraenv.yaml
    │   │   └── kustomization.yaml
    │   └── overlays
    │       ├── sno1
    │       │   ├── 00-namespace.yaml
    │       │   ├── 01-bmh.yaml
    │       │   ├── 02-pull-secret.yaml
    │       │   ├── 03-agentclusterinstall.yaml
    │       │   ├── 04-clusterdeployment.yaml
    │       │   ├── 05-klusterletaddonconfig.yaml
    │       │   ├── 06-managedcluster.yaml
    │       │   ├── 07-infraenv.yaml
    │       │   └── kustomization.yaml
    │       └── sno2
    │           ├── 00-namespace.yaml
    │           ├── 01-bmh.yaml
    │           ├── 02-pull-secret.yaml
    │           ├── 03-agentclusterinstall.yaml
    │           ├── 04-clusterdeployment.yaml
    │           ├── 05-klusterletaddonconfig.yaml
    │           ├── 06-managedcluster.yaml
    │           ├── 07-infraenv.yaml
    │           └── kustomization.yaml
    └── policies
        ├── policy-htpasswd.yaml
        ├── policy-lvms.yaml
        └── policy-registry.yaml

Using OpenShift GitOps and SiteConfig custom resource definitions (CRDs)

    siteconfig
    ├── environments
    │   ├── site1-sno-du.yaml
    │   ├── site2-standard-du.yaml
    │   └── extra-manifest
    │       └── 01-example-machine-config.yaml
    └── policies
        ├── policy-htpasswd.yaml
        ├── policy-lvms.yaml
        └── policy-registry.yaml

Manifest

To speed up the provisioning of the SNO on a single system, I did not install OpenShift GitOps. Therefore, I could not use a single SiteConfig manifest like the following one:

    apiVersion: ran.openshift.io/v1
    kind: SiteConfig
    metadata:
      name: "<site_name>"
      namespace: "<site_name>"
    spec:
      baseDomain: "example.com"
      pullSecretRef:
        name: "assisted-deployment-pull-secret"
      clusterImageSetNameRef: "openshift-4.10"
      sshPublicKey: "ssh-rsa AAAA..."
      clusters:
        - clusterName: "<site_name>"
          networkType: "OVNKubernetes"
          clusterLabels:
            common: true
            group-du-sno: ""
            sites: "<site_name>"
          clusterNetwork:
            - cidr: 1001:1::/48
              hostPrefix: 64
          machineNetwork:
            - cidr: 1111:2222:3333:4444::/64
          serviceNetwork:
            - 1001:2::/112
          additionalNTPSources:
            - 1111:2222:3333:4444::2
          #crTemplates:
          #  KlusterletAddonConfig: "KlusterletAddonConfigOverride.yaml"
          nodes:
            - hostName: "example-node.example.com"
              role: "master"
              #biosConfigRef:
              #  filePath: "example-hw.profile"
              bmcAddress: idrac-virtualmedia://<out_of_band_ip>/<system_id>/
              bmcCredentialsName:
                name: "bmh-secret"
              bootMACAddress: "AA:BB:CC:DD:EE:11"
              bootMode: "UEFI"
              rootDeviceHints:
                wwn: "0x11111000000asd123"
              cpuset: "0-1,52-53"
              nodeNetwork:
                interfaces:
                  - name: eno1
                    macAddress: "AA:BB:CC:DD:EE:11"
                config:
                  interfaces:
                    - name: eno1
                      type: ethernet
                      state: up
                      ipv4:
                        enabled: false
                      ipv6:
                        enabled: true
                        address:
                          - ip: 1111:2222:3333:4444::aaaa:1
                            prefix-length: 64
                  dns-resolver:
                    config:
                      search:
                        - example.com
                      server:
                        - 1111:2222:3333:4444::2
                  routes:
                    config:
                      - destination: ::/0
                        next-hop-interface: eno1
                        next-hop-address: 1111:2222:3333:4444::1
                        table-id: 254

The SiteConfig CRD simplifies cluster deployment by generating the following CRs based on a SiteConfig CR instance:

- AgentClusterInstall

- ClusterDeployment

- NMStateConfig

- KlusterletAddonConfig

- ManagedCluster

- InfraEnv

- BareMetalHost

- HostFirmwareSettings

- ConfigMap for extra-manifest configurations
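To make the list above more concrete, here is a sketch of what an NMStateConfig CR derived from the `nodeNetwork` section of the SiteConfig sample might look like. It reuses the interface, address and route values from that sample. The `sno1` names and the `nmstate-label` label key are assumptions for illustration; an InfraEnv would select this CR through its `nmStateConfigLabelSelector` field.

```yaml
---
apiVersion: agent-install.openshift.io/v1beta1
kind: NMStateConfig
metadata:
  name: sno1                     # assumed site name
  namespace: sno1
  labels:
    nmstate-label: sno1          # hypothetical label matched by the InfraEnv
spec:
  # Maps the logical interface name to the physical MAC address.
  interfaces:
    - name: eno1
      macAddress: "AA:BB:CC:DD:EE:11"
  # Static network configuration in NMState format.
  config:
    interfaces:
      - name: eno1
        type: ethernet
        state: up
        ipv4:
          enabled: false
        ipv6:
          enabled: true
          address:
            - ip: 1111:2222:3333:4444::aaaa:1
              prefix-length: 64
    dns-resolver:
      config:
        search:
          - example.com
        server:
          - 1111:2222:3333:4444::2
    routes:
      config:
        - destination: ::/0
          next-hop-interface: eno1
          next-hop-address: 1111:2222:3333:4444::1
          table-id: 254
```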

Because I did not use a SiteConfig CR, I created the corresponding CRs manually in the Git repo, as shown below:

    ---
    apiVersion: v1
    kind: Namespace
    metadata:
      annotations:
        apps.open-cluster-management.io/do-not-delete: 'true'
      labels:
        kubernetes.io/metadata.name: sno1
      name: sno1
    ---
    apiVersion: v1
    data:
      password: XXXXXXXXXXXXX
      username: XXXXXXXXXXXXX
    kind: Secret
    metadata:
      name: bmc-credentials
      namespace: sno1
      annotations:
        apps.open-cluster-management.io/do-not-delete: 'true'
    type: Opaque

    ---
    apiVersion: metal3.io/v1alpha1
    kind: BareMetalHost
    metadata:
      annotations:
        bmac.agent-install.openshift.io/hostname: sno1
        inspect.metal3.io: disabled
        apps.open-cluster-management.io/do-not-delete: 'true'
      labels:
        infraenvs.agent-install.openshift.io: sno1
        cluster-name: sno1
      name: sno1
      namespace: sno1
    spec:
      automatedCleaningMode: disabled
      online: true
      bmc:
        disableCertificateVerification: true
        address: redfish-virtualmedia+https://example.com/redfish/v1/Systems/1/
        credentialsName: bmc-credentials
      bootMACAddress: 5c:ed:8c:9e:23:1c
      #rootDeviceHints:
      #  hctl: 0:0:0:0

    ---
    apiVersion: v1
    data:
      .dockerconfigjson: XXXXXXXXXXX
    kind: Secret
    metadata:
      annotations:
        apps.open-cluster-management.io/do-not-delete: 'true'
      name: pull-secret
      namespace: sno1
    type: kubernetes.io/dockerconfigjson
    ---
    apiVersion: extensions.hive.openshift.io/v1beta1
    kind: AgentClusterInstall
    metadata:
      name: sno1
      namespace: sno1
      annotations:
        apps.open-cluster-management.io/do-not-delete: 'true'
        agent-install.openshift.io/install-config-overrides: '{"networking":{"networkType":"OVNKubernetes"}}'
    spec:
      clusterDeploymentRef:
        name: sno1
      imageSetRef:
        name: img4.12.14-x86-64-appsub
      networking:
        clusterNetwork:
          - cidr: 100.64.0.0/15
            hostPrefix: 23
        serviceNetwork:
          - 100.66.0.0/16
        userManagedNetworking: true
        machineNetwork:
          - cidr: 10.17.0.0/28
      provisionRequirements:
        controlPlaneAgents: 1
      sshPublicKey: ssh-rsa XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX
    ---
    apiVersion: hive.openshift.io/v1
    kind: ClusterDeployment
    metadata:
      name: sno1
      namespace: sno1
      annotations:
        apps.open-cluster-management.io/do-not-delete: 'true'
    spec:
      baseDomain: example.com
      clusterInstallRef:
        group: extensions.hive.openshift.io
        kind: AgentClusterInstall
        version: v1beta1
        name: sno1
      controlPlaneConfig:
        servingCertificates: {}
      clusterName: sno1
      platform:
        agentBareMetal:
          agentSelector:
            matchLabels:
              cluster-name: sno1
      pullSecretRef:
        name: pull-secret

    ---
    apiVersion: agent.open-cluster-management.io/v1
    kind: KlusterletAddonConfig
    metadata:
      annotations:
        apps.open-cluster-management.io/do-not-delete: 'true'
      name: sno1
      namespace: sno1
    spec:
      clusterName: sno1
      clusterNamespace: sno1
      clusterLabels:
        cloud: hybrid
      applicationManager:
        enabled: true
      policyController:
        enabled: true
      searchCollector:
        enabled: true
      certPolicyController:
        enabled: true
      iamPolicyController:
        enabled: true

    ---
    apiVersion: cluster.open-cluster-management.io/v1
    kind: ManagedCluster
    metadata:
      annotations:
        apps.open-cluster-management.io/do-not-delete: 'true'
        apps.open-cluster-management.io/reconcile-option: replace
      labels:
        name: sno1
        cluster-name: sno1
        base-domain: example.com
      name: sno1
    spec:
      hubAcceptsClient: true
      leaseDurationSeconds: 120
    ---
    apiVersion: agent-install.openshift.io/v1beta1
    kind: InfraEnv
    metadata:
      annotations:
        apps.open-cluster-management.io/do-not-delete: 'true'
      labels:
        agentclusterinstalls.extensions.hive.openshift.io/location: sno1
        networkType: dhcp
      name: sno1
      namespace: sno1
    spec:
      clusterRef:
        name: sno1
        namespace: sno1
      agentLabels:
        agentclusterinstalls.extensions.hive.openshift.io/location: sno1
      cpuArchitecture: x86_64
      ipxeScriptType: DiscoveryImageAlways
      pullSecretRef:
        name: pull-secret
      sshAuthorizedKey: ssh-rsa XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

If you need to enter custom configurations at Day 1, you can provide them as a ConfigMap to the AgentClusterInstall. Below is an example:

    ---
    apiVersion: extensions.hive.openshift.io/v1beta1
    kind: AgentClusterInstall
    metadata:
      name: sno1
      namespace: sno1
    spec:
      manifestsConfigMapRefs:
        - name: sno1-customconfig
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: sno1-customconfig
      namespace: sno1
    data:
      htpasswd-authentication.yaml: |
        apiVersion: config.openshift.io/v1
        kind: OAuth
        metadata:
          name: cluster
        spec:
          identityProviders:
            - htpasswd:
                fileData:
                  name: htpass-secret
              mappingMethod: claim
              name: HTPasswd
              type: HTPasswd

Wrap up

Zero touch provisioning simplifies bare-metal deployments at edge sites by combining the power of Red Hat OpenShift, Red Hat Advanced Cluster Management and GitOps. This demonstration used a single system to deploy a small SNO cluster as a proof of concept.

Build on this design in your own environment to learn more about deploying these features in your own network.
