In Part 1 of this series, we explained how to build a system that lets teams create namespaces in a self-service manner. But as we explained, a namespace does not constitute a full environment.

What constitutes a full environment is subjective and depends on the organization and the application in question. But there's one component that you can expect to find in almost every environment: sensitive assets stored as secrets, such as credentials.

If your objective is to build a developer platform, then it should be the responsibility of the platform to serve credentials in the environments where they are needed for consumption by applications.

In this installment, we will illustrate an approach to set up the platform to serve static credentials to the environment that we created in Part 1.

Requirements

Static secrets are secrets that are stored within a secret store and don’t change until a human or some form of external (to the secret store) automation updates them.

In this scenario, assume that developers are able to configure their static secrets within the secret store, which in turn are consumed by applications running within the environments.

Here is a more precise list of requirements that you are looking to achieve:

- A team of developers should be able to set secrets in secret stores that are mapped to applications and environments.

- These secret stores should be isolated to an individual team, so Team A cannot access secrets owned by Team B.

- Applications running in an environment should have read-only access to the secret store and the secrets that have been prepared for that application and environment. Access to secret stores belonging to other environments, applications, or teams should be forbidden.

- Finally, automation should be in place so that developers do not need to explicitly request the creation of a secret from the team managing the associated credential management tool.

Design

For this design, assume that the secret management tool in use is HashiCorp Vault. Vault seems to be the de facto standard for secret management in cloud native systems, so this assumption should fit most real-world situations.

Vault allows you to create different secret stores and assign permissions to them, which makes it relatively straightforward to meet the previously stated set of requirements. However, given these constraints, there would need to be an extensive set of configurations applied within Vault. To implement the desired configurations in a declarative fashion that aligns with the key goals of everything defined as Infrastructure as Code, you will use the vault-config-operator.

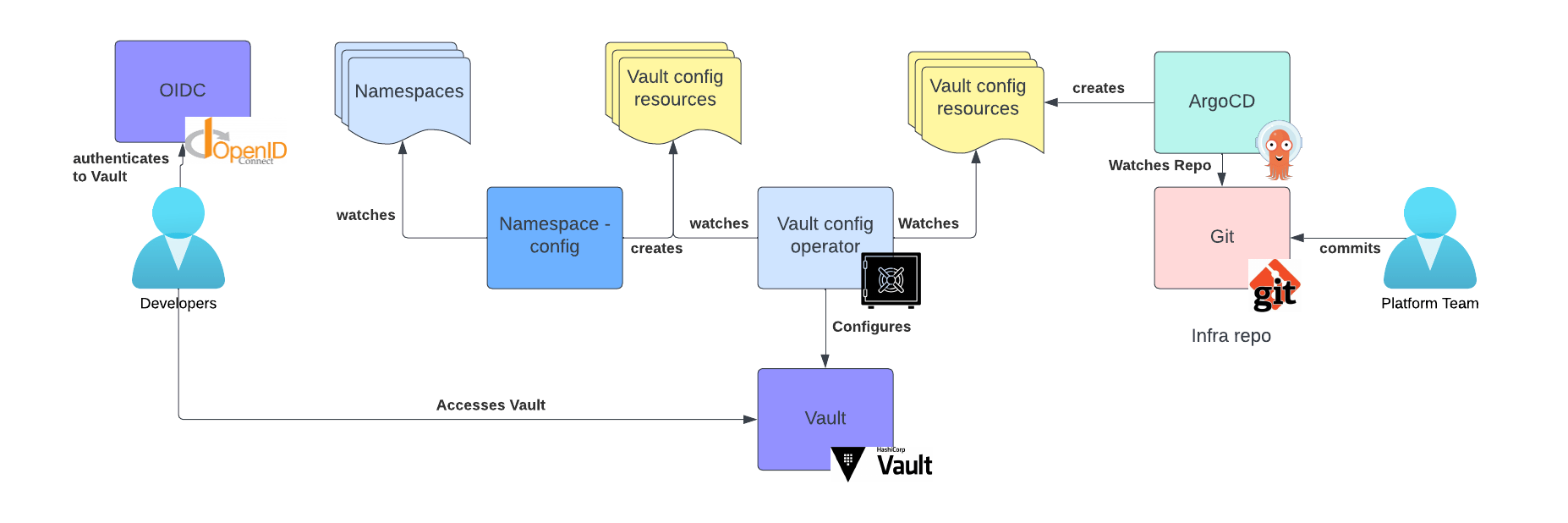

The following diagram depicts the desired architecture:

On the right side of this diagram, you can see that the platform team defines a set of Vault configuration resources within the infra GitOps repository. These resources are then interpreted by the vault-config-operator and applied to your Vault instance.

On the left side of the diagram, you can see that, based on the existence of a set of namespaces, the namespace configuration operator (explained in Part 1) creates another set of Vault configuration resources that will help configure Vault. These configurations can be managed on a per-team or per-namespace basis.

Also on the left side, you can see developers accessing Vault using OIDC-based authentication so that they can manage the secrets of their applications. In this configuration, they can set secrets in the appropriate secret stores.

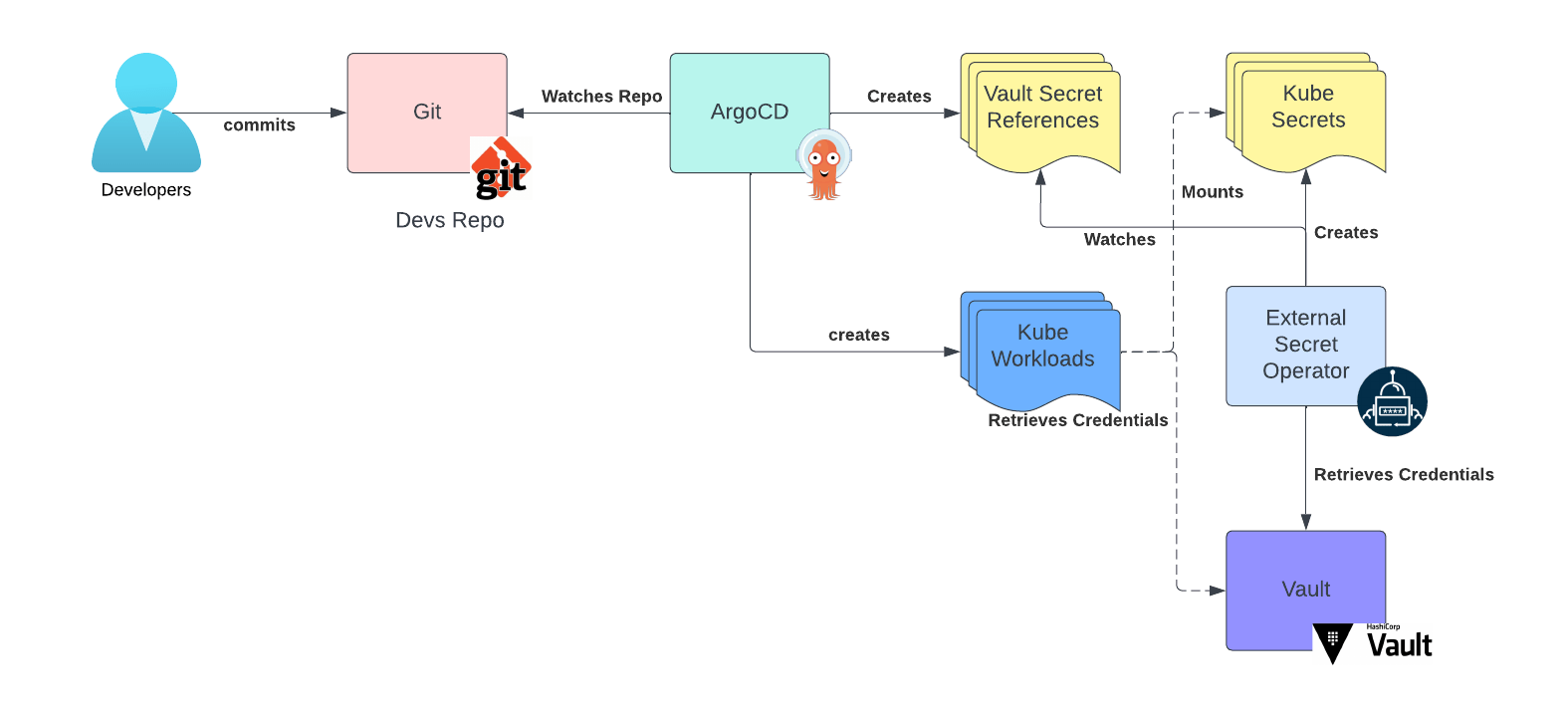

Once secrets are present in Vault, applications can access them with the following pattern:

This is a classic pattern for accessing secrets with the External Secrets Operator (ESO). Developers describe the secrets their application needs as secret references using the ESO resources and commit those resources to their GitOps repository, together with the other resources describing the application. We have yet to fully describe the concept of a tenant GitOps repository; that will be the subject of another installment within this series. For now, assume that one already exists.

Once ArgoCD creates the ESO resources in Kubernetes, the External Secrets Operator will fetch the secrets from Vault and turn them into Kubernetes secrets that the workloads can consume.
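
As a rough sketch of what such a secret reference might look like, the snippet below renders an ExternalSecret manifest from a small shell function. The store name `vault-static-secrets`, the resource name `myapp-static-secret`, and the key `foo` with property `bar` are assumptions for illustration only; the resources in an actual tenant GitOps repository may look different, and the `apiVersion` may need adjusting to the installed ESO release.

```shell
# Render an ExternalSecret that asks ESO to copy one Vault key into a
# Kubernetes Secret. All names below are hypothetical placeholders.
render_external_secret() {
  namespace="$1"   # namespace the workload runs in, e.g. myapp-dev
  store="$2"       # SecretStore assumed to already point at the Vault mount
  cat <<EOF
apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: myapp-static-secret
  namespace: ${namespace}
spec:
  refreshInterval: 1h
  secretStoreRef:
    kind: SecretStore
    name: ${store}
  target:
    name: myapp-static-secret
  data:
    - secretKey: bar
      remoteRef:
        key: foo
        property: bar
EOF
}

render_external_secret myapp-dev vault-static-secrets
```

In a GitOps flow the rendered manifest would be committed to the repository rather than applied by hand, so that ArgoCD creates it in the cluster.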

As an improved alternative to this pattern, workloads can also access secrets directly from Vault if they are built with the ability to communicate with the Vault API.
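
For workloads that talk to Vault directly, a read boils down to one authenticated HTTP GET. The sketch below only builds the URL; it assumes a KV version 1 mount (which is what the request URLs later in this article suggest) and uses a hypothetical Vault address.

```shell
# Build the HTTP API URL for a key stored in a KV version 1 mount.
# Assumption: KV v1, so there is no /data/ segment in the path.
kv_read_url() {
  vault_addr="$1"   # base address of Vault (hypothetical value below)
  mount="$2"        # secret engine mount path
  key="$3"          # key inside the mount
  echo "${vault_addr%/}/v1/${mount}/${key}"
}

kv_read_url https://vault.example.com applications/team-a/myapp-dev/static-secrets foo

# A workload that already holds a Vault token could then fetch the secret with:
#   curl --silent --header "X-Vault-Token: $VAULT_TOKEN" \
#     "$(kv_read_url "$VAULT_ADDR" applications/team-a/myapp-dev/static-secrets foo)"
```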

Implementation walkthrough

Referring to the same repository as introduced in Part 1, let’s walk through this setup.

First, we need a Vault instance. Normally, Vault is managed by a dedicated team and is already available. However, in this case, where there is no other dedicated team, we have to install Vault ourselves. We are going to install Vault on the hub cluster, and the configurations can be found here.

One thing to notice about this setup is that the configuration auto-initializes and auto-unseals the Vault instance. This is not recommended for production use cases, but it’s convenient for demos, tutorials and examples. Also, this setup configures a Kubernetes Authentication Method so that the default service account in the vault-admin namespace can communicate with Vault. This configuration is performed by a sidecar and can be inspected here.
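
To give a feel for what that sidecar has to do, here is a minimal sketch of the equivalent Vault CLI calls. It is not the sidecar's actual script: the mount path `kubernetes`, the role name `vault-admin`, the policy name, and the API server URL are assumptions. By default the commands are only printed, so the snippet can be read and run without a Vault instance.

```shell
# Print the Vault CLI calls instead of running them unless DRY_RUN=0.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

configure_kubernetes_auth() {
  mount="$1"      # auth mount path (hypothetical)
  k8s_host="$2"   # API server URL of the cluster being trusted
  policy="$3"     # Vault policy for the admin service account (hypothetical name)

  run vault auth enable -path="$mount" kubernetes
  run vault write "auth/$mount/config" kubernetes_host="$k8s_host"
  # Bind the role to the default service account in the vault-admin namespace.
  run vault write "auth/$mount/role/vault-admin" \
    bound_service_account_names=default \
    bound_service_account_namespaces=vault-admin \
    token_policies="$policy"
}

configure_kubernetes_auth kubernetes https://kubernetes.default.svc vault-admin
```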

Once this deployment is complete, we can use the vault-config-operator and the default service account in the vault-admin namespace to perform any other configuration in Vault.

The next piece of configuration enables human access to Vault. For this, we are going to create an OIDC Authentication Method to allow access for the users defined within the Keycloak instance that we set up in Part 1. The configuration for this component can be found here.
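
In this series the OIDC method is configured declaratively, but the underlying Vault settings can be sketched with the CLI. The mount path `keycloak` and the role `developer` match the login command used later in this article; the discovery URL, client ID, client secret placeholder, and claim names are assumptions. Commands are printed rather than executed unless `DRY_RUN=0`.

```shell
# Print the Vault CLI calls instead of running them unless DRY_RUN=0.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

configure_oidc_auth() {
  discovery_url="$1"   # Keycloak realm issuer URL (hypothetical value below)
  client_id="$2"       # OIDC client registered in Keycloak (hypothetical)

  run vault auth enable -path=keycloak oidc
  run vault write auth/keycloak/config \
    oidc_discovery_url="$discovery_url" \
    oidc_client_id="$client_id" \
    oidc_client_secret="<client-secret>" \
    default_role=developer
  # The redirect URI below is the callback used by the Vault CLI login flow.
  run vault write auth/keycloak/role/developer \
    user_claim=sub \
    groups_claim=groups \
    allowed_redirect_uris=http://localhost:8250/oidc/callback
}

configure_oidc_auth https://keycloak.example.com/realms/demo vault
```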

Next, we need the clusters that we created from the hub to also be able to authenticate to Vault and create configurations. To do so, we use a Helm chart that is applied at cluster creation. This Helm chart creates a Kubernetes Authentication Method and a role that, again, makes the default service account in the vault-admin namespace (this time in the managed clusters) a highly privileged account within Vault. At this point, each cluster can manage the Vault configuration as needed.

We can now complete the configuration that will allow developers to set their secrets. First, our path convention for secrets within Vault is the following: /applications/<team>/<namespace>/static-secrets

We want to give developers a policy that will allow them to read/write secrets under the path /applications/<team>
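
A policy along these lines could express that rule. This is a simplified, static sketch rather than the policy actually generated in the repository, and the exact capability list is an assumption; the policy name `developer-team-a` is the one that appears in the login output later in this article.

```shell
# Build the team prefix used by the path convention.
team_prefix() {
  echo "applications/$1"
}

# Emit a Vault policy (HCL) granting a team read/write on its own subtree.
developer_policy() {
  cat <<EOF
path "$(team_prefix "$1")/*" {
  capabilities = ["create", "read", "update", "delete", "list"]
}
EOF
}

developer_policy team-a

# To load it into Vault by hand:
#   developer_policy team-a | vault policy write developer-team-a -
```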

To do so, we create a NamespaceConfig defined here, such that when a namespace of environment type dev is created by a specific team, that team is given access to that path in Vault. We make an assumption here that all of the teams will have a dev namespace representing their development environment. For this simple demo, this is good enough.

The next step is to make sure that each namespace owned by a team can read from the path /applications/<team>/<namespace>. This configuration can be found here.
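
The read-only side can be sketched the same way. The function below emits a static policy for a single namespace; the real configuration may instead use one templated policy per team, so treat the structure as an assumption.

```shell
# Emit a Vault policy (HCL) that lets a namespace read, but not modify,
# the secrets under its own path.
reader_policy() {
  team="$1"
  namespace="$2"
  cat <<EOF
path "applications/${team}/${namespace}/*" {
  capabilities = ["read", "list"]
}
EOF
}

reader_policy team-a myapp-dev
```

Because the policy grants neither `create` nor `update`, a token carrying only this policy cannot write to the path, and it has no access to the paths of other namespaces.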

Finally, we need to create the Key/Value (kv) secret engine that will actually hold the secrets at the aforementioned paths, which is performed by this configuration.
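
For reference, the manual equivalent of that configuration would be a single `vault secrets enable` call per namespace. The sketch below derives the mount path from the convention above and prints the command; the helper names are hypothetical, and the command only runs for real when `DRY_RUN=0`.

```shell
# Print the Vault CLI calls instead of running them unless DRY_RUN=0.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Mount path for a namespace, following /applications/<team>/<namespace>/static-secrets.
mount_path() {
  echo "applications/$1/$2/static-secrets"
}

# Enable a kv secret engine at the namespace's mount path.
enable_static_secrets() {
  run vault secrets enable -path="$(mount_path "$1" "$2")" kv
}

enable_static_secrets team-a myapp-dev
```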

If all of the resources were applied successfully, we should now be able to test accessing the secrets. First, let’s log into Vault as a privileged user and verify that we have the correct configurations. On the authentication methods page, we should find a Kubernetes Authentication Method per cluster and one OIDC authentication method:

On the Group view, you should see the groups that we have defined in Keycloak:

On the Secret Engine page, you should see one static-secrets secret engine per namespace. The page should look similar to the following:

We are going to now test our access rules to confirm that our setup is correct. Recall the users and groups that we previously defined in Keycloak:

| Group | Users |
| --- | --- |
| team-a | Adam, Amelie |
| team-b | Brad, Brumilde |

Let's first test that Adam can set secrets in the /applications/team-a/<namespace>/static-secrets path, but that he cannot do the same in the /applications/team-b/<namespace>/static-secrets path.

We are going to execute these tests using the Vault CLI:

Log in as Adam:

vault login -method=oidc -path=/keycloak role="developer"

Once authenticated by Keycloak, you should get this output:

token xxx

token_accessor dSZDBK8cSUrzxn5iYRbMPMEQ

token_duration 768h

token_renewable true

token_policies ["default"]

identity_policies ["developer-team-a"]

policies ["default" "developer-team-a"]

token_meta_role developer

Export the provided vault token:

export VAULT_TOKEN=xxx

Let’s set a secret at /applications/team-a/myapp-dev/static-secrets

vault kv put -mount=/applications/team-a/myapp-dev/static-secrets foo bar=baz

Success! Data written to: applications/team-a/myapp-dev/static-secrets/foo

Next, let’s verify that Adam cannot create a secret in another team workspace:

vault kv put -mount=/applications/team-b/myservice-dev/static-secrets foo bar=baz

Error making API request.

URL: GET https://vault.apps.rosa-vnm9f.m4a1.p1.openshiftapps.com/v1/sys/internal/ui/mounts/applications/team-b/myservice-dev/static-secrets

Code: 403. Errors:

* preflight capability check returned 403, please ensure client's policies grant access to path "applications/team-b/myservice-dev/static-secrets/"

Now, we are also going to test that service accounts from the namespace in which applications are running can read secrets only at their specific path and cannot write secrets anywhere.

Verify that the default service account in the myapp-dev namespace can read secrets from /applications/team-a/myapp-dev/static-secrets.

Note: Ensure that you are authenticated to the cluster containing the target namespace prior to executing the following command.

export token=$(oc create token default -n myapp-dev)

vault write auth/non-prod/login role=secret-reader-team-a jwt=${token}

Key Value

--- -----

token xxx

token_accessor sytKPYO9ahsxFy98o0JRWk8U

token_duration 768h

token_renewable true

token_policies ["default" "secret-reader-team-a-non-prod"]

identity_policies []

policies ["default" "secret-reader-team-a-non-prod"]

token_meta_role secret-reader-team-a-non-prod

token_meta_service_account_name default

token_meta_service_account_namespace myapp-dev

token_meta_service_account_secret_name n/a

token_meta_service_account_uid 1019be26-cf39-4acb-b052-05d6b7ec1632

Use the returned token to impersonate the default service account in the myapp-dev namespace to confirm that it can access our secrets:

export VAULT_TOKEN=xxx

Now, verify that the service account can read a secret within the secrets engine defined within the namespace path:

vault kv get -mount=/applications/team-a/myapp-dev/static-secrets foo

=== Data ===

Key Value

--- -----

bar baz

With read access confirmed, verify that this service account cannot write a secret to this secrets engine:

vault kv put -mount=/applications/team-a/myapp-dev/static-secrets foo bar=baz

Error writing data to applications/team-a/myapp-dev/static-secrets/foo: Error making API request.

URL: PUT https://vault.apps.rosa-vnm9f.m4a1.p1.openshiftapps.com/v1/applications/team-a/myapp-dev/static-secrets/foo

Code: 403. Errors:

* 1 error occurred:

* permission denied

Finally, verify that this service account cannot get a secret from another namespace:

vault kv get -mount=/applications/team-a/myapp-qa/static-secrets foo

Error making API request.

URL: GET https://vault.apps.rosa-vnm9f.m4a1.p1.openshiftapps.com/v1/sys/internal/ui/mounts/applications/team-a/myapp-qa/static-secrets

Code: 403. Errors:

* preflight capability check returned 403, please ensure client's policies grant access to path "applications/team-a/myapp-qa/static-secrets/"

Conclusions

In this article, we saw how we can solve the use case of supporting static secrets – a common use case that is almost always required when implementing a developer platform.

We also showed a possible approach using Vault (a very popular credential management tool) as a secret store.

Our assumption was that all environments will require static secrets, so we automated the creation of key value stores to contain credentials and we tied that automation to the namespace provision process described in Part 1 of this series.

We then verified that permissions were correctly set such that multiple developer teams could work together without being able to access each other's secrets.

About the author

Raffaele is a full-stack enterprise architect with 20+ years of experience. Raffaele started his career in Italy as a Java Architect then gradually moved to Integration Architect and then Enterprise Architect. Later he moved to the United States to eventually become an OpenShift Architect for Red Hat consulting services, acquiring, in the process, knowledge of the infrastructure side of IT.

Currently, Raffaele works in a consulting position as a cross-portfolio application architect with a focus on OpenShift. For most of his career, Raffaele has worked with large financial institutions, which allowed him to acquire an understanding of the enterprise processes and the security and compliance requirements of large enterprise customers.

Raffaele has become part of the CNCF TAG Storage and contributed to the Cloud Native Disaster Recovery whitepaper.

Recently Raffaele has been focusing on how to improve the developer experience by implementing internal development platforms (IDP).
