In a hybrid IT environment, you'll often run a combination of Red Hat OpenShift deployments across public, private, hybrid and multicloud environments, as well as Red Hat Enterprise Linux (RHEL) systems at the edge. As a site reliability engineer (SRE), you need to monitor all of these systems to meet service level agreements (SLAs) and service level objectives (SLOs). This post guides you through setting up Performance Co-Pilot (PCP), our monitoring solution for RHEL, and configuring OpenShift Monitoring to scrape metrics from your RHEL systems at the edge.

Creating a RHEL edge image

Open the Red Hat Console and navigate to Edge Management > Manage Images. Click the “Create new image” button and follow the dialog to create a customized image. Make sure to include the pcp package in the list of additional packages to install. Download the .iso image, flash it to a storage medium and boot an edge device from it.

For more information on how to use the Edge Management application, please refer to the Edge Management documentation.

Deciding which metrics to monitor

PCP comes with a wide range of metrics out-of-the-box, and supports installing additional agents to gather metrics from different subsystems and services.

Currently, you need to install an additional SELinux policy (we are working on removing this extra step in a future RHEL release, RHEL 9.3 or later):

$ test -d /var/lib/pcp/selinux && sudo /usr/libexec/pcp/bin/selinux-setup /var/lib/pcp/selinux install pcpupstream

Let’s start and enable the metrics collector, and list all installed metrics:

$ sudo systemctl enable --now pmcd
$ pminfo -t

You can search for additional agents with the following command:

$ dnf search pcp-pmda

Once you have identified one or more additional agents, install and enable them with the following steps. In this example, we’ll install the SMART (Self-Monitoring, Analysis and Reporting Technology) PMDA (Performance Metric Domain Agent) to monitor the health of the hard drives in our system:

$ sudo rpm-ostree install pcp-pmda-smart
$ sudo systemctl reboot
$ cd /var/lib/pcp/pmdas/smart && sudo ./Install

We can list all new SMART metrics with pminfo -t smart and run pminfo -df smart.nvme_attributes.data_units_written to show the current value of a metric.

Tip: Another interesting PMDA for edge devices is the netcheck PMDA, which performs network checks on the edge device.

Exporting metrics in the OpenMetrics format

The pmproxy daemon (included with PCP) can export metrics in the OpenMetrics format. First, let’s start and enable the daemon:

$ sudo systemctl enable --now pmproxy

pmproxy exports the metrics on http://<hostname>:44322/metrics. By default, all available metrics are exported. This provides us with great insights, but it also consumes more CPU cycles while scraping and requires more storage space. Therefore, it is recommended to limit the set of exported metrics with the names parameter, for example:

$ curl "http://localhost:44322/metrics?names=disk.dev.read_bytes,disk.dev.write_bytes"
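As the list of metrics grows, assembling the names parameter by hand becomes error-prone. The following is a minimal sketch of a shell helper that joins metric names with commas and prints the resulting URL. The function name metrics_url is an illustrative assumption and not part of PCP; the port and path are the ones shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of PCP): build the pmproxy scrape URL
# for a host and a list of PCP metric names.
metrics_url() {
    local host=$1
    shift
    local IFS=,    # "$*" joins the remaining arguments with commas
    printf 'http://%s:44322/metrics?names=%s\n' "$host" "$*"
}

# Print the URL for the two disk metrics used in this article.
metrics_url localhost disk.dev.read_bytes disk.dev.write_bytes

# To fetch the metrics, pass the URL to curl:
# curl "$(metrics_url localhost disk.dev.read_bytes disk.dev.write_bytes)"
```

Keeping the metric names as separate arguments makes it easy to maintain the list in one place and reuse it later when configuring the scrape parameters.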

Note: Metric values must be floating point numbers or integers. Strings are not supported in the OpenMetrics format and are not exported by pmproxy.

Allow outside access to pmproxy by enabling the pmproxy service in the firewall:

$ sudo firewall-cmd --permanent --add-service pmproxy
$ sudo firewall-cmd --reload

Note: The above commands allow access to pmproxy from the default zone. For production environments, it is recommended that access be restricted to an internal network.
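One possible way to restrict access is to bind the source network of your OpenShift cluster to firewalld's predefined internal zone and allow pmproxy only there. The sketch below prints the corresponding firewall-cmd calls so that you can review them before running them. The subnet 192.168.31.0/24 and the function name are assumptions made for illustration; adjust them to your environment, and check which other services the internal zone allows on your system.

```shell
#!/usr/bin/env bash
# ASSUMPTION: scrape requests from the cluster arrive from this subnet.
INTERNAL_NET=${INTERNAL_NET:-192.168.31.0/24}

# Hypothetical helper: print the firewall-cmd calls for review.
pmproxy_firewall_commands() {
    cat <<EOF
sudo firewall-cmd --permanent --remove-service=pmproxy
sudo firewall-cmd --permanent --zone=internal --add-source=${INTERNAL_NET}
sudo firewall-cmd --permanent --zone=internal --add-service=pmproxy
sudo firewall-cmd --reload
EOF
}

# The first command removes pmproxy from the default zone again,
# the next two allow it only for the internal subnet.
pmproxy_firewall_commands
```

After reviewing the output, the commands can be run one by one on the edge device.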

Ingesting metrics with OpenShift Monitoring

Once we’ve decided on a list of metrics to ingest and started the pmproxy daemon as described above, we can configure OpenShift Monitoring to ingest metrics from our RHEL systems.

As a prerequisite, monitoring for user-defined projects needs to be enabled in the cluster. Please refer to the OpenShift Monitoring manual for instructions.

In the next step, we create a new project:

$ oc new-project edge-monitoring

To monitor hosts outside the OpenShift cluster, the following manifests need to be created for each monitored host. In this example, the host to monitor is called node1 and has the IP address 192.168.31.129, and the metrics disk.dev.read_bytes and disk.dev.write_bytes are scraped every 30 seconds. Save the following manifests to manifests.yaml, adjust the values accordingly and apply them to your cluster by running oc apply -f manifests.yaml:

kind: Service
apiVersion: v1
metadata:
  labels:
    app: node1-pmproxy
  name: node1-pmproxy
  namespace: edge-monitoring
spec:
  type: ClusterIP
  ports:
  - name: metrics
    port: 44322
---
kind: Endpoints
apiVersion: v1
metadata:
  name: node1-pmproxy
  namespace: edge-monitoring
subsets:
- addresses:
  - ip: 192.168.31.129
  ports:
  - name: metrics
    port: 44322
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    k8s-app: node1-pmproxy
  name: node1-pmproxy
  namespace: edge-monitoring
spec:
  endpoints:
  - port: metrics
    interval: 30s
    params:
      names: ["disk.dev.read_bytes,disk.dev.write_bytes"]
  selector:
    matchLabels:
      app: node1-pmproxy

Visualizing metrics with the OpenShift Console

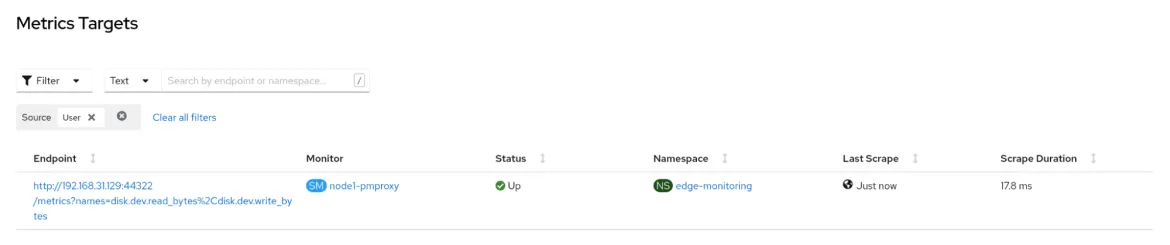

Navigate to your OpenShift Console and visit Observe > Targets. You will see your configured hosts in the list of targets (you can use the "Source: User" filter to list only targets of user-defined projects):

Figure 1: List of configured metric targets

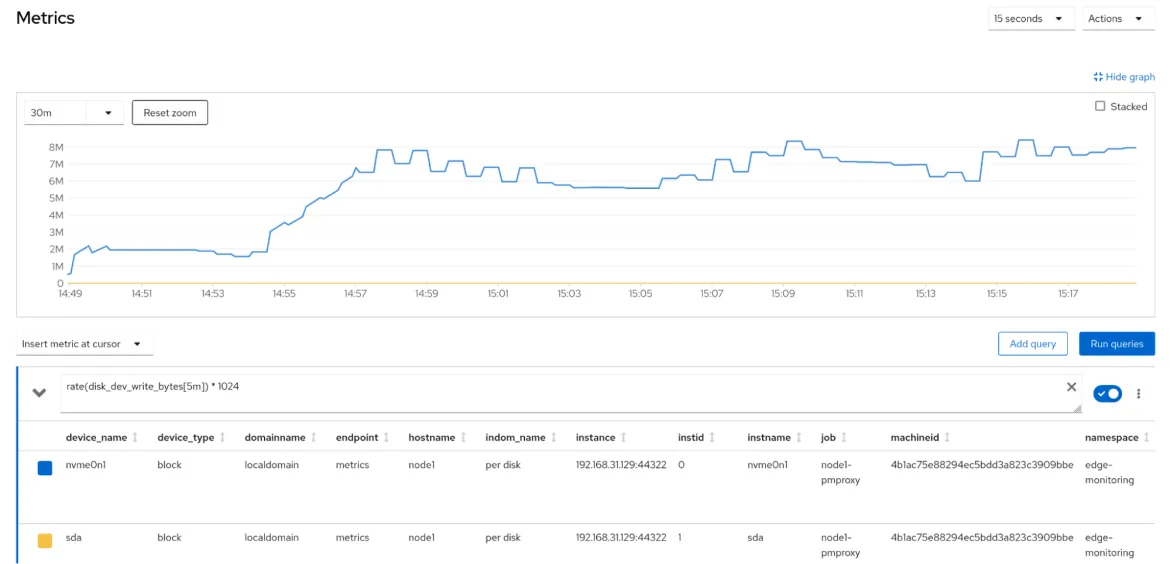

Now click the Metrics button in the navigation bar. Type rate(disk_dev_write_bytes[5m]) * 1024 and press the “Run queries” button to see new metric values.

Figure 2: Visualizing metrics in the OpenShift Console

Note: The disk.dev.write_bytes PCP metric is stored in kilobytes (visible with pminfo -d disk.dev.write_bytes), therefore we need to multiply by 1024 to get the metric values in bytes. Additionally, metrics in PCP use a dot as a separator, whereas OpenMetrics metrics use an underscore as a separator.
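The two conversions from this note can be sketched as small shell helpers. Both function names are hypothetical, and the name translation covers only the dot-to-underscore rule described above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: translate a PCP metric name into the name used
# in OpenShift Monitoring by replacing dots with underscores.
pcp_to_openmetrics() {
    printf '%s\n' "$1" | tr '.' '_'
}

# Hypothetical helper: convert a value in kilobytes (as reported by PCP
# for this metric) to bytes, matching the "* 1024" in the query.
kbyte_to_bytes() {
    echo $(( $1 * 1024 ))
}

pcp_to_openmetrics disk.dev.write_bytes   # prints disk_dev_write_bytes
kbyte_to_bytes 8                          # prints 8192
```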

Conclusion

In this article, we learned how to use OpenShift Monitoring to gather metrics from RHEL systems at the edge. If you want to learn more about Performance Co-Pilot, please refer to Automating Performance Analysis and the Performance Optimization Series. Refer to the hybrid cloud blog for more articles about OpenShift and hybrid cloud.

About the author

Andreas Gerstmayr is an engineer in Red Hat's Platform Tools group, working on Performance Co-Pilot, Grafana plugins and related performance tools, integrations and visualizations.
