The average smartphone user pays little attention to what’s going on behind the scenes. Applications can be updated, security bugs can be fixed, and more, with minimal interruption to the user’s experience. The process is mostly automated, sparing the user the problems that manual changes could introduce. Imagine if the average smartphone user had to update their own applications manually: each individual user would be a vector for introducing countless human variables into the update process, potentially leaving inactive users stuck on older, less secure, or less performant versions.

The same model can be found in the enterprise, behind the scenes. Think of it as the “consumerization” of backend enterprise IT infrastructure, where the usage model and expectations of consumer platforms are carried over to enterprise IT. Automation behind the scenes allows systems to work and grow while the rest of IT goes about the daily business of innovation rather than systems maintenance.

Imagine deploying or updating a database, monitoring service, or build system across an entire cluster with the ease of installing an app on your smartphone. For many containerized applications, the initial installation can be easy. But these applications then likely need to have permissions configured a certain way, be updated when software patches fix a security issue, be backed up for data protection, or be reconfigured when their state drifts away from the expressed intent. When specific operational knowledge is required to handle backups or patches correctly, more business logic is required for the application to function as expected. As you scale your applications, you further multiply the knowledge required to run them. This entails more IT coordination, from network permissions, to systems allocation, to backup, logging, and service updates. To deliver an “App Store experience,” you need a way to package that business logic with the application so that it can be repeated through automation. Red Hat OpenShift combined with Kubernetes Operators is designed to provide this out of the box.

Operators: Making automation attainable for Kubernetes users

Operators are a method for designing the “how” for applications in Kubernetes, the cloud native container orchestrator. While Red Hat and the larger Kubernetes community have been developing this concept since 2016, the OpenShift platform is now adopting Operators as a model for hosting Kubernetes applications. Red Hat OpenShift already incorporates Kubernetes Operators to streamline installation and updates of the platform, and Red Hat is working with partners to make their Operators more easily consumable by our users. Let’s take a step back and explain the goal of Operators.

With the move to cloud computing, the use of Linux containers has enabled further abstraction and portability. This next step in application compartmentalization required solutions for managing these applications at scale, hence Kubernetes. Because Linux is the foundation of any container platform, we’ve built on decades of experience with production Linux deployments running existing applications.

The dichotomy commonly used to describe this shift in how we manage infrastructure is the pets versus cattle metaphor: do you treat your application servers as beloved, individual pets, or as disposable, interchangeable units with a market value attached to them?

Kubernetes is built to scale container workloads. This can be relatively easy with stateless applications, like web front ends, because they require little context about the services around them. With stateful applications or services, however, you suddenly need much more contextual (or human) knowledge about the right thing to do: which replica is the primary, how to fail over without losing writes, or when it is safe to take a backup. So how do you apply that knowledge intelligently, and at scale?

Site Reliability Engineers (SREs), whose role originated in maintaining the operational stability of massively distributed workloads, often rely on automating as much of their work as possible. These engineers and their teams encapsulate their domain knowledge in software, often as custom logic for their application. Kubernetes Operators are a way of packaging that specific knowledge for container-based applications and using Kubernetes itself to execute that knowledge automatically when it's needed.

In the pets vs. cattle analogy, an SRE is a person who helps add and manage all the cattle in large herds. They’re not pet groomers and vets; instead, they’re cowboys and cowgirls wrangling servers with “smart” tools like Red Hat Ansible. An SRE operates an application by writing software, and that software has the application's operational domain knowledge programmed into it. Because this knowledge is embedded in the Operator, SRE teams at separate companies don’t need to maintain their own failover, scaling, and backup functionality. That behavior has already been built in by the experts (the Operator authors) for that specific type of database or application.
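To make “operational knowledge programmed into software” concrete, here is a minimal sketch of the reconcile idea behind an Operator: compare the desired state the user declared with the state actually observed, and emit the steps an SRE would take to close the gap. Everything here is hypothetical and uses only the Go standard library: the `DatabaseSpec` and `ClusterState` types, the `reconcile` function, and the action strings stand in for what a real Operator would read from and write to the Kubernetes API. The ordering rules (back up before upgrading, upgrade before scaling) are illustrative assumptions, not the behavior of any particular Operator.

```go
package main

import "fmt"

// DatabaseSpec is a hypothetical desired state, as a user might declare it
// in a custom resource.
type DatabaseSpec struct {
	Replicas int
	Version  string
}

// ClusterState is a hypothetical snapshot of what is actually running.
type ClusterState struct {
	Replicas int
	Version  string
	BackedUp bool // whether a backup exists for the current version
}

// reconcile returns the ordered actions needed to move observed toward desired.
// The ordering encodes (illustrative) operational knowledge: take a backup
// before upgrading, and upgrade before scaling so new members join at the
// new version.
func reconcile(desired DatabaseSpec, observed ClusterState) []string {
	var actions []string
	if observed.Version != desired.Version {
		if !observed.BackedUp {
			actions = append(actions, "backup")
		}
		actions = append(actions, fmt.Sprintf("upgrade %s -> %s", observed.Version, desired.Version))
	}
	switch {
	case observed.Replicas < desired.Replicas:
		actions = append(actions, fmt.Sprintf("scale up %d -> %d", observed.Replicas, desired.Replicas))
	case observed.Replicas > desired.Replicas:
		actions = append(actions, fmt.Sprintf("scale down %d -> %d", observed.Replicas, desired.Replicas))
	}
	return actions
}

func main() {
	desired := DatabaseSpec{Replicas: 3, Version: "2.1"}
	observed := ClusterState{Replicas: 2, Version: "2.0"}
	for _, a := range reconcile(desired, observed) {
		fmt.Println(a) // backup, upgrade 2.0 -> 2.1, scale up 2 -> 3
	}
	// No actions: the observed state already matches the declared intent.
	fmt.Println(len(reconcile(desired, ClusterState{Replicas: 3, Version: "2.1"})))
}
```

Because the function only acts on the difference between desired and observed state, running it again after the actions complete yields nothing to do. In a real Operator, this logic would run whenever Kubernetes reports a change to the resource, and the actions would be API calls rather than strings.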

How Operators work

We’ve previously described Operators as ways of packaging, deploying, and managing Kubernetes applications with Kubernetes itself. Here are some reasons Operators matter:

- Operators can reduce complexity: Operators are designed to simplify the processes around managing distributed applications by defining their installation, scaling, update, and management lifecycle.

- Operators extend Kubernetes functionality: Kubernetes itself is already extensible by design. Rather than dictating runtime, storage, or networking solutions, it offers interfaces that allow these solutions to plug in. Operators take this idea one step further and extend Kubernetes through Custom Resource Definitions (CRDs), encoding application- or service-specific knowledge into a single bundle so that those applications and services can be run and controlled with Kubernetes itself. Automating operations in this way simplifies management, helping teams focus on work that is more valuable to their business.

- Operators take human knowledge and systemize it as code: Operators enable automation by taking human knowledge of an application's operational domain and putting it into software, enabling more consistent and repeatable management.

- Operators make application management more scalable and repeatable: Because Operators work like reusable templates for various tasks in an application’s lifecycle, teams get a more standardized approach.

- Operators are useful in multi-cloud and hybrid cloud environments: Operators can run wherever Kubernetes runs, whether on public, private, hybrid, or multi-cloud infrastructure, or on-premises.

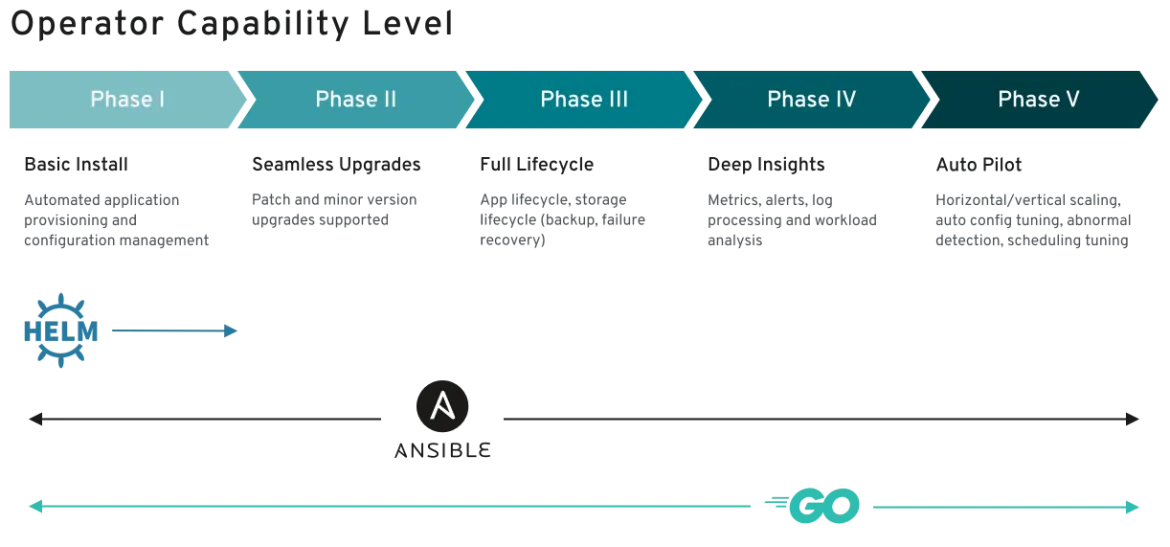

How does an Operator evolve? We see it in five general phases, in what we call the Operator Maturity Model. As an Operator progresses through each phase, the user experience it provides for SREs gets closer to a self-managed, cloud-like experience.

Operator Maturity model from the Operator SDK

Operators have a set of tools available to them through the Kubernetes API and the cloud native ecosystem. Plugging into these tools gives an Operator deep insight into current utilization and lets it run on “Auto Pilot”:

- Cluster-provided storage: Rely on block storage for persisting backups and executing other types of data migrations on behalf of the application owner.

- Monitoring: Tie into the Prometheus Operator for a managed monitoring stack that can detect problems and alert the application owner. Advanced Operators can automatically tune themselves based on throughput or app-specific metrics.

- Horizontal Pod Autoscalers: Scale out the stateless tiers of the application using the Horizontal Pod Autoscalers (HPAs) built into Kubernetes.

- Pod Disruption Budgets: Set smart limits that control disruption to the app during scheduled downtime and other maintenance activities.
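The HPA and Pod Disruption Budget items above come down to simple arithmetic that Kubernetes performs once an Operator has created and configured those objects. The sketch below reproduces the core formulas in plain Go for illustration; the function names are hypothetical and are not Kubernetes APIs. It assumes the documented HPA rule (desired replicas = ceil(current replicas × current metric ÷ target metric), skipped when the ratio is within a tolerance of 1.0, 0.1 by default) and a PDB expressed as `minAvailable`. Min/max replica bounds, stabilization windows, and the `maxUnavailable` form of a PDB are omitted.

```go
package main

import (
	"fmt"
	"math"
)

// desiredReplicas applies the core Horizontal Pod Autoscaler formula:
// desired = ceil(current * currentMetric / targetMetric).
// Scaling is skipped when the ratio is within tolerance of 1.0.
func desiredReplicas(current int, currentMetric, targetMetric, tolerance float64) int {
	ratio := currentMetric / targetMetric
	if math.Abs(ratio-1.0) <= tolerance {
		return current
	}
	return int(math.Ceil(float64(current) * ratio))
}

// disruptionsAllowed returns how many pods may be voluntarily evicted right
// now under a Pod Disruption Budget expressed as minAvailable (never negative).
func disruptionsAllowed(currentHealthy, minAvailable int) int {
	if n := currentHealthy - minAvailable; n > 0 {
		return n
	}
	return 0
}

func main() {
	// Stateless tier: 4 pods averaging 90% CPU against a 60% target,
	// so ceil(4 * 1.5) = 6 replicas.
	fmt.Println(desiredReplicas(4, 90, 60, 0.1))
	// Stateful tier: 5 healthy database pods with minAvailable 3,
	// so at most 2 can be drained at once during maintenance.
	fmt.Println(disruptionsAllowed(5, 3))
}
```

This split mirrors the list above: autoscaling is applied to the stateless tiers, while the disruption budget protects the stateful members whose loss would require the operational knowledge an Operator encodes.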

The Kubernetes community is busy creating new Operators. If you are building one, you can share your work on OperatorHub.io. The Prometheus Operator is one example available today. To get started building your own Operator, see the Operator Framework for step-by-step guidance.

Automation to make a hybrid cloud world a reality

Red Hat OpenShift and the Kubernetes community have both matured to the point where mission-critical enterprise systems now run in container-based environments with full orchestration from an open source project. Day one was setting up container native infrastructure. Day two was setting up the internal systems needed to run clusters, such as build systems, backup, and middleware.

The next step is solving the problems around the lifecycle of a Kubernetes application. Operators, the codified knowledge of how to run a Kubernetes application, are one way to do this. Going further, users can look to the Operator Framework, a toolkit for creating and managing Operators in a more effective, automated, and scalable way.

What does this bring to the infrastructure world? A cloud platform operates its own applications in this way, keeping them serviced and up to date. Operators can bring that same cloud-like experience, based on Kubernetes, to a variety of environments.

This is how smartphones have long operated, with application lifecycles managed independently of the underlying infrastructure. Kubernetes can bring the same potential to the world of hybrid cloud.

Learn more in the webinar “Building better Kubernetes applications with Operators and OpenShift.”
