If you want to know what post-quantum cryptography is or why any one will care, see part 1 of my series.

On August 24, 2023 the National Institute of Standards and Technology (NIST) published its first draft of post-quantum algorithms. The technologies behind those algorithms were described in part 2 (hash-based signatures) and part 3 (lattice-based cryptography) of this series.This leads to the question: If NIST already has serviceable post-quantum replacements for the Rivest-Shamir-Adleman (RSA) and Elliptic Curve Cryptography (ECC) algorithms, why would they need any other technology? The answer is because lattice-based cryptography is relatively new and it would be good to have an alternative in case a general solution to the underlying lattice-based problems or the various derived module-based lattice problems is found. One option is to use error correction codes as a cryptographic primitive.

The basics

Error correction codes are digital codes used to reliably send data through an unreliable channel. If there is some noisy transmission line, which is the most common example, transmission reliability can be increased by encoding the data to be sent down the line in packages with extra redundant bits. This larger package is called a code word, and only certain values in the space represented by the larger package are valid code words. In a noisy channel, corruption of some of the bits would yield an invalid code word, so the error is detected. Furthermore, if the space between code words is big enough, a reasonable guess can be made about which code word was probably corrupted and the error can be corrected.

"The space between" or the "distance between" code words is measured by the number of bits that are different in each. This is generally called the Hamming distance. Through the rest of this post, distance, closeness, etc., will all be shorthand for the Hamming distance. Related to Hamming distance is the idea of Hamming weight. The Hamming weight of a value is simply the number of 1 bits in that value. Error values purposefully introduced in encryption schemes usually choose Hamming weights large enough to be secure and small enough to be corrected.

This process not only has been understood for decades, but has been formalized into a full mathematical system. The mapping from a base value (a), to be transmitted, to a code word (w), that is sent down the channel, is accomplished by treating the base value as a vector of bits and multiplying it by a matrix G called a generator:

w = a . G

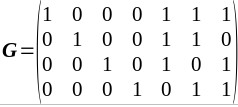

Generators are created by concatenating two arrays: I, (called the identity matrix) which is a square matrix with ones in the diagonal and zeros everywhere else and P (called the parity matrix) which has the error coding values.

![| 1 0 0 0 p1 p2 p3 | G = [I||P] = | 0 1 0 0 p4 p5 p6 | | 0 0 1 0 p7 p8 p9 | | 0 0 0 1 p10 p11 p12 |](/rhdc/managed-files/post-quantum-cryptography-code-based-img1.png)

This form is called the standard form. It maps a basic value (a) to a larger dimension code word (w) of the form a concatenated with parity information (p) where w = a || p. As noted earlier, not all values of size w map are a valid code word mapping to a given a, so w can be used to detect if there was corruption if the received value on the channel (w’) isn’t a valid code word. Furthermore, if the size of w is sufficiently larger than the size of a, the distances between various code words are large enough, and the error is small enough, the corrupted value w’ can be used to determine what the original w was, and thus to determine the original a. To correct w’, you can use the P matrix to calculate a new matrix call, the parity check matrix H.

![| p1 p4 p7 p10 1 0 0 | H = [P^T||I] = | p2 p5 p8 p11 0 1 0 | | p3 p6 p9 p12 0 0 1 |](/rhdc/managed-files/post-quantum-cryptography-code-based-img2.png)

Then use H to calculate s (called the syndrome) as follows:

s = H . w'

If w’ is a valid code word, then the syndrome (s) will be zero. This is why H is called the parity check matrix. In addition if s is non-zero and the error in w’ is small enough you can use s to recover the error (e) where w’ = w + e, and thus the original w = w’ + e (in binary arithmetic addition is xor and is the same as subtraction so e + e = 0). Unfortunately, in the general case, mapping s to e involves trying all possible errors and calculating their expected s values, storing them in a table, then looking up the error from the table using s. If you select H carefully, however, you can create a function which gives you e from s directly. Once you have the error (e), you can calculate the correct w, and then recover a. This means we can create a function Dg based on H, which will take a corrupted w’ and give us the original a.

Efficient mapping syndromes to errors

Hamming codes

There are several schemes which are used to build H in such a way that we can calculate the error from the syndrome efficiently. The most common scheme is to build up a parity matrix (P) that takes log2(n) bits (where n is the size of the code word (w)). A parity check matrix that can create this encoding would look like this:

and the generator, G, would look like this:

When the syndrome is calculated, the resulting value s is the single bit that was changed. For example, say the original message was a = 1 1 0 0. Multiplying a by G results in a w = 1 1 0 0 0 0 1. If the first (high) bit is corrupted (w’ = 0 1 0 0 0 0 1) , s = Hw'= | 1 1 1 | = 7. If the second bit is corrupted, w’= 1 0 0 0 0 0 1, s = | 1 1 0 | =6. The syndrome s will point directly to the single bit that was corrupted, with exception of corruption of the last bit and the first parity bit. In those cases they are swapped. This works because the parity check matrix (H) was chosen such that half the bits in the rows are 1. This matrix was arranged as a binary count in the column starting with 1 on the right and moving to 7 on the left. To preserve "standard form" 3 and 4 are swapped, which is why there is some result swapping in the syndrome. These are called Hamming codes (not to be confused with Hamming weight or Hamming distance) and they are popular because they are easy to implement for both encoding and error correction. They are not the most efficient encoding scheme for correcting multibit errors with the minimum size code word, however. That would be Goppa codes.

Goppa codes

Goppa codes were developed to give the most efficient mapping of maximum correctable error versus smallest size difference between w and a. The scheme involves picking several base values and functions and building up H from powers of those values multiplied by polynomials of these functions. To find the error, the syndrome is turned into a polynomial, then that polynomial is processed into an oracle function. The oracle function can be queried for each bit of the error to determine whether it is 1 or zero. The actual math is beyond this article, but the full algorithm can be found here.

Low density parity check codes

Goppa codes, while quite efficient, can be very complicated. Another scheme used in coding is called low density parity check. In this scheme, the number of "1" bits in the parity check matrix is small. This allows efficient decoding of the result while being able to correct many errors. The idea is that because the density of 1s in the parity check matrix is small, the connections between the error bits and syndrome bits are small. This fact can be used to create probabilistic algorithms for picking likely error bits by walking down each error bit and seeing how likely that error bit would be one or zero based on the connection this error bit has to the syndrome bits, and the value of the connected syndrome bits. The result can be run iteratively with each iteration getting closer to the correct answer. This is sometimes called a "bit flipping decoder" as it starts with a guess for the error bits and flips the guess each iteration based on the probability that the current guess is wrong. Unlike the other codes, the chance of success is probabilistic, but the probability of failure can be made to be quite low.

Turning Goppa codes into a public key encryption algorithm

In 1978, Robert McEliece turned Goppa codes into a cryptographic system as follows:

1. Generate a key pair:

- Construct a Goppa code H (which has a reversible function Dg)

- Put H in standard form H=[PT||I]

- Calculate G=[I|P]

- Choose an invertible permutation array Pr (and array that just moves the columns around without disturbing the values), and another invertible random array S

- Calculate Gp=SGPr

- The public key is the array Gp

- The private key is Dg (invertible function for G based on H), S-1 and Pr-1

2. To encrypt a message:

- Calculate c=mGp+e where e is a noise vector and m is the message, turned into a vector

- Send ciphertext c

3. To decrypt the message:

- Calculate m= Dg(cPr-1)S-1

The arrays S and Pr hide the details of our original matrix G so the attacker doesn’t know H and thus can’t perform the Goppa algorithm to recover the error. Note that the encrypt step 1 looks a lot like a lattice operation, where Gp is a ‘cooked’ lattice array.

The main drawback to this is the public key, Gp, is very large (order of megabytes). It has decades of cryptanalysis, so we are confident in its security. A slightly modified version is a Round 4 finalist in the NIST post-quantum "competition" called Classic McEliece. It is described below.

Other error correcting code schemes have been proposed to replace Goppa codes in the McEliece scheme. Those schemes have been broken. Attempts at making a signature scheme based on error correcting codes have also been broken.

Code-based encryption schemes in NIST Round 4

Round 3 of the NIST Post Quantum Cryptography Standardization resulted in four algorithms selected for standardization: Crystals-Kyber, Crystals-Dilithium, Falcon and Sphincs+. Crystals-Kyber is a lattice-based key-encapsulation mechanism (KEM), while Crystals-Dilithium and Falcon are lattice-based signatures. Sphincs+ is a stateless hash-based signature scheme. These are discussed in part 2 and part 3 of this series. The remaining signature algorithms in round 3 (Rainbow, GeMSS and Picnic) were broken, and the remaining non-lattice-based KEM algorithms moved to Round 4. Those algorithms are Classic McEliece, BIKE, HQC and SIKE. SIKE has been broken, and the remaining three algorithms are code-based. In the following descriptions I use the nomenclature and formatting used in the specifications for the standards in each description, which actually varies between the different specs.

Classic McEliece

Classic McEliece modernizes Robert McEliece’s original scheme. First, instead of using a generator (G) to encode a message (m), the parity check matrix (H) is used to generate a syndrome (s) from a random error value (e). This e is used in a key derivation function (KDF) to generate the encapsulated key. Decapsulation is done by finding the e value from the syndrome and using it in the KDF. If the decode fails, a fake KDF is used to hide the failure to prevent creating an oracle. Since the parity check matrix is in standard form (Pt || I) and I is the known identity Matrix, the public key only needs to be the Pt portion, which the spec calls T. So the steps for Classic McEliece are:

1. Generate a key pair:

- Construct a Goppa code H (which has a reversible function Γ)

- Put H in standard form H=[I||T]

- The public key is the array T

- The private key is Γ and s, which is a fixed random per-key noise value

2. To encapsulate a key:

- Calculate C=[I|T]e where e is a noise vector

- K=H(1, e, C) where H() is a hash function

- Return the key K and the cipher text C

3. To decrypt the message:

- set b to 1

- Use Γ to find e from C. If no e found, set b to 0. and e to s

- K=H(b, e, C)

Public keys in Classic McCliece are between 260 KBytes and 1 Mbyte with cipher texts around 96 to 200 bytes.

BIKE – bit flipping and medium density codes

While Classic McEliece reduces the public key down from the traditional McEliece encrypt, it is still too large for many operations. This is unavoidable when using Goppa codes. If Hamming or low density codes are used, however, arrays can be constructed by using a single row vector and shifting that vector in each succeeding row. This is called a cyclical or quasi-cyclic array. BIKE uses quasi-cyclic arrays to reduce key sizes. BIKE also uses medium density parity check codes, which function like low density codes, but there are more connections between error bits and various syndrome bits. The steps for BIKE are:

1. Generate a key pair:

- Find h0 and h1 each with an appropriately low hamming weight (for medium density). These are our quasi-cyclic parity check arrays

- Calculate h=h1h0-1

- The public key is the array h

- The private key is h0, h1 and σ, which is a fixed random per key noise value

2. To encapsulate a key:

- Find m, a uniformly random message

- Calculate e0,e1 =hashH(m), which uses m to determine e0 and e1 with appropriately low hamming weights

- Calculate e0 + e1h and m xor hashL(e0||e1). These two make up the ciphertext c

- K = hashK(m, c)

3. To decrypt the message:

- (c0,c1) = split the components of c

- e0,e1= decode(c0h0, h0,h1) using a black-gray bit flip algorithm. This could fail in which case set e0,e1 is set to 0, 0

- m’ = c1 xor hashL(e0||e1)

- if (e0,e1) == hashH(m’) then K= hashK(m’, c) else K = hashK(m’,σ)

This works because h0 and h1 are appropriately hidden by calculation h=h1h0-1. The values e0 and e1 are hidden with e0+e1h, and m is hidden by xoring with the hash of e0||e1. Decoding works because c0h0 gives e0h0+e1h1, which can be used as the syndrome to decode e0 and e1 with knowledge of h0 and h1 using bit flipping. Correct reconstruction of e0 and e1 from m’ guarantees that the decode was successful. Unsuccessful decodes are hidden by calculating a fake K from σ.

Public keys and cipher texts sizes are roughly equivalent to each other and vary between 1500 bytes to 5kBytes.

HQC – reducing the key size and Hamming codes

HQC also uses quasi-cyclic arrays to reduce the key sizes. HQC doesn’t hide the Generator matrix, G. Instead it defines G as either a traditional Hamming generator, a Reed-Solomon generator, or a Reed-Muller generator (The latter two are modern Hamming variants). G is public and anyone can use G to recover the original message as long as the error bits are small enough (have a low enough Hamming weight). HCQ instead encodes a message using more error bits than can be recovered and the private key is used to reduce those error bits down to a recoverable value.

1. Generate a key pair:

- The generator G is already given

- Find a random array h (not the parity matrix of G), and vectors x and y. The former have hamming weights w

- Calculate s= x +hy

- The public key is h,s

- The private key is x, y

2. To encapsulate a key:

- Find m, a uniformly random message

- Use m and a salt to find e, r1, and r2 where the hamming weight of e is we and the hamming weights of r1 and r2 is wr

- Calculate u=r1+hr2, v=mG+sr2+e

- K = K(m, c), d=H(m), c=(u,v)

- Because sr2 creates a larger error than is recoverable, the attacker can’t use the decode operation on v directly

- Cipher text is (c, d, salt)

3. To decrypt the message:

- (u,v) = c

- Recover m’ = Decode(v-uy). Note that v-uy= mG+xr2+hyr2+e-r1y-hyr2 = mG+xr2-r1y+e. The hamming weights of x, y, r1, r2, and e are chosen so that the error xr2-r1y+e is below the decoding threshold and m should be recovered.

- Verify d=H(m’) and c=Encode(m’,salt). If they aren’t, abort

- K= K(m’, c)

The public key sizes for HQC run from 2.2 kBytes to 7.2 kBytes with cipher texts roughly 2x the size of the public key.

Wrap up

Code-based KEMs are likely to be the next type of KEM standardized by NIST. They are based on very well understood systems we use to provide data integrity on communications channels today. Some forms have held up to cryptanalysis for half a century, but unfortunately those forms have very large key sizes.

About the author

Relyea has worked in crypto security on the Network Security System code used in Mozilla browsers since 1996. He joined Red Hat in 2006. He is also the co-chair of the OASIS PKCS #11 Technical Committee.

More like this

The nervous system gets a soul: why sovereign cloud is telco’s real second act

MCP security: The current situation

Understanding AI Security Frameworks | Compiler

Data Security And AI | Compiler

Browse by channel

Automation

The latest on IT automation for tech, teams, and environments

Artificial intelligence

Updates on the platforms that free customers to run AI workloads anywhere

Open hybrid cloud

Explore how we build a more flexible future with hybrid cloud

Security

The latest on how we reduce risks across environments and technologies

Edge computing

Updates on the platforms that simplify operations at the edge

Infrastructure

The latest on the world’s leading enterprise Linux platform

Applications

Inside our solutions to the toughest application challenges

Virtualization

The future of enterprise virtualization for your workloads on-premise or across clouds