A new version of Red Hat OpenShift Service Mesh has been announced and is due to be released later this year. This new version will be aligning much more closely with upstream Istio, enabling Red Hat to work more upstream while delivering a supported Istio implementation that will give users much more flexibility and supported features. Istio has matured a lot over the years and with its graduation in the CNCF it’s in a great place for us to do this.

There are changes coming to the service mesh control plane and deployment topologies. This article highlights some of them and, if you are a current OpenShift Service Mesh user, points out things you can do now to make the move easier later.

Gateways

In OpenShift Service Mesh 2, an ingress gateway and an egress gateway are created by default in the control plane namespace. It is also possible to define additional gateways in the ServiceMeshControlPlane (SMCP) resource. However, since the introduction of gateway injection in OpenShift Service Mesh 2.3, the preferred method for deploying service mesh ingress and egress gateways is a Deployment resource that uses gateway injection. This gives greater flexibility and control, and it is also better practice: gateways are managed alongside their corresponding applications instead of being declared in the control plane resource and deployed by the operator. We therefore recommend disabling the default gateways and moving from SMCP-declared gateways to gateway injection, which means deploying gateways using the following resources:

Minimal:

- Deployment

- Service

- Role

- RoleBinding

- NetworkPolicy
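As a rough illustration, the minimal set above might look like the following for an ingress gateway. This is a sketch rather than a supported configuration: the `istio-ingress` namespace, the resource names and the port numbers are assumptions, and the namespace is assumed to already be a member of the mesh so that injection applies.

```yaml
# Hypothetical names and namespace; adjust to your environment.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: istio-ingressgateway
  namespace: istio-ingress
spec:
  selector:
    matchLabels:
      istio: ingressgateway
  template:
    metadata:
      annotations:
        inject.istio.io/templates: gateway   # use the gateway injection template
      labels:
        istio: ingressgateway
        sidecar.istio.io/inject: "true"      # opt this pod in to injection
    spec:
      containers:
      - name: istio-proxy
        image: auto                          # placeholder, replaced by the injector
---
apiVersion: v1
kind: Service
metadata:
  name: istio-ingressgateway
  namespace: istio-ingress
spec:
  type: ClusterIP
  selector:
    istio: ingressgateway
  ports:
  - name: http2
    port: 80
    targetPort: 8080
  - name: https
    port: 443
    targetPort: 8443
---
# Lets the gateway read TLS secrets in its own namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: secret-reader
  namespace: istio-ingress
rules:
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["get", "watch", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: istio-ingressgateway-secret-reader
  namespace: istio-ingress
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: secret-reader
subjects:
- kind: ServiceAccount
  name: default                              # the Deployment above runs as "default"
  namespace: istio-ingress
---
# Allows inbound traffic to reach the gateway pods from any source.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: gatewayingress
  namespace: istio-ingress
spec:
  podSelector:
    matchLabels:
      istio: ingressgateway
  ingress:
  - {}
  policyTypes:
  - Ingress
```

Keeping these manifests next to the application they serve is what allows the gateway to be versioned and rolled out with that application.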

Additional (recommended):

- HorizontalPodAutoscaler

- PodDisruptionBudget
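The two recommended additions could be sketched as follows. The target Deployment name `istio-ingressgateway`, the `istio-ingress` namespace, the `istio: ingressgateway` label and the replica and CPU thresholds are illustrative assumptions, not tuned recommendations.

```yaml
# Scales a hypothetical gateway Deployment on CPU utilization.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: istio-ingressgateway
  namespace: istio-ingress
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: istio-ingressgateway
  minReplicas: 2
  maxReplicas: 5
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 80
---
# Keeps at least one gateway pod available during voluntary disruptions.
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: istio-ingressgateway
  namespace: istio-ingress
spec:
  minAvailable: 1
  selector:
    matchLabels:
      istio: ingressgateway
```

Utilization-based scaling only works if the gateway container has CPU requests set, so it is worth checking what the injected proxy container ends up with.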

With this change we're following community best practices, as can be seen from the Istio documentation:

- “Using auto-injection for gateway deployments is recommended as it gives developers full control over the gateway deployment, while also simplifying operations. When a new upgrade is available, or a configuration has changed, gateway pods can be updated by simply restarting them. This makes the experience of operating a gateway deployment the same as operating sidecars.” istio.io - gateway injection

- "As a security best practice, it is recommended to deploy the gateway in a different namespace from the control plane." istio.io - deploying a gateway

- "It may be desired to enforce stricter physical isolation for sensitive services. This can offer a stronger defense-in-depth and help meet certain regulatory compliance guidelines." istio.io - isolate sensitive services

To remove the default gateways from the control plane, disable them in the SMCP as follows:

    apiVersion: maistra.io/v2
    kind: ServiceMeshControlPlane
    metadata:
      namespace: istio-system
    spec:
      gateways:
        egress:
          enabled: false
        ingress:
          enabled: false

If you want to migrate away from an SMCP-defined gateway, first deploy your custom gateway with gateway injection, then create a Route or a LoadBalancer-type Service for it (depending on how it is exposed), switch traffic over to it, and finally disable the SMCP-managed gateway. From then on, gateway deployments can be defined as part of the application deployment.
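For the exposure step, a Route pointing at the injected gateway's Service might look like the sketch below. The hostname, the `istio-ingress` namespace, the Service name `istio-ingressgateway` and its `http2` port name are all hypothetical.

```yaml
# Exposes a hypothetical gateway Service outside the cluster over plain HTTP.
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: istio-ingressgateway
  namespace: istio-ingress
spec:
  host: app.example.com            # assumed hostname
  to:
    kind: Service
    name: istio-ingressgateway     # the Service in front of the gateway pods
    weight: 100
  port:
    targetPort: http2              # named port on that Service
  wildcardPolicy: None
```

Once traffic is confirmed to flow through this Route, the SMCP-managed gateway can be disabled.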

In the future, the Kubernetes Gateway API can be used to create and manage both Istio and Kubernetes gateways using a common API. This feature is a Technology Preview in OpenShift Service Mesh 2.5, but will be generally available in time for OpenShift Service Mesh 3 and will eventually become the recommended approach for managing gateways across both Istio and Kubernetes. Red Hat is also working on an implementation of Gateway API for the Ingress operator.
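To give a feel for the common API, here is a minimal sketch of a Gateway and an HTTPRoute. It assumes the Gateway API CRDs are installed and that Gateway API support is enabled in the mesh. The `v1beta1` API version, the names, namespaces, hostname and backend Service are assumptions that depend on your environment.

```yaml
# A gateway managed through the Kubernetes Gateway API; all names are hypothetical.
apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: example-gateway
  namespace: istio-ingress
spec:
  gatewayClassName: istio          # ask Istio to implement this gateway
  listeners:
  - name: http
    hostname: "app.example.com"
    port: 80
    protocol: HTTP
    allowedRoutes:
      namespaces:
        from: All                  # let routes in other namespaces attach
---
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: example-route
  namespace: example-app
spec:
  parentRefs:
  - name: example-gateway
    namespace: istio-ingress
  hostnames:
  - "app.example.com"
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: example-service        # assumed application Service
      port: 8080
```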

Automatic Route Creation

Automatic route creation, also known as Istio OpenShift Routing (IOR), is a deprecated feature and should be disabled. It will be disabled by default in OpenShift Service Mesh 2.5 for new SMCP deployments and will be removed in Service Mesh 3. The feature was handy for development, but in production environments it created many challenges: it could create excess, unnecessary routes, it exhibited unpredictable behavior during upgrades, and it lacked the configurability of an independent Route resource. In practice, we found customers were much better served by explicitly creating Route resources so that they can be fully managed with other Gateway and application resources as part of a GitOps management model. For more information on how to create routes for ingress gateways, read Exposing a gateway to traffic outside the cluster by using OpenShift Routes. The IOR feature can be disabled through a parameter change in the SMCP (see: disabling automatic route creation):

    apiVersion: maistra.io/v2
    kind: ServiceMeshControlPlane
    metadata:
      namespace: istio-system
    spec:
      gateways:
        openshiftRoute:
          enabled: false

Observability Integrations

Going forward, integrations will be managed by separate operators and the OpenShift Service Mesh operator will only be responsible for managing Istio. Kiali will be managed by a standalone operator, while tracing will be supported via Red Hat OpenShift Distributed Tracing. Metrics and monitoring will be supported through OpenShift user-workload monitoring. Today, OpenShift Service Mesh installs a dedicated instance of Prometheus by default to collect metrics from a mesh. This ‘tooling bundle’ model of Service Mesh 2 is giving way to standalone operators and integrations, which provide greater flexibility, including the ability to integrate with third-party solutions.

Since OpenShift Service Mesh 2.3, it has been possible to integrate with OpenShift user-workload monitoring (see: Integrating with user-workload monitoring). We recommend making this integration now, as it aligns with the monitoring setup in OpenShift Service Mesh 3.
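As a hedged sketch of what that integration involves beyond the SMCP itself, the resources below turn on Prometheus metrics through a Telemetry resource and let user-workload monitoring scrape istiod. The resource names, the `istio: pilot` label and the `http-monitoring` port name are assumptions to verify against your own control plane. Proxy metrics typically need an additional PodMonitor in each application namespace, which is omitted here.

```yaml
# Assumes a "prometheus" extension provider is defined in the SMCP meshConfig.
apiVersion: telemetry.istio.io/v1alpha1
kind: Telemetry
metadata:
  name: enable-prometheus-metrics
  namespace: istio-system          # control plane namespace, so it applies mesh-wide
spec:
  metrics:
  - providers:
    - name: prometheus
---
# Scrapes control plane metrics from the istiod Service.
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: istiod-monitor
  namespace: istio-system
spec:
  targetLabels:
  - app
  selector:
    matchLabels:
      istio: pilot                 # label assumed to be present on the istiod Service
  endpoints:
  - port: http-monitoring          # assumed name of istiod's monitoring port
    interval: 30s
```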

You may also want to consider what your future integrations will look like and how you will decouple the components that were deployed by default as addons in Service Mesh 2. OpenShift Distributed Tracing 3, which is based on Tempo, is worth considering, as it is supported as of OpenShift Service Mesh 2.5. It is not the only option, however; the choice is yours. You can adopt whatever observability stack works best with this new, more decoupled model.
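If you go the Tempo route, the wiring might look roughly like the sketch below: an extension provider in the SMCP that points at the Tempo distributor, plus a Telemetry resource that selects it. The SMCP name `basic`, the provider name `tempo`, the `tracing-system` namespace and the distributor Service name are assumptions, and 100% sampling is only sensible for a demonstration.

```yaml
# SMCP fragment: register the tracing backend as an extension provider.
apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  name: basic                      # hypothetical SMCP name
  namespace: istio-system
spec:
  tracing:
    type: None                     # turn off the bundled tracing addon
  meshConfig:
    extensionProviders:
    - name: tempo
      zipkin:
        # Assumed Service name of a Tempo distributor in another namespace.
        service: tempo-sample-distributor.tracing-system.svc.cluster.local
        port: 9411
---
# Tell the mesh to send traces to that provider.
apiVersion: telemetry.istio.io/v1alpha1
kind: Telemetry
metadata:
  name: mesh-default
  namespace: istio-system
spec:
  tracing:
  - providers:
    - name: tempo
    randomSamplingPercentage: 100  # demonstration value; lower this in production
```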

The example below disables the included Prometheus and Grafana, integrates with user-workload monitoring, and connects to an external distributed tracing instance:

    apiVersion: maistra.io/v2
    kind: ServiceMeshControlPlane
    metadata:
      namespace: istio-system
    spec:
      addons:
        prometheus:
          enabled: false
        grafana:
          enabled: false
        kiali:
          name: kiali-user-workload-monitoring
      meshConfig:
        extensionProviders:
        - name: prometheus
          prometheus: {}
        - name: jaeger
          zipkin:
            service: jaeger-collector.istio-system.svc
            port: 9411
      tracing:
        type: None

Control Planes

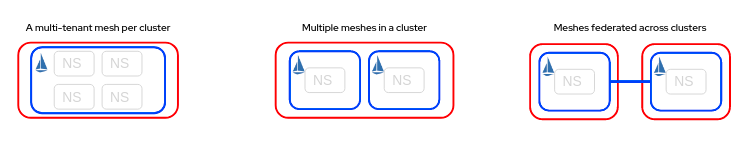

Depending on your chosen mesh topology, you may also have to think about changes to your mesh model. In OpenShift Service Mesh 2, you could have multiple control planes per cluster, or just use a single mesh per cluster. A few different examples are illustrated in the figure above.

If you're using a single mesh per cluster, you should enable cluster-wide mode. This mode became generally available in OpenShift Service Mesh 2.4, and migrating to it is strongly encouraged if it fits your mesh topology. Cluster-wide mode greatly reduces the number of Kubernetes API server calls required during a proxy reconciliation when a mesh has multiple namespaces, which improves performance for large meshes composed of many namespaces. The mode can be set up front for new meshes and, if you're using OpenShift Service Mesh 2.5 or later, enabled for an existing mesh.

To enable cluster-wide mode, configure the SMCP as follows:

    apiVersion: maistra.io/v2
    kind: ServiceMeshControlPlane
    metadata:
      namespace: istio-system
    spec:
      mode: ClusterWide

If you are deploying multiple meshes per cluster with OpenShift Service Mesh, note that the multiple control plane model will be different in OpenShift Service Mesh 3. As covered in a previous article, a new mechanism for deploying multiple control planes, based on a different model, is being introduced.

Summary

To round things up, there are a number of things you can do now to minimize what you’ll have to do later when you wish to transition to OpenShift Service Mesh 3. Namely these are:

- If you have yet to adopt service mesh and will only require a single mesh per cluster, use the cluster-wide topology mode.

- Move to gateway injection if you haven't already, thus removing existing SMCP defined gateway deployments.

- Disable automatic Route creation (Istio OpenShift Routing).

- Consider setting up external observability integrations now, moving off the integrated Prometheus, Grafana, Jaeger and Elasticsearch components to OpenShift user-workload monitoring and OpenShift Distributed Tracing 3.

About the authors

James Force is a Principal Consultant at Red Hat whose career spans system administration, infrastructure, operations, system engineering, and platform engineering. He is a passionate advocate of open source, cloud native technologies, and the UNIX philosophy.

Jamie Longmuir is the product manager leading Red Hat OpenShift Service Mesh. Prior to his journey as a product manager, Jamie spent much of his career as a software developer with a focus on distributed systems and cloud infrastructure automation. Along the way, he has had stints as a field engineer and training developer working for both small startups and large enterprises.

Software engineer in the Service Mesh team, Istio and Envoy contributor.
