Red Hat Enterprise Linux CoreOS (RHCOS) is the only operating system supported on the OpenShift control plane nodes.

Even though it is possible to deploy Red Hat Enterprise Linux (RHEL) worker nodes, RHCOS is generally the first choice for worker nodes due to the simplified management it provides.

RHCOS is a Red Hat product based on the upstream project Fedora CoreOS. RHCOS is supported only as a component of OpenShift Container Platform.

An immutable operating system

RHCOS is based on RHEL, which means that under the hood it uses the same bits.

The main difference from RHEL is that RHCOS provides controlled immutability: Many parts of the system can be modified only through specific tools and processes. The operating system starts from a generic disk image, which is customized on first boot by a provisioning utility named Ignition.

This method of managing the operating system simplifies the whole administration process: The nodes are completely provisioned by the cluster itself via the Machine Config Operator (MCO), which is responsible for the Ignition provisioning process of the nodes.

Thanks to this approach, OpenShift nodes are managed remotely by the cluster: The administrator does not need to deploy upgrades or patches to each node, because the cluster updates the nodes itself.

The Ignition provisioning process

Ignition uses JSON-formatted files to define the customization information.

The provisioning process is executed during the first boot of the system. Ignition is one of the very first tasks executed at boot, while the system is still running from the initramfs.

Ignition can partition disks, create filesystems, write files and directories, create users, and define systemd units, all before the userspace of the real root filesystem is started.
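To make the structure of such a file concrete, here is a minimal Python sketch that builds a small Ignition config as a dictionary and prints it as JSON. It assumes Ignition spec 3.2.0 (the version that appears in the OpenShift-generated files later in this article); the file path, the unit name print-file.service, and the SSH key are hypothetical placeholders. Disk partitioning and filesystem creation are omitted for brevity.

```python
import json


def minimal_ignition(ssh_key: str) -> dict:
    """Build a small Ignition spec 3.2.0 config as a Python dict.

    Illustrative only: the unit name, file path and key are hypothetical.
    """
    unit = (
        "[Unit]\n"
        "Description=Print /opt/file\n"
        "\n"
        "[Service]\n"
        "Type=oneshot\n"
        "ExecStart=/usr/bin/cat /opt/file\n"
        "\n"
        "[Install]\n"
        "WantedBy=multi-user.target\n"
    )
    return {
        "ignition": {"version": "3.2.0"},
        # Users: add an SSH public key to the existing 'core' user.
        "passwd": {"users": [{"name": "core", "sshAuthorizedKeys": [ssh_key]}]},
        # Files: contents are given as a data URL; mode is a decimal integer.
        "storage": {
            "files": [
                {
                    "path": "/opt/file",
                    "mode": 0o644,  # serialized as 420 in JSON
                    "contents": {"source": "data:,Hello%2C%20world!"},
                }
            ]
        },
        # systemd: Ignition writes and enables the unit from the initramfs.
        "systemd": {
            "units": [{"name": "print-file.service", "enabled": True, "contents": unit}]
        },
    }


if __name__ == "__main__":
    print(json.dumps(minimal_ignition("ssh-ed25519 AAAA... user@example.com"), indent=2))
```

JSON has no octal literals, so the file mode ends up in the config as the decimal value 420 (0644).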

How Ignition configurations are passed to the CoreOS system depends on the platform where the operating system is deployed. When installing onto bare-metal systems, coreos-installer copies the Ignition config into the /boot filesystem, from which it is read on first boot. The Ignition configuration URL is defined via the kernel parameter coreos.inst.ignition_url.

In other cases, the Ignition config is read from a metadata datastore provided by the underlying infrastructure (for example, cloud providers or hypervisors).

Inside an OpenShift cluster, Ignition configurations are served by the Machine Config Server (MCS).

The MCS is a component controlled by the Machine Config Operator that serves Ignition configurations rendered from MachineConfig objects.

Generating the Ignition files

As previously mentioned, Ignition configurations are plain JSON files. The CoreOS Ignition project defines the JSON schema specification.

Generating JSON Ignition files from scratch is possible, but it is error-prone and certainly not user-friendly. For that reason, the Fedora CoreOS project introduced Butane, a tool that parses human-readable YAML files and converts them into JSON Ignition configurations. During transpilation, Butane validates the syntax and detects errors before the Ignition file is consumed.

Butane defines its own schema specification: Each Butane spec version is translated into a specific Ignition spec version.

It is also possible to define a specific variant in order to create configurations for a specific environment. Currently, the supported variants are fcos and openshift.

The following examples clarify the use of the version and variant fields. In both, we use Butane to transpile a minimal configuration that creates a single file.

The first Butane config declares the fcos variant and version 1.1.0:

```shell
$ cat << EOF | butane --pretty
variant: fcos
version: 1.1.0
storage:
  files:
    - path: /opt/file
      contents:
        inline: Hello, world!
EOF
```

The command returns an Ignition configuration with version 3.1.0:

```json
{
  "ignition": {
    "version": "3.1.0"
  },
  "storage": {
    "files": [
      {
        "path": "/opt/file",
        "contents": {
          "source": "data:,Hello%2C%20world!"
        }
      }
    ]
  }
}
```
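In the output above, the inline contents were turned into a percent-encoded data URL. The following Python sketch approximates that encoding for short text and decodes it back. The set of characters left unescaped is an assumption chosen to reproduce this output, not Butane's actual implementation (Butane may also compress larger contents).

```python
from urllib.parse import quote, unquote

# Characters kept as-is: the RFC 2396 "unreserved" marks (letters and digits
# are always kept by quote()). This choice is an assumption that happens to
# reproduce the output above; it is not taken from Butane's source code.
SAFE_CHARS = "-_.!~*'()"


def inline_to_data_url(text: str) -> str:
    """Percent-encode inline text as a plain 'data:,' URL."""
    return "data:," + quote(text, safe=SAFE_CHARS)


def data_url_to_text(url: str) -> str:
    """Decode a plain (non-base64) 'data:,' URL back to text."""
    prefix = "data:,"
    if not url.startswith(prefix):
        raise ValueError("only plain 'data:,' URLs are handled by this sketch")
    return unquote(url[len(prefix):])


if __name__ == "__main__":
    print(inline_to_data_url("Hello, world!"))  # data:,Hello%2C%20world!
```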

In this second example, we use an openshift variant and version 4.9.0:

```shell
$ cat << EOF | butane --pretty
variant: openshift
version: 4.9.0
metadata:
  name: 99-ocp-test
  labels:
    machineconfiguration.openshift.io/role: master
storage:
  files:
    - path: /opt/file
      contents:
        inline: Hello, world!
EOF
```

The result is an OpenShift MachineConfig object:

```yaml
# Generated by Butane; do not edit
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  labels:
    machineconfiguration.openshift.io/role: master
  name: 99-ocp-test
spec:
  config:
    ignition:
      version: 3.2.0
    storage:
      files:
        - contents:
            source: data:,Hello%2C%20world!
          path: /opt/file
```

Note that even though the openshift variant requires some additional fields (the metadata section), most of the Butane config is the same as in the previous example.

Butane can also generate a JSON Ignition configuration from an openshift variant config using the --raw command-line option. This is very handy when the same configuration must be applied both through a MachineConfig object and as a plain JSON Ignition config.
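Since the spec.config field of a MachineConfig holds an Ignition config, the relationship between the two outputs can be sketched in Python: wrapping a config produces the MachineConfig shape shown above, and unwrapping it gives roughly what --raw emits. This is an illustrative sketch under that assumption, with hypothetical helper names; it is not Butane's code.

```python
import json


def wrap_in_machineconfig(name: str, role: str, ignition: dict) -> dict:
    """Wrap an Ignition config in a MachineConfig object (illustrative sketch)."""
    return {
        "apiVersion": "machineconfiguration.openshift.io/v1",
        "kind": "MachineConfig",
        "metadata": {
            "name": name,
            "labels": {"machineconfiguration.openshift.io/role": role},
        },
        # The Ignition config is embedded unchanged under spec.config.
        "spec": {"config": ignition},
    }


def unwrap_ignition(machineconfig: dict) -> dict:
    """Extract the embedded Ignition config from a MachineConfig object."""
    return machineconfig["spec"]["config"]


if __name__ == "__main__":
    ign = {
        "ignition": {"version": "3.2.0"},
        "storage": {"files": [{"path": "/opt/file",
                               "contents": {"source": "data:,Hello%2C%20world!"}}]},
    }
    print(json.dumps(wrap_in_machineconfig("99-ocp-test", "master", ign), indent=2))
```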

OpenShift installation process

At this point, it should be clear that Ignition is a fundamental part of the OpenShift deployment process.

In order to understand how Ignition is involved in the installation phase, we will dissect the so-called “platform agnostic” installation method.

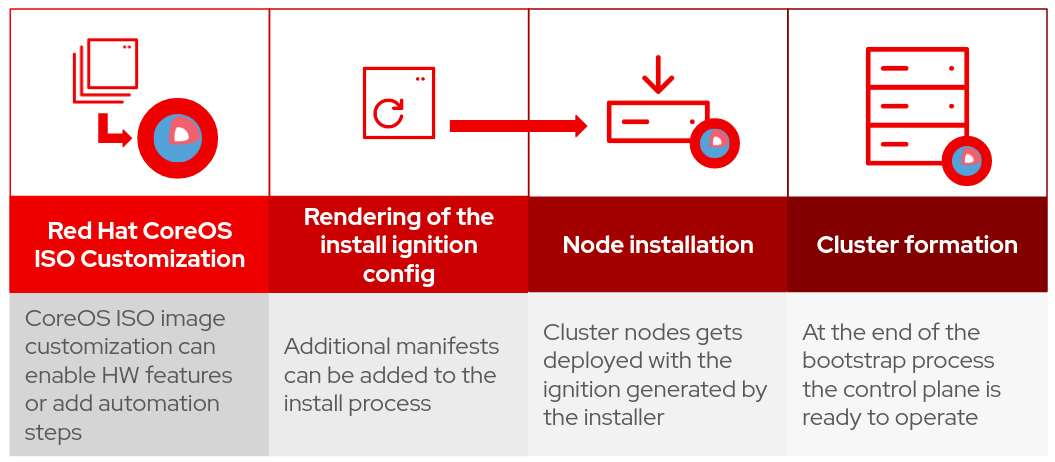

The installation process can be briefly summarised in a four main steps:

Fig 1: The installation process

The install-config.yaml is the very first manifest you create for your cluster.

It describes the fundamental properties of the cluster, such as the cluster name, networks, the global pull secret, the public SSH key to be deployed on the nodes, the cluster-wide proxy, and others.

Please refer to the documentation for more details.

The install-config.yaml must be placed in an installation folder (install-dir); then you can generate the manifest files with the following command:

```shell
$ ./openshift-install create manifests --dir install-dir
```

This command generates a number of YAML manifests:

```shell
$ tree install-dir
install-dir/
├── manifests
│   ├── 04-openshift-machine-config-operator.yaml
│   ├── cluster-config.yaml
│   [...]
│   ├── openshift-config-secret-pull-secret.yaml
│   └── openshift-kubevirt-infra-namespace.yaml
└── openshift
    ├── 99_kubeadmin-password-secret.yaml
    [...]
    ├── 99_openshift-machineconfig_99-worker-ssh.yaml
    └── openshift-install-manifests.yaml
```

The generated manifests describe, in the Kubernetes and OpenShift API format, all the objects needed to set up an OpenShift cluster.

Since Kubernetes or OpenShift API manifests are not directly consumable by Red Hat CoreOS, the next step converts those YAML manifests into Ignition files that can be provided to the cluster nodes.

The following command renders the YAML manifests into the Ignition configurations that we will provide to the nodes:

```shell
$ ./openshift-install create ignition-configs --dir install-dir
$ tree install-dir
install-dir/
├── auth
│   ├── kubeadmin-password
│   └── kubeconfig
├── bootstrap.ign
├── master.ign
├── metadata.json
└── worker.ign
```

We now have the Ignition files for bootstrap, worker, and control plane nodes (bootstrap.ign, worker.ign and master.ign).

Looking at the generated Ignition configurations, we can see that master.ign and worker.ign are just pointers to another Ignition source hosted on the cluster's Machine Config Server:

```shell
$ jq . install-dir/master.ign
{
  "ignition": {
    "config": {
      "merge": [
        {
          "source": "https://api-int.cluster.example.com:22623/config/master"
        }
      ]
    },
    "security": {
      "tls": {
        "certificateAuthorities": [
          {
            "source": "data:text/plain;charset=utf-8;base64,LS0t[...]aaHg=="
          }
        ]
      }
    },
    "version": "3.2.0"
  }
}
```

As you can see, the master and worker Ignition files point to the api-int URL, which must resolve to the internal load balancer.

But if the cluster is not up yet, what serves HTTPS on TCP port 22623?

The bootstrap node solves this chicken-and-egg problem: It temporarily serves the Ignition files before handing over all the control plane functions to the master nodes.
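The pointer structure of master.ign can also be inspected programmatically. This minimal Python sketch, equivalent in spirit to the jq command above, lists the merge sources and splits each one into host, port, and path; the script and helper names are hypothetical.

```python
import json
import sys
from typing import List, Optional, Tuple
from urllib.parse import urlsplit


def merge_sources(ign_text: str) -> List[str]:
    """Return the URLs listed under ignition.config.merge."""
    cfg = json.loads(ign_text)
    merge = cfg.get("ignition", {}).get("config", {}).get("merge", [])
    return [entry["source"] for entry in merge]


def mcs_endpoint(url: str) -> Tuple[Optional[str], Optional[int], str]:
    """Split a Machine Config Server URL into host, port and path."""
    parts = urlsplit(url)
    return parts.hostname, parts.port, parts.path


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage (hypothetical script name): python3 show_pointer.py install-dir/master.ign
    with open(sys.argv[1], encoding="utf-8") as f:
        for url in merge_sources(f.read()):
            print(url, mcs_endpoint(url))
```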

At this point, we have to publish the Ignition files on a staging web server reachable by the nodes.

To detect corrupted Ignition files, it is good practice to compute their SHA512 checksums, which are later passed to coreos-installer:

```shell
$ sha512sum *ign
7c2f[...]3da2b1003e92030 bootstrap.ign
5e37[...]de8b7eed1797ee0 master.ign
c694[...]782a564fc7c9acf worker.ign
```
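If you prefer to compute the checksums programmatically, the following Python sketch produces the same hex digests as sha512sum and formats them in the SHA512-<hex> form used with --ignition-hash in this article. The script and function names are hypothetical.

```python
import hashlib
import sys


def sha512_bytes(data: bytes) -> str:
    """Return the hex SHA512 digest of an in-memory buffer."""
    return hashlib.sha512(data).hexdigest()


def sha512_file(path: str, chunk_size: int = 65536) -> str:
    """Return the hex SHA512 digest of a file, reading it in chunks."""
    digest = hashlib.sha512()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def ignition_hash_option(hex_digest: str) -> str:
    """Format a digest the way this article passes it to coreos-installer."""
    return "--ignition-hash=SHA512-" + hex_digest


if __name__ == "__main__":
    # e.g. python3 ign_hash.py bootstrap.ign master.ign worker.ign (hypothetical name)
    for name in sys.argv[1:]:
        print(ignition_hash_option(sha512_file(name)), name)
```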

The installation of the cluster nodes is performed from a live version of CoreOS: The nodes are booted from a live ISO image of Red Hat CoreOS; the ISO provides the coreos-installer tool that will perform the installation of RHCOS on the node disks.

The first node to be deployed is the bootstrap node. Boot it from the live Red Hat CoreOS ISO image; once the live image has finished loading, you should get a shell prompt. You can then install Red Hat CoreOS on the node by executing the command:

```shell
$ sudo coreos-installer install --ignition-url=http://<HTTP_server>/bootstrap.ign <storage-device> --ignition-hash=SHA512-7c2f[...]3da2b1003e92030
```

The installation completes in a few minutes; then reboot the node, making sure that it boots from the disk device identified by the <storage-device> parameter.

The bootstrap node now waits for the other nodes to join the cluster, so we repeat the procedure for all three control plane nodes and, later, for the worker nodes.

Make sure that the --ignition-url parameter points to the Ignition file matching the node type (master.ign or worker.ign), and that --ignition-hash carries the corresponding checksum.

As mentioned above, master.ign and worker.ign reference the internal API endpoint on port 22623, which during the installation is served by the Machine Config Server running on the bootstrap node.

For that reason, it is fundamental that the load balancer is up, running, and forwarding connections to the bootstrap and control plane nodes. Please refer to the OCP documentation for the full list of prerequisites.

Fig 2: The bootstrap process

After some time, at the end of the process, the control plane should be up and running, and it should be possible to interact with the cluster using the oc CLI.

We have completed the cluster installation.

Red Hat CoreOS Customization

As we have seen, the installation process uses RHCOS in two different phases:

- as a live image running an ephemeral operating system

- as a persistent operating system on the cluster nodes

In both stages, Red Hat CoreOS can be customized using Ignition.

The Ignition files generated by the OpenShift installer are rendered from MachineConfig objects. The same MachineConfig manifests will also be used by the Machine Config Operator.

The live ISO image, instead, uses plain Ignition JSON files.

Customizing the live ISO image

Customizing the live ISO image can be helpful to implement some automation or to add features to the ISO image, such as enabling a particular hardware device that must be available during the installation phase of CoreOS (for example, a network or storage device).

It is important to highlight that ISO customizations are not applied to the permanent installation of RHCOS.

Injecting an Ignition configuration into the ISO image is a straightforward operation:

- As a first step, we have to generate the Ignition JSON file.

- We need to download the ISO from the Red Hat OpenShift mirror website.

- With the coreos-installer tool, we can inject the Ignition file.

Let’s look at an example that injects an Ignition configuration deploying a simple systemd unit named foo.service.

For convenience, we will use Butane to generate the Ignition file. In this way, we can easily adapt the Butane configuration to generate the MachineConfig objects out of the same content.

Butane can be used directly from the binary or in a container. In the following example, we will use the latter, so first we have to download the Butane image:

```shell
$ podman pull quay.io/coreos/butane:release
```

We can now download the CoreOS image:

```shell
$ curl -s -o rhcos-live.x86_64.iso https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/4.9/4.9.0/rhcos-live.x86_64.iso
```

At this point, we have to create the Butane config to deploy the foo.service systemd unit.

The autologin feature of the live image is disabled when a custom Ignition config is injected. As a result, we also need to deploy a systemd drop-in that restores autologin.

The autologin is needed to manually run the installer or provide network customizations. We will see later how we can make the whole process unattended.

Following is the live.bu Butane config that will be injected into the ISO image. Note that metadata.name and metadata.labels are defined only because the openshift variant requires them; they are not relevant in this step, because we will generate a plain JSON Ignition config.

```yaml
variant: openshift
version: 4.9.0
metadata:
  name: 99-worker-dummy
  labels:
    machineconfiguration.openshift.io/role: worker
systemd:
  units:
    # the foo.service unit
    - name: foo.service
      enabled: true
      contents: |
        [Unit]
        Description=Setup foo
        After=network.target
        [Service]
        Type=oneshot
        ExecStart=/usr/local/bin/start-foo.sh
        RemainAfterExit=true
        ExecStop=/usr/local/bin/stop-foo.sh
        StandardOutput=journal
        [Install]
        WantedBy=multi-user.target
    # the autologin drop-in
    - name: getty@tty1.service
      dropins:
        - name: autologin.conf
          contents: |
            [Service]
            TTYVTDisallocate=no
            ExecStart=
            ExecStart=-/usr/sbin/agetty --autologin core --noclear %I xterm-256color
# the files needed by the foo.service unit
storage:
  files:
    - path: /usr/local/bin/start-foo.sh
      mode: 0755
      contents:
        inline: |
          #!/bin/bash
          echo "Starting foo service"
          touch /tmp/foo-service
    - path: /usr/local/bin/stop-foo.sh
      mode: 0755
      contents:
        inline: |
          #!/bin/bash
          echo "Stopping foo service"
          # check the same marker file that start-foo.sh creates
          if [ -f /tmp/foo-service ]; then
            rm /tmp/foo-service
          else
            echo "foo service is not running."
          fi
```

Using Butane we can now generate the Ignition JSON file:

```shell
# no --tty here: a pseudo-terminal can add carriage returns to redirected output
$ podman run --rm --interactive \
    --volume ${PWD}:/pwd:z --workdir /pwd \
    quay.io/coreos/butane:release \
    --pretty --strict --raw ./live.bu > ./live.ign
```

This command will generate a live.ign JSON file that we are now ready to inject into the Red Hat CoreOS live image.

To inject the Ignition file, we will use the coreos-installer command. As with Butane, we will run it from a container, so first we have to pull the image:

```shell
$ podman pull quay.io/coreos/coreos-installer:release
```

Injecting the Ignition config takes a single command:

```shell
$ podman run --rm --tty --interactive \
    --volume ${PWD}:/data:z --workdir /data \
    quay.io/coreos/coreos-installer:release \
    iso ignition embed -i ./live.ign ./rhcos-live.x86_64.iso
```

Now we are ready to boot the node from the live ISO image and check whether the foo service is active on the live operating system:

```shell
[core@localhost ~]$ systemctl is-active foo
active
```

Node customization

As we described before, the Ignition configurations of the cluster nodes are generated by the OpenShift installer. In this section, we will see how to inject an Ignition config at install time.

As seen before, after the creation of the install-config.yaml manifest, we execute the openshift-install create manifests command. This command generates the YAML manifests that will be converted into the Ignition config files bootstrap.ign, master.ign, and worker.ign.

Inspecting the generated manifests, we can also find some MachineConfig objects. For example, the file openshift/99_openshift-machineconfig_99-worker-ssh.yaml contains the MachineConfig definition of the core user SSH public key to be deployed on the worker nodes.

In this phase, it is possible to add a MachineConfig definition to the openshift directory. This object will be merged into the MachineConfigs handled by the MCO; thus, the rendered Ignition config will be served by the Machine Config Server running on the bootstrap node during the cluster bootstrap.

Following the previous example, we will now generate the MachineConfig that enables our foo.service systemd unit.

In this case, for obvious security reasons, we must not enable autologin, so we omit the autologin.conf systemd drop-in.

Since Butane is able to generate a proper MachineConfig manifest, we will use the same process we already saw before. This gives us the advantage that we can reuse most of the Butane config we already created for the live ISO image.

Following is the openshift-worker-foo.bu Butane config file. Please note that:

- The variant value is openshift. Since we will not use the --raw option, this instructs Butane to generate a MachineConfig manifest.

- The version field must be set according to the OpenShift version that is going to be installed.

- We must define the metadata section, including the name of the MachineConfig object and the node role label.

```yaml
variant: openshift
version: 4.9.0
metadata:
  name: 99-worker-dummy
  labels:
    machineconfiguration.openshift.io/role: worker
systemd:
  units:
    - name: foo.service
      enabled: true
      contents: |
        [Unit]
        Description=Setup foo
        After=network.target
        [Service]
        Type=oneshot
        ExecStart=/usr/local/bin/start-foo.sh
        RemainAfterExit=true
        ExecStop=/usr/local/bin/stop-foo.sh
        StandardOutput=journal
        [Install]
        WantedBy=multi-user.target
storage:
  files:
    - path: /usr/local/bin/start-foo.sh
      mode: 0755
      contents:
        inline: |
          #!/bin/bash
          echo "Starting foo service"
          touch /tmp/foo-service
    - path: /usr/local/bin/stop-foo.sh
      mode: 0755
      contents:
        inline: |
          #!/bin/bash
          echo "Stopping foo service"
          # check the same marker file that start-foo.sh creates
          if [ -f /tmp/foo-service ]; then
            rm /tmp/foo-service
          else
            echo "Not running ..."
          fi
```

Now we can use the Butane tool to generate the MachineConfig manifest:

```shell
# no --tty here: a pseudo-terminal can add carriage returns to redirected output
$ podman run --rm --interactive \
    --volume ${PWD}:/pwd:z --workdir /pwd \
    quay.io/coreos/butane:release \
    ./openshift-worker-foo.bu > 98-openshift-worker-foo.yaml
```

The file 98-openshift-worker-foo.yaml can now be copied into the openshift directory, after generating the manifests and before generating the Ignition configs:

```shell
$ ./openshift-install create manifests --dir install-dir
$ cp 98-openshift-worker-foo.yaml install-dir/openshift/
$ ./openshift-install create ignition-configs --dir install-dir
```

Now the install-dir folder contains the JSON Ignition configurations. We can double-check that the bootstrap file contains our manifest. The following command should return the same MachineConfig generated by Butane:

```shell
$ cat install-dir/bootstrap.ign | \
    jq -r '.storage.files[] |
      select(.path=="/opt/openshift/openshift/98-openshift-worker-foo.yaml") |
      .contents.source' | cut -d , -f2 | base64 -d
```
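The same check can be written in Python without jq, cut, or base64. This sketch, with hypothetical helper and script names, looks up a file entry by path in an Ignition config and decodes its data URL, handling both base64 and percent-encoded sources. It also handles gzip-compressed contents, on the assumption that such entries carry "compression": "gzip" as allowed by recent Ignition specs.

```python
import base64
import gzip
import json
import sys
from urllib.parse import unquote_to_bytes


def decode_data_url(url: str) -> bytes:
    """Decode an RFC 2397 data URL, either base64 or percent-encoded."""
    if not url.startswith("data:"):
        raise ValueError("not a data URL: " + url[:20])
    header, _, payload = url[len("data:"):].partition(",")
    if header.endswith(";base64"):
        return base64.b64decode(payload)
    return unquote_to_bytes(payload)


def embedded_file(ign_text: str, path: str) -> bytes:
    """Return the decoded contents of the storage.files entry matching path."""
    cfg = json.loads(ign_text)
    for entry in cfg.get("storage", {}).get("files", []):
        if entry.get("path") == path:
            contents = entry["contents"]
            data = decode_data_url(contents["source"])
            # Contents may be marked as gzip-compressed.
            if contents.get("compression") == "gzip":
                data = gzip.decompress(data)
            return data
    raise KeyError(path)


if __name__ == "__main__" and len(sys.argv) == 3:
    # e.g. python3 show_file.py install-dir/bootstrap.ign \
    #        /opt/openshift/openshift/98-openshift-worker-foo.yaml
    with open(sys.argv[1], encoding="utf-8") as f:
        sys.stdout.write(embedded_file(f.read(), sys.argv[2]).decode("utf-8"))
```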

The generated Ignition files must be copied to a web server to be available for the installation.

At this point, after booting the bootstrap node from the live RHCOS ISO image, we can proceed with the installation of the node using the bootstrap.ign config file:

```shell
$ sudo coreos-installer install \
    --ignition-url=http://<HTTP_server>/bootstrap.ign <storage-device> \
    --ignition-hash=SHA512-7c2f[...]3da2b1003e92030
```

The Ignition config file can also be delivered to the installer via a local path (for example, by mounting a USB stick containing the file). In this case, use the --ignition-file option instead.

It is now possible to proceed with the master and worker nodes installation following the same procedure.

At the end of the installation, the worker nodes will have the foo.service deployed and activated.

It is important to remember that, since Ignition runs during the initramfs phase of the first boot, foo.service is available right away, as soon as the system leaves the initramfs.

Network configuration

Network configuration of the nodes, such as static IP addresses or bond connections, can be managed via custom Ignition files that directly inject NetworkManager configuration files.

However, this is not a very handy approach, because per-node settings such as static IP addresses would require a dedicated MachineConfig pool for each node. A better alternative is to use dracut and the --copy-network option of coreos-installer.

Manual configuration

The Red Hat CoreOS live image includes the NetworkManager tools nmcli and nmtui.

After booting the live image, it is possible to configure the network of the node directly with nmtui or nmcli. In this way, it is possible to set static IPs, VLANs, or any other setting.

Fig. 3: The nmtui user interface

After configuring the network of the live system, it is possible to transfer the network configuration from the live system to the persistent operating system on the nodes using the --copy-network option:

```shell
$ sudo coreos-installer install \
    --ignition-url=http://<HTTP_server>/bootstrap.ign \
    <storage-device> \
    --ignition-hash=SHA512-7c2f[...]3da2b1003e92030 \
    --copy-network
```

The --copy-network option copies the content of /etc/NetworkManager/system-connections/ from the live system to the storage device of the installed node, making the network settings persistent.

Dracut

The dracut utility can create network configurations based on kernel boot arguments.

For a comprehensive list of supported arguments, please refer to the documentation.

By leveraging dracut, it is possible to define the entire network configuration as kernel boot parameters, avoiding manual configuration after boot.

As an example, the following kernel boot parameter starts the live image configuring the network interface enp1s0 with the IP address 192.168.122.2/24 and 192.168.122.1 as both DNS server and gateway (the autoconfiguration value none selects a static configuration):

```
ip=192.168.122.2::192.168.122.1:255.255.255.0::enp1s0:none:192.168.122.1
```

As a more complex example, we can set up a bond interface named bond0 with enp1s0 and enp7s0 as member devices (in the bond= argument, the member list and the bonding options are separated by a colon):

```
bond=bond0:enp1s0,enp7s0:mode=balance-rr
ip=192.168.122.2::192.168.122.1:255.255.255.0::bond0:none:192.168.122.1
```

Fig. 4: Booting RHEL CoreOS with network parameters

As an additional example, we can add a VLAN device on top of the bond:

```
bond=bond0:enp1s0,enp7s0:mode=balance-rr
vlan=bond0.155:bond0
ip=192.168.122.2::192.168.122.1:255.255.255.0::bond0.155:none:192.168.122.1
```
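Because the ip= fields are positional, it is easy to misplace a colon. The following Python sketch assembles these arguments from named values, following the dracut field order <client-IP>:[<peer>]:<gateway>:<netmask>:<hostname>:<interface>:<autoconf>[:<dns>]. It assumes none as the autoconfiguration value for a static address and separates bond members from bonding options with a colon; the helper names are hypothetical.

```python
import ipaddress
from typing import List, Optional


def ip_arg(cidr: str, gateway: str, interface: str,
           dns: Optional[str] = None, hostname: str = "",
           autoconf: str = "none") -> str:
    """Build a dracut static 'ip=' argument.

    Field order: client-IP:peer:gateway:netmask:hostname:interface:autoconf[:dns]
    The peer field is left empty, as in the examples above.
    """
    iface = ipaddress.IPv4Interface(cidr)  # e.g. "192.168.122.2/24"
    fields = [str(iface.ip), "", gateway, str(iface.netmask), hostname, interface, autoconf]
    if dns:
        fields.append(dns)
    return "ip=" + ":".join(fields)


def bond_arg(name: str, members: List[str], options: str = "mode=balance-rr") -> str:
    """Build a dracut 'bond=' argument: <bondname>:<members>:<options>."""
    return f"bond={name}:{','.join(members)}:{options}"


def vlan_arg(vlan_name: str, parent: str) -> str:
    """Build a dracut 'vlan=' argument: <vlanname>:<parent device>."""
    return f"vlan={vlan_name}:{parent}"


if __name__ == "__main__":
    args = [
        bond_arg("bond0", ["enp1s0", "enp7s0"]),
        vlan_arg("bond0.155", "bond0"),
        ip_arg("192.168.122.2/24", "192.168.122.1", "bond0.155", dns="192.168.122.1"),
    ]
    print(" ".join(args))
```

Deriving the netmask from CIDR notation with the ipaddress module avoids a second, hand-typed copy of the prefix length.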

Automating the Red Hat CoreOS installation

Red Hat CoreOS can run the coreos-installer command automatically if proper kernel boot parameters are set:

- coreos.inst.install_dev defines the target device on which to install the OS.

- coreos.inst.ignition_url defines the URL of the Ignition file.

Triggering the automated coreos-installer makes all the kernel parameters persist; thus, the network configuration will also be permanent.

In this way, everything is defined at boot time, and after entering the kernel arguments, the installation on the node will be fully unattended.
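The full kernel command line for such an unattended installation is simply the coreos.inst.* parameters followed by the network arguments. The Python sketch below joins them; the device /dev/sda, the Ignition URL, and the http(s)-only check are assumptions for illustration, not coreos-installer requirements.

```python
from typing import Sequence
from urllib.parse import urlsplit


def automated_install_kargs(install_dev: str, ignition_url: str,
                            network_args: Sequence[str] = ()) -> str:
    """Join coreos.inst.* and network arguments into one kernel command line."""
    # Hypothetical sanity check: this sketch only accepts http(s) URLs.
    if urlsplit(ignition_url).scheme not in ("http", "https"):
        raise ValueError("expected an http(s) Ignition URL, got: " + ignition_url)
    args = [
        f"coreos.inst.install_dev={install_dev}",
        f"coreos.inst.ignition_url={ignition_url}",
    ]
    args.extend(network_args)
    return " ".join(args)


if __name__ == "__main__":
    # Device, URL and addresses below are placeholders for illustration.
    print(automated_install_kargs(
        "/dev/sda",
        "http://192.168.122.10/worker.ign",
        ["ip=192.168.122.2::192.168.122.1:255.255.255.0::enp1s0:none:192.168.122.1"],
    ))
```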

Fig. 5: Booting RHEL CoreOS with network parameters and install instructions

A further automation step is to use an iPXE staging environment to provide the proper kernel arguments. In this way, it is possible to set up a fully unattended cluster installation.


Conclusions

Red Hat Enterprise Linux CoreOS is the operating system for OpenShift cluster nodes. RHCOS, in conjunction with the OpenShift management functionality, simplifies the administration and management of the cluster nodes by centralizing those tasks and making it easier to apply known configuration to the nodes. CoreOS can be customized via the Ignition provisioning process.

The platform agnostic installation method uses a live CoreOS ISO image as a staging ephemeral system to persistently install the operating system on the nodes via the coreos-installer command.

Customizing the live ISO can be helpful if some hardware feature must be activated before the installation of the operating system on the node disk. In this article, we described how to generate an Ignition configuration and how to inject it into the ISO image.

Customizing the nodes requires integration with the Machine Config Operator, that is, generating MachineConfig objects that the OpenShift installer converts into Ignition JSON files.

Fig 6: The installation process

Supportability considerations

Some important notes need to be considered during the customization of Red Hat CoreOS images and nodes.

Red Hat supports the injection of MachineConfig YAML manifests during the cluster installation only if mentioned in the official documentation (for example, the disk partitions configuration).

Red Hat does not provide support for custom scripts, systemd units, or any other content that is not explicitly mentioned in the official production documentation.

The customization of the ISO live image is not supported by Red Hat; thus, Red Hat cannot guarantee bug fixing, troubleshooting, or assistance regarding those customization tasks to customers.

The OpenShift and the CoreOS roadmap include some new tools to simplify the customization workflow. Stay tuned for a second part of this article.

About the author

Pietro Bertera is a Senior Technical Account Manager working with Red Hat OpenShift. He is based in Italy.
