Being able to enforce airtight application security at the cluster-wide level has been a popular ask from cluster administrators. Key admin user stories include:

- As a cluster administrator, I want to enforce non-overridable admin network policies that have to be adhered to by all user tenants in the cluster, thus securing my cluster’s network traffic.

- As a cluster administrator, I want to implement specific network restrictions across multiple tenants (a tenant here refers to one or more namespaces), thus facilitating network multi-tenancy.

- As a cluster administrator, I want to enforce the tenant isolation model (a tenant cannot receive traffic from any other tenant) as the cluster’s default network security standard, thus delegating the responsibility of explicitly allowing traffic from other tenants to the tenant owner using NetworkPolicies.

NetworkPolicies in Kubernetes were created for developers to be able to secure their applications. Hence they are under the control of potentially untrusted application authors. They are namespace scoped and are not suitable for solving the above user stories for cluster administrators.

Starting from OCP 4.14, a new TechPreview OpenShift Networking feature called AdminNetworkPolicy has been designed precisely to solve these problems. It provides a comprehensive cluster-wide network security solution for cluster administrators. In this post, we will explore how AdminNetworkPolicies work and how they interact with NetworkPolicies on an OpenShift cluster with OVN-Kubernetes as the CNI plugin.

What are AdminNetworkPolicy (ANP) and BaselineAdminNetworkPolicy (BANP)?

Starting from OCP 4.14, the AdminNetworkPolicy and BaselineAdminNetworkPolicy APIs are installed on a TechPreviewNoUpgrade OpenShift cluster. They are cluster-scoped Custom Resource Definitions (CRDs) shipped by the sig-network-policy-api working group as out-of-tree Kubernetes APIs. Both APIs can be used to define cluster-wide admin policies that secure your cluster’s network traffic. They are currently at version v1alpha1 and support only intra-cluster use cases.

New features, such as easier and more accurate tenancy expressions, cluster egress traffic controls, and FQDN support, are being worked on within the upstream community. These APIs are subject to change in future versions, and policies created in 4.14 may not work in newer releases, which is why this feature is only available on TechPreviewNoUpgrade clusters.

```
$ oc get crd adminnetworkpolicies.policy.networking.k8s.io
NAME                                            CREATED AT
adminnetworkpolicies.policy.networking.k8s.io   2023-10-01T11:33:58Z

$ oc get crd baselineadminnetworkpolicies.policy.networking.k8s.io
NAME                                                    CREATED AT
baselineadminnetworkpolicies.policy.networking.k8s.io   2023-10-01T11:33:58Z
```

Integration with NetworkPolicy API

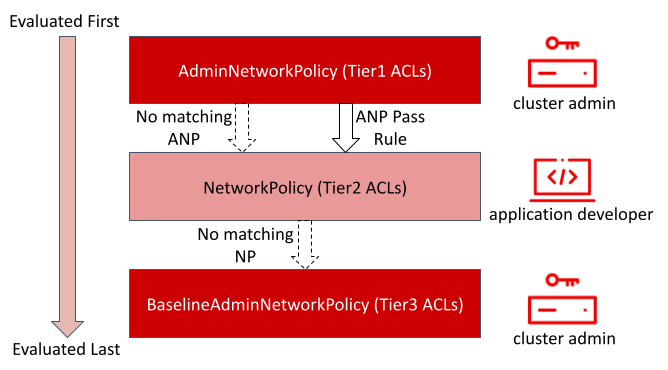

ANP and BANP are designed to complement and coexist with the NetworkPolicy API within a cluster. The AdminNetworkPolicies have the highest precedence on a cluster. They are implemented in OVN-Kubernetes using OVN’s tiered Access Control Lists (ACLs).

If traffic matches an ANP, the rules in that ANP are evaluated first. If the match is on an ANP allow or deny rule, any NetworkPolicies and the BaselineAdminNetworkPolicy in the cluster are intentionally skipped. If the match is on an ANP pass rule, or no ANP matches, evaluation moves from AdminNetworkPolicies to NetworkPolicies. If a NetworkPolicy matches the traffic, the BaselineAdminNetworkPolicy is intentionally skipped. If no NetworkPolicy matches, evaluation moves to the BaselineAdminNetworkPolicy, and a matching BANP rule takes effect. In OVN-Kubernetes, ANPs correspond to tier1 ACLs, NetworkPolicies to tier2 ACLs, and the BANP to tier3 ACLs.
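The evaluation order described above can be modeled in a few lines of Python. This is only an illustrative sketch, not how OVN-Kubernetes implements it: the `evaluate` function and its parameters are hypothetical names, and the final fallback (allow when no policy of any kind applies) reflects the general Kubernetes default rather than anything stated in this post.

```python
from enum import Enum
from typing import Optional


class Verdict(Enum):
    ALLOW = "Allow"
    DENY = "Deny"


def evaluate(anp_action: Optional[str],
             np_verdict: Optional[Verdict],
             banp_action: Optional[str]) -> Verdict:
    """Return the final verdict for one connection.

    anp_action:  "Allow", "Deny", "Pass", or None when no ANP rule matches.
    np_verdict:  outcome of the NetworkPolicy layer, or None when no
                 NetworkPolicy applies to the traffic.
    banp_action: "Allow", "Deny", or None when no BANP rule matches.
    """
    # Tier 1: an ANP Allow or Deny is final, so lower tiers are skipped.
    if anp_action in ("Allow", "Deny"):
        return Verdict(anp_action)
    # Tier 2: reached on an ANP Pass or when no ANP matched.
    if np_verdict is not None:
        return np_verdict
    # Tier 3: the BANP is consulted only when no NetworkPolicy applied.
    if banp_action is not None:
        return Verdict(banp_action)
    # Assumption: with no matching policy at all, traffic is allowed.
    return Verdict.ALLOW
```

For example, `evaluate("Pass", None, "Deny")` falls through to the BANP and is denied, while `evaluate("Deny", Verdict.ALLOW, "Allow")` is denied at the ANP tier regardless of what the lower tiers say.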

AdminNetworkPolicy API

An AdminNetworkPolicy resource allows users to specify:

- A subject which consists of a set of pods, selected by the policy.

- A list of ingress rules to be applied for all ingress traffic towards the subject. Each ingress rule contains:

  - A list of from peer pods which allows us to match on the source of the ingress traffic at L3.

  - A list of ports on the subject pods to match on the destination ports of the ingress traffic at L4.

  - An action to perform on the matched ingress traffic: it can be allow, deny, or pass.

- A list of egress rules to be applied for all egress traffic from the subject. Each egress rule contains:

  - A list of to peer pods which allows us to match on the destination of the egress traffic at L3.

  - A list of ports on the to peer pods to match on the destination ports of the egress traffic at L4.

  - An action to perform on the matched egress traffic: it can be allow, deny, or pass.

- A priority value that decides the precedence of this AdminNetworkPolicy versus the other AdminNetworkPolicies in the cluster: the lower the value, the higher the precedence.

```yaml
apiVersion: policy.networking.k8s.io/v1alpha1
kind: AdminNetworkPolicy
metadata:
  name: allow-monitoring
spec:
  priority: 9
  subject:
    namespaces: {}
  ingress:
  - name: "allow-ingress-from-monitoring"
    action: "Allow"
    from:
    - namespaces:
        namespaceSelector:
          matchLabels:
            kubernetes.io/metadata.name: monitoring
```

The above AdminNetworkPolicy, defined at priority 9, ensures that all ingress traffic from the monitoring namespace towards any tenant (all namespaces) in the cluster is allowed. This is an example of a strong ALLOW that none of the parties involved can override: tenants cannot use NetworkPolicies to block themselves from being monitored, and the monitoring tenant has no say in what it can or cannot monitor.

```yaml
apiVersion: policy.networking.k8s.io/v1alpha1
kind: AdminNetworkPolicy
metadata:
  name: block-monitoring
spec:
  priority: 5
  subject:
    namespaces:
      matchLabels:
        security: restricted
  ingress:
  - name: "deny-ingress-from-monitoring"
    action: "Deny"
    from:
    - namespaces:
        namespaceSelector:
          matchLabels:
            kubernetes.io/metadata.name: monitoring
```

The above AdminNetworkPolicy, defined at priority 5, ensures that all ingress traffic from the monitoring namespace towards restricted tenants (namespaces that have the label security: restricted) is blocked. This is an example of a strong DENY that none of the parties involved can override. The restricted tenant owners cannot choose to allow monitoring traffic even if they want to, and the infrastructure’s monitoring service cannot scrape anything from these sensitive namespaces even if it wants to. Since the block-monitoring ANP has a lower priority value than the allow-monitoring ANP, block-monitoring takes higher precedence, thereby ensuring restricted tenants are never monitored.

```yaml
apiVersion: policy.networking.k8s.io/v1alpha1
kind: AdminNetworkPolicy
metadata:
  name: pass-monitoring
spec:
  priority: 7
  subject:
    namespaces:
      matchLabels:
        security: internal
  ingress:
  - name: "pass-ingress-from-monitoring"
    action: "Pass"
    from:
    - namespaces:
        namespaceSelector:
          matchLabels:
            kubernetes.io/metadata.name: monitoring
```

The above AdminNetworkPolicy, defined at priority 7, ensures that all ingress traffic from the monitoring namespace towards internal infrastructure tenants (namespaces that have the label security: internal) is passed on to be evaluated by the namespaces’ NetworkPolicies. This is an example of a strong PASS action, through which AdminNetworkPolicies delegate the decision to NetworkPolicies defined by tenant owners. Since the pass-monitoring ANP has a lower priority value than the allow-monitoring ANP, pass-monitoring takes higher precedence. As a result, all tenant owners grouped at security level internal can use namespace-scoped NetworkPolicies to choose whether their metrics should be scraped by the infrastructure's monitoring service.
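To make the precedence between the three policies concrete, here is a small Python sketch that picks the ANP that decides monitoring traffic into a given namespace. It is a simplified model under stated assumptions: the `Anp` class and the `selects` and `winning_anp` helpers are hypothetical names, subjects are matched on exact labels only, and every policy is assumed to have a single ingress rule whose peer is the monitoring namespace, as in the three examples above.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Anp:
    name: str
    priority: int
    subject_labels: Dict[str, str]  # empty dict selects every namespace
    action: str


# The three example policies, reduced to the fields this sketch needs.
ANPS = [
    Anp("allow-monitoring", 9, {}, "Allow"),
    Anp("block-monitoring", 5, {"security": "restricted"}, "Deny"),
    Anp("pass-monitoring", 7, {"security": "internal"}, "Pass"),
]


def selects(anp: Anp, ns_labels: Dict[str, str]) -> bool:
    """True if every subject label is present on the namespace."""
    return all(ns_labels.get(k) == v for k, v in anp.subject_labels.items())


def winning_anp(anps: List[Anp], ns_labels: Dict[str, str]) -> Optional[Anp]:
    """Among the ANPs selecting the namespace, the lowest priority value wins."""
    candidates = [a for a in anps if selects(a, ns_labels)]
    return min(candidates, key=lambda a: a.priority, default=None)
```

With these definitions, a namespace labeled security: restricted is decided by block-monitoring, one labeled security: internal by pass-monitoring, and an unlabeled namespace by allow-monitoring.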

Sample output when listing AdminNetworkPolicies on OpenShift:

```
$ oc get anp
NAME               PRIORITY   AGE
allow-monitoring   9          9s
block-monitoring   5          9s
pass-monitoring    7          8s
```

NOTE: Creating two AdminNetworkPolicies at the same priority is not recommended, because which of them takes precedence is non-deterministic. Currently, only priority values between 0 and 99 are supported. See the developer docs for more information.
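A quick pre-flight check can catch both problems before policies are applied. The following Python sketch is a hypothetical helper, not part of any OpenShift tooling; it takes a mapping of policy name to priority (for example, collected by hand from your manifests) and reports out-of-range and duplicate values.

```python
from collections import Counter
from typing import Dict, List


def priority_problems(priorities: Dict[str, int]) -> List[str]:
    """Return human-readable problems; an empty list means none were found."""
    problems = []
    # Flag priorities outside the supported 0-99 range.
    for name, prio in sorted(priorities.items()):
        if not 0 <= prio <= 99:
            problems.append(f"{name}: priority {prio} is outside 0-99")
    # Flag priorities used by more than one policy.
    counts = Counter(priorities.values())
    for prio in sorted(p for p, n in counts.items() if n > 1):
        names = sorted(n for n, p in priorities.items() if p == prio)
        problems.append(f"priority {prio} is shared by {', '.join(names)}")
    return problems
```

Calling it with the three example policies (priorities 9, 5, and 7) returns an empty list.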

BaselineAdminNetworkPolicy API

A BaselineAdminNetworkPolicy resource allows users to specify:

- A subject which consists of a set of pods, selected by the policy.

- A list of ingress rules to be applied for all ingress traffic towards the subject. Each ingress rule contains:

  - A list of from peer pods which allows us to match on the source of the ingress traffic at L3.

  - A list of ports on the subject pods to match on the destination ports of the ingress traffic at L4.

  - An action to perform on the matched ingress traffic: it can be either allow or deny, but not pass.

- A list of egress rules to be applied for all egress traffic from the subject. Each egress rule contains:

  - A list of to peer pods which allows us to match on the destination of the egress traffic at L3.

  - A list of ports on the to peer pods to match on the destination ports of the egress traffic at L4.

  - An action to perform on the matched egress traffic: it can be either allow or deny, but not pass.

The BaselineAdminNetworkPolicy resource is a cluster singleton object and acts mostly as the default catch-all guardrail policy in case passed traffic does not match any NetworkPolicies in the cluster. You must use default as the name when creating a BaselineAdminNetworkPolicy object.

Sample YAML:

```yaml
apiVersion: policy.networking.k8s.io/v1alpha1
kind: BaselineAdminNetworkPolicy
metadata:
  name: default
spec:
  subject:
    namespaces:
      matchLabels:
        security: internal
  ingress:
  - name: "deny-ingress-from-monitoring"
    action: "Deny"
    from:
    - namespaces:
        namespaceSelector:
          matchLabels:
            kubernetes.io/metadata.name: monitoring
```

The above BaselineAdminNetworkPolicy singleton ensures that the admin has set up default deny guardrails for all ingress monitoring traffic coming into the tenants at internal security level. This acts as the catch-all policy for all traffic that is passed by the pass-monitoring admin network policy.

Let’s say we have two tenants foo and bar, both defined with the label security: internal. Tenant foo wants to gather monitoring data on their applications. The tenant owner can define a NetworkPolicy that looks like this:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-monitoring
  namespace: foo
spec:
  # An empty selector applies the policy to every pod in namespace foo.
  podSelector: {}
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: monitoring
```

If the traffic is destined for tenant foo, the pass-monitoring ANP matches and delegates the traffic to the NetworkPolicy layer. There, the above NetworkPolicy matches: it implicitly denies all other ingress to foo and explicitly allows only ingress from the monitoring namespace, thus satisfying the tenant’s requirements. Without this NetworkPolicy, the BANP would have taken effect and denied the monitoring traffic, as it does by default for internal tenants.

If the traffic is destined for tenant bar, the pass-monitoring ANP again matches and delegates the traffic to the NetworkPolicy layer. Since tenant bar has not created any NetworkPolicies, no match is found at that layer. The above BANP is then evaluated and explicitly denies monitoring traffic to tenant bar. This satisfies the admin's requirement that internal tenants are not monitored by default.
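The foo and bar outcomes can be traced with a short Python sketch of what happens after the ANP tier has passed the traffic. This is a simplified model, not the OVN-Kubernetes implementation: `np_layer` and `verdict_after_pass` are hypothetical names, and each NetworkPolicy is reduced to the set of source namespaces it allows.

```python
from typing import List, Optional, Set


def np_layer(allowed_sources: List[Set[str]], source_ns: str) -> Optional[str]:
    """Outcome of the NetworkPolicy layer for one ingress connection.

    allowed_sources holds one set of permitted source namespaces per
    NetworkPolicy in the destination namespace.
    """
    if not allowed_sources:
        return None  # no NetworkPolicy applies, so this layer has no opinion
    if any(source_ns in allowed for allowed in allowed_sources):
        return "Allow"
    return "Deny"  # implicit deny once any NetworkPolicy selects the pods


def verdict_after_pass(allowed_sources: List[Set[str]],
                       source_ns: str,
                       banp_action: str = "Deny") -> str:
    """Fall back to the BANP action only when no NetworkPolicy applied."""
    verdict = np_layer(allowed_sources, source_ns)
    return verdict if verdict is not None else banp_action


foo_policies = [{"monitoring"}]  # tenant foo created allow-monitoring
bar_policies = []                # tenant bar created no NetworkPolicies
```

Here `verdict_after_pass(foo_policies, "monitoring")` yields "Allow" from the NetworkPolicy layer, while `verdict_after_pass(bar_policies, "monitoring")` yields "Deny" from the BANP.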

Sample output when listing BaselineAdminNetworkPolicies on OpenShift:

```
$ oc get banp
NAME      AGE
default   5s
```

NOTE: To verify that an AdminNetworkPolicy was created correctly, check the status field of the resource, which shows the status reported by each node's OVN-Kubernetes zone controller.

Sample output:

```
Status:
  Conditions:
    Last Transition Time:  2023-10-02T10:11:46Z
    Message:               Setting up OVN DB plumbing was successful
    Reason:                SetupSucceeded
    Status:                True
    Type:                  Ready-In-Zone-ci-ln-47fd152-72292-zd569-master-2
    Last Transition Time:  2023-10-02T10:11:46Z
    Message:               Setting up OVN DB plumbing was successful
    Reason:                SetupSucceeded
    Status:                True
    Type:                  Ready-In-Zone-ci-ln-47fd152-72292-zd569-worker-b-d77tp
```
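On a cluster with many nodes, scanning these conditions by eye gets tedious. The Python sketch below lists the zones that have not reported readiness. It is a hypothetical helper that assumes you have already loaded the policy's status conditions as a list of dictionaries with lowercase `type` and `status` keys, as they appear in the JSON form of the object, and that every zone condition type starts with Ready-In-Zone- as in the sample output.

```python
from typing import Dict, List

ZONE_PREFIX = "Ready-In-Zone-"


def zones_not_ready(conditions: List[Dict[str, str]]) -> List[str]:
    """Return the zone names whose Ready-In-Zone condition is not "True"."""
    return [
        cond["type"][len(ZONE_PREFIX):]
        for cond in conditions
        if cond["type"].startswith(ZONE_PREFIX) and cond["status"] != "True"
    ]
```

An empty result means every zone that reported a condition has set the policy up successfully.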

Summary

In this post, we saw how a TechPreviewNoUpgrade OCP cluster running the OVN-Kubernetes CNI can use the AdminNetworkPolicy feature to secure its cluster network traffic. We also saw how AdminNetworkPolicies and the BaselineAdminNetworkPolicy can be used to achieve an extensive cluster-wide multi-tenancy solution and how they interact with existing NetworkPolicies.

In the future, these APIs will also support cluster-egress traffic controls, and once the API semantics stabilize and graduate to v1beta1, they will be generally available in the next minor release of OpenShift clusters that are running the OVN-Kubernetes CNI.

We invite all readers to get involved in the sig-network-policy-api meetings and bring us feedback on these APIs!
