Overview

Kubernetes can assist with AI/ML workloads by making code consistently reproducible, portable, and scalable across diverse environments.

The role of containers in AI/ML development

When building machine learning-enabled applications, the workflow is not linear, and the stages of research, development, and production are in perpetual motion as teams work to continuously integrate and continuously deliver (CI/CD). The process of building, testing, merging, and deploying new data, algorithms, and versions of an application creates a lot of moving pieces, which can be difficult to manage. That’s where containers come in.

Containers are a Linux technology that allows you to package and isolate applications along with all the libraries and dependencies they need to run. Containers don’t require an entire operating system, only the exact components they need to operate, which makes them lightweight and portable. This makes deployment easier for operations teams and gives developers confidence that their applications will run the same way on different platforms or operating systems.

Another benefit of containers is that they help reduce conflicts between your development and operations teams by separating areas of responsibility. And when developers can focus on their apps and operations teams can focus on the infrastructure, integrating new code into an application as it grows and evolves throughout its lifecycle becomes more seamless and efficient.

What Kubernetes brings to AI/ML workloads

Kubernetes is an open source platform that automates Linux container operations by eliminating many of the manual processes involved in deploying and scaling containerized applications. Kubernetes is key to streamlining the machine learning lifecycle because it gives data scientists the agility, flexibility, portability, and scalability to train, test, and deploy ML models.

Scalability: Kubernetes allows users to scale ML workloads up or down, depending on demand. This ensures that machine learning pipelines can accommodate large-scale processing and training without interfering with other elements of the project.
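One common way this scaling is automated is the Horizontal Pod Autoscaler, which the Kubernetes documentation describes as deriving a desired replica count from the ratio of an observed metric to its target. The sketch below reproduces that ratio rule in plain Python for illustration only. The function name and the sample numbers are assumptions, and the real controller handles further cases (missing metrics, stabilization windows) that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Replica count suggested by the ratio rule (illustrative helper)."""
    ratio = current_metric / target_metric
    # When the observed value is close enough to the target,
    # leave the replica count unchanged to avoid needless churn.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Otherwise scale proportionally and round up.
    return math.ceil(current_replicas * ratio)


# Hypothetical case: four inference pods averaging 90% CPU
# against a 60% target.
print(desired_replicas(4, 90.0, 60.0))  # prints 6
```

The same rule scales a workload down when the observed metric falls well below the target, which is how capacity is released once a training or inference burst ends.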

Efficiency: Kubernetes optimizes resource allocation by scheduling workloads onto nodes based on their availability and capacity. Because computing resources are allocated deliberately rather than left idle, users can expect lower costs and better performance.
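To make the idea of capacity-aware scheduling concrete, here is a deliberately simplified sketch of a filter-then-score placement decision. It is not the Kubernetes scheduler: the `Node` and `PodRequest` classes, the node names, and the scoring rule (prefer the node with the most CPU left over) are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu: float         # cores not yet requested by other pods
    free_memory_gib: float  # memory not yet requested by other pods
    free_gpus: int


@dataclass
class PodRequest:
    cpu: float
    memory_gib: float
    gpus: int = 0


def fits(node: Node, pod: PodRequest) -> bool:
    """Filter step: the node must satisfy every resource request."""
    return (node.free_cpu >= pod.cpu
            and node.free_memory_gib >= pod.memory_gib
            and node.free_gpus >= pod.gpus)


def pick_node(nodes: List[Node], pod: PodRequest) -> Optional[Node]:
    """Score step: among nodes that fit, prefer the most CPU headroom."""
    candidates = [node for node in nodes if fits(node, pod)]
    if not candidates:
        return None  # the pod would stay pending until capacity appears
    return max(candidates, key=lambda node: node.free_cpu - pod.cpu)


nodes = [
    Node("cpu-a", free_cpu=8, free_memory_gib=32, free_gpus=0),
    Node("gpu-a", free_cpu=4, free_memory_gib=64, free_gpus=2),
    Node("gpu-b", free_cpu=16, free_memory_gib=64, free_gpus=1),
]
training_job = PodRequest(cpu=2, memory_gib=16, gpus=1)
print(pick_node(nodes, training_job).name)  # prints gpu-b
```

A GPU training job in this sketch never lands on the CPU-only node, and a CPU-only job would not be excluded from GPU nodes unless further rules were added, which is one reason real clusters layer extra placement policies on top of raw capacity checks.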

Portability: Kubernetes provides a standardized, platform-agnostic environment that allows data scientists to develop one ML model and deploy it across multiple environments and cloud platforms. This means not having to worry about compatibility issues and vendor lock-in.

Fault tolerance: With built-in fault tolerance and self-healing capabilities, users can trust Kubernetes to keep ML pipelines running even in the event of a hardware or software failure.
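Self-healing of this kind is usually explained as a reconciliation loop: a controller compares the desired state with what is actually running and corrects the difference. The sketch below shows one pass of such a loop in plain Python. It is a toy model rather than Kubernetes controller code, and the `reconcile` function and the `trainer-N` pod names are hypothetical.

```python
from typing import Dict


def reconcile(desired: int, pods: Dict[str, str]) -> Dict[str, str]:
    """One reconciliation pass over a mapping of pod name to phase.

    Pods that are not "Running" are dropped, and fresh pods are
    created until the desired replica count is met again.
    """
    healthy = {name: phase for name, phase in pods.items()
               if phase == "Running"}
    next_id = 0
    while len(healthy) < desired:
        name = f"trainer-{next_id}"
        # Never reuse the name of a pod that was already observed,
        # so a replacement is always distinguishable from a failed pod.
        if name not in pods:
            healthy[name] = "Running"
        next_id += 1
    return healthy


observed = {
    "trainer-0": "Running",
    "trainer-1": "Failed",   # for example, the node lost power
    "trainer-2": "Running",
}
print(sorted(reconcile(3, observed)))
# prints ['trainer-0', 'trainer-2', 'trainer-3']
```

Because the loop only looks at the gap between desired and observed state, it does not need to know why a pod failed in order to recover from the failure.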

Deploying ML models on Kubernetes

The machine learning lifecycle is made up of many different elements that, if managed separately, would be time consuming and resource intensive to operate and maintain. With a Kubernetes architecture, organizations can automate portions of the ML lifecycle, removing the need for manual intervention and creating more efficiency.

Toolkits such as Kubeflow can help developers streamline and serve trained ML workloads on Kubernetes. Kubeflow solves many of the challenges involved in orchestrating machine learning pipelines by providing a set of tools and APIs that simplify the process of training and deploying ML models at scale. Kubeflow also helps standardize and organize machine learning operations (MLOps).

How Red Hat can help

Kubernetes can help you streamline AI/ML workloads, but you still need a platform to experiment, serve models, and deliver your applications.

Red Hat® AI is our portfolio of AI products built on solutions our customers already trust. This foundation helps our products remain reliable, flexible, and scalable.

Red Hat AI can help organizations:

- Adopt and innovate with AI quickly.

- Break down the complexities of delivering AI solutions.

- Deploy anywhere.

Stay flexible while you scale

Red Hat AI includes Red Hat OpenShift AI: an integrated MLOps platform that can manage the lifecycle of both predictive and generative AI models.

This AI platform provides a space to build, train, deploy, and monitor AI/ML workloads in on-premises datacenters or closer to where data is located. This makes it easier to scale operations to the cloud or to the edge when needed.

Layering Kubernetes with Red Hat AI will allow your team to stay nimble when delivering AI applications across hybrid cloud environments.

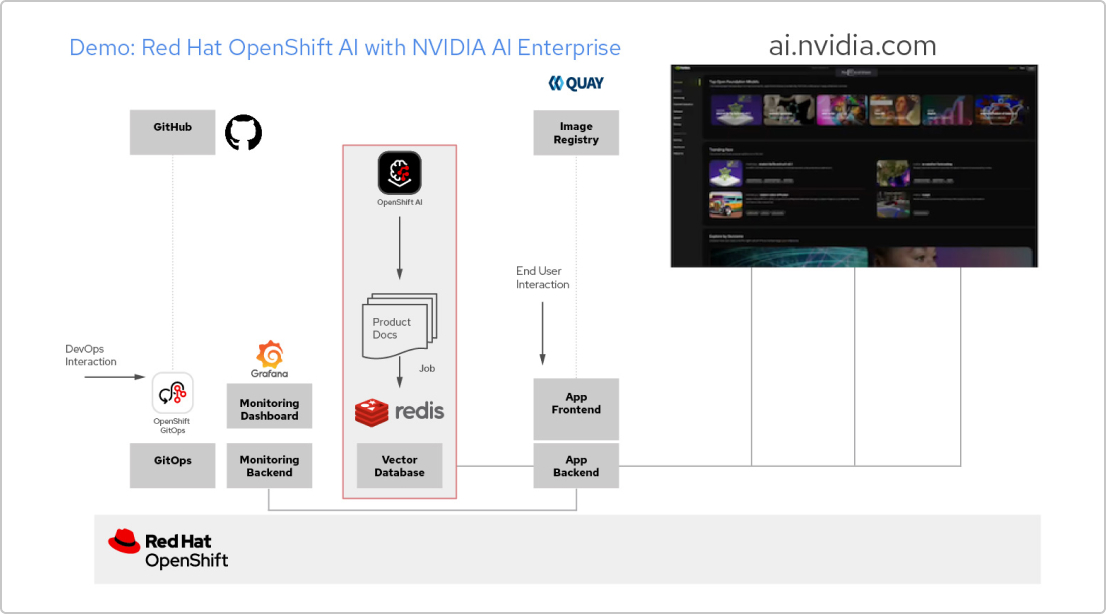

Solution pattern: AI apps with Red Hat & NVIDIA AI Enterprise

Create a RAG application

Red Hat OpenShift AI is a platform for building data science projects and serving AI-enabled applications. You can integrate all the tools you need to support retrieval-augmented generation (RAG), a method for getting AI answers from your own reference documents. When you connect OpenShift AI with NVIDIA AI Enterprise, you can experiment with large language models (LLMs) to find the optimal model for your application.

Build a pipeline for documents

To make use of RAG, you first need to ingest your documents into a vector database. In our example app, we embed a set of product documents in a Redis database. Since these documents change frequently, we can create a pipeline for this process that we’ll run periodically, so we always have the latest versions of the documents.
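The periodic ingestion step can be sketched as follows. This is a minimal illustration and not the example app's code: a plain dictionary stands in for the Redis database, `embed` is a toy hashing function rather than a real embedding model, and the document IDs and text are invented. The point it shows is that a checksum lets each run re-embed only the documents that changed.

```python
import hashlib
import math
from typing import Dict, List

DIM = 64  # toy vector size; real embedding models use far more dimensions


def embed(text: str) -> List[float]:
    """Toy embedding: hash each token into a bucket, then normalize."""
    vector = [0.0] * DIM
    for token in text.lower().split():
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        vector[int.from_bytes(digest[:4], "big") % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vector)) or 1.0
    return [v / norm for v in vector]


def ingest(documents: Dict[str, str], store: Dict[str, dict]) -> List[str]:
    """Embed new or changed documents; return the IDs that were updated."""
    updated = []
    for doc_id, text in documents.items():
        checksum = hashlib.sha256(text.encode("utf-8")).hexdigest()
        existing = store.get(doc_id)
        if existing is not None and existing["checksum"] == checksum:
            continue  # unchanged since the last run, skip re-embedding
        store[doc_id] = {
            "checksum": checksum,
            "text": text,
            "vector": embed(text),
        }
        updated.append(doc_id)
    return updated


store: Dict[str, dict] = {}  # stand-in for the vector database
docs = {
    "install-guide": "How to install the product",
    "warranty": "Warranty terms and returns",
}
print(ingest(docs, store))   # first run embeds both documents
docs["warranty"] = "Updated warranty terms and returns"
print(ingest(docs, store))   # a later run embeds only the changed one
```

Running `ingest` on a schedule keeps the store current without paying the embedding cost for documents that have not changed.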

Browse the LLM catalog

NVIDIA AI Enterprise gives you access to a catalog of different LLMs, so you can try different choices and select the model that delivers the best results. The models are hosted in the NVIDIA API catalog. Once you’ve set up an API token, you can deploy a model using the NVIDIA NIM model serving platform directly from OpenShift AI.

Choose the right model

As you test different LLMs, your users can rate each generated response. You can set up a Grafana monitoring dashboard to compare the ratings, as well as latency and response time for each model. Then you can use that data to choose the best LLM to use in production.
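The comparison itself is a small aggregation problem. The sketch below assumes each rated response is logged as a record with a model name, a user rating, and a latency in milliseconds. The record layout, the model names, and the latency budget are hypothetical, and in practice a dashboard would compute the same averages from collected metrics.

```python
from statistics import mean
from typing import Dict, List, Optional


def summarize(records: List[dict]) -> Dict[str, dict]:
    """Average rating and latency per model."""
    by_model: Dict[str, List[dict]] = {}
    for record in records:
        by_model.setdefault(record["model"], []).append(record)
    return {
        model: {
            "mean_rating": mean(r["rating"] for r in rows),
            "mean_latency_ms": mean(r["latency_ms"] for r in rows),
            "samples": len(rows),
        }
        for model, rows in by_model.items()
    }


def best_model(summary: Dict[str, dict],
               max_latency_ms: float) -> Optional[str]:
    """Highest-rated model whose average latency fits the budget."""
    eligible = {model: stats for model, stats in summary.items()
                if stats["mean_latency_ms"] <= max_latency_ms}
    if not eligible:
        return None
    return max(eligible, key=lambda model: eligible[model]["mean_rating"])


# Hypothetical feedback records collected while testing three models.
records = [
    {"model": "model-a", "rating": 4, "latency_ms": 800},
    {"model": "model-a", "rating": 5, "latency_ms": 1000},
    {"model": "model-b", "rating": 5, "latency_ms": 2400},
    {"model": "model-b", "rating": 5, "latency_ms": 2600},
    {"model": "model-c", "rating": 3, "latency_ms": 300},
    {"model": "model-c", "rating": 4, "latency_ms": 500},
]
summary = summarize(records)
print(best_model(summary, max_latency_ms=1500))  # prints model-a
```

Treating latency as a hard budget and rating as the ranking criterion is one possible design choice. A weighted score would work too, but a budget makes the trade-off easier to explain to the people who rated the responses.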
