Performance matters for security features such as SELinux. While the impact of SELinux on typical workloads has long been known to be small (see, for example, the SELinux benchmarks of Fedora 31 by Phoronix), certain specific operations are slower than they could be.

In addition, there are also memory and disk space usage issues, which can lead to unnecessarily large virtual machine images or minimum memory requirements.

In this post, I will present some of these gaps that I found and fixed upstream. Together, these improvements:

- reduced the time to load SELinux policy into the kernel on Fedora from ~1.3 seconds to only ~106 milliseconds (about 12x),

- reduced the time to rebuild SELinux policy from modules on Fedora from ~21.9 seconds to ~5.7 seconds (about 3.8x),

- reduced the size of the final SELinux policy binary blob from 7.6 MB to only 3.3 MB (about 2.3x),

- reduced the memory overhead of SELinux from ~30 MB to ~15 MB,

- reduced the time to create a file with SELinux enabled from ~55 microseconds to ~44 microseconds (about 1.25x).

These improvements were introduced gradually between versions 5.7 and 5.9 of the Linux kernel (the kernel bits) and in version 3.2 of the SELinux userspace tools (the user-space bits). The kernel improvements were first available in Fedora 32 and the user-space improvements in Fedora 34. All of them are planned to be available in RHEL 9 and later (inherited from Fedora), and a few of the kernel improvements have also been backported to RHEL 8.

The kernel benchmarks in this blog post have been performed on a cloud virtual machine running Fedora x86_64 on a 2 GHz Intel Xeon CPU with 2GB RAM. The user-space benchmarks have been run directly on a physical machine running Fedora x86_64 on a 1.9-4.8GHz Intel Core i7-8665U CPU with 32GB RAM. As usual, your mileage may vary.

Kernel-side performance improvements

Faster and more space-efficient filename transitions

Over time, Fedora/RHEL SELinux policy has managed to accumulate quite a few so-called named type transitions. Compared to regular type transition rules, these allow taking into account also the name of the object being created (in addition to the type of the process, type of the parent object, and the class of the object) when computing the SELinux type to use for a newly created object (usually a file or directory).

The number of these rules grew so high, in fact, that it caused a very noticeable increase in the kernel's memory usage and in the size of the final binary policy file (see BZ 1660142, where it was reported). I was concerned about this, so I tried to find a solution.

Most of the named transition rules that were added were rules for /dev nodes so they would get the correct label. While a lot of these rules are likely not necessary in practice, it would be difficult to identify which of them can be safely removed without causing a regression, so instead I tried to find a way to make their storage (both in the binary policy and in memory when loaded into the kernel) more efficient.

The problem with named type transitions is that their internal representation is not very efficient. When they were originally introduced, it was assumed that they would be used only on rare occasions, so the authors went for the simplest solution.

By analyzing the existing rules in the policy, I discovered that if I group all the named transition rules in the Fedora policy by (target type, target class, object name), I always get rules with one or more different source types, but only one result type.

So I could optimize for this case by switching from a mapping of (source type, target type, class, name) keys to result types to a mapping of (target type, class, name) keys to a list that associates each set of source types with the corresponding result type.
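The regrouping described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the kernel's actual data structures: the type names and rules are hypothetical, and a plain Python set stands in for whatever structure holds the set of source types.

```python
from collections import defaultdict

# Hypothetical rules: (source type, target type, class, name) -> result type.
flat_rules = {
    ("kernel_t", "device_t", "chr_file", "null"): "null_device_t",
    ("udev_t", "device_t", "chr_file", "null"): "null_device_t",
    ("init_t", "device_t", "chr_file", "null"): "null_device_t",
    ("udev_t", "device_t", "chr_file", "zero"): "zero_device_t",
}


def group_rules(flat):
    """Regroup flat rules as (ttype, tclass, name) -> {result: set of stypes}."""
    grouped = defaultdict(lambda: defaultdict(set))
    for (stype, ttype, tclass, name), result in flat.items():
        grouped[(ttype, tclass, name)][result].add(stype)
    return grouped


def lookup(grouped, stype, ttype, tclass, name):
    """Return the result type for a new object, or None if no rule matches."""
    for result, stypes in grouped.get((ttype, tclass, name), {}).items():
        if stype in stypes:
            return result
    return None


grouped = group_rules(flat_rules)
print(len(flat_rules), "flat entries ->", len(grouped), "grouped keys")
print(lookup(grouped, "udev_t", "device_t", "chr_file", "null"))
```

The object name, which is the largest part of each key, is now stored once per (target type, class, name) group instead of once per source type, which is where the space saving in this sketch comes from.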

The first step was to change the internal representation in the kernel (see Linux kernel commit c3a276111ea2 (“selinux: optimize storage of filename transitions”)), which already brought some nice improvements:

- The policy load time was reduced from ~1.3 seconds to ~0.95 seconds.

- The memory usage of the SELinux kernel subsystem dropped from ~30 MB to ~15 MB.

- The time to create a file (which includes a lookup in the named type transitions table) decreased from ~55 microseconds to ~40 microseconds.

The next step was to update the binary policy format to use this new representation. This required an update of both the kernel and user-space tools to be able to read and write this new modified format. This was implemented by commits 430059024389 (kernel), 42ae834a7428 (user space), and 8206b8cb0039 (user space). This step has brought further improvements (all numbers are for Fedora Rawhide at that time):

- The policy load time was reduced from ~157 ms to ~106 ms. (NB: These numbers are notably different from the previous step, because there have been other improvements in between; these are discussed later in this article.)

- The size of the Fedora binary policy blob was reduced from 7.6 MB to only 3.3 MB.

- As a consequence of the smaller policy blob, the size of the selinux-policy-targeted RPM package was reduced from 8.0 MB to 6.2 MB (here the difference is smaller due to compression and other files in the package).

More optimal sizing of internal hash tables

The SELinux kernel subsystem makes heavy use of hash tables in the internal policy representation. I noticed that in almost all cases the hash tables were created with a fixed hard-coded size, rather than using the number of elements to choose an appropriate size. In many cases, the hard-coded sizes were less than optimal, leading to poor performance of some operations (mainly the policy load).

So I put together a patch to fix this. It was fairly easy, since in almost all cases the number of elements was known before the entries were added and the entries were never removed. This patch was eventually merged as commit e0ac568de1fa.

This change brought a dramatic improvement in the policy load time, which dropped from ~790 ms to ~167 ms. It is likely that other operations were also made faster with this change, but the policy load speedup alone was worth it, so I didn’t even bother measuring anything else.

In many cases, the resulting hash table arrays became larger than before (when the Fedora policy was loaded on x86_64), so I also calculated this difference to make sure the memory usage is not badly affected. It turned out the arrays grew from ~58 KB to ~163 KB, which is a negligible increase compared to the overall memory usage of SELinux.
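To illustrate why sizing matters, here is a minimal Python sketch that compares chain lengths in a chained hash table with a hard-coded bucket count against one sized from the number of elements. The bucket count of 128, the element count, and the round-up-to-a-power-of-two policy are assumptions chosen for illustration, not values taken from the kernel patch.

```python
def chain_lengths(keys, num_buckets):
    """Count how many keys land in each bucket of a chained hash table."""
    buckets = [0] * num_buckets
    for key in keys:
        buckets[hash(key) % num_buckets] += 1
    return buckets


def round_up_pow2(n):
    """Smallest power of two >= n (assumed sizing policy, for illustration)."""
    size = 1
    while size < n:
        size *= 2
    return size


keys = range(10000)  # stand-in for the entries of a loaded policy

fixed = chain_lengths(keys, 128)                        # hard-coded size
sized = chain_lengths(keys, round_up_pow2(len(keys)))   # sized from element count

print("fixed size: longest chain =", max(fixed))
print("sized:      longest chain =", max(sized))
```

With too few buckets, every insertion and lookup has to walk a long chain, so the cost grows with the number of elements. Sizing the table up front keeps the chains short at the price of a larger bucket array, which is the trade-off measured above.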

Faster lookup of role transitions

After improving the lookup performance of named type transitions, there was one more thing that made computing SELinux context transitions slower than necessary. Since their introduction, role transitions have been stored in a simple linked list.

This ends up being a problem because linked lists have poor lookup performance whenever they hold more than just a few elements. The Fedora policy contains around 426 role transitions, so the lookup was very inefficient.

The obvious solution was to switch from a linked list to a hash table for storing the role transitions. This was relatively straightforward and ended up merged as commit e67b2ec9f617.

The change cut the time to compute an SELinux context transition roughly in half. I no longer have data on more high-level operations, but it should speed up things like creating a new file or executing a file.
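The difference between the two lookup strategies can be sketched as follows. The rule contents and the (role, type, class) key layout are assumptions made for this example, and a Python list and dict stand in for the kernel's linked list and hash table.

```python
# Hypothetical role transitions: (role, type, class) -> new role.
RULES = [
    ((f"role_{i}", f"type_{i}", "process"), f"new_role_{i}")
    for i in range(426)
]


def lookup_list(rules, key):
    """Linked-list style lookup: walk the entries until the key matches."""
    steps = 0
    for rule_key, new_role in rules:
        steps += 1
        if rule_key == key:
            return new_role, steps
    return None, steps


# Hash table style lookup: the key is hashed straight to its entry.
rules_table = dict(RULES)

key = ("role_425", "type_425", "process")
print(lookup_list(RULES, key))  # result plus the number of entries visited
print(rules_table.get(key))
```

In the list version, a lookup that misses or hits near the end has to visit every rule, while the table version does a roughly constant amount of work no matter how many rules the policy contains.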

Faster hash table operations thanks to inlining

Another performance improvement came from moving some parts of the SELinux hash table function definitions to the header file so that the compiler could inline them and generate more efficient code. Namely, this allowed several indirect calls to become direct calls. That makes a big difference, especially because the Spectre/Meltdown mitigations make indirect calls considerably more expensive.

This improvement has been merged in commits 24def7bb92c1 and 54b27f9287a7 and brought some further speedup for policy load, context transitions, and parsing SELinux contexts from their string representation.

Making SELinux policy updates faster

Another set of recent performance improvements focused on speeding up the policy rebuild operation in user space (basically what happens when you run semodule -B or semodule -i/-r).

Before these improvements, this operation took more than 20 seconds on Fedora x86_64, which is quite a noticeable delay. The policy rebuild happens every time the selinux-policy or another SELinux policy package (e.g. container-selinux) is updated/installed/removed, or when the user performs SELinux policy customizations (e.g. installs/removes a custom policy module or makes a persistent change to an SELinux boolean).

Removing dead code

When I started investigating why the policy rebuild is so slow, I soon noticed that the time was significantly reduced with the latest SELinux user space snapshot compared to the version that was in Fedora at that time.

To my surprise, it turned out that this speedup was caused by my patch, which had been merged just days before. That patch was just a trivial cleanup, removing some pointless code. The removed code was creating some hash tables that weren’t actually used later on and inserting into these tables is what was taking up about half (!) of the whole policy build time – the duration of semodule -NB (-N is to skip loading the policy into the kernel, which we don’t want to measure here) decreased from ~21.9 seconds to only ~9.4 seconds.

So that was a nice gain and I hadn’t even started yet :)

Profiling with Callgrind and KCachegrind

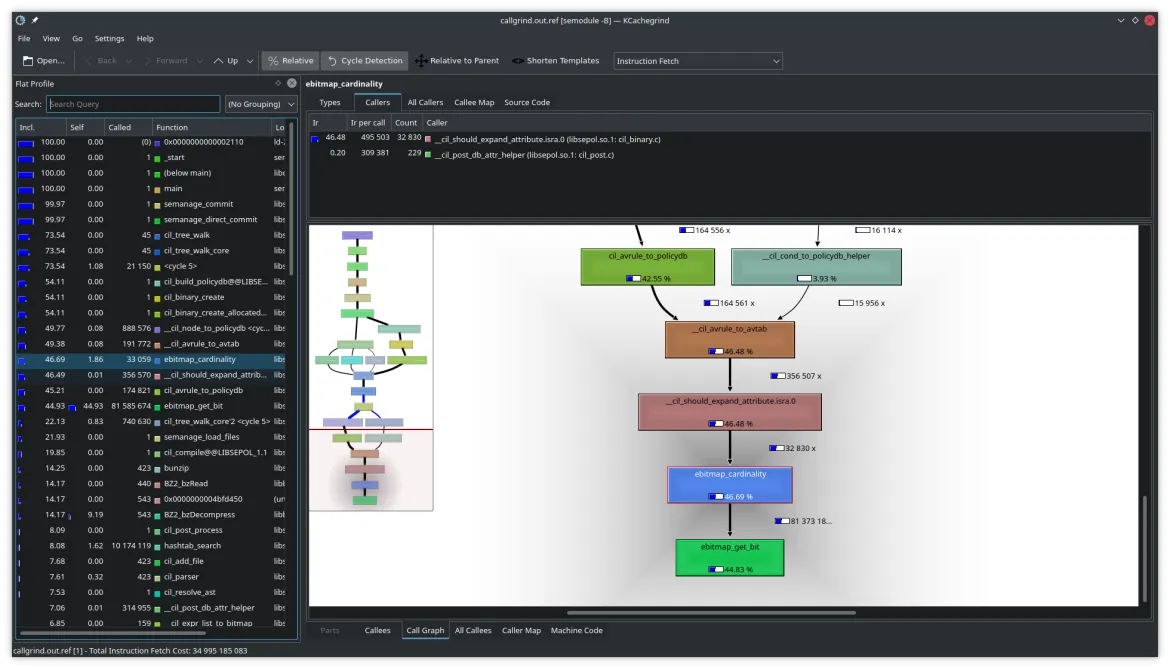

To analyze what’s taking up the most time when calling semodule -B, I used Callgrind, which is a very useful command-line tool from the well-known Valgrind collection that can be used to analyze what functions your C/C++ program is spending the most time in. KCachegrind is a graphical interface that is able to nicely visualize the data captured by Callgrind and Cachegrind (another performance debugging tool focused on CPU cache usage efficiency).

For example, to analyze semodule -NB, I would run:

    LD_BIND_NOW=1 valgrind --tool=callgrind semodule -NB

LD_BIND_NOW=1 is needed to instruct the dynamic library loader to load all libraries at startup because the default lazy loading would mess up the performance data.

This will create a callgrind.out. file that you can open with KCachegrind for analysis. Note that if you run the profiled program as root, you will need to use the chown command to switch the owner and group of the output file so you can read it as a regular user.

First offender - the bitmap cardinality function

After generating an initial profiling sample, I quickly noticed that one function was responsible for almost half of the computation time (roughly estimated by instruction load count), even though it wasn’t called very often.

ebitmap_cardinality() is a function from the libsepol library whose task is to count the number of set bits in a bitmap. A closer look at the definition of this function revealed that it is implemented in a very simple albeit inefficient way.

Since it is quite non-trivial to implement counting bits efficiently in a portable way, I first tried to just add simple caching of the cardinality value, so that it is computed just once for each bitmap. This turned out to be sufficient to reduce the overall overhead of ebitmap_cardinality() to a negligible level, so I contributed this improvement upstream.

Later, it was found that perfect cross-compiler portability is not that important for this library, so the caching mechanism was replaced by Nicolas Iooss with a more optimal implementation of bit counting, which maintained good performance while keeping the code simple.

This optimization brought the time to do semodule -NB from ~9.4 seconds down to ~8.0 seconds.
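The three approaches discussed in this section (bit-by-bit counting, caching the result, and counting a whole word at a time) can be compared in a small Python sketch. The Bitmap class below is a toy written for this example and does not mirror the libsepol ebitmap layout; bin(word).count("1") stands in for a word-at-a-time population count.

```python
class Bitmap:
    """Toy bitmap stored as a list of 64-bit words (not the libsepol layout)."""

    def __init__(self, nbits):
        self.nbits = nbits
        self.words = [0] * ((nbits + 63) // 64)
        self._cardinality = None  # cached count; None means "unknown"

    def set_bit(self, pos):
        self.words[pos // 64] |= 1 << (pos % 64)
        self._cardinality = None  # any modification invalidates the cache

    def get_bit(self, pos):
        return (self.words[pos // 64] >> (pos % 64)) & 1

    def cardinality_naive(self):
        """Test every bit position one by one."""
        return sum(self.get_bit(pos) for pos in range(self.nbits))

    def cardinality_cached(self):
        """Compute once with the naive loop, then reuse until the bitmap changes."""
        if self._cardinality is None:
            self._cardinality = self.cardinality_naive()
        return self._cardinality

    def cardinality_popcount(self):
        """Count the set bits a whole word at a time."""
        return sum(bin(word).count("1") for word in self.words)


bitmap = Bitmap(1000)
for pos in (3, 64, 999):
    bitmap.set_bit(pos)

print(bitmap.cardinality_naive(),
      bitmap.cardinality_cached(),
      bitmap.cardinality_popcount())
```

Caching only helps when the same bitmap is queried repeatedly between modifications, whereas the word-at-a-time count makes every call cheap, which is one reason it can replace the cache entirely.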

Automatically growing hash tables

Another low-hanging fruit that the profiling uncovered was the suboptimal performance of hash tables. Same as the kernel implementation, the user-space generic hash table implementation was working with fixed and often hard-coded sizes, which led to poor insertion and lookup performance if the actual element count was higher.

Since in the user space the hash table also implements the removal of elements and the size is not always known at the time of creation, I decided to implement a simple automatic resizing whenever the number of elements became too high for the current table size. This required changing only the common hash table code and thus it was a very small patch.

This small tweak decreased the duration of semodule -NB from ~8.0 seconds further down to ~6.3 seconds.
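A minimal sketch of such automatic resizing, assuming a chained hash table that doubles its bucket array once the average chain length exceeds one. The class name, the initial size, and the growth threshold are all choices made for this example rather than details of the actual patch.

```python
class GrowingHashTable:
    """Chained hash table that doubles its bucket array when it gets crowded."""

    MAX_LOAD = 1.0  # assumed threshold: elements per bucket before growing

    def __init__(self, num_buckets=4):
        self.buckets = [[] for _ in range(num_buckets)]
        self.count = 0

    def _bucket(self, key):
        return self.buckets[hash(key) % len(self.buckets)]

    def insert(self, key, value):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                bucket[i] = (key, value)  # overwrite an existing key
                return
        bucket.append((key, value))
        self.count += 1
        if self.count > self.MAX_LOAD * len(self.buckets):
            self._grow()

    def _grow(self):
        """Double the bucket array and rehash every stored entry."""
        old = self.buckets
        self.buckets = [[] for _ in range(2 * len(old))]
        for bucket in old:
            for key, value in bucket:
                self._bucket(key).append((key, value))

    def get(self, key, default=None):
        for k, v in self._bucket(key):
            if k == key:
                return v
        return default

    def remove(self, key):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                del bucket[i]
                self.count -= 1
                return True
        return False


table = GrowingHashTable()
for i in range(1000):
    table.insert(i, str(i))
print(len(table.buckets), table.get(999))
```

Because the resizing lives entirely inside insert(), callers that create the table with a small or guessed size do not need to change, which matches the observation above that only the common hash table code had to be touched.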

Speedup of policy rules optimization

The final potential performance improvement identified by profiling was in the “binary policy optimization” functionality, which is an optional step in the policy compilation that removes redundant rules from the final binary policy (the goal of this is mainly to reduce its on-disk size).

The optimization here was to use a more efficient implementation for a “set of numbers” data structure, which is used to store some auxiliary data. The change is described in a bit more detail in the commit message.

This optimization further reduced the duration of semodule -NB from ~6.3 seconds down to ~5.7 seconds.

Epilogue

These performance improvements demonstrate that it is worth spending some time to investigate performance problems, as there may often be some “low-hanging fruit” allowing one to make significant improvements without too much effort (especially in the case of projects containing legacy code).

Performance analysis tools (such as Callgrind + KCachegrind or perf + FlameGraph) can be of great help to identify bottlenecks.

About the author

Ondrej Mosnacek is a Software Engineer in the SELinux team at Red Hat. Mosnacek is an active contributor to the SELinux subsystem of the Linux kernel and helps co-maintain other SELinux components as well. In the past, he also briefly worked on the Audit and Crypto kernel subsystems.
