I recently had the pleasure of linking up with one of my favorite Red Hat colleagues (David “Pinky” Pinkerton) from Australia while we were both in Southeast Asia for a Red Hat event. We both have an affinity for KVM, and Red Hat Virtualization (RHV) in particular, and he brought up a fantastic topic - truly segregated networks to support stricter security requirements. It came up because he had a “high security” client that needed to keep different traffic types separated within RHV, as the VMs were used to scan live malware. And that is why I made the comment about the (justifiably) paranoid.

Let’s take a look.

To be completely transparent, while I did cover segregating 10GbE traffic for RHV and KVM in prior posts on my other blog, I have to give full credit to Pinky here. Most of the details in this post are his; I really just helped organize the thoughts. Big thanks and kudos to Mr. Pinkerton.

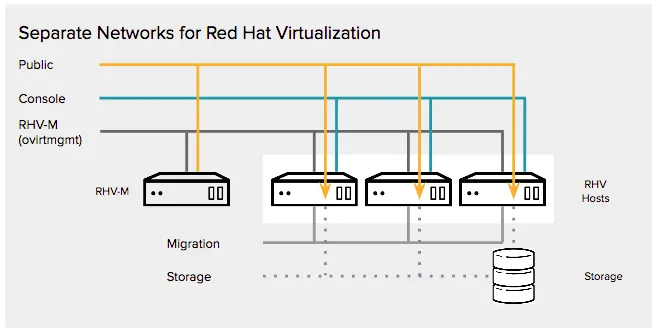

Example Networks

As a bit of a level set, allow me to define the networks that we’re separating:

- Management - this is VDSM traffic between the RHV-M and the RHV hosts. By default, it shows up as “ovirtmgmt” and is created automatically at deployment time. It is the only network that is created by default; all other networks must be created.

- Console - this is traffic to the consoles of virtual machines (SPICE or VNC). To access virtual machine consoles, an IP address must be bound to the hypervisor.

- Public - this is traffic that accesses a virtual machine via its network interface (e.g. SSH traffic for a Linux server, HTTP for a web server).

- Storage - (Jumbo Frames) this is private traffic for Ethernet storage and is further broken down into:

  - NFS - used for VM and ISO images

  - iSCSI - used for VM images

- Migration - (Jumbo Frames) this network is used to migrate virtual machines between hypervisors.

- Fencing - this is used by the hypervisors to send fencing commands to fence (reboot or power-off) other hosts when instructed by RHV-M. (RHV-M does not fence hosts itself; it sends fence requests to a host to execute the command on its behalf.)

All of these networks are carried on VLANs. Note that both the storage and migration networks are also configured with Jumbo Frames.
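To make the list above a little more concrete, here is a minimal sketch of the same plan written down as data. The network names follow the post, but the VLAN IDs are placeholders I made up for illustration, and the NFS network is shown at the default MTU because in this example it only serves ISO images.

```python
from collections import Counter
from dataclasses import dataclass

JUMBO_MTU = 9000
DEFAULT_MTU = 1500


@dataclass(frozen=True)
class LogicalNetwork:
    name: str
    vlan_id: int  # hypothetical VLAN tag, not taken from the original design
    mtu: int = DEFAULT_MTU


# VLAN IDs below are illustrative placeholders only.
NETWORKS = [
    LogicalNetwork("ovirtmgmt", vlan_id=10),
    LogicalNetwork("console", vlan_id=20),
    LogicalNetwork("public", vlan_id=30),
    LogicalNetwork("nfs", vlan_id=40),  # ISO images only in this example
    LogicalNetwork("iscsi", vlan_id=50, mtu=JUMBO_MTU),
    LogicalNetwork("migrate", vlan_id=60, mtu=JUMBO_MTU),
    LogicalNetwork("fencing", vlan_id=70),
]


def jumbo_networks(networks):
    """Return the names of networks configured for Jumbo Frames."""
    return [net.name for net in networks if net.mtu == JUMBO_MTU]


def duplicate_vlans(networks):
    """Return VLAN IDs that are used by more than one logical network."""
    counts = Counter(net.vlan_id for net in networks)
    return sorted(vlan for vlan, n in counts.items() if n > 1)


if __name__ == "__main__":
    print("Jumbo Frames on:", jumbo_networks(NETWORKS))
    print("Duplicate VLAN IDs:", duplicate_vlans(NETWORKS))
```

Writing the plan down this way makes it easy to catch a VLAN ID that was accidentally reused before anything is created in RHV-M.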

RHV Manager

In this scenario, RHV-M has two Ethernet interfaces. The first is connected to the public VLAN for admin/user access. The second interface is connected to the default management VLAN. This has the added effect of isolating all VDSM traffic from users, in this case both virtually and physically: virtually from the VLAN and logical network standpoint, and physically because the public and management networks are assigned to different interface bonds. I will add that in most environments, if 10GbE is available, a single pair of bonded 10GbE interfaces carrying most of the traffic is the preferred route.

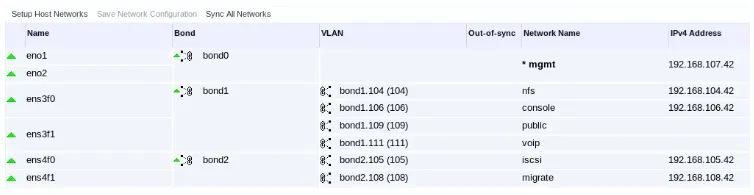

Hosts

Whether you have dual 10GbE interfaces (preferred) or multiple 1GbE interfaces, they should be bonded with LACP. In the example here, there are multiple 1GbE pairs bonded as follows:

- Bond 0 carries management traffic on the native (untagged) VLAN so the host can be PXE booted.

- Bond 1 carries NFS, console, and public traffic (in this case, NFS is only for ISO images; otherwise it would use Jumbo Frames).

- Bond 2 carries iSCSI and Migration traffic (MTU is set to 9000).
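The bond layout above can be sanity-checked with a few lines of Python. This is only a sketch: the bond and network names mirror the list above, the fencing network is left out because the post does not say which bond carries it, and the helper names are my own. The idea is that networks sharing a bond should agree on MTU, so Jumbo Frames traffic stays together on its own bond.

```python
# Which logical networks ride on which bond, as described in the host layout.
BOND_LAYOUT = {
    "bond0": ["ovirtmgmt"],
    "bond1": ["nfs", "console", "public"],
    "bond2": ["iscsi", "migrate"],
}

# MTU per logical network; NFS is at 1500 because it only serves ISO images here.
NETWORK_MTU = {
    "ovirtmgmt": 1500,
    "nfs": 1500,
    "console": 1500,
    "public": 1500,
    "iscsi": 9000,
    "migrate": 9000,
}


def required_bond_mtu(bond, layout, mtus):
    """A bond must support the largest MTU of any network it carries."""
    return max(mtus[name] for name in layout[bond])


def mixed_mtu_bonds(layout, mtus):
    """Return bonds whose networks do not all share the same MTU."""
    return sorted(
        bond
        for bond, names in layout.items()
        if len({mtus[name] for name in names}) > 1
    )


if __name__ == "__main__":
    for bond in BOND_LAYOUT:
        print(bond, "needs MTU", required_bond_mtu(bond, BOND_LAYOUT, NETWORK_MTU))
    print("Bonds mixing MTU sizes:", mixed_mtu_bonds(BOND_LAYOUT, NETWORK_MTU))
```

If NFS were also used for VM images, moving it to the Jumbo Frames bond would keep this check clean.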

(Non-)Configuration of Note

As pointed out above under RHV Manager, all VDSM traffic has been isolated from users. This helps prevent VDSM from being used as an attack point. However, take a second look at the diagram above, specifically at the “Public” network and the “arrow ends” (yellow points), then look at the screen capture below, specifically at the “public” IPv4 Address. Or rather, the lack of a defined IP address. This is not an incomplete configuration; it is a deliberate approach that helps to prevent access. The “arrows” in the diagram above signify that while there is in fact a Linux Bridge configured, there is no IP address assigned to it. This allows traffic to pass through as required, but there isn’t any fixed address on the host to log into, scan, ping, etc. This provides an additional layer of separation between the “public” and the “private”.

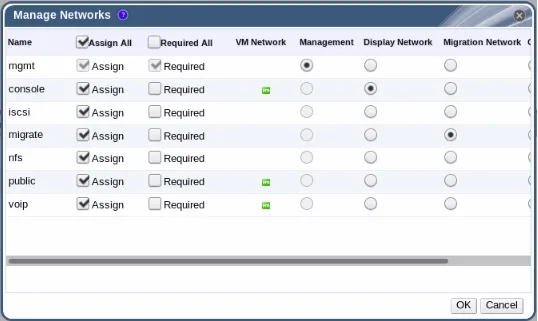
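If you want to confirm on a host that the “public” bridge really has no IPv4 address, one option is to inspect the JSON that `ip -j addr show` prints. The sketch below assumes that JSON layout (a list of interfaces, each with an `ifname` and an `addr_info` list) and uses made-up sample data with documentation-range addresses instead of calling the command, so treat it as an illustration and not a finished audit tool.

```python
import json

# Hypothetical sample shaped like the output of `ip -j addr show`.
SAMPLE = """
[
  {"ifname": "ovirtmgmt",
   "addr_info": [{"family": "inet", "local": "192.0.2.10", "prefixlen": 24}]},
  {"ifname": "console",
   "addr_info": [{"family": "inet", "local": "198.51.100.10", "prefixlen": 24}]},
  {"ifname": "public", "addr_info": []}
]
"""


def ipv4_addresses(ip_json, ifname):
    """Return the IPv4 addresses configured on one interface."""
    for iface in json.loads(ip_json):
        if iface.get("ifname") == ifname:
            return [
                addr["local"]
                for addr in iface.get("addr_info", [])
                if addr.get("family") == "inet"
            ]
    raise KeyError(ifname)


def interfaces_without_ipv4(ip_json):
    """Return the names of interfaces that carry no IPv4 address at all."""
    return [
        iface["ifname"]
        for iface in json.loads(ip_json)
        if not any(
            addr.get("family") == "inet" for addr in iface.get("addr_info", [])
        )
    ]


if __name__ == "__main__":
    print("public IPv4 addresses:", ipv4_addresses(SAMPLE, "public"))
    print("No IPv4 address on:", interfaces_without_ipv4(SAMPLE))
```

On a real host you would feed the function the output of the command itself; the check only looks at IPv4, which is the field highlighted in the screen capture.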

See how the VM networks are (and are not) assigned below. Displays are only available on the “console” logical network, and live migrations are restricted to the “migrate” logical network. Likewise, management traffic is also restricted. This too was thought out and methodical.
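The role assignments described here can also be expressed as a small check. This is a sketch under my own naming: the role-to-network mapping follows the text (displays on “console”, migrations on “migrate”, management on “ovirtmgmt”), and the helper functions are hypothetical, not part of RHV.

```python
# Which logical network carries each cluster role, per the description above.
ROLE_ASSIGNMENT = {
    "management": "ovirtmgmt",
    "display": "console",
    "migration": "migrate",
}

# Networks that guest traffic uses; these should carry no infrastructure role.
VM_NETWORKS = {"public"}


def roles_on_vm_networks(roles, vm_networks):
    """Return roles that were (mistakenly) placed on a VM-facing network."""
    return sorted(role for role, net in roles.items() if net in vm_networks)


def shared_role_networks(roles):
    """Return networks that carry more than one role."""
    seen = {}
    for role, net in roles.items():
        seen.setdefault(net, []).append(role)
    return sorted(net for net, assigned in seen.items() if len(assigned) > 1)


if __name__ == "__main__":
    print("Roles on VM networks:", roles_on_vm_networks(ROLE_ASSIGNMENT, VM_NETWORKS))
    print("Networks with several roles:", shared_role_networks(ROLE_ASSIGNMENT))
```

Both lists come back empty for this design, which is exactly the point: each role has its own network, and none of them touches the network the guests use.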

What All of This Buys You

This forethought and planning provide additional layers of security and isolation. As mentioned above, the customer that my colleague originally designed this for was doing live scans of malware within individual VMs. So having the additional layers of separation was not only useful, it was required. And yes, SELinux is in enforcing mode.

What It Doesn’t Cost You

Administrative overhead. You have to create logical networks anyway, so you might as well put a little thought into it. Furthermore, with the addition of the Ansible 2.3 integration with RHV 4.1, you can automate the configuration if you like. Better yet, you can automate the re-configuration, as requirements typically change over time.

Is It For You?

Even if you aren't quarantining live malware code, this type of network segregation makes good sense. Keeping storage traffic separate from migration traffic, and VM traffic separate from management traffic, means that those traffic types are not competing for the same resources. Settings such as MTU (Jumbo Frames) size can be adjusted on a per-VLAN basis without affecting other VLANs unnecessarily. So in a word, "yes", this is probably for you, even if you're not paranoid.
