The networking architecture of Red Hat OpenShift provides a robust and scalable front end for myriad containerized applications. Services supply simple load balancing based on pod labels, and routes expose those services to the external network. These concepts work very well for microservices, but they can prove challenging for applications running in virtual machines on OpenShift Virtualization, where existing server management infrastructure is already in place and assumes that full access to virtual machines is always available.

In a previous article, I demonstrated how to install and configure OpenShift Virtualization, and how to run a basic virtual machine. In this article, I discuss different options for configuring your OpenShift Virtualization cluster to allow virtual machines to access the external network in ways very similar to other popular hypervisors.

Connecting OpenShift to external networks

OpenShift can be configured to access external networks in addition to the internal pod network. You do this with the Kubernetes NMState Operator, which you can install from OperatorHub in the OpenShift console.
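If you prefer the command line to the OperatorHub console, the manifests below are a minimal sketch of an equivalent installation, modeled on the layout used in the OpenShift documentation. The channel (stable) and catalog source (redhat-operators) are assumptions to confirm against the operator's listing for your cluster version. After the operator is installed, you also create one NMState custom resource, which is what deploys the NMState handler on the nodes.

```yaml
# Namespace, OperatorGroup, and Subscription for the Kubernetes NMState Operator.
# The channel and catalog source values are assumptions; confirm them in OperatorHub.
apiVersion: v1
kind: Namespace
metadata:
  name: openshift-nmstate
---
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: openshift-nmstate
  namespace: openshift-nmstate
spec:
  targetNamespaces:
  - openshift-nmstate
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: kubernetes-nmstate-operator
  namespace: openshift-nmstate
spec:
  channel: stable                        # assumption: check the available channels
  installPlanApproval: Automatic
  name: kubernetes-nmstate-operator
  source: redhat-operators               # assumption: default Red Hat catalog
  sourceNamespace: openshift-marketplace
---
# Apply this last, after the operator is running and the nmstate.io CRDs exist.
apiVersion: nmstate.io/v1
kind: NMState
metadata:
  name: nmstate
```

Applying the NMState resource in the same pass as the Subscription can fail, because its CRD does not exist until the operator finishes installing; applying it separately avoids that race.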

The NMState operator works with Multus, a CNI meta-plugin for OpenShift that allows pods to attach to multiple networks. Because we already have at least one great article on Multus, I forgo a detailed explanation here and focus instead on how to use NMState and Multus to connect virtual machines to multiple networks.

Overview of NMState components

After you've installed the NMState operator, three CustomResourceDefinitions (CRDs) are added that you interact with when you configure network interfaces on the OpenShift nodes:

- NodeNetworkState (nodenetworkstates.nmstate.io): one NodeNetworkState (NNS) object exists for each cluster node, and its contents detail the current network state of that node.

- NodeNetworkConfigurationPolicy (nodenetworkconfigurationpolicies.nmstate.io): a policy that tells the NMState operator how to configure network interfaces on groups of nodes. In short, these represent the configuration changes to the OpenShift nodes.

- NodeNetworkConfigurationEnactment (nodenetworkconfigurationenactments.nmstate.io): stores the result of applying each NodeNetworkConfigurationPolicy (NNCP) in NodeNetworkConfigurationEnactment (NNCE) objects. There is one NNCE for each node, for each NNCP.

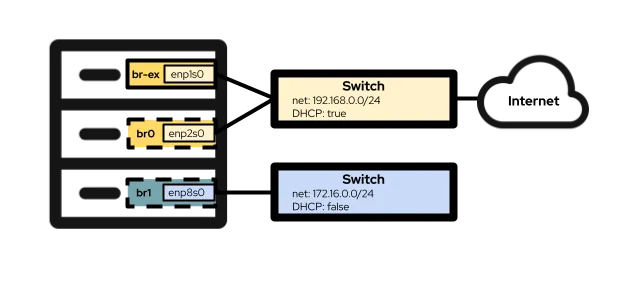

With those definitions out of the way, you can move on to configuring network interfaces on your OpenShift nodes. The lab I'm using for this article has three network interfaces. The first is enp1s0, which was configured during cluster installation as the port of the br-ex bridge. This is the bridge and interface I use in Option #1 below. The second interface, enp2s0, is on the same network as enp1s0, and I use it to configure the OVS bridge br0 in Option #2 below. Finally, the interface enp8s0 is connected to a separate network with no internet access and no DHCP server. I use this interface to configure the Linux bridge br1 in Option #3 below.

Option #1: Using an external network with a single NIC

If your OpenShift nodes have only a single NIC for networking, your only option for connecting virtual machines to the external network is to reuse the br-ex bridge that exists by default on all nodes in an OVN-Kubernetes cluster. That means this option may not be available to clusters that use the older OpenShift SDN network plugin.

Because it's not possible to completely reconfigure the br-ex bridge without disrupting the basic operation of the cluster, you add a localnet to that bridge instead. You can accomplish this with the following NodeNetworkConfigurationPolicy:

```
$ cat br-ex-nncp.yaml
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: br-ex-network
spec:
  nodeSelector:
    node-role.kubernetes.io/worker: ''
  desiredState:
    ovn:
      bridge-mappings:
      - localnet: br-ex-network
        bridge: br-ex
        state: present
```

For the most part, the example above is the same in every environment that adds a local network to the br-ex bridge. The only parts you would commonly change are the name of the NNCP (.metadata.name) and the name of the localnet (.spec.desiredState.ovn.bridge-mappings[].localnet). In this example, both are br-ex-network, but the names are arbitrary and need not match each other. Whatever value you use for the localnet is needed when you configure the NetworkAttachmentDefinition, so remember that value for later!

Apply the NNCP configuration to the cluster nodes:

```
$ oc apply -f br-ex-nncp.yaml
nodenetworkconfigurationpolicy.nmstate.io/br-ex-network
```

Check the progress of the NNCP and NNCE with these commands:

```
$ oc get nncp
NAME            STATUS        REASON
br-ex-network   Progressing   ConfigurationProgressing

$ oc get nnce
NAME                               STATUS        STATUS AGE   REASON
lt01ocp10.matt.lab.br-ex-network   Progressing   1s           ConfigurationProgressing
lt01ocp11.matt.lab.br-ex-network   Progressing   3s           ConfigurationProgressing
lt01ocp12.matt.lab.br-ex-network   Progressing   4s           ConfigurationProgressing
```

In this case, the single NNCP called br-ex-network has spawned an NNCE for each node. After some seconds, the process is complete:

```
$ oc get nncp
NAME            STATUS      REASON
br-ex-network   Available   SuccessfullyConfigured

$ oc get nnce
NAME                               STATUS      STATUS AGE   REASON
lt01ocp10.matt.lab.br-ex-network   Available   83s          SuccessfullyConfigured
lt01ocp11.matt.lab.br-ex-network   Available   108s         SuccessfullyConfigured
lt01ocp12.matt.lab.br-ex-network   Available   109s         SuccessfullyConfigured
```

You can now move on to the NetworkAttachmentDefinition, which defines how your virtual machines attach to the new network you've just created.

NetworkAttachmentDefinition configuration

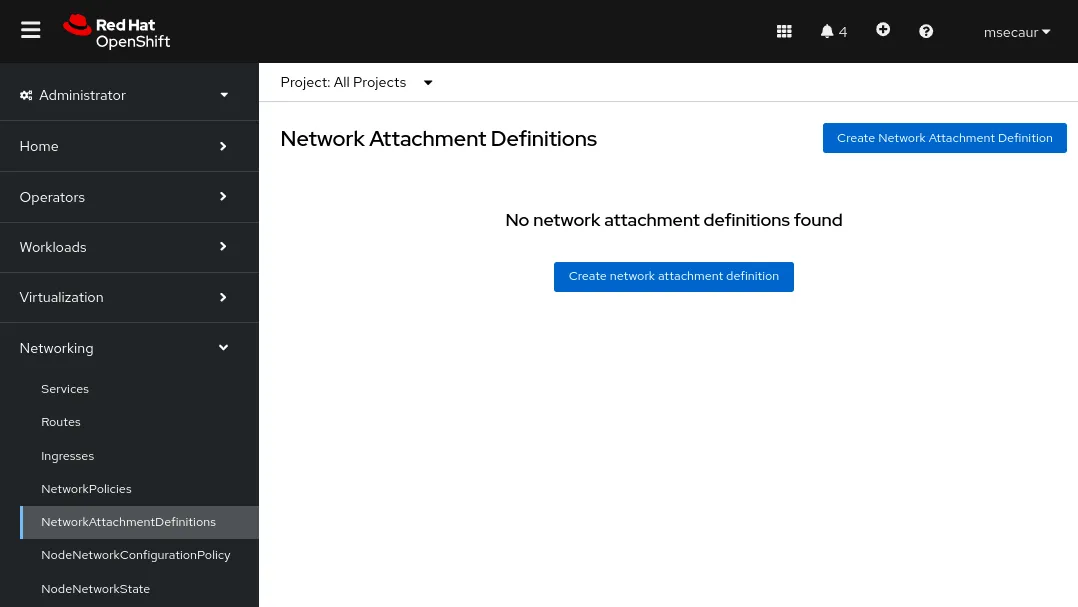

To create a NetworkAttachmentDefinition in the OpenShift console, select the project where you create virtual machines (vmtest in this example) and navigate to Networking > NetworkAttachmentDefinitions. Then click the blue button labeled Create network attachment definition.

The Console presents a form you can use to create the NetworkAttachmentDefinition:

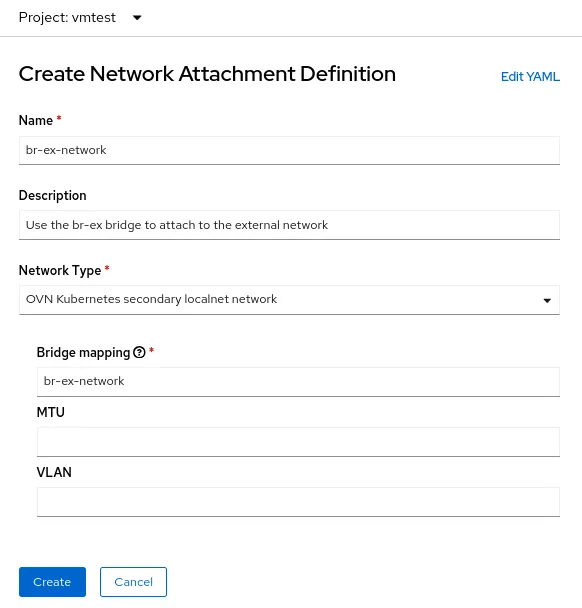

The Name field is arbitrary, but in this example I'm using the same name I used for the NNCP (br-ex-network). For Network Type, you must choose OVN Kubernetes secondary localnet network.

For the Bridge mapping field, enter the name of the localnet you configured earlier (which also happens to be br-ex-network in this example). Because the field is asking for a "bridge mapping", you may be tempted to input "br-ex", but in fact, you must use the localnet that you created, which is already connected to br-ex.

Alternatively, you can create the NetworkAttachmentDefinition using a YAML file instead of using the console:

```
$ cat br-ex-network-nad.yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: br-ex-network
  namespace: vmtest
spec:
  config: '{
    "name":"br-ex-network",
    "type":"ovn-k8s-cni-overlay",
    "cniVersion":"0.4.0",
    "topology":"localnet",
    "netAttachDefName":"vmtest/br-ex-network"
  }'

$ oc apply -f br-ex-network-nad.yaml
networkattachmentdefinition.k8s.cni.cncf.io/br-ex-network created
```

In the NetworkAttachmentDefinition YAML above, the name field in .spec.config is the name of the localnet from the NNCP, and netAttachDefName is the namespace/name pair that must match the .metadata.namespace and .metadata.name fields (in this case, vmtest/br-ex-network).

Virtual machines use static IPs or DHCP for IP addressing, so IP Address Management (IPAM) is not configured in the NetworkAttachmentDefinition.

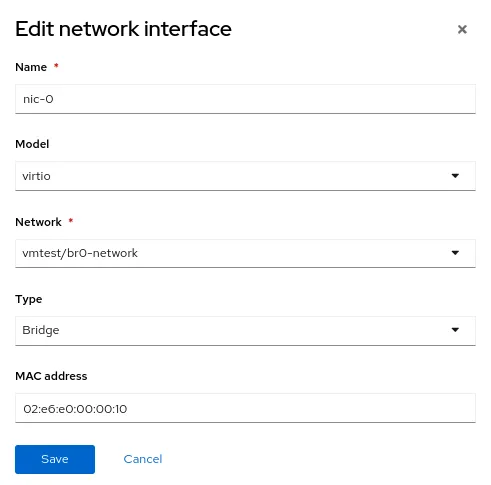

Virtual machine NIC configuration

To use the new external network with a virtual machine, modify the Network interfaces section of the virtual machine and select the new vmtest/br-ex-network as the Network type. It's also possible to customize the MAC address in this form.

Continue creating the virtual machine as you normally would. After the virtual machine boots, your virtual NIC is connected to the external network. In this example, the external network has a DHCP server, so an IP address is automatically assigned, and access to the network is permitted.
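The console form edits the VirtualMachine object for you. As a rough guide to what it produces, the excerpt below shows only the network-related fields of a VirtualMachine attached to vmtest/br-ex-network. The VM name example-vm, the interface name external, and the commented-out MAC address are hypothetical, and everything else a real VM needs (disks, memory, run strategy) is omitted.

```yaml
# Excerpt of a VirtualMachine showing only the fields that attach it to the
# localnet NetworkAttachmentDefinition. Names and values are hypothetical.
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: example-vm
  namespace: vmtest                 # same namespace as the NetworkAttachmentDefinition
spec:
  template:
    spec:
      domain:
        devices:
          interfaces:
          - name: external          # must match a network name listed below
            bridge: {}              # bridge binding for the secondary network
            # macAddress: "02:00:00:00:00:01"   # optional custom MAC
      networks:
      - name: external
        multus:
          networkName: vmtest/br-ex-network     # <namespace>/<name> of the NAD
```

Because this excerpt lists only the external network, the VM has no pod-network interface. If you also want one, add an interface with masquerade: {} and a matching network entry with pod: {}.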

Delete the NetworkAttachmentDefinition and the localnet on br-ex

If you ever want to undo the steps above, first ensure that no virtual machines are using the NetworkAttachmentDefinition, and then delete it using the Console. Alternatively, use the command:

```
$ oc delete network-attachment-definition/br-ex-network -n vmtest
networkattachmentdefinition.k8s.cni.cncf.io "br-ex-network" deleted
```

Next, delete the NodeNetworkConfigurationPolicy. Deleting the policy does not undo the changes on the OpenShift nodes!

```
$ oc delete nncp/br-ex-network
nodenetworkconfigurationpolicy.nmstate.io "br-ex-network" deleted
```

Deleting the NNCP also deletes all associated NNCEs:

```
$ oc get nnce
No resources found
```

Finally, modify the NNCP YAML file that was used before, but change the bridge-mapping state from present to absent:

```
$ cat br-ex-nncp.yaml
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: br-ex-network
spec:
  nodeSelector:
    node-role.kubernetes.io/worker: ''
  desiredState:
    ovn:
      bridge-mappings:
      - localnet: br-ex-network
        bridge: br-ex
        state: absent   # Changed from present
```

Re-apply the updated NNCP:

```
$ oc apply -f br-ex-nncp.yaml
nodenetworkconfigurationpolicy.nmstate.io/br-ex-network

$ oc get nncp
NAME            STATUS      REASON
br-ex-network   Available   SuccessfullyConfigured

$ oc get nnce
NAME                               STATUS      STATUS AGE   REASON
lt01ocp10.matt.lab.br-ex-network   Available   2s           SuccessfullyConfigured
lt01ocp11.matt.lab.br-ex-network   Available   29s          SuccessfullyConfigured
lt01ocp12.matt.lab.br-ex-network   Available   30s          SuccessfullyConfigured
```

The localnet configuration has now been removed. You may safely delete the NNCP.

Option #2: Using an external network with an OVS bridge on a dedicated NIC

OpenShift nodes can be connected to multiple networks that use different physical NICs. Many configuration options exist, such as bonds and VLANs, but in this example I'm simply using a dedicated NIC to configure an OVS bridge. For more information on those advanced configuration options, refer to our documentation.

You can see all node interfaces using this command (the output is truncated here for convenience):

```
$ oc get nns/lt01ocp10.matt.lab -o jsonpath='{.status.currentState.interfaces[3]}' | jq
{
  …
  "mac-address": "52:54:00:92:BB:00",
  "max-mtu": 65535,
  "min-mtu": 68,
  "mtu": 1500,
  "name": "enp2s0",
  "permanent-mac-address": "52:54:00:92:BB:00",
  "profile-name": "Wired connection 1",
  "state": "up",
  "type": "ethernet"
}
```

In this example, the unused network adapter on all nodes is enp2s0.

As in the previous example, start with an NNCP that creates a new OVS bridge called br0 on the nodes, using an unused NIC (enp2s0, in this example). The NNCP looks like this:

```
$ cat br0-worker-ovs-bridge.yaml
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: br0-ovs
spec:
  nodeSelector:
    node-role.kubernetes.io/worker: ""
  desiredState:
    interfaces:
    - name: br0
      description: |-
        A dedicated OVS bridge with enp2s0 as a port
        allowing all VLANs and untagged traffic
      type: ovs-bridge
      state: up
      bridge:
        options:
          stp: true
        port:
        - name: enp2s0
    ovn:
      bridge-mappings:
      - localnet: br0-network
        bridge: br0
        state: present
```

The first thing to note about the example above is the .metadata.name field, which is arbitrary and identifies the name of the NNCP. You can also see, near the end of the file, that you're adding a localnet bridge-mapping to the new br0 bridge, just like you did with br-ex in the single NIC example.

In the previous example using br-ex as a bridge, it was safe to assume that all OpenShift nodes have a bridge with this name, so you applied the NNCP to all worker nodes. However, in the current scenario, it's possible to have heterogeneous nodes with different NIC names for the same networks. In that case, you must add a label to each node type to identify which configuration it has. Then, using the .spec.nodeSelector in the example above, you can apply the configuration only to nodes that will work with this configuration. For other node types, you can modify the NNCP and the nodeSelector, and create the same bridge on those nodes, even when the underlying NIC name is different.

In this example, the NIC names are all the same, so you can use the same NNCP for all nodes.
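If your NIC names did differ, one way to handle it is a second policy scoped by a node label. The sketch below assumes a hypothetical label example.com/nic-layout=layout-b and a hypothetical NIC name ens4f0 on those nodes. You would first label the nodes (for example, oc label node <node-name> example.com/nic-layout=layout-b) and also narrow the nodeSelector of br0-ovs, which currently selects every worker, so that the two policies never select the same node.

```yaml
# Hypothetical second policy for nodes whose spare NIC is named ens4f0.
# A node is selected only if it carries BOTH labels below.
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: br0-ovs-layout-b
spec:
  nodeSelector:
    node-role.kubernetes.io/worker: ""
    example.com/nic-layout: layout-b     # hypothetical label
  desiredState:
    interfaces:
    - name: br0                          # same bridge name as on the other nodes
      description: OVS bridge with ens4f0 as a port
      type: ovs-bridge
      state: up
      bridge:
        options:
          stp: true
        port:
        - name: ens4f0                   # hypothetical NIC name on this node type
    ovn:
      bridge-mappings:
      - localnet: br0-network            # same localnet name on every node type
        bridge: br0
        state: present
```

Keeping the bridge and localnet names identical across node types means a single NetworkAttachmentDefinition can serve virtual machines regardless of which node type they land on.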

Now apply the NNCP, just as you did for the single NIC example:

```
$ oc apply -f br0-worker-ovs-bridge.yaml
nodenetworkconfigurationpolicy.nmstate.io/br0-ovs created
```

As before, the NNCP and NNCE take some time to create and apply successfully. You can see the new bridge in the NNS:

```
$ oc get nns/lt01ocp10.matt.lab -o jsonpath='{.status.currentState.interfaces[3]}' | jq
{
  …
    "port": [
      {
        "name": "enp2s0"
      }
    ]
  },
  "description": "A dedicated OVS bridge with enp2s0 as a port allowing all VLANs and untagged traffic",
  …
  "name": "br0",
  "ovs-db": {
    "external_ids": {},
    "other_config": {}
  },
  "profile-name": "br0-br",
  "state": "up",
  "type": "ovs-bridge",
  "wait-ip": "any"
}
```

NetworkAttachmentDefinition configuration

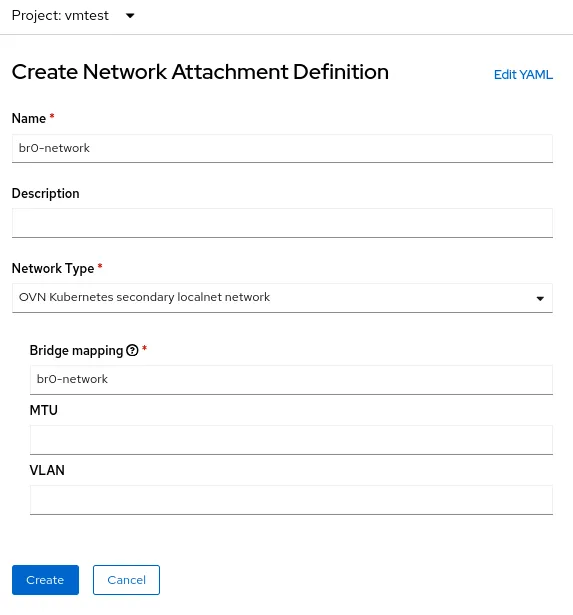

The process of creating a NetworkAttachmentDefinition for an OVS bridge is identical to the earlier single-NIC example, because in both cases you're using an OVN bridge mapping. In the current example, the bridge mapping name is br0-network, so that's what you enter in the NetworkAttachmentDefinition creation form.

Alternatively, you can create the NetworkAttachmentDefinition using a YAML file:

```
$ cat br0-network-nad.yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: br0-network
  namespace: vmtest
spec:
  config: '{
    "name":"br0-network",
    "type":"ovn-k8s-cni-overlay",
    "cniVersion":"0.4.0",
    "topology":"localnet",
    "netAttachDefName":"vmtest/br0-network"
  }'

$ oc apply -f br0-network-nad.yaml
networkattachmentdefinition.k8s.cni.cncf.io/br0-network created
```

Virtual machine NIC configuration

As before, create a virtual machine as normal, using the new NetworkAttachmentDefinition called vmtest/br0-network for the NIC network.

When the virtual machine boots, the NIC uses the br0 bridge on the node. In this example, the dedicated NIC for br0 is on the same network as the br-ex bridge, so you get an IP address from the same subnet as before, and network access is permitted.
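Because the br0 bridge was described as allowing all VLANs and untagged traffic, you could also tag the virtual machines' traffic. A minimal sketch, assuming your OpenShift release supports the vlanID key for the localnet topology (check the documentation for your version) and that the hypothetical VLAN 100 is trunked to enp2s0 on the physical switch, is a variant of br0-network-nad.yaml with one extra key:

```yaml
# Variant of br0-network-nad.yaml that tags VM traffic with a VLAN.
# VLAN 100 is a hypothetical value; the upstream switch port must trunk it.
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: br0-network
  namespace: vmtest
spec:
  config: '{
    "name":"br0-network",
    "type":"ovn-k8s-cni-overlay",
    "cniVersion":"0.4.0",
    "topology":"localnet",
    "vlanID":100,
    "netAttachDefName":"vmtest/br0-network"
  }'
```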

Delete the NetworkAttachmentDefinition and the OVS bridge

The process to delete the NetworkAttachmentDefinition and OVS bridge is mostly the same as in the previous example. Ensure that no virtual machines are using the NetworkAttachmentDefinition, and then delete it from the Console or the command line:

```
$ oc delete network-attachment-definition/br0-network -n vmtest
networkattachmentdefinition.k8s.cni.cncf.io "br0-network" deleted
```

Next, delete the NodeNetworkConfigurationPolicy (remember, deleting the policy does not undo the changes on the OpenShift nodes):

```
$ oc delete nncp/br0-ovs
nodenetworkconfigurationpolicy.nmstate.io "br0-ovs" deleted
```

Deleting the NNCP also deletes the associated NNCEs:

```
$ oc get nnce
No resources found
```

Finally, modify the NNCP YAML file that was used before, changing the interface state from “up” to “absent” and the bridge-mapping state from “present” to “absent”:

```
$ cat br0-worker-ovs-bridge.yaml
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: br0-ovs
spec:
  nodeSelector:
    node-role.kubernetes.io/worker: ""
  desiredState:
    interfaces:
    - name: br0
      description: |-
        A dedicated OVS bridge with enp2s0 as a port
        allowing all VLANs and untagged traffic
      type: ovs-bridge
      state: absent   # Changed from up
      bridge:
        options:
          stp: true
        port:
        - name: enp2s0
    ovn:
      bridge-mappings:
      - localnet: br0-network
        bridge: br0
        state: absent   # Changed from present
```

Re-apply the updated NNCP. After the NNCP has processed successfully, you can delete it.

```
$ oc apply -f br0-worker-ovs-bridge.yaml
nodenetworkconfigurationpolicy.nmstate.io/br0-ovs created
```

Option #3: Using an external network with a Linux bridge on a dedicated NIC

In Linux networking, OVS bridges and Linux bridges serve the same purpose, and the decision to use one over the other ultimately comes down to the needs of the environment. Many articles discuss the advantages and disadvantages of both bridge types. In short, Linux bridges are more mature and simpler than OVS bridges, but they are less feature rich. OVS bridges offer more tunnel types and other modern features, but they are a bit harder to troubleshoot. For OpenShift Virtualization, you should probably default to OVS bridges because of features like MultiNetworkPolicy, but deployments can succeed with either option.

Note that when a virtual machine interface is connected to an OVS bridge, the default MTU is 1400. When a virtual machine interface is connected to a Linux bridge, the default MTU is 1500. More information about the cluster MTU size can be found in the official documentation.
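If your physical network supports it end to end and you want localnet-attached VMs to use a larger MTU than the default, one option, assuming the mtu key for OVN-Kubernetes localnet secondary networks is available in your release, is to set it explicitly in the NetworkAttachmentDefinition. The sketch below is a variant of br-ex-network-nad.yaml; 1500 is an example value and must not exceed the MTU of the underlying NIC and switches.

```yaml
# Variant of br-ex-network-nad.yaml with an explicit MTU.
# 1500 is an example value; it must fit the physical network's MTU.
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: br-ex-network
  namespace: vmtest
spec:
  config: '{
    "name":"br-ex-network",
    "type":"ovn-k8s-cni-overlay",
    "cniVersion":"0.4.0",
    "topology":"localnet",
    "mtu":1500,
    "netAttachDefName":"vmtest/br-ex-network"
  }'
```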

Node configuration

As in the prior example, I'm using a dedicated NIC, this time to create a new Linux bridge. To keep things interesting, I'm connecting the Linux bridge to a network that doesn't have a DHCP server so you can see how this affects virtual machines connected to that network.

In this example, I'm creating a Linux bridge called br1 on interface enp8s0, which is attached to the 172.16.0.0/24 network that has no internet access or DHCP server. The NNCP looks like this:

```
$ cat br1-worker-linux-bridge.yaml
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: br1-linux-bridge
spec:
  nodeSelector:
    node-role.kubernetes.io/worker: ""
  desiredState:
    interfaces:
    - name: br1
      description: Linux bridge with enp8s0 as a port
      type: linux-bridge
      state: up
      bridge:
        options:
          stp:
            enabled: false
        port:
        - name: enp8s0

$ oc apply -f br1-worker-linux-bridge.yaml
nodenetworkconfigurationpolicy.nmstate.io/br1-linux-bridge created
```

As before, the NNCP and NNCE take some seconds to create and apply successfully.

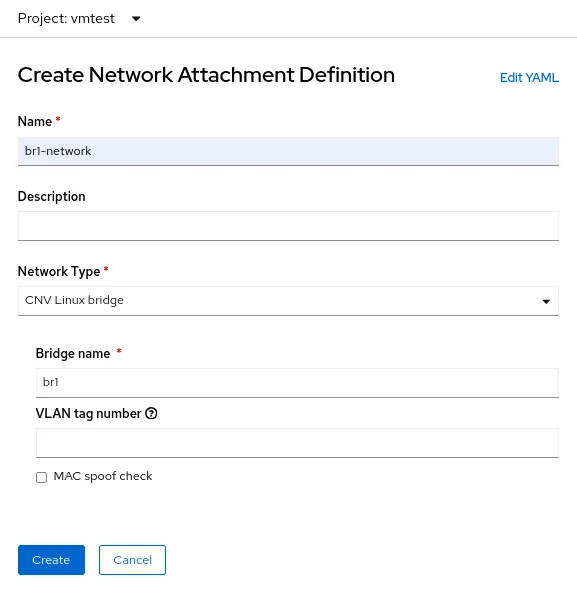

NetworkAttachmentDefinition configuration

The process of creating a NetworkAttachmentDefinition for a Linux bridge is a bit different from both of the earlier examples because you aren't connecting to an OVN localnet this time. For a Linux bridge connection, you connect directly to the new bridge. Here's the NetworkAttachmentDefinition creation form:

In this case, select the Network Type of CNV Linux bridge and put the name of the actual bridge in the Bridge name field, which is br1 in this example.

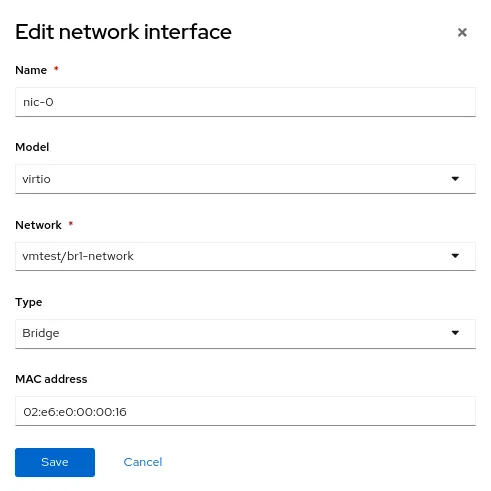
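As with the earlier options, you can create this NetworkAttachmentDefinition from YAML instead of the console. The sketch below follows the cnv-bridge format shown in the OpenShift Virtualization documentation. Note that, unlike the localnet examples, the bridge key names the actual Linux bridge (br1). The name br1-network matches the one used for the virtual machine below, and the resourceName annotation, which asks the scheduler to place VMs only on nodes that have br1, is optional.

```yaml
# NetworkAttachmentDefinition for the br1 Linux bridge (cnv-bridge CNI).
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: br1-network
  namespace: vmtest
  annotations:
    # Optional: run VMs only on nodes where the br1 bridge exists.
    k8s.v1.cni.cncf.io/resourceName: bridge.network.kubevirt.io/br1
spec:
  config: '{
    "cniVersion":"0.3.1",
    "name":"br1-network",
    "type":"cnv-bridge",
    "bridge":"br1",
    "macspoofchk":true
  }'
```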

Virtual machine NIC configuration

Now create another virtual machine, but use the new NetworkAttachmentDefinition called vmtest/br1-network for the NIC network. This attaches the NIC to the new Linux bridge.

When the virtual machine boots, the NIC uses the br1 bridge on the node. In this example, the dedicated NIC is on a network with no DHCP server and no internet access, so give the NIC a manual IP address using nmcli and validate connectivity on the local network only.
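Setting the address by hand with nmcli is fine for a test. If the guest image runs cloud-init, one alternative is to pass the static address through the VM's cloudInitNoCloud networkData and match the NIC by MAC address. In the excerpt below, the VM name, the MAC address, and 172.16.0.50/24 are hypothetical values, and only the networking-related fields are shown.

```yaml
# Excerpt of a VirtualMachine on the isolated br1 network, with a static IP
# supplied through cloud-init. VM name, MAC, and IP values are hypothetical.
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: example-vm-br1
  namespace: vmtest
spec:
  template:
    spec:
      domain:
        devices:
          disks:
          - name: cloudinitdisk           # exposes the cloud-init data to the guest
            disk:
              bus: virtio
          interfaces:
          - name: isolated
            bridge: {}
            macAddress: "02:00:00:ac:10:32"
      networks:
      - name: isolated
        multus:
          networkName: vmtest/br1-network
      volumes:
      - name: cloudinitdisk
        cloudInitNoCloud:
          # Network config version 2; the guest NIC is matched by its MAC address.
          # No gateway is set because this network has no internet access.
          networkData: |
            version: 2
            ethernets:
              isolated0:
                match:
                  macaddress: "02:00:00:ac:10:32"
                addresses:
                - 172.16.0.50/24
```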

Delete the NetworkAttachmentDefinition and the Linux bridge

As with the previous examples, to delete the NetworkAttachmentDefinition and the Linux bridge, first ensure that no virtual machines are using the NetworkAttachmentDefinition. Delete it from the Console or the command line:

```
$ oc delete network-attachment-definition/br1-network -n vmtest
networkattachmentdefinition.k8s.cni.cncf.io "br1-network" deleted
```

Next, delete the NodeNetworkConfigurationPolicy:

```
$ oc delete nncp/br1-linux-bridge
nodenetworkconfigurationpolicy.nmstate.io "br1-linux-bridge" deleted
```

Deleting the NNCP also deletes all associated NNCEs (remember, deleting the policy does not undo the changes on the OpenShift nodes):

```
$ oc get nnce
No resources found
```

Finally, modify the NNCP YAML file, changing the interface state from up to absent:

```
$ cat br1-worker-linux-bridge.yaml
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: br1-linux-bridge
spec:
  nodeSelector:
    node-role.kubernetes.io/worker: ""
  desiredState:
    interfaces:
    - name: br1
      description: Linux bridge with enp8s0 as a port
      type: linux-bridge
      state: absent   # Changed from up
      bridge:
        options:
          stp:
            enabled: false
        port:
        - name: enp8s0
```

Re-apply the updated NNCP. After the NNCP has processed successfully, it may be deleted.

```
$ oc apply -f br1-worker-linux-bridge.yaml
nodenetworkconfigurationpolicy.nmstate.io/br1-linux-bridge created
```

Advanced networking in OpenShift Virtualization

Many OpenShift Virtualization users can benefit from the variety of advanced networking features already built into Red Hat OpenShift. However, as more workloads are migrated from traditional hypervisors, you might want to leverage existing infrastructure, which may require connecting OpenShift Virtualization workloads directly to external networks. The NMState operator, in conjunction with Multus networking, provides a flexible way to achieve that performance and connectivity and to ease your transition from a traditional hypervisor to Red Hat OpenShift Virtualization.

To learn more about OpenShift Virtualization, you can check out another blog post of mine about the topic, or have a look at the product on our website. For more information about the networking topics covered in this article, Red Hat OpenShift documentation has all of the details you need. Finally, if you want to see a demo or use OpenShift Virtualization yourself, contact your Account Executive.

About the author

Matthew Secaur is a Red Hat Senior Technical Account Manager (TAM) for Canada and the Northeast United States. He has expertise in Red Hat OpenShift Platform, Red Hat OpenStack Platform, and Red Hat Ceph Storage.
