
With the push towards wide-scale 5G and edge service deployments, it’s important for Digital Service Providers (DSPs) to have a solid foundation for responding to customer challenges and requirements. Red Hat Enterprise Linux 8 offers several benefits for DSPs. With improved operating system performance, DSPs can take advantage of better throughput to deliver an enhanced user experience for their customers.

With the release of Red Hat Enterprise Linux (RHEL) 8.3, and building on our successful collaboration with Intel, Red Hat has extended the list of certified hardware to include Intel’s 3rd Gen Xeon Scalable processors (code-named Ice Lake, model Platinum 8360Y) and Intel’s latest 100Gb network adapter, the E810 series.

This post compares the performance of an Intel Ice Lake processor to Intel’s previous generation Cascade Lake processor using a benchmark based on RFC 2544 to demonstrate accelerated network packet processing. Note that this was drafted using the performance lab’s “white-box” hardware for Intel's Ice Lake CPUs and the information here is supplied for understanding the improvements in packet processing.

What is DPDK in RHEL 8.3?

Data Plane Development Kit (DPDK) technology is a bundled feature of RHEL that lets userspace programs take full control of network adapters. This allows for faster operations because network packets can skip the kernel network stack entirely. RHEL includes DPDK (dpdk-20.11), a core set of libraries that accelerate packet processing to enable these purpose-built networking programs, and “Dynamic Device Personalization” (DDP), which provides the ability to reconfigure the packet processing pipeline across a broad range of traffic types.

Testing for Ultra-high Performance Networking

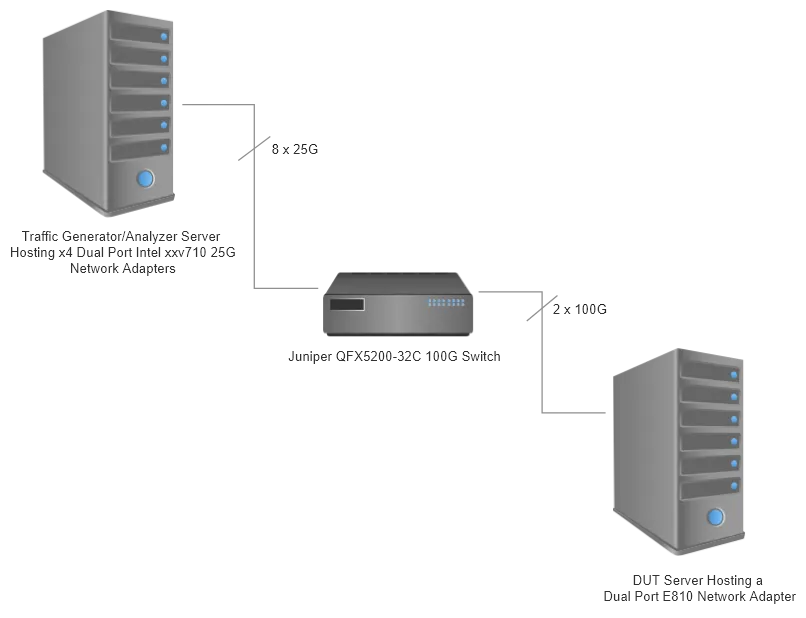

Measuring the absolute highest packet processing performance involves using a dedicated traffic generator, which also happens to use DPDK, and a simple packet-forwarding program using DPDK libraries. RHEL’s DPDK package bundles the “testpmd” application, which was used to demonstrate the performance of these platforms.

Our test transmits Ethernet frames to both 100Gb ports on the Intel E810 network adapter. The testpmd application listens on both ports and forwards each frame out of the port opposite the one on which it was received. The traffic generator, TRex, monitors packet loss and searches for the peak packet rate that stays below a prescribed loss threshold. We repeated this type of test with these parameters:

-

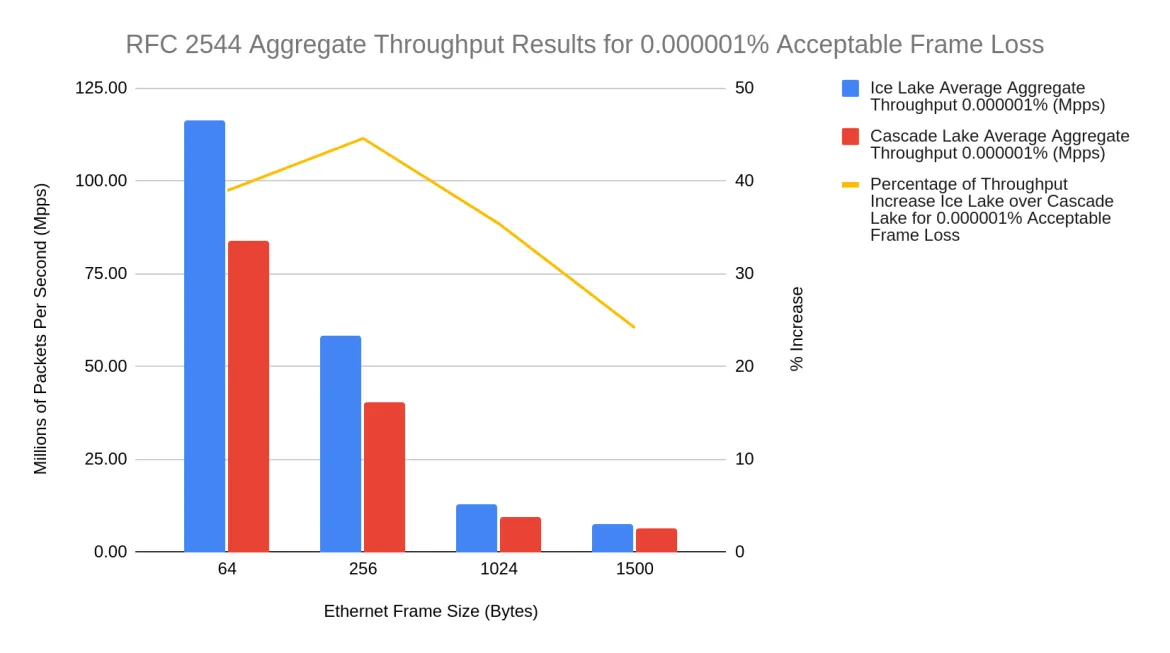

Maximum acceptable frame loss: 0.000001%

-

Ethernet frame sizes: 64, 256, 1024, and 1500 bytes

The test followed the procedures outlined in RFC 2544: Benchmarking Methodology for Network Interconnect Devices. Each test scenario was given an acceptable packet loss criterion equal to the maximum acceptable frame loss, and that criterion had to be maintained for a certain amount of time. Each test used a binary search to determine the best packet throughput rate that still met the packet loss criterion.
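The binary search described above can be sketched in a few lines of Python. This is only an illustration of the technique, not the lab's actual harness: `find_max_rate`, `fake_trial`, the 0.01 Mpps resolution, and the 100 Mpps capacity of the simulated device are all hypothetical names and values chosen for the example. A real run would replace `fake_trial` with a timed traffic-generator trial.

```python
from typing import Callable


def find_max_rate(
    measure_loss_pct: Callable[[float], float],
    line_rate_mpps: float,
    max_loss_pct: float = 0.000001,
    resolution_mpps: float = 0.01,
) -> float:
    """Return the highest offered rate (Mpps) whose measured loss
    stays at or below max_loss_pct, found by binary search."""
    low, high = 0.0, line_rate_mpps
    best = 0.0
    while high - low > resolution_mpps:
        rate = (low + high) / 2
        if measure_loss_pct(rate) <= max_loss_pct:
            best = rate   # trial passed: remember it and search higher
            low = rate
        else:
            high = rate   # trial failed: search lower
    return best


def fake_trial(rate_mpps: float) -> float:
    """Hypothetical stand-in for one timed trial: a simulated device
    that forwards up to 100 Mpps and drops everything beyond that."""
    capacity_mpps = 100.0
    if rate_mpps <= capacity_mpps:
        return 0.0
    return (rate_mpps - capacity_mpps) / rate_mpps * 100


# 148.81 Mpps is the theoretical 64-byte frame rate of one 100Gb port.
result = find_max_rate(fake_trial, line_rate_mpps=148.81)
print(f"Highest passing rate: {result:.2f} Mpps")
```

The search halves the candidate range after every trial, so the number of trials grows only with the logarithm of the ratio between line rate and the chosen resolution.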

Performance Gains

The Device Under Test (DUT) server was either:

- An Ice Lake prototype system housing a dual-port Intel Ethernet Network 100G Adapter E810-C-Q2 in a PCI Express 4 slot
- A Cascade Lake system housing the same dual-port Intel Ethernet Network 100G Adapter E810-C-Q2 in a PCI Express 3 slot

Beyond PCI Express 4 support, Ice Lake also implements a larger level 3 cache and a different microarchitecture. In our tests, Ice Lake’s average network throughput exceeded Cascade Lake’s across all frame sizes.

| Ethernet frame size (bytes) | Ice Lake average aggregate throughput at 0.000001% acceptable loss (Mpps) | Cascade Lake average aggregate throughput at 0.000001% acceptable loss (Mpps) | Ice Lake throughput increase over Cascade Lake at 0.000001% acceptable loss (%) |
|---|---|---|---|
| 64 | 116.54 | 83.85 | 39 |
| 256 | 58.56 | 40.49 | 45 |
| 1024 | 12.91 | 9.53 | 35 |
| 1500 | 7.80 | 6.28 | 24 |

The gain is substantial at small frame sizes (64- to 256-byte Ethernet frames), to which telco applications are sensitive. VoIP, for example, relies on small frames to maximize speech quality, with each frame carrying a fraction of a syllable of speech. For larger frame sizes, Ice Lake and Cascade Lake approach the same network bandwidth.
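As a rough cross-check, the percentage column can be recomputed from the two Mpps columns, and each packet rate can be converted to approximate bandwidth on the wire. The sketch below makes two assumptions that the test description does not state: the quoted frame sizes include the frame check sequence, and each frame carries 20 bytes of wire overhead (8 bytes of preamble and start-of-frame delimiter plus a 12-byte inter-frame gap). The function names are illustrative only.

```python
# Measured aggregate throughput in Mpps, copied from the results table:
# frame size (bytes) -> (Ice Lake, Cascade Lake)
RESULTS = {
    64: (116.54, 83.85),
    256: (58.56, 40.49),
    1024: (12.91, 9.53),
    1500: (7.80, 6.28),
}

# Assumption: 20 bytes of per-frame wire overhead
# (8 bytes preamble/SFD + 12 bytes inter-frame gap).
WIRE_OVERHEAD_BYTES = 20


def percent_increase(new: float, old: float) -> float:
    """Relative gain of `new` over `old`, in percent."""
    return (new - old) / old * 100


def wire_gbps(mpps: float, frame_bytes: int) -> float:
    """Approximate on-the-wire bandwidth in Gb/s for a packet rate in Mpps."""
    return mpps * 1e6 * (frame_bytes + WIRE_OVERHEAD_BYTES) * 8 / 1e9


for size, (ice_lake, cascade_lake) in RESULTS.items():
    print(
        f"{size:>5} B: +{percent_increase(ice_lake, cascade_lake):.0f}%  "
        f"Ice Lake {wire_gbps(ice_lake, size):.1f} Gb/s, "
        f"Cascade Lake {wire_gbps(cascade_lake, size):.1f} Gb/s"
    )
```

Rounded to whole numbers, the recomputed gains match the percentages reported in the table.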

Conclusion

Intel’s 3rd Gen Xeon Platinum 8360Y (Ice Lake), combined with the E810 adapter, delivers a significant network performance improvement over Cascade Lake with the same adapter. In our tests, measured network throughput increased by 39% with 64-byte Ethernet frames and by 45% with 256-byte Ethernet frames.

Tuning a network system for optimum throughput can be a complex challenge. Red Hat Enterprise Linux not only runs on a range of certified hardware, but also comes with pre-packaged mechanisms and tunables to get the best performance out of your networking hardware. In this case, isolcpus (a kernel boot parameter that isolates CPUs from the kernel scheduler) and the “cpu-partitioning” TuneD profile were used to isolate cores and allow uninterrupted packet forwarding by DPDK poll mode driver (PMD) threads.
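One way to sanity-check core isolation before starting a forwarding test is to compare the cores intended for PMD threads against the kernel's list of isolated CPUs. The sketch below assumes a Linux system that exposes that list at `/sys/devices/system/cpu/isolated`. The helper names and the `pmd_cores` assignment are hypothetical, and the parser handles only plain ranges such as `2-5,8`, not stride notation.

```python
from pathlib import Path
from typing import List


def parse_cpu_list(text: str) -> List[int]:
    """Expand a kernel cpulist string such as '2-5,8' into [2, 3, 4, 5, 8]."""
    cpus: List[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty string means no CPUs are listed
        if "-" in part:
            start, end = part.split("-")
            cpus.extend(range(int(start), int(end) + 1))
        else:
            cpus.append(int(part))
    return cpus


def isolated_cpus(path: str = "/sys/devices/system/cpu/isolated") -> List[int]:
    """Read the isolated CPU list from sysfs; empty if the file is absent."""
    sysfs_file = Path(path)
    return parse_cpu_list(sysfs_file.read_text()) if sysfs_file.exists() else []


# Hypothetical PMD core assignment, checked against a sample cpulist string
# rather than the live system so the example runs anywhere.
pmd_cores = [2, 3, 4, 5]
isolated = parse_cpu_list("2-5,8")
not_isolated = [core for core in pmd_cores if core not in isolated]
print("PMD cores that are not isolated:", not_isolated)
```

On a tuned host, `isolated_cpus()` can replace the sample string, and a non-empty `not_isolated` list would suggest that some PMD threads share cores with the general scheduler.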

Building on a decades-long collaboration, the Red Hat Performance Team and our partners at Intel have been working together to leverage the latest technologies and features in the networking space and respond to dynamic customer demands and challenges.
