The Everglades is a region of Florida that consists of wetlands and swamps. These natural areas are filled with life and possibility, so it makes sense that Everglades was the code name for the new Red Hat Gluster Storage (RHGS) 3.1, announced today and available this summer.

What is it, what’s new

For those not familiar with RHGS, it combines GlusterFS 3.7 with the Red Hat Enterprise Linux platform. Together, they provide space-efficient, resilient, scalable, high-performance and cost-effective storage.

This release brings:

- Erasure Coding

- Tiering

- Clustered NFS-Ganesha

- Bit-rot detection

- And more!

RHGS 3.1 also includes enhancements to address the data protection and storage management challenges faced by users of unstructured and big data storage.

Getting into the details

These are just a handful of the new capabilities in this update. For more insight, please visit this page.

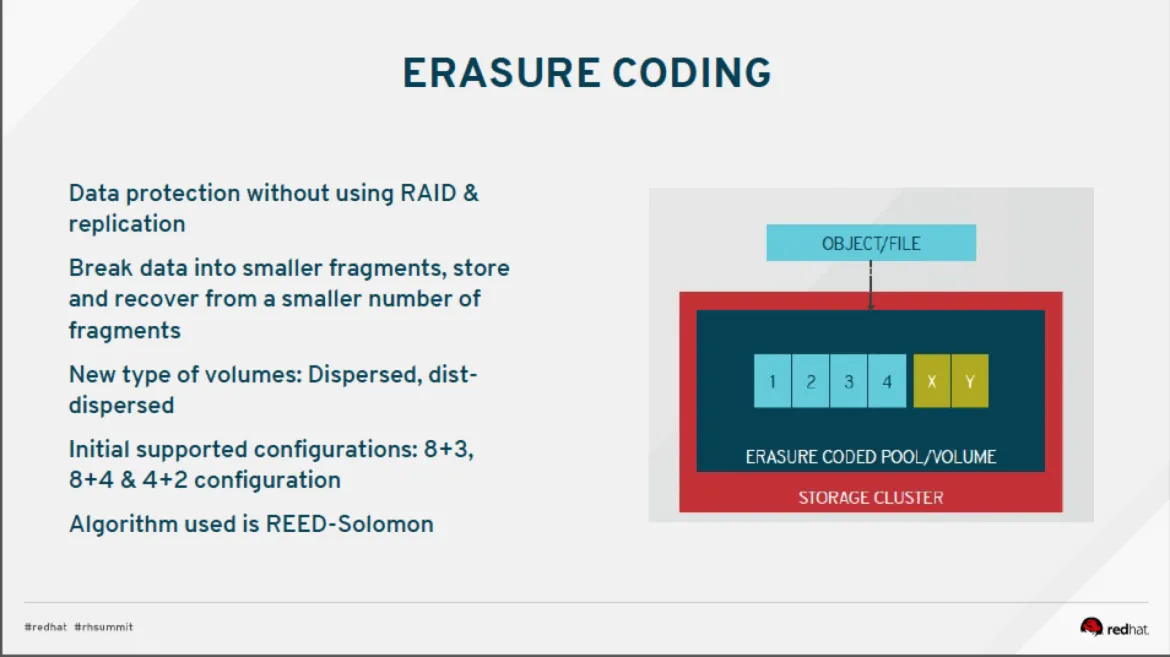

Erasure coding

Erasure coding is an advanced data protection mechanism that reconstructs corrupted or lost data by using information about the data that’s stored elsewhere in the storage system.
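To make the idea of rebuilding lost data from information stored elsewhere concrete, here is a minimal sketch that uses a single XOR parity fragment. This is a deliberately simplified stand-in and is not the encoding RHGS uses; the function names and sample data are hypothetical, and the scheme shown survives the loss of only one fragment.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(fragments: list) -> bytes:
    """Compute one parity fragment over equal-length data fragments."""
    return reduce(xor_bytes, fragments)


def reconstruct(fragments: list, parity: bytes):
    """Rebuild the single missing fragment (marked as None) from the rest."""
    missing = fragments.index(None)
    survivors = [f for f in fragments if f is not None]
    # XOR-ing the parity with every surviving fragment leaves the lost one.
    recovered = reduce(xor_bytes, survivors, parity)
    return missing, recovered


# Hypothetical data split into three fragments stored on different bricks.
data = [b"glus", b"ter ", b"3.1!"]
parity = encode(data)

# Simulate losing the second fragment, then rebuild it.
damaged = [data[0], None, data[2]]
index, recovered = reconstruct(damaged, parity)
print(index, recovered)
```

Production erasure codes generalize this idea to several redundancy fragments, so more than one simultaneous failure can be tolerated.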

Erasure coding is an alternative to hardware RAID. Although RAID 5 and RAID 6 protect against single and double disk failures respectively, the risk of further disks failing during a rebuild is very high for higher-capacity disks. Erasure coding provides failure protection beyond single or double component failure and consumes less space than replication.
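The space claim can be checked with simple arithmetic. The sketch below compares raw capacity consumed per unit of usable data, assuming a hypothetical layout of 4 data fragments plus 2 redundancy fragments against 3-way replication; both tolerate two failures. The layout and function names are illustrative assumptions, not a statement of what RHGS 3.1 configures by default.

```python
def erasure_overhead(data_fragments: int, redundancy_fragments: int) -> float:
    """Raw bytes stored per usable byte for a (data + redundancy) layout."""
    return (data_fragments + redundancy_fragments) / data_fragments


def replication_overhead(copies: int) -> float:
    """Raw bytes stored per usable byte when every byte is kept `copies` times."""
    return float(copies)


# Assumed layouts, each able to survive the loss of any two components.
ec = erasure_overhead(4, 2)
rep = replication_overhead(3)

print(f"erasure coding 4+2: {ec:.1f}x raw capacity")
print(f"3-way replication:  {rep:.1f}x raw capacity")
```

Under these assumptions, the erasure-coded layout needs half the raw capacity of replication for the same failure tolerance.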

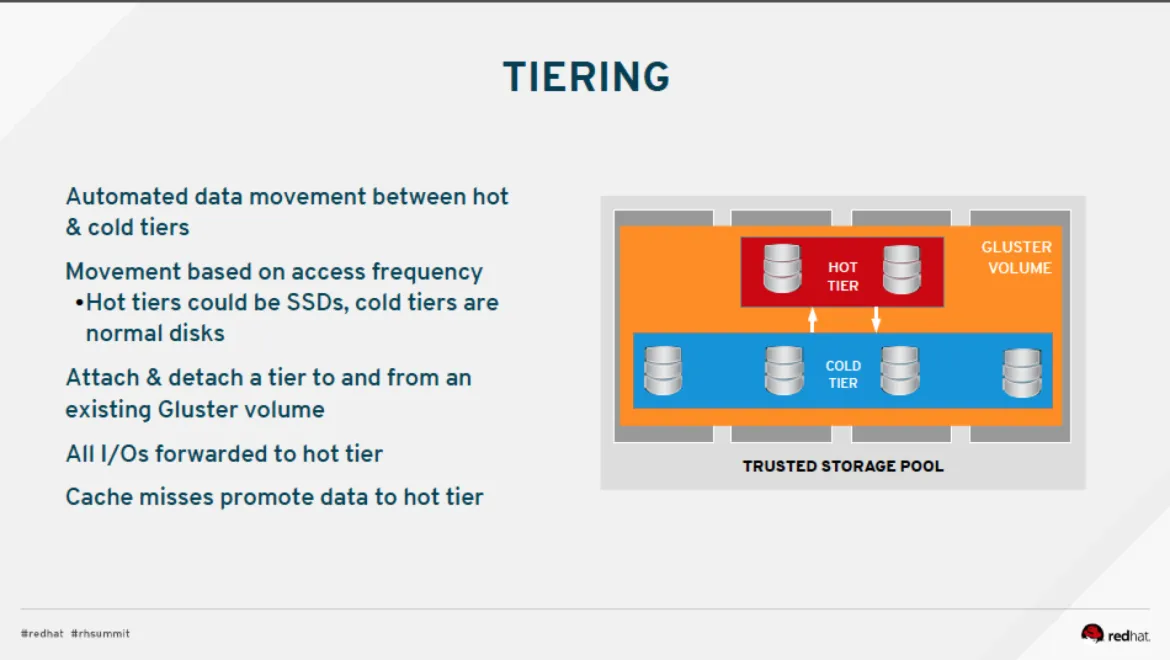

Tiering

Moving data between tiers of hot and cold storage is a computationally expensive task. To address this, RHGS 3.1 supports automated promotion and demotion of data within a volume, so different sub-volume types act as hot and cold tiers. Data is automatically assigned or reassigned a “temperature” based on the frequency of access. RHGS supports an "attach" operation which converts an existing volume into the "cold" tier and creates a new "hot" tier in the same volume. Together, the combination is a single "tiered" volume. For example, the existing volume may be erasure coded on HDDs and the "hot" tier could be distributed-replicated on SSDs.
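As a rough illustration of temperature-based promotion and demotion, the sketch below counts accesses per file over an observation window and moves files between tiers at the end of it. The class name, thresholds, and windowing policy are all hypothetical; the actual RHGS heuristics are not described here.

```python
from collections import Counter

# Hypothetical thresholds, chosen only for illustration.
PROMOTE_AT = 3  # accesses per window needed to move cold -> hot
DEMOTE_AT = 0   # accesses per window at or below which hot -> cold


class TieredVolume:
    """Toy model of one volume with a hot tier and a cold tier."""

    def __init__(self, files):
        # Existing data starts on the cold tier, as after an "attach".
        self.tier = {name: "cold" for name in files}
        self.hits = Counter()

    def read(self, name):
        """Record one access to a file."""
        self.hits[name] += 1

    def rebalance(self):
        """Close the window: promote or demote, then reset the counters."""
        moves = []
        for name, tier in self.tier.items():
            count = self.hits[name]
            if tier == "cold" and count >= PROMOTE_AT:
                self.tier[name] = "hot"
                moves.append((name, "promote"))
            elif tier == "hot" and count <= DEMOTE_AT:
                self.tier[name] = "cold"
                moves.append((name, "demote"))
        self.hits.clear()
        return moves


vol = TieredVolume(["archive.tar", "orders.db"])
for _ in range(3):
    vol.read("orders.db")
first_window = vol.rebalance()   # orders.db was accessed often
second_window = vol.rebalance()  # nothing was accessed in this window
print(first_window, second_window)
```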

Active/Active NFSv4

RHGS 3.1 supports active-active NFSv4 using the NFS-Ganesha project. This allows a user to export Gluster volumes through NFSv4.0 and NFSv3 via NFS-Ganesha. NFS-Ganesha is a user-space implementation of the NFS protocol which is very flexible, with simplified failover and failback in case of a node or network failure. The high availability implementation (which supports up to 16 nodes of active-active NFS heads) uses the corosync and pacemaker infrastructure. Each node has a floating IP address which fails over to a configured surviving node in case of a failure. Failback happens when the failed node comes back online.
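The failover and failback behaviour of floating IP addresses can be modelled in a few lines. The sketch below is a toy simulation only: it does not use corosync or pacemaker, and the class, node names, and the rule of walking the configured chain until a surviving node is found are assumptions made for illustration.

```python
class NfsCluster:
    """Toy model: each node owns one floating IP, named after its home node."""

    def __init__(self, failover_target):
        # failover_target maps a node to the node configured to take over its IP.
        self.failover_target = failover_target
        self.online = set(failover_target)
        self.vip_host = {node: node for node in failover_target}

    def _surviving_target(self, home):
        """Follow the configured chain until an online node is found."""
        seen = {home}
        candidate = self.failover_target[home]
        while candidate not in self.online:
            if candidate in seen:
                raise RuntimeError("no surviving node can host the address")
            seen.add(candidate)
            candidate = self.failover_target[candidate]
        return candidate

    def fail(self, node):
        """Mark a node as failed and move every address it was hosting."""
        self.online.discard(node)
        for vip, host in self.vip_host.items():
            if host == node:
                self.vip_host[vip] = self._surviving_target(vip)

    def recover(self, node):
        """Bring a node back online; its own address fails back to it."""
        self.online.add(node)
        self.vip_host[node] = node


cluster = NfsCluster({"node1": "node2", "node2": "node3", "node3": "node1"})
cluster.fail("node1")
after_failure = dict(cluster.vip_host)
cluster.recover("node1")
after_failback = dict(cluster.vip_host)
print(after_failure, after_failback)
```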

Bit rot detection

Bit rot detection identifies silent data corruption, which is usually encountered at the disk level. This corruption leads to a slow deterioration in the performance and integrity of data stored in storage systems. RHGS 3.1 periodically runs a background scan to detect and report bit rot errors by verifying checksums computed with the SHA-256 algorithm.
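The checksum comparison at the heart of such a scan can be sketched with Python's standard `hashlib` module. The in-memory "store", the object names, and the `sign` and `scrub` helpers are hypothetical; this shows the general technique of comparing a stored SHA-256 signature against a freshly computed one, not the RHGS implementation.

```python
import hashlib


def sign(data: bytes) -> str:
    """Return the SHA-256 digest of an object's contents as hex."""
    return hashlib.sha256(data).hexdigest()


def scrub(objects: dict, signatures: dict) -> list:
    """Report objects whose current checksum no longer matches the stored one."""
    return sorted(
        name for name, data in objects.items() if sign(data) != signatures[name]
    )


# Hypothetical store: sign every object when it is written.
store = {"photo.jpg": b"original pixels", "notes.txt": b"meeting at ten"}
signatures = {name: sign(data) for name, data in store.items()}

# Simulate silent corruption: one byte changes without any write being recorded.
store["notes.txt"] = b"meeting at tEn"

corrupted = scrub(store, signatures)
print(corrupted)
```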

Because this process can have a severe impact on storage system performance, RHGS allows an administrator to run the scan at one of three speeds (lazy, normal, aggressive).

Your next step

To get more details about RHGS 3.1, please visit this page. To take RHGS for a test drive, please visit https://engage.redhat.com/aws-test-drive-201308271223.
