This article is the last in a six-part series (see my previous blog) presenting various usage models for Confidential Computing, a set of technologies designed to protect data in use. In this article, I explore interesting support technologies under active development in the confidential computing community.

Kernel, hypervisor and firmware support

Confidential Computing requires support from the host and guest kernel, the hypervisor, and firmware. At the time of writing, that support is uneven between platforms. Hardware vendors tend to develop and submit relatively large patch series, which can take a number of iterations to get approved.

Among the current active areas of development:

- Host kernel support for SEV-SNP

- Hypervisor, guest, and host support for TDX (and a few ancillary firmware projects)

- Platform support for ARM CCA

For attestation, the main consequence is that there are multiple not-yet-stabilized interfaces for collecting measurements about the guest, typically exposed as a /dev entry with a variety of similar but not identical ioctls. Standardization in this area has not even begun.
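
To illustrate what "similar but not identical" means for tooling, here is a minimal sketch of the first thing a platform-neutral agent has to do: work out which guest device node is present. The node names `/dev/sev-guest` and `/dev/tdx_guest` are assumptions based on recent Linux kernels and may differ on your system. The `detect_guest_devices` helper is hypothetical, and the platform-specific ioctls themselves are deliberately left out.

```python
from pathlib import Path

# Assumed device node names (relative to the filesystem root).
# These are illustrative; check your kernel's documentation.
CANDIDATE_DEVICES = {
    "sev-snp": "dev/sev-guest",
    "tdx": "dev/tdx_guest",
}


def detect_guest_devices(root="/"):
    """Return {platform: path} for each candidate device node found under root."""
    found = {}
    for platform, relative_path in CANDIDATE_DEVICES.items():
        path = Path(root) / relative_path
        if path.exists():
            found[platform] = str(path)
    return found


if __name__ == "__main__":
    # On a non-confidential machine this typically prints an empty dict.
    print(detect_guest_devices())
```

Even after detection, each platform needs its own request structures and ioctl numbers, which is exactly the part that has not been standardized.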

Platform provisioning tools

Before running confidential virtual machines, it's necessary to provision the host. This provisioning corresponds to the Endorse step in the REMITS pipeline, and typically generates a number of host-specific keys.

The tools to do that are highly platform-specific. In the case of AMD SEV, there are two toolsets. One is sev-tool, initially created by AMD for developers. The other is sevctl, a more polished and user-friendly implementation with the same functionality. Currently, there isn’t a single set of tools that can present a uniform user interface for all platforms.

Generic Key Broker and Attestation Services

In part 3 of this series, I introduced a REMITS pipeline model that allows you to compare and contrast various forms of attestation. In this model, the S stands for "Secrets". A good way to ensure that an untrusted execution environment does not receive sensitive data is to tie its execution to secrets that can only be unlocked through attestation. This is a good reason to bind key or secret brokering to attestation, even though the two are separate, both conceptually and in most implementations.
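
The idea of gating secrets on attestation can be sketched in a few lines. This is a toy model, not the interface of any real key broker: the `ToyKeyBroker` class, the `evidence` dictionary and its `measurement` field are all hypothetical, and a real verifier would also validate the hardware signature chain over the evidence rather than compare a bare digest.

```python
import hashlib
import hmac


class ToyKeyBroker:
    """Releases a secret only if the presented measurement matches a reference.

    Hypothetical sketch: real services also verify the signature on the
    evidence, check freshness (nonces) and apply a policy.
    """

    def __init__(self, reference_measurement: bytes, secret: bytes):
        self._reference = reference_measurement
        self._secret = secret

    def request_secret(self, evidence: dict) -> bytes:
        measurement = evidence.get("measurement", b"")
        # Constant-time comparison of the claimed and expected measurements.
        if not hmac.compare_digest(measurement, self._reference):
            raise PermissionError("attestation failed: unexpected measurement")
        return self._secret


if __name__ == "__main__":
    expected = hashlib.sha384(b"expected guest image").digest()
    broker = ToyKeyBroker(expected, b"disk-encryption-key")
    print(broker.request_secret({"measurement": expected}))
```

The attestation check and the secret store are kept as separate concerns inside the class, mirroring the point that brokering and attestation are distinct even when they are deployed together.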

The Confidential Containers project defines a generic key broker service (KBS), the primary access point for an agent running in a guest. KBS relies on the attestation service to verify evidence from the TEE. This is still a very active area of development, with the objective of making the design more modular and supporting more hardware platforms through platform-specific drivers (on both the attestation server side and the client side). This platform is intended to support both infrastructure-facing and workload-facing attestation.

This is not the only solution. Intel is working on Project Amber, which aims to provide an independent trust authority providing attestation of workloads in a public or private multi-cloud environment. It currently only supports Intel's own technologies (TDX and SGX), but the marketing material announces support for other trusted execution environments.

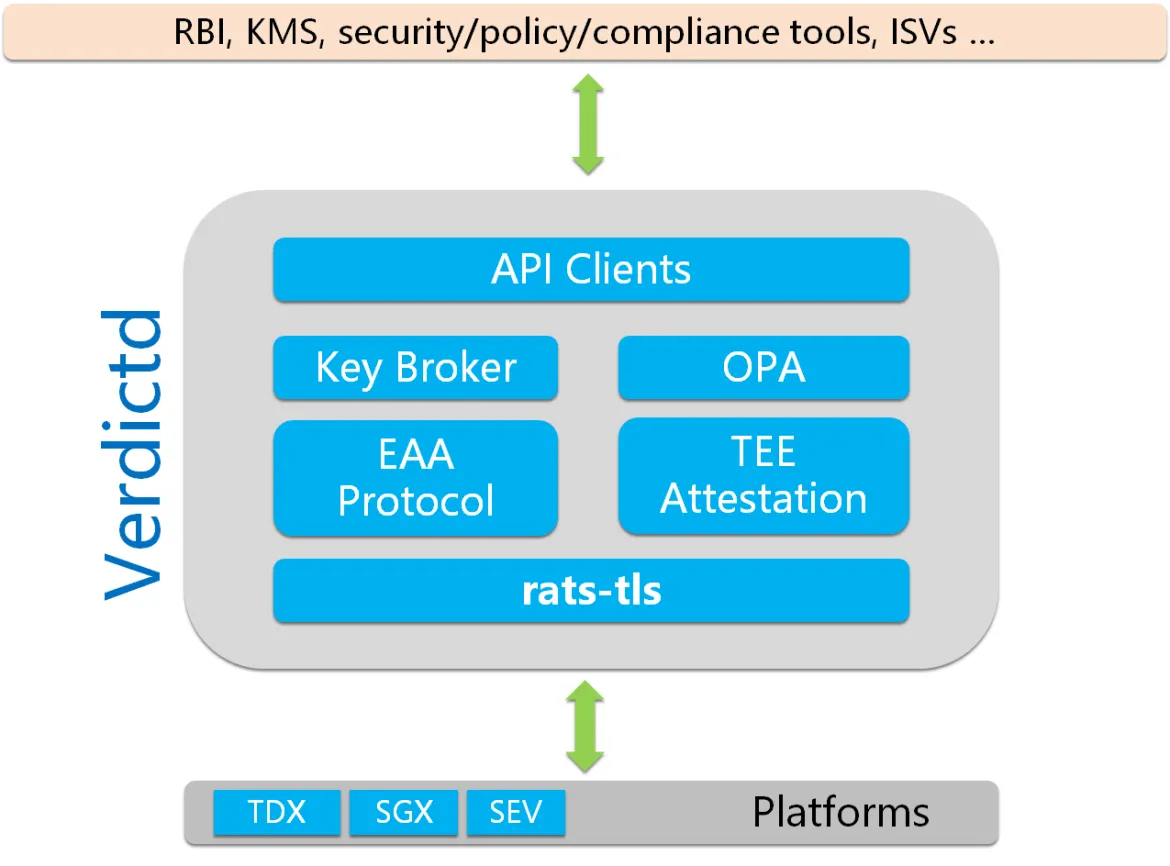

The Inclavare Containers project has developed its own attestation infrastructure, called verdictd. It also integrates with the key broker and incorporates Open Policy Agent support. It's based on their rats-tls project, an implementation of the remote attestation procedures (RATS) framework using transport layer security (TLS).

While there are a number of products, such as Keylime, that are pure attestation players, they were not deemed suitable because they are not designed to act as a synchronous, blocking attestation enforcement mechanism, delivering secrets on success. Instead, Keylime was designed to analyze the compliance of machines in a fleet for manual or semi-automated operator actions.

There was already some sharing of the key broker service interface defined by the Confidential Containers project with Confidential Workloads. Sharing this interface further, e.g. for virtual machine or cluster attestation, is desirable, and appears possible, although some changes will be required.

Secure Virtual Machine Service Module (SVSM)

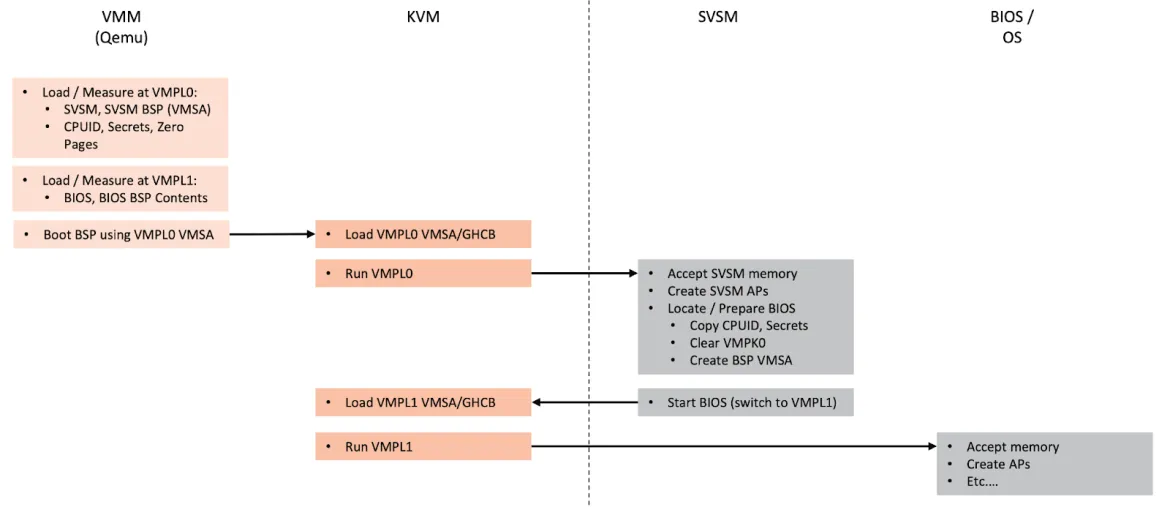

The Secure Virtual Machine Service Module (SVSM) is a new piece of firmware that runs at the most privileged virtual machine privilege level (VMPL0) and can provide secure services to a secure virtual machine running with reduced privilege.

The primary reason to implement such privileged services is to emulate older interfaces, notably the standard TPM, or to run guests that aren't aware that they're running in a confidential virtual machine.

In order to build a virtual TPM that the guest cannot tamper with, it is necessary to protect the vTPM code and data from a malicious guest or hypervisor. Functionally, this is roughly equivalent to what would be monitor code in ARM CCA, hypervisor code in SE or PEF, and a vTPM enclave or TD in TDX.

A draft SVSM specification has been published for review. There are currently two implementation efforts underway. One was started by AMD, and the other (Coconut) was proposed by SUSE. The community seems to have agreed to move forward with Coconut, but as with much of what is being discussed in this article, this is an area of active development.

Virtual Trusted Platform Modules (vTPM)

The original TPM was a physical device attached to a physical machine. With the advent of virtual machines, there is a desire to provide similar facilities to virtual machine guests.

In the Confidential Computing world, the abstraction of the vTPM provides a convenient unified measurement mechanism across multiple Confidential Computing implementations while allowing existing TPM tools to be reused.

Prior to Confidential Computing, a vTPM was often implemented as a separate process or module on the hypervisor. This ensured that the vTPM state was protected from attack by the guest. In the Confidential Computing world, however, the vTPM state must be protected from both the host and the guest. In the case of SEV-SNP, the vTPM can run inside guest firmware, protected by the use of VMPLs. Other designs use a vTPM running in a separate confidential VM, TD, or realm. Part of the problem is how to connect this vTPM securely to the confidential VM using it.

Another challenge with vTPM in a Confidential Computing environment is how to store the vTPM non-volatile state securely without placing trust in the host or surrounding cloud environment. Some implementations sidestep the problem by providing an ephemeral vTPM with a new state generated on each boot, which may limit the use of some existing TPM tools.

Because both the vTPM and the underlying Confidential Computing technology can produce attestations, there needs to be a way to tie them together to prove to an attestation system that the system attested by the vTPM is really running on confidential compute hardware. Ways to do this include:

- Mix a hash of the vTPM's keys, specifically the endorsement key (EK), into the confidential compute attestation

- Hash the confidential compute attestation into a vTPM PCR
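
Both bindings in the list above come down to putting a digest of one attestation inside the other. The following is a minimal sketch under stated assumptions: the function names are hypothetical, the EK and report are treated as opaque byte strings, SHA-512 is chosen only because it yields 64 bytes (the size of the user-supplied data field in some hardware reports), and the extend operation follows the usual TPM rule that the new PCR value is the hash of the old value concatenated with the incoming digest.

```python
import hashlib
import hmac


def report_data_for_ek(ek_public: bytes) -> bytes:
    """Digest of the vTPM EK to embed in the hardware report's user-data field."""
    return hashlib.sha512(ek_public).digest()


def ek_matches_report(ek_public: bytes, report_data: bytes) -> bool:
    """Verifier side: check that the hardware report commits to this EK."""
    return hmac.compare_digest(report_data_for_ek(ek_public), report_data)


def pcr_extend(pcr_value: bytes, digest: bytes) -> bytes:
    """TPM-style extend for a SHA-256 bank: new = SHA-256(old || digest)."""
    return hashlib.sha256(pcr_value + digest).digest()


if __name__ == "__main__":
    ek = b"example EK public key bytes"        # placeholder, not a real key
    report = b"example hardware attestation"   # placeholder, not a real report

    # First option: the hardware report commits to the vTPM's EK.
    print(ek_matches_report(ek, report_data_for_ek(ek)))

    # Second option: the vTPM PCR commits to the hardware report.
    pcr = bytes(32)  # PCRs in a SHA-256 bank start as 32 zero bytes
    pcr = pcr_extend(pcr, hashlib.sha256(report).digest())
    print(pcr.hex())
```

In either direction, the verifier recomputes the digest from the artifact it was given and compares it with the value carried by the other attestation. A mismatch means the vTPM and the hardware evidence do not belong together.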

Note that the TPM interface tends to provide more capabilities than the simpler measurement registers found in some confidential computing platforms. Not all features are necessarily relevant for confidential computing.

Conclusion

Many pieces need to cooperate to achieve the objective of confidential computing, and no two platforms do it exactly the same way. Even the supporting tools differ from platform to platform. Debugging confidential computing code is especially difficult, and challenges abound, such as mismatched interfaces between firmware and the operating system.

I hope that the overview presented in this series has helped you navigate this very complex landscape. The Confidential Computing ecosystem is an area of intense research and development.
