This blog post summarizes some of the most frequent questions about OpenShift Online 3 that we’ve noticed in various channels over the past months: ticket-based technical support, Stack Overflow questions, and discussions with customers.

Common Questions

- Starter Clusters' Performance

- What’s the Deal with Route Hosts?

- Unexpectedly Terminated Builds

- Application Resource Management

- Local Directory as the Build Source

- The Built WAR File is Not Deployed

- MySQL Database Migrated from OpenShift Online v2

- Wait! I Have Another Question

Starter Clusters' Performance

Occasionally, we get questions about the performance of Starter clusters when things appear to be slow. The Starter Status page has the most up-to-date information about the status of all the clusters. You can get to the Status page from the web console by clicking the System Status item in the help drop-down menu.

You can also check whether there is currently an incident report by clicking the open issues item on the top right.

There is a similar status page for the Pro tier.

I recommend subscribing to issues that may be affecting your applications so that you are notified about status updates. Simply click on Subscribe for the respective report and then Subscribe to Incident.

Anything published on the Status Page is in the capable hands of our Operations Team. Let’s move on to some issues that you can fix yourself.

What’s the Deal with Route Hosts?

If you create a route for one of your services, OpenShift Online provides a default route URL in the form of <route_name>-<project_name>.<shard>.<cluster>.openshiftapps.com.

- The <route_name> is usually inherited from the service name, unless you explicitly specify otherwise when creating the route.

- The default router <shard> and <cluster> would be one of the following pairs:
  - 1d35.starter-us-east-1
  - 8a09.starter-us-east-2 (OpenShift.io cluster)
  - a3c1.starter-us-west-1
  - 7e14.starter-us-west-2
  - 193b.starter-ca-central-1
  - b9ad.pro-us-east-1
  - e4ff.pro-eu-west-1
  - 4b63.pro-ap-southeast-2

Although this shard and cluster list is complete at the time of writing, more clusters may be added in the future without this article being updated. Therefore, please treat the list as an illustration of the shard and cluster naming format.
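The default host format can be expressed as a small helper. This is a minimal Python sketch that assumes only the naming pattern described above; the function names are hypothetical and not part of any OpenShift tooling. Because both route and project names may contain hyphens, the parser returns the `<route_name>-<project_name>` part as one prefix instead of guessing where one name ends and the other begins.

```python
import re

# Pattern of default route hosts: <route>-<project>.<shard>.<cluster>.openshiftapps.com
_DEFAULT_HOST = re.compile(
    r"^(?P<prefix>[a-z0-9-]+)\.(?P<shard>[a-z0-9]+)\.(?P<cluster>[a-z0-9-]+)\.openshiftapps\.com$"
)


def default_route_host(route_name: str, project: str, shard: str, cluster: str) -> str:
    """Compose the default route host for a route in a project."""
    return f"{route_name}-{project}.{shard}.{cluster}.openshiftapps.com"


def split_default_route_host(host: str) -> tuple[str, str, str]:
    """Return (route-project prefix, shard, cluster) of a default route host.

    The prefix is not split further: "a-b-c" could be route "a-b" in
    project "c" or route "a" in project "b-c".
    """
    match = _DEFAULT_HOST.match(host)
    if match is None:
        raise ValueError(f"not a default route host: {host}")
    return match.group("prefix"), match.group("shard"), match.group("cluster")
```

For example, a hypothetical route `myapp` in project `my-own-project` on the `1d35.starter-us-east-1` pair would get `myapp-my-own-project.1d35.starter-us-east-1.openshiftapps.com`.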

Note that custom route hosts are supported on the OpenShift Online Pro tier only. If you’re using the Starter subscription plan, the above-mentioned default route hosts are enforced on the router. Take a look at the comparison of OpenShift Online subscription plans for more information.

Once you set a custom route host, you’ll also need to update your DNS records accordingly. Create a canonical name (CNAME) record in your DNS service provider’s interface that points to elb.<shard>.<cluster>.openshiftapps.com, so that users reach your application hosted on OpenShift Online.
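As an illustration, here is a minimal Python sketch that renders the CNAME record described above as a BIND-style zone-file line. The custom host `www.example.com` and the one-hour TTL are placeholders, the function names are hypothetical, and most DNS providers offer a web form with equivalent fields instead. Keep in mind that a CNAME cannot be created at the zone apex (for example, `example.com` itself), so a subdomain such as `www` is the usual choice.

```python
def elb_target(shard: str, cluster: str) -> str:
    """Host name that custom route hosts should point to via CNAME."""
    return f"elb.{shard}.{cluster}.openshiftapps.com"


def cname_record(custom_host: str, shard: str, cluster: str, ttl: int = 3600) -> str:
    """Render a zone-file CNAME line; trailing dots mark fully qualified names."""
    name = custom_host.rstrip(".")
    return f"{name}. {ttl} IN CNAME {elb_target(shard, cluster)}."
```

For a route on the `b9ad.pro-us-east-1` pair, `cname_record("www.example.com", "b9ad", "pro-us-east-1")` yields a record pointing `www.example.com.` at `elb.b9ad.pro-us-east-1.openshiftapps.com.`.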

If you’re using the Pro plan, you can also provide a custom certificate for your encrypted custom route. More details about using routes and OpenShift Online specific restrictions can be found in the documentation.

Unexpectedly Terminated Builds

Did your build log terminate unexpectedly, without an error explaining why? Have a look at the build pod using oc describe pod/<name>. You may see OOMKilled there as the reason the pod was terminated, similar to this example (the output is shortened):

```
$ oc describe po/undertow-2-build
Name:        undertow-2-build
Namespace:   my-own-project
(...)
Containers:
  sti-build:
    (...)
    State:     Terminated
      Reason:  OOMKilled
```

In this case, the build needs more memory to complete. If no limit is set explicitly, the default container memory limit is enforced. (Run `oc describe limits resource-limits` to check the default limits.)
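If you prefer to check this programmatically, the pod's JSON representation (`oc get pod/<name> -o json`) carries the same information in `status.containerStatuses[].state.terminated.reason`. The following is a minimal Python sketch that lists OOM-killed containers; the helper names are hypothetical. It also checks `lastState` and init containers, on the assumption that the failure might show up in a restarted container or an init step.

```python
import json


def load_pod(path: str) -> dict:
    """Read a pod saved with: oc get pod/<name> -o json > pod.json"""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def oom_killed_containers(pod: dict) -> list[str]:
    """Names of (init) containers whose current or previous termination reason is OOMKilled."""
    status = pod.get("status", {})
    statuses = (status.get("initContainerStatuses") or []) + (status.get("containerStatuses") or [])
    names = []
    for container in statuses:
        for key in ("state", "lastState"):
            terminated = container.get(key, {}).get("terminated") or {}
            if terminated.get("reason") == "OOMKilled":
                names.append(container["name"])
                break  # report each container only once
    return names
```

An empty result means the pod was not killed for exceeding its memory limit, so the cause of the terminated build lies elsewhere.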

To use a custom memory limit for your build (1 GiB in the examples below), edit the YAML of the build configuration. There are two ways to do that:

A) Use the Actions menu in the web console when browsing the given build configuration, remove the empty `resources: {}` record, and replace it with an actual memory limit.

B) Use the oc CLI: run `oc edit bc/<build_config>` and, again, replace the `resources: {}` line with an explicit limit:

```yaml
resources:
  limits:
    memory: 1Gi
```

Alternatively, you can patch the build configuration in question directly:

```
$ oc patch bc/<buildconfig_name> -p '{"spec":{"resources":{"limits":{"memory":"1Gi"}}}}'
```
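To see what the patch does to the build configuration, the following minimal Python sketch applies it to a trimmed, hypothetical BuildConfig. `oc patch` uses a strategic merge patch by default. For nested objects like this one, a strategic merge patch merges key by key in the same way as a JSON merge patch (RFC 7386), so the sketch models the effect with a plain merge patch. It also prints the compact JSON string passed to `-p`.

```python
import copy
import json


def merge_patch(target, patch):
    """Apply an RFC 7386 JSON merge patch; inputs are not modified."""
    if not isinstance(patch, dict):
        return copy.deepcopy(patch)  # non-objects replace the target wholesale
    result = copy.deepcopy(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)  # null deletes the key
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


MEMORY_PATCH = {"spec": {"resources": {"limits": {"memory": "1Gi"}}}}

# Hypothetical BuildConfig excerpt with the default empty resources record.
bc = {
    "kind": "BuildConfig",
    "metadata": {"name": "undertow"},
    "spec": {"resources": {}, "strategy": {"type": "Source"}},
}

patched = merge_patch(bc, MEMORY_PATCH)
patch_arg = json.dumps(MEMORY_PATCH, separators=(",", ":"))
print(patch_arg)
print(patched["spec"])
```

Only `spec.resources` changes; sibling fields such as `spec.strategy` are left as they were, which is why patching is a safe alternative to hand-editing the YAML.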

Application Resource Management

The previous section addressed a situation where an application build was failing. What should you do when the resulting application deployment itself needs more resources to run? You can set the application resource limit the same way in the deployment configuration, or with a few simple clicks in the web interface.

If you’re short on resources, make sure to use the Recreate deployment strategy, which scales down the currently deployed application pods before deploying the new version. The Rolling strategy avoids downtime when you deploy newer application versions. However, it requires enough resources to run two instances of your application at the same time, and no volumes mounted, since the old and new pods would have to use them simultaneously. More about deployment strategies can be found in the documentation.
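The difference between the two strategies is simple arithmetic on peak usage. The following minimal Python sketch assumes a rolling `maxSurge` of 25% (rounded up, as Kubernetes does) and counts only application pods against the memory quota. The function names and the 768 MiB limit and 1024 MiB quota in the example are hypothetical.

```python
def peak_pods(replicas: int, strategy: str, max_surge_percent: int = 25) -> int:
    """Application pods that may run at the same time during a deployment."""
    if strategy == "Recreate":
        # Old pods are scaled to zero before new ones start.
        return replicas
    if strategy == "Rolling":
        # Up to ceil(replicas * surge) extra pods run alongside the old ones.
        extra = -((-replicas * max_surge_percent) // 100)  # integer ceiling division
        return replicas + extra
    raise ValueError(f"unknown strategy: {strategy}")


def fits_quota(replicas: int, strategy: str, limit_mib: int, quota_mib: int,
               max_surge_percent: int = 25) -> bool:
    """True if the peak memory of a deployment stays within the quota."""
    return peak_pods(replicas, strategy, max_surge_percent) * limit_mib <= quota_mib


# One replica with a 768 MiB limit under a 1024 MiB quota:
print(fits_quota(1, "Recreate", 768, 1024))  # one pod at a time fits
print(fits_quota(1, "Rolling", 768, 1024))   # two pods briefly need 1536 MiB
```

With a single replica, any non-zero surge rounds up to one extra pod. This is why a Rolling deployment needs room for two instances of your application.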

Keep in mind that the Starter plan is intended for individual learning and experimenting. The free resource quota is generous enough for that purpose; however, should you need more resources, take a look at the OpenShift Online Pro plan.

If you’re using the Pro plan and would like to purchase more resources, simply do that on the subscription management page.

Local Directory as the Build Source

One of the many differences between OpenShift Online v2 and OpenShift Online 3 is that the new platform does not provide an internal Git repository for your code. While most users take advantage of an externally available Git repository hosted on popular services like GitHub, GitLab, or Bitbucket, some people are interested in direct deployments from a local directory.

Sometimes, people simply want to keep their source private. I’m not affiliated with any of the above-mentioned SCM service providers and do not intend to compare their services here, but I’d like to point out that they also offer private repositories, and some of them even include these in their free offerings. More details on private Git repositories can be found in this five-part series. Even if you only want to keep your code to yourself, I would still recommend using an external repository for the benefits it may offer you, such as issue tracking, collaboration, webhook build triggers, and more.

For pulling code from private repositories, make sure to set up your source clone secrets properly.

If you absolutely do not want to store your source in the cloud, private repository or not, you can also start builds manually, uploading the source from a local directory (or a file, or a repository). For this to work, all the needed objects, such as the build configuration and deployment configuration, must already exist. The most straightforward way to create them is to deploy an example application using one of the builder images. I’ll illustrate this with a Java Undertow servlet example application:

- Select the desired application template, OpenJDK 8 in this example, and proceed to deploy the sample source from a public repository.

- Once the application is built and deployed, check the build configuration. You will probably want to remove the `contextDir` setting if the sample application uses one (the JBoss OpenShift quickstarts do), because it is most likely not relevant to your own source code. You can do that in the web interface, or simply by running `oc edit bc/<build_config_name>`.

- Use the `oc start-build` command, providing it with the existing build configuration and specifying a custom source directory:

  ```
  $ oc start-build <build_config_name> --from-dir=<path_to_source>
  ```

  (Provide the name of the build config that has been created and your custom local path to the source code.)

- That’s it. Wait for the binary build source to be uploaded, built, and deployed.

You can also provide a single file or a local Git repository to the `oc start-build` command. Check our documentation on how to start builds, and have a look at the --from-file and --from-repo options.
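If you script these steps, it helps to build the `oc` argument lists in one place. The following minimal Python sketch does that. The helper names are hypothetical. It uses only the `oc` options named in this post plus `--follow` (stream the build log) and `--type=json` (JSON patch). The `contextDir` removal uses a JSON patch "remove" operation, which fails if the build configuration has no `contextDir`.

```python
import json
import shlex


def remove_context_dir_args(build_config: str) -> list[str]:
    """oc patch invocation that drops spec.source.contextDir from a build config."""
    patch = [{"op": "remove", "path": "/spec/source/contextDir"}]
    return ["oc", "patch", f"bc/{build_config}", "--type=json", "-p", json.dumps(patch)]


def start_build_args(build_config: str, *, from_dir=None, from_file=None,
                     from_repo=None, follow: bool = True) -> list[str]:
    """oc start-build invocation with exactly one local binary source."""
    sources = {"--from-dir": from_dir, "--from-file": from_file, "--from-repo": from_repo}
    given = [(flag, value) for flag, value in sources.items() if value is not None]
    if len(given) != 1:
        raise ValueError("pass exactly one of from_dir, from_file, from_repo")
    flag, value = given[0]
    args = ["oc", "start-build", build_config, f"{flag}={value}"]
    if follow:
        args.append("--follow")  # stream the build log until the build finishes
    return args


def as_shell(args: list[str]) -> str:
    """Quote an argument list for copy-pasting into a shell."""
    return shlex.join(args)
```

For a build configuration called `undertow` (hypothetical), `subprocess.run(start_build_args("undertow", from_dir="."), check=True)` would upload the current directory and wait for the build. Passing a list instead of a shell string avoids quoting problems with the JSON patch.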

The Built WAR File is Not Deployed

Certain issues are difficult to spot because they do not produce errors in the logs that you can easily act upon. A good example, and a frequently asked question, involves deploying Java applications using the JBoss Web Server (and other S2I Java server images). People who migrated their applications from OpenShift Online v2 may be experiencing this, because the maven-war-plugin configuration in the default v2 pom.xml specifies webapps as the output directory, which is not where you want the built WAR file to be.

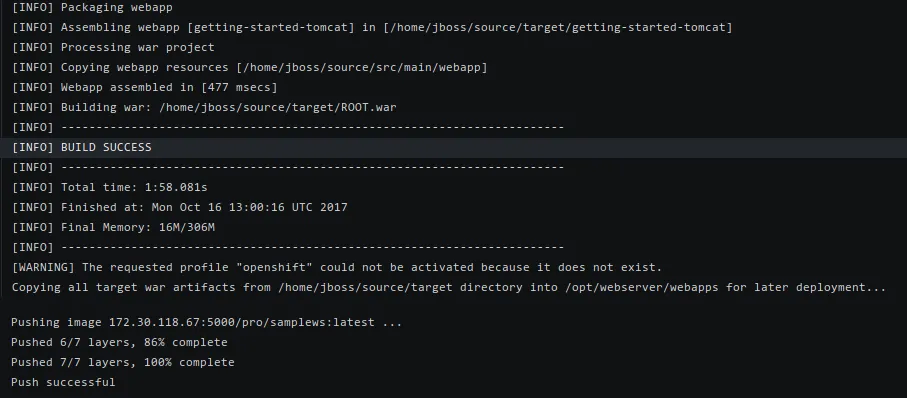

This is similar to what you could see in the build log:

It does not look too suspicious, right? There is no error, the build clearly succeeded, and the resulting image was pushed into the registry just fine. However, one important line is missing: the one showing the built WAR file being copied for deployment.

Yes, the built artifact has to be copied from /home/jboss/source/target/ to the /home/jboss/source/webapps/ directory. Because that copy did not happen, your built application is not deployed. Therefore, make sure you do not have `<outputDirectory>webapps</outputDirectory>` specified for the maven-war-plugin, so that the built WAR file is placed in the expected target directory. If you have migrated your application from OpenShift Online v2, this setting is in the openshift profile of your pom.xml file, which is also the profile the OpenShift Online 3 S2I builder uses:

```xml
(...)
<profiles>
  <profile>
    <id>openshift</id>
    <build>
      <finalName>myapp</finalName>
      <plugins>
        <plugin>
          <artifactId>maven-war-plugin</artifactId>
          <version>2.1.1</version>
          <configuration>
            <outputDirectory>webapps</outputDirectory> <!-- remove this line -->
            <warName>ROOT</warName>
          </configuration>
        </plugin>
      </plugins>
    </build>
  </profile>
</profiles>
(...)
```
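To check a migrated project for this setting without reading the whole pom.xml, you can use a short script. The following is a minimal Python sketch using only the standard library. The function name is hypothetical. It assumes the setting lives in a profile with the given id, as in the v2 layout, and it does not inspect the main `<build>` section or `<pluginManagement>`.

```python
import xml.etree.ElementTree as ET


def war_output_dirs(pom_xml: str, profile_id: str = "openshift") -> list[str]:
    """Return maven-war-plugin <outputDirectory> values set in one profile."""
    root = ET.fromstring(pom_xml)
    # pom.xml normally declares the Maven POM namespace on <project>; reuse it if present.
    ns = {"m": root.tag[1:root.tag.index("}")]} if root.tag.startswith("{") else {}
    p = "m:" if ns else ""
    found = []
    for profile in root.iterfind(f"{p}profiles/{p}profile", ns):
        if profile.findtext(f"{p}id", default="", namespaces=ns).strip() != profile_id:
            continue
        for plugin in profile.iterfind(f"{p}build/{p}plugins/{p}plugin", ns):
            artifact = plugin.findtext(f"{p}artifactId", default="", namespaces=ns).strip()
            if artifact != "maven-war-plugin":
                continue
            out = plugin.findtext(f"{p}configuration/{p}outputDirectory", namespaces=ns)
            if out is not None:
                found.append(out.strip())
    return found
```

Calling `war_output_dirs(Path("pom.xml").read_text(encoding="utf-8"))` returns `["webapps"]` for the configuration above and an empty list once the line has been removed.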

MySQL Database Migrated from OpenShift Online v2

We’ve noticed a few questions indicating that the user table was modified inappropriately when restoring a full database dump of an application formerly running on the MySQL 5.5 cartridge on OpenShift Online v2.

Because your dump comes from an older MySQL 5.5 database, and the structure of the system tables changed slightly in MySQL 5.7 (the default version when deploying the mysql-persistent template), you will need to upgrade the schema. You can do this after importing your data, using `mysql_upgrade`. Also, you probably do not want to drop the user table, which would lose the root user. I recommend proceeding as follows to address both of these issues:

1. Create a backup copy of the database dump that you previously created using `mysqldump` on OpenShift Online v2, so that you can always get back to it if things go south.

2. Edit the database dump `.sql` file and remove all the content that manipulates the user table; that is, delete the lines under the `-- Table structure for table 'user'` and `-- Dumping data for table 'user'` sections, and save the modified file.

3. Scale your MySQL pod down to zero, if you already have it deployed on OpenShift Online 3. If not, simply deploy the `mysql-persistent` template, define the environment variables as described in the migration guide (step 3), and skip to step 7 here.

4. Delete your MySQL persistent volume claim (PVC). This deletes the database that was previously restored from the dump file and is probably not working well.

5. Recreate the PVC under the same name.

6. Scale your MySQL pod up to one replica. This initializes the database and creates a user as per the environment variables (the v2 user and password should already be there, as defined when following the migration guide).

7. Copy the modified `.sql` dump file created in step 2 (that is, the one not affecting the `mysql.user` table) to the database pod using `oc rsync`.

8. In the MySQL pod, restore the database using the uploaded file, as described in the migration guide (step 6).

9. Grant all privileges on the application database to your user:

   ```sql
   GRANT ALL PRIVILEGES ON <database>.* TO '<mysql_user>'@'%';
   ```

   Replace `<database>` and `<mysql_user>` with the values of the environment variables set in step 3 of the migration guide.

10. Exit the MySQL CLI and run `mysql_upgrade -u root` in the shell.
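You can also script step 2. The following minimal Python sketch relies on two assumptions. First, the dump uses the standard `mysqldump` section comments (`-- Table structure for table ...`, `-- Dumping data for table ...`). Second, a multi-database dump switches databases with `USE` statements. By default, the sketch removes only the `user` table of the `mysql` system database, so an application table that happens to be called `user` is kept. Pass `database=None` to strip every `user` table section, for example in a dump without `USE` statements. The function names are hypothetical. The output goes to a separate file, so your backup from step 1 stays untouched.

```python
import re
from typing import Optional

_SECTION = re.compile(r"^-- (?:Table structure|Dumping data) for table [`'](?P<table>[^`']+)[`']")
_USE = re.compile(r"^USE [`'](?P<db>[^`']+)[`'];")


def strip_table_sections(dump: str, table: str = "user",
                         database: Optional[str] = "mysql") -> str:
    """Remove the structure and data sections of one table from a mysqldump text."""
    current_db = None
    skipping = False
    kept = []
    for line in dump.splitlines(keepends=True):
        use = _USE.match(line)
        if use:
            current_db = use.group("db")
            skipping = False
        elif line.startswith("-- "):
            # Every "-- " comment line opens a new mysqldump section.
            section = _SECTION.match(line)
            skipping = bool(
                section
                and section.group("table") == table
                and (database is None or current_db == database)
            )
        elif skipping and line.strip() == "UNLOCK TABLES;":
            # End of the skipped data section: drop this line too, then resume.
            skipping = False
            continue
        if not skipping:
            kept.append(line)
    return "".join(kept)


def strip_file(src: str, dst: str) -> None:
    """Write a copy of the dump at src without the user table to dst."""
    # surrogateescape and newline="" keep non-UTF-8 bytes and line endings intact.
    with open(src, encoding="utf-8", errors="surrogateescape", newline="") as f:
        text = f.read()
    with open(dst, "w", encoding="utf-8", errors="surrogateescape", newline="") as f:
        f.write(strip_table_sections(text))
```

For example, `strip_file("dump.sql", "dump-nouser.sql")` produces the modified file for step 7. Review the result before importing it, because dumps created with non-default `mysqldump` options may be laid out differently.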

Once this is completed, you should not get errors about damaged system tables or have trouble restarting the MySQL pod due to a missing user.

Wait! I Have Another Question

You may have other questions not answered here. We also answer questions about how to perform simple tasks in OpenShift in our documentation. If you don’t want to browse all the documentation sections, use the search field there.

Another resource beyond this blog is the Interactive Learning Portal, a great place to get hands-on experience in free, short, guided courses. Check back from time to time, as new courses are still being added.

People migrating their applications from OpenShift Online v2 to the new platform can find links to useful resources for that task in our Migration Center.

Finally, check out our help center, where you can simultaneously search our docs, blog, and Stack Overflow in one place. After searching for your problem, if you can’t find a solution, you will be presented with contact options for finding a resolution.
