As Red Hat OpenStack Platform has evolved in recent years to accommodate a diverse range of customer needs, the demand for scalability has become increasingly vital. Customers depend on Red Hat OpenStack Platform to deliver a resilient and adaptable cloud infrastructure, and as its usage expands, so does the necessity for deploying more extensive clusters.

In previous years we scaled Red Hat OpenStack Platform 16.1 to more than 700 baremetal nodes. This year, the Red Hat Performance & Scale Team set out to push Red Hat OpenStack Platform's scalability further. As demand for larger deployments grew, we ran an exercise to test scalability with more than 1000 virtual computes, because testing at this scale on baremetal alone typically requires substantial hardware resources. In the process, we reached a new milestone by successfully scaling to more than 1000 overcloud nodes on Red Hat OpenStack Platform 17.1.

In this blog post, we describe how we scaled to 1000+ overcloud nodes on Red Hat OpenStack Platform 17.1, the control plane tests we carried out, and the dynamic workloads we ran to mimic real-world usage.

Hardware and Setup

To scale to 1000+ overcloud nodes, we used a Supermicro server with 32 cores and 64 threads as the baremetal undercloud host. To scale beyond 1000 nodes, the undercloud host has to be sized carefully for the load it will carry. The control plane/provisioning NIC was 25G. We used 3 baremetal Controllers, 7 baremetal CephStorage nodes, and more than 250 baremetal Dell servers, each with 40 cores and 80 threads, that served as hypervisors for 1150 virtual computes. We added 30 baremetal Dell servers that served as NFV computes with SRIOV and OVNDPDK. We had 6 composable roles for virtual computes and 1 composable role each for SRIOV Computes, OVNDPDK Computes, Controllers and Undercloud, for a total of 10 composable roles. Scaling to this level was made possible by the server hardware in the Scale Lab, an initiative to operate Red Hat's products at their upper limits.

Test Methodology

Our objective was to scale beyond 1000 overcloud nodes, assess the impact on scalability and performance, identify bottlenecks and resolve any issues we encountered.

Here’s a breakdown of the steps:

- Deployed the undercloud and configured sufficient control plane subnet ranges to accommodate the large-scale overcloud deployment

- Used 25G NICs for provisioning

- Initially deployed 3 baremetal Controllers, 7 baremetal CephStorage nodes and 1 virtual Compute

- Registered the nodes and ran node provisioning

- Scaled out incrementally in batches of 100 using the --limit option until we reached 1000+ nodes

- Ran the stack operation and config-download separately and captured the timings of each for every scale-out of 100 overcloud nodes

- Ran control plane tests and dynamic workloads such as creating networks and routers and spawning VMs

- Installed a single Shift-on-Stack cluster on the NFV baremetal Computes for functional testing

- Ended with a hybrid deployment of 3 baremetal Controllers, 7 baremetal CephStorage nodes, 1150 virtual Computes and 30 baremetal NFV Computes
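The incremental scale-out in the steps above can be pictured as a schedule of target node counts. The following is a minimal Python sketch, assuming a starting point of 1 virtual Compute, a final count of 1150 and batches of 100. The function name `scale_out_targets` is hypothetical and not part of any deployment tool.

```python
def scale_out_targets(start, final, batch):
    """Return the virtual Compute count to aim for after each scale-out step."""
    if batch <= 0:
        raise ValueError("batch must be positive")
    targets = []
    current = start
    while current < final:
        # Never overshoot the final count on the last, possibly smaller, batch.
        current = min(current + batch, final)
        targets.append(current)
    return targets


# Assumed values: 1 initial virtual Compute, 1150 at the end, batches of 100.
targets = scale_out_targets(start=1, final=1150, batch=100)
print(len(targets), "scale-out steps")
print(targets[:3], "...", targets[-2:])
```

Each entry in the list corresponds to one stack operation plus one config-download run, which is where the per-batch timings would be recorded.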

Observations

We scaled out in batches of 100 virtual computes, starting from an initial deployment of 3 baremetal Controllers and 7 baremetal CephStorage nodes. The first issue we hit, while scaling from 200 to 300 nodes, was OSError: [Errno 24] Too many open files (BZ 2159663). We increased the open file limit from 1024 to 4096.
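To make the open file limit concrete, here is a minimal Python sketch that inspects and raises the soft limit of the current process using the standard `resource` module. It only illustrates the mechanism behind Errno 24. How the limit was actually raised for the undercloud services is not described here, and the helper name `raise_open_file_limit` is hypothetical.

```python
import resource


def raise_open_file_limit(wanted):
    """Raise this process's soft open-file limit to at most `wanted`.

    Returns the (soft, hard) pair in effect afterwards.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    # An unprivileged process cannot lift the soft limit above the hard limit.
    ceiling = wanted if hard == resource.RLIM_INFINITY else min(wanted, hard)
    if soft != resource.RLIM_INFINITY and soft < ceiling:
        resource.setrlimit(resource.RLIMIT_NOFILE, (ceiling, hard))
    return resource.getrlimit(resource.RLIMIT_NOFILE)


soft, hard = raise_open_file_limit(4096)
print(f"open file limit: soft={soft} hard={hard}")
```

The change applies only to the calling process and its children, so a long-running service needs the limit set in whatever launches it.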

From 300 to 400 nodes, stack operations took longer than the default timeout and the stack failed with: Task create from ResourceGroup "Compute" Stack "overcloud" [b9e181ea-0881-4785-8f45-065c54ba8391] Timed out. It is also important to watch how many nodes are in each composable role, because a very large number of nodes in a single role can cause these timeouts (BZ 2239130). From a user experience point of view, we also noticed that the default settings for ephemeral Heat could not be configured from templates, so we had to modify the tunings in the Jinja2 heat.conf file (BZ 2256595).

When we registered new nodes beyond the 750-node mark, introspection failed with: ironic-inspector inspection failed:The PXE filter driver DnsmasqFilter, state=uninitialized: my fsm encountered an exception: Can not transition from state 'uninitialized' on event 'sync' (no defined transition). In larger clusters, synchronizing DHCP for introspection and hardware discovery can be time-consuming because of operational overhead. Processes can stay continuously busy, which can prevent newly added nodes from being introspected successfully. To address this, the suggestion is to set [pxe_filter] sync_period to a larger value, which gives conductors more time between synchronization runs (BZ 2251309).
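As an illustration of the sync_period adjustment, the sketch below uses Python's `configparser` to raise the value in an INI-style configuration text. The excerpt, the value of 60 seconds and the helper name `set_sync_period` are assumptions made for the example. The values actually used in the test, and how the setting is applied on a director-managed undercloud, are not covered here.

```python
import configparser
import io

# Hypothetical excerpt of an ironic-inspector configuration file.
CURRENT_CONF = """\
[pxe_filter]
driver = dnsmasq
sync_period = 15
"""


def set_sync_period(conf_text, seconds):
    """Return conf_text with [pxe_filter] sync_period set to `seconds`."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    if not parser.has_section("pxe_filter"):
        parser.add_section("pxe_filter")
    parser.set("pxe_filter", "sync_period", str(seconds))
    rendered = io.StringIO()
    parser.write(rendered)
    return rendered.getvalue()


# 60 is an illustrative value only.
print(set_sync_period(CURRENT_CONF, 60))
```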

At 700 nodes, stack creation failed with the following error: Failed to deploy: ERROR: Request limit exceeded: Template size (528209 bytes) exceeds maximum allowed size (524288 bytes). We therefore raised max_template_size in heat.conf. From the 750-node scale onwards, we saw Heat stack failures with: TimeoutError: resources[192].resources.NovaCompute: QueuePool limit of size 5 overflow 64 reached, connection timed out, timeout 30. To fix this we tuned num_engine_workers, rpc_response_timeout and executor_thread_pool_size (BZ 2251191). CPU consumption was around 50 cores, which was close to the number of cores on the undercloud. We had to increase the number of Heat engine workers, which defaults to half the number of cores.

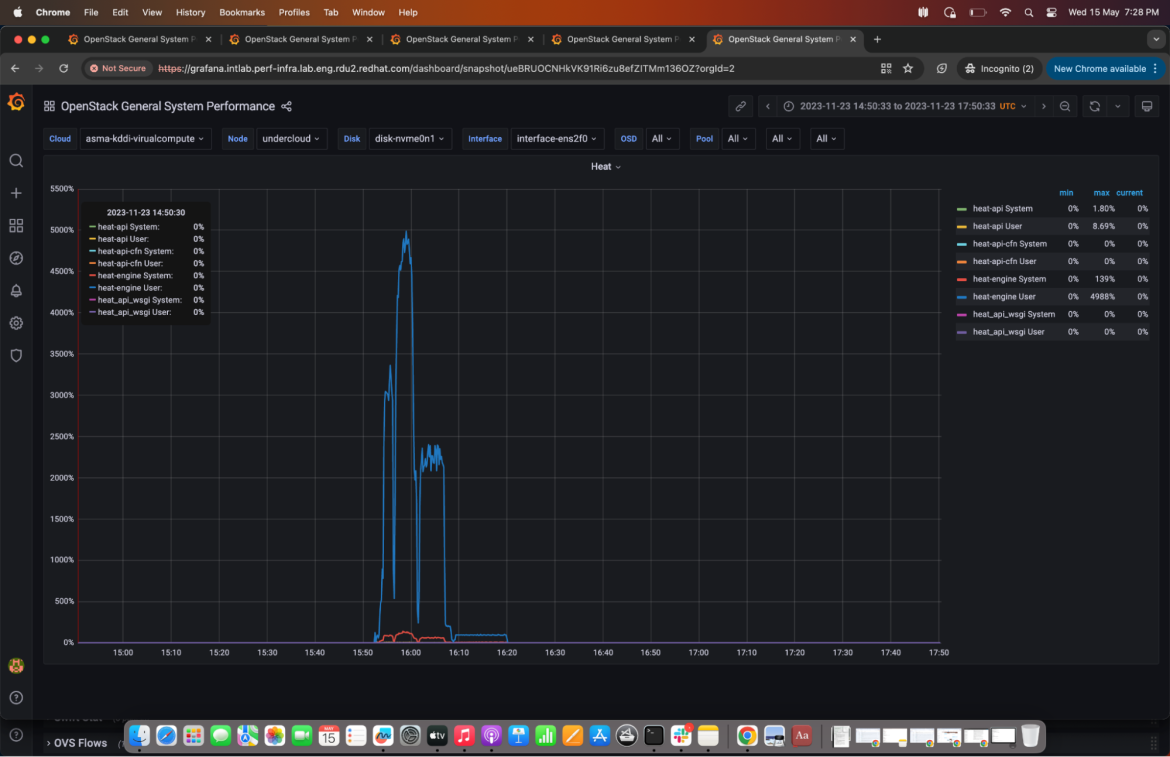

Grafana snapshot depicting heat-engine CPU consumption during stack creation
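The Heat tunings mentioned above can be sketched as a small configuration fragment. The Python example below computes how far the template overshot the limit quoted in the error message and renders the four options as INI text. It assumes the options live in the [DEFAULT] section of heat.conf, and every override value is a placeholder rather than the value used in this test. The helper name `render_heat_overrides` is hypothetical.

```python
import configparser
import io

MAX_TEMPLATE_SIZE_AT_FAILURE = 524288  # limit quoted in the error message
TEMPLATE_SIZE_AT_FAILURE = 528209      # template size quoted in the error message


def render_heat_overrides(options):
    """Render a [DEFAULT] section holding the given heat.conf overrides."""
    parser = configparser.ConfigParser()
    parser["DEFAULT"] = {name: str(value) for name, value in options.items()}
    rendered = io.StringIO()
    parser.write(rendered)
    return rendered.getvalue()


overshoot = TEMPLATE_SIZE_AT_FAILURE - MAX_TEMPLATE_SIZE_AT_FAILURE
print(f"template exceeded the limit by {overshoot} bytes")

# Placeholder values for illustration only, not the values used in the test.
overrides = {
    "max_template_size": 2 * MAX_TEMPLATE_SIZE_AT_FAILURE,
    "num_engine_workers": 48,
    "rpc_response_timeout": 600,
    "executor_thread_pool_size": 128,
}
print(render_heat_overrides(overrides))
```

The overshoot is small compared with the limit, which suggests the template grows gradually with node count, so leaving generous headroom avoids hitting the same error again a few batches later.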

During our previous testing with a 250-node baremetal setup on Red Hat OpenStack Platform 17.1, we found that the metalsmith list command took too long, leading to a poor user experience (BZ 2166865). We reported this as a bug because of its impact on performance, and a fix was proposed to speed up the command. Before the patch was applied in our scaled environment, the command took approximately 18 minutes to run. With the fix in place, execution time dropped to around 3 minutes, roughly a sixfold improvement.

Workloads

To thoroughly evaluate the performance of Red Hat OpenStack Platform (RHOSP) under demanding workloads, we use Browbeat, an open source performance benchmarking, monitoring and analysis tool that uses Rally. By simulating various scenarios and workloads, Browbeat lets us assess the responsiveness and scalability of critical control plane components such as Nova, Neutron, Glance and Keystone. These tests help identify potential bottlenecks, performance degradation or failures that could affect the overall stability and availability of the OpenStack infrastructure. One of Browbeat's most important features is its ability to create dynamic workloads.

We ran control plane tests for Glance, Nova, Neutron and Keystone, tuning the concurrency and times values. Times is the total number of iterations of a scenario to run, and concurrency is the number of parallel Rally processes running those iterations.
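The relationship between times and concurrency can be illustrated with a small Python sketch. This is not Rally's implementation: it swaps Rally's parallel processes for a thread pool, and `run_scenario` and `fake_api_call` are hypothetical names used only for the example.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def run_scenario(scenario, times, concurrency):
    """Run `scenario` `times` times in total, at most `concurrency` at once.

    Returns the duration of each iteration in seconds.
    """
    def timed(iteration):
        started = time.perf_counter()
        scenario(iteration)
        return time.perf_counter() - started

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(timed, range(times)))


def fake_api_call(iteration):
    # Stand-in for a real API request such as booting a server.
    time.sleep(0.01)


durations = run_scenario(fake_api_call, times=32, concurrency=8)
print(f"{len(durations)} iterations, slowest {max(durations):.3f}s")
```

Raising concurrency while holding times constant shortens the run but puts more simultaneous requests on the API services, which is the pressure these tests are meant to apply.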

Dynamic workloads are a type of workload within Browbeat that mimics real-world cloud usage patterns. These workloads continuously generate activity within the cloud environment, simulating actions that real users might perform. We conducted rigorous performance tests on RHOSP to assess how it handles dynamic workloads at large scale (1000+ overcloud nodes). The tests simulated real-world usage by constantly creating, deleting, migrating, rebooting, stopping and starting VMs, along with swapping floating IPs between them. The dynamic workloads also included deleting subports from random trunks, adding subports to random trunks, and swapping floating IPs between random trunk subports.

The workload ran for 400+ iterations, each involving API-intensive tests at a concurrency of 16. This heavy concurrent workload directly stresses the OpenStack API services. While RHOSP performed admirably overall, we did encounter intermittent SSH connectivity failures to random VMs (BZ 2251734). The instances were unable to fetch Nova metadata, so the cloud-init service failed to configure the sshd service. To find the root cause, we used tcpdump to capture the packets exchanged between the OVSDB server and the metadata agent, and observed that the OVSDB server sometimes sends events in a form the metadata agent is not designed to handle. In these cases, the OVSDB server sent a single insert event carrying the merged data of the insert and update3 messages, whereas the metadata agent's event processing looks only for a separate update3 event and not for merged data. Because these events go unmatched, the ovnmeta network namespace is never created, which results in the SSH connectivity failures.
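The mismatch described above can be shown with a deliberately simplified toy model in Python. It is not the metadata agent's code: the event dictionaries, the `kind` field and both function names are hypothetical, and the only behavior modeled is a handler that reacts to a standalone update event and nothing else.

```python
def handled_by_agent(event):
    """Toy matcher: react only to a standalone update event."""
    return event["kind"] == "update"


def namespace_would_be_created(events):
    """In this toy model, any matched event triggers namespace creation."""
    return any(handled_by_agent(event) for event in events)


# Usual case: the insert and the update arrive as two separate events.
separate_events = [
    {"kind": "insert", "data": {"created": True}},
    {"kind": "update", "data": {"updated": True}},
]

# Problem case: one insert event already carries the merged update data.
merged_events = [
    {"kind": "insert", "data": {"created": True, "updated": True}},
]

print("separate events matched:", namespace_would_be_created(separate_events))
print("merged event matched:", namespace_would_be_created(merged_events))
```

In the merged case no event satisfies the matcher, so nothing triggers the setup step, which mirrors why the ovnmeta namespace was missing for the affected VMs.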

We also encountered intermittent ping timeouts after randomly stopping and starting a VM (BZ 2259890). These tests helped identify areas for improvement within RHOSP, leading to bug reports, optimizations and tunings, and they highlight the importance of rigorous performance testing for large-scale cloud deployments.

Conclusion

Performance and scale testing with 1160 virtual computes, 30 baremetal Computes for NFV, 3 baremetal Controllers and 7 high-end storage-class servers for Ceph was a significant undertaking that pushed the boundaries of Red Hat OpenStack Platform 17.1. Along the way we identified tunings that optimize resource utilization, improve workload distribution and mitigate bottlenecks. Considerable time and effort went into investigating the challenges, from fine-tuning configurations to diagnosing complex interactions between components. The result demonstrates that Red Hat OpenStack Platform can scale beyond 1000 nodes.

About the authors

Asma is a Senior Software Engineer who joined Red Hat in 2018 and works on cloud performance and scale.

I started at Red Hat as an intern in January 2022, joining the OpenStack Performance and Scale team. My main focus is on optimizing and scaling OpenStack environments to ensure robust and efficient cloud infrastructure. I bring expertise in Linux, OpenStack, OpenShift, Ansible and other open source technologies. My work involves performance tuning, automation and leveraging open source tools to enhance the capabilities of OpenStack deployments.
