In part 2 of this three-part blog series, I covered a practical implementation of OpenShift Platform Plus tools and policies that help with achieving compliance with certain NIST SP 800-53 security controls in multicluster environments. In this part, I continue to demonstrate this implementation.

Demo Environment Description - Reminder

As a reminder, the following components are used for the demo environment:

- Hub cluster - An OpenShift cluster where Red Hat Advanced Cluster Management for Kubernetes (RHACM) is deployed. The hub cluster manages the OpenShift clusters and serves as the management portal for cluster provisioning, application deployment, and governance policy propagation. This demo environment runs RHACM version 2.3.1 on the hub cluster.

- Managed clusters - Two OpenShift clusters, named cluster-a and cluster-b, are connected to the RHACM hub cluster and managed by it. The policies defined in the RHACM administration portal apply to these clusters.

- Application - A simple application is provisioned on the managed clusters. It demonstrates RHACM application security capabilities and shows how RHACM can improve software engineering practices in the organization. The application is named mariadb-app, and it is deployed in the mariadb namespace on both managed clusters. The application resources are in my forked rhacm-demo repository in GitLab, and you can deploy them by running the following command on the hub cluster:

<hub cluster> $ oc apply -f https://gitlab.com/michael.kot/rhacm-demo/-/raw/master/rhacm-resources/application.yml

View the following image of the application topology:

Comply with NIST 800-53 standards using the governance framework

Before you begin: All files and resources used in the following demonstrations are available in my forked GitHub repository, rhacm-blog. To follow along, fork that repository and pass your fork's URL to the deploy.sh commands.
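This article does not show the contents of deploy.sh. Its flags (--url, --path, --branch, --name, --namespace) suggest that it follows the GitOps pattern of the deploy.sh script in the open-cluster-management policy-collection repository. That pattern subscribes the hub cluster to a path in a Git repository. The following hedged sketch shows roughly what such a script might create for the AC-2 command in the next section. The resource names are hypothetical, and the real script may differ, for example by using a PlacementRule instead of `local: true`.

```yaml
# Hypothetical sketch: hub-side resources a GitOps deploy script could create for
#   --url https://github.com/<your-username>/rhacm-blog.git --path policies/AC-2
#   --branch master --name ac-2 --namespace rhacm-policies
apiVersion: apps.open-cluster-management.io/v1
kind: Channel
metadata:
  name: ac-2-channel               # hypothetical name derived from --name
  namespace: rhacm-policies        # --namespace
spec:
  type: Git
  pathname: https://github.com/<your-username>/rhacm-blog.git   # --url
---
apiVersion: apps.open-cluster-management.io/v1
kind: Subscription
metadata:
  name: ac-2                       # --name
  namespace: rhacm-policies        # --namespace
  annotations:
    apps.open-cluster-management.io/git-branch: master        # --branch
    apps.open-cluster-management.io/git-path: policies/AC-2   # --path
spec:
  channel: rhacm-policies/ac-2-channel
  placement:
    local: true                    # apply the Git content on the hub cluster itself
```

With a Subscription like this in place, committing a new or changed file under the subscribed path should be enough for RHACM to pick up the change. The SC-7 and SC-4 sections rely on exactly that workflow.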

Comply with the AC-2 (2) (Account Management) Security Control

The AC-2 (2) security control states, "The information system automatically removes/disables temporary and emergency accounts after organization-defined time period for each type of account".

One of the temporary accounts that needs to be removed in OpenShift is kubeadmin. As the OpenShift documentation states, "After you define an identity provider and create a new cluster-admin user, you can remove the kubeadmin to improve cluster security". Removing the kubeadmin user from all clusters improves the environment's compliance with the AC-2 (2) security control.

Use a kubeadmin policy to remove the kubeadmin user. Let's create the kubeadmin-policy named policy-remove-kubeadmin and deploy it in the rhacm-policies namespace on the hub cluster. The policy monitors the kubeadmin Secret in the kube-system namespace on the managed clusters. When the policy's remediation action is set to enforce, the policy deletes the Secret from the namespace, which removes access for the kubeadmin user.
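The following is a minimal sketch of what policy-remove-kubeadmin might look like, assuming the standard RHACM Policy and ConfigurationPolicy APIs (policy.open-cluster-management.io/v1). The template name remove-kubeadmin is hypothetical. The PlacementRule and PlacementBinding that bind the policy to cluster-a and cluster-b are omitted. The mustnothave compliance type is what turns "monitor the Secret" into "delete the Secret" once the remediation action is enforce.

```yaml
# Hypothetical sketch of policy-remove-kubeadmin; placement resources omitted.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-remove-kubeadmin
  namespace: rhacm-policies
  annotations:
    policy.open-cluster-management.io/standards: NIST SP 800-53
    policy.open-cluster-management.io/categories: AC Access Control
    policy.open-cluster-management.io/controls: AC-2 Account Management
spec:
  remediationAction: enforce       # delete the Secret instead of only reporting it
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: remove-kubeadmin   # hypothetical template name
        spec:
          remediationAction: enforce
          severity: high
          object-templates:
            # mustnothave: the cluster is compliant only while this Secret is absent
            - complianceType: mustnothave
              objectDefinition:
                apiVersion: v1
                kind: Secret
                metadata:
                  name: kubeadmin
                  namespace: kube-system
```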

Note: An identity provider must be configured on the managed cluster before the policy takes effect. Deleting the kubeadmin user before you configure a permanent cluster-admin user might lock you out of your own managed cluster. Run the following command to deploy the policy:

<hub cluster> $ ./deploy.sh --url https://github.com/<your-username>/rhacm-blog.git --path policies/AC-2 --branch master --name ac-2 --namespace rhacm-policies

After you apply the resources, notice that the kubeadmin user is removed from all clusters. Removing the kubeadmin user from all clusters in the environment moves the organization closer towards compliance with the AC-2 (2) security control:

Comply with the AC-6 (Least Privilege) Security Control

The AC-6 security control states, "The organization employs the principle of least privilege, allowing only authorized accesses for users (or processes acting on behalf of users) which are necessary to accomplish assigned tasks in accordance with organizational missions and business functions".

The AC-6 security control is broad, and full compliance with it is complex. This section covers two policies that you can apply to enforce the principle of least privilege across the organization's OpenShift cluster fleet. Note that OpenShift Platform Plus offers defense in depth for least privilege goals through a combination of the following components:

- The OpenShift Security Context Constraint admission controller, which prevents the admission of privileged pods

- The Red Hat Advanced Cluster Security for Kubernetes (RHACS) admission controller, for additional enforcement of allowed security context and deployment configurations

- The RHACS dashboard, with visibility into and alerting on running pods with privileges

- RHACM, to align and enforce the desired state across multiple clusters, as described in the next sections

Disallow roles with high privileges

One way to limit privileges is to disallow roles that have access to all resources in all API groups. This helps mitigate the risk of over-privileged users in the environment.

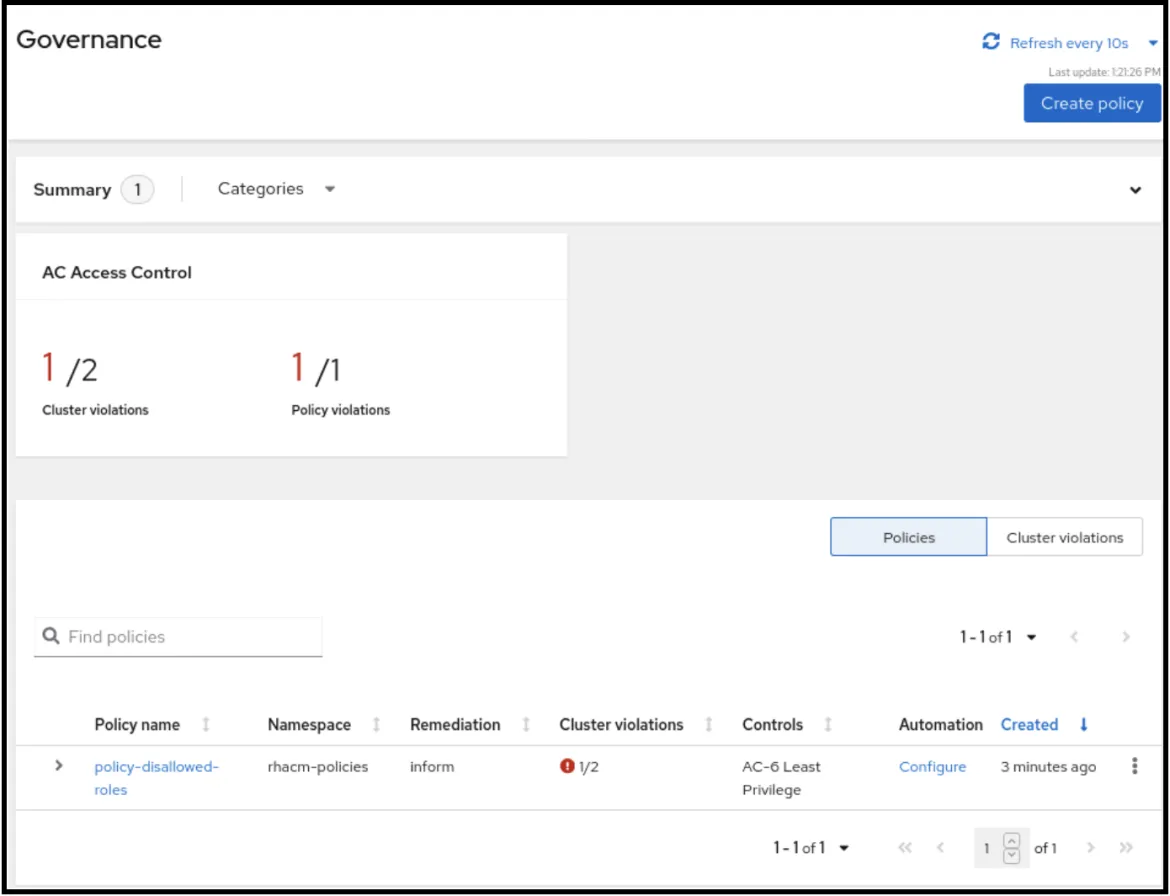

To enforce such a rule, configure a policy. Let's create a disallowed-role-policy named policy-disallowed-roles and deploy it in the rhacm-policies namespace. The policy monitors the projects on the managed clusters for roles that have access to all resources in all API groups. If such a role exists, the policy controller informs the security administrator of the violation:

<hub cluster> $ ./deploy.sh --url https://github.com/<your-username>/rhacm-blog.git --path policies/AC-6-1 --branch master --name ac-6-1 --namespace rhacm-policies
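The repository's exact policy is not reproduced here. The following is a sketch under stated assumptions. An unnamed mustnothave object template is used so that any Role whose rules grant every verb on every resource in every API group counts as a violation. The namespaceSelector that skips kube-* and openshift-* namespaces is also an assumption. The template name is hypothetical, and placement resources are omitted.

```yaml
# Hypothetical sketch of policy-disallowed-roles; placement resources omitted.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-disallowed-roles
  namespace: rhacm-policies
spec:
  remediationAction: inform        # report matching roles; do not delete them
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: disallowed-roles   # hypothetical template name
        spec:
          remediationAction: inform
          severity: high
          namespaceSelector:
            include: ["*"]
            exclude: ["kube-*", "openshift-*"]   # assumption: skip system namespaces
          object-templates:
            # No metadata.name: any Role in the selected namespaces that carries
            # these wildcard rules makes the cluster non-compliant
            - complianceType: mustnothave
              objectDefinition:
                apiVersion: rbac.authorization.k8s.io/v1
                kind: Role
                rules:
                  - apiGroups: ["*"]
                    resources: ["*"]
                    verbs: ["*"]
```

Because the remediation action is inform, the controller only reports matching roles. Switching to enforce would delete them, which is riskier for roles that workloads depend on. This template checks only namespaced Role objects, so a similar template with kind: ClusterRole would be needed to cover cluster-wide roles.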

After the policy is configured, notice that neither cluster-a nor cluster-b has a role that allows access to all resources. The Governance dashboard shows that all clusters are compliant with the policy:

Now, if such a role is created locally on one of the clusters, the dashboard updates and reports a violation:

```
<cluster-a> $ cat role.yml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: priv-role
rules:
  - apiGroups:
      - '*'
    resources:
      - '*'
    verbs:
      - '*'

<cluster-a> $ oc apply -f role.yml
```

At this point, the cluster is non-compliant with the AC-6 security control. The reported violation might look similar to the following image:

Note that the policy in this scenario is a simple example. Many other roles can be considered unsafe too, and they need to be monitored with similar policies according to the security regulations defined by the organization.

Disable the self-provisioner ClusterRole

The self-provisioner ClusterRole allows users to provision their own projects. If users can provision their own projects, you can lose control over which projects are assigned to which application. You can also lose control over quotas, and resources can be exhausted quickly.

One good practice for addressing the least privilege principle (and the AC-6 security control) is to ensure that users are assigned projects based on authorization decisions defined by the organization. This way, users can access only the resources that are crucial to their work and business functions.

You can disable self-provisioning by using a policy or manually, as described in the OpenShift documentation. In this scenario, let's remove the self-provisioners ClusterRoleBinding to disassociate all authenticated users from the self-provisioner ClusterRole.

In the following scenario, the disallow-self-provisioner-policy is named policy-disallow-self-provisioner and is deployed in the rhacm-policies namespace on the hub cluster. The policy remediation action is set to enforce, so the policy deletes the self-provisioners ClusterRoleBinding:

<hub cluster> $ ./deploy.sh --url https://github.com/<your-username>/rhacm-blog.git --path policies/AC-6-2 --branch master --name ac-6-2 --namespace rhacm-policies
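The following is a minimal sketch of policy-disallow-self-provisioner. It assumes the default OpenShift binding name, self-provisioners, which grants the self-provisioner ClusterRole to authenticated users. The template name is hypothetical, and placement resources are omitted.

```yaml
# Hypothetical sketch of policy-disallow-self-provisioner; placement resources omitted.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-disallow-self-provisioner
  namespace: rhacm-policies
spec:
  remediationAction: enforce       # delete the binding whenever it exists
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: disallow-self-provisioner   # hypothetical template name
        spec:
          remediationAction: enforce
          severity: high
          object-templates:
            # Cluster-scoped object, so no namespace is set
            - complianceType: mustnothave
              objectDefinition:
                apiVersion: rbac.authorization.k8s.io/v1
                kind: ClusterRoleBinding
                metadata:
                  name: self-provisioners
```

Because the remediation action is enforce, the controller deletes the binding again on a later evaluation if it ever reappears. This is the main advantage over a one-time manual removal.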

Now, if you log in to cluster-a as user1, notice that the user can no longer create new projects:

```
<cluster-a> $ oc whoami
user1
<cluster-a> $ oc new-project test-project
Error from server (Forbidden): You may not request a new project via this API.
```

From the Governance dashboard, you can see that both clusters are compliant with the defined policy:

Comply with the SC-7 (5) (Boundary Protection) Security Control

The SC-7 (5) security control states, "The information system at managed interfaces denies network communications traffic by default and allows network communications traffic by exception (i.e., deny all, permit by exception)". To comply with the SC-7 (5) security control, traffic between services in all clusters needs to be restricted. All traffic is denied, except for traffic allowed by specific rules declared in network policies that RHACM monitors. Note that RHACS provides network policy visualization as well as suggested policies and enforcement. The following scenario illustrates how RHACM can be used to align network policies across clusters and enforce the desired configuration state.

In the next scenario, let's declare two policies. The first denies all traffic to the mariadb namespace on the managed clusters. The second defines a network policy that allows traffic to the mariadb pod on port 3306. Combined, the two policies make the mariadb application deployment comply with the SC-7 (5) security control.

To test the scenario, let's deploy a second pod in another namespace (this demo uses a namespace named client) and use it to generate traffic to the mariadb pod in the mariadb namespace. Before you apply any policy, test the connection to the mariadb namespace and notice that it succeeds:

```
<pod on cluster-a> $ mysql -u root -h mariadb.mariadb.svc.cluster.local -p
Enter password:
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 8

mysql>
```

View the following illustration of the scenario:

Now, let's apply the deny-all network policy to the mariadb namespace. Create the networkpolicy-denyall-policy named policy-networkpolicy-denyall-mariadb, and deploy it in the rhacm-policies namespace on the hub cluster. The policy applies a deny-all NetworkPolicy object to the mariadb namespace. Because the remediation action is set to enforce, the object is created if it does not exist:

<hub cluster> $ ./deploy.sh --url https://github.com/<your-username>/rhacm-blog.git --path policies/SC-7 --branch master --name sc-7 --namespace rhacm-policies
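The following is a minimal sketch of policy-networkpolicy-denyall-mariadb. The NetworkPolicy name deny-all and the template name are hypothetical, and placement resources are omitted. An empty podSelector selects every pod in the mariadb namespace. Declaring the Ingress policy type without any ingress rules denies all incoming traffic to those pods.

```yaml
# Hypothetical sketch of policy-networkpolicy-denyall-mariadb; placement resources omitted.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-networkpolicy-denyall-mariadb
  namespace: rhacm-policies
spec:
  remediationAction: enforce       # create the NetworkPolicy if it is missing
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: networkpolicy-denyall-mariadb   # hypothetical template name
        spec:
          remediationAction: enforce
          severity: high
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: networking.k8s.io/v1
                kind: NetworkPolicy
                metadata:
                  name: deny-all   # hypothetical NetworkPolicy name
                  namespace: mariadb
                spec:
                  podSelector: {}  # empty selector: every pod in the namespace
                  policyTypes:
                    - Ingress      # no ingress rules listed, so all ingress is denied
```

This sketch restricts only incoming traffic. Outgoing traffic from the mariadb namespace is not affected.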

After you apply the resources, notice that both clusters are compliant with the newly defined policy in the Governance dashboard:

If you try to initiate the same traffic that you sent before applying the policy, notice that the connection times out because the packets are dropped. This shows that the policy is effective. Run the following command:

```
<pod on cluster-a> $ mysql -u root -h mariadb.mariadb.svc.cluster.local -p
Enter password:
ERROR 2003 (HY000): Can't connect to MySQL server on 'mariadb.mariadb.svc.cluster.local' (110)
```

View the following illustration of the scenario:

To allow traffic to reach the mariadb namespace, you need to create another policy on the hub cluster. This time, define the policy to allow traffic on port 3306 from the client namespace to the mariadb namespace. Create the policy named policy-networkpolicy-allow-3306-mariadb, deploy it to the rhacm-policies namespace on the hub cluster, and set it to enforce in the mariadb namespace on the managed clusters.

To deploy the second policy, create a file named networkpolicy-allow-3306-policy.yml in the policies/SC-7 directory of your forked GitHub repository. Copy the contents of the following policy to the file, and commit it to your forked repository.
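The policy file itself is not reproduced in this text. The following hedged sketch shows one way to write it. The empty podSelector is an assumption; you can narrow it to the mariadb pod's labels. The namespace match relies on the kubernetes.io/metadata.name label, which Kubernetes 1.21 and later set automatically. On older clusters, label the client namespace yourself and match that label instead. Template and NetworkPolicy names are hypothetical, and placement resources are omitted.

```yaml
# Hypothetical sketch of policy-networkpolicy-allow-3306-mariadb; placement resources omitted.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-networkpolicy-allow-3306-mariadb
  namespace: rhacm-policies
spec:
  remediationAction: enforce
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: networkpolicy-allow-3306-mariadb   # hypothetical template name
        spec:
          remediationAction: enforce
          severity: high
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: networking.k8s.io/v1
                kind: NetworkPolicy
                metadata:
                  name: allow-3306-from-client   # hypothetical NetworkPolicy name
                  namespace: mariadb
                spec:
                  podSelector: {}   # assumption: narrow to the mariadb pod's labels
                  policyTypes:
                    - Ingress
                  ingress:
                    - from:
                        - namespaceSelector:
                            matchLabels:
                              # set automatically on Kubernetes 1.21+
                              kubernetes.io/metadata.name: client
                      ports:
                        - protocol: TCP
                          port: 3306
```

NetworkPolicy objects are additive. This allow rule therefore works alongside the deny-all policy, admitting only TCP traffic on port 3306 from the client namespace.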

Note: The policy only allows incoming traffic from a namespace named client. Make sure to modify the name according to the setup you are using to test the policy.

After you apply the resources, notice that both clusters are compliant with the newly defined policy in the Governance dashboard:

If you try to initiate the same traffic that you sent before applying the policy, notice that the connection is established successfully, which means that the allow policy is effective. Run the following command:

```
<pod on cluster-a> $ mysql -u root -h mariadb.mariadb.svc.cluster.local -p
Enter password:
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 8

mysql>
```

View the following illustration of the scenario:

Comply with the SC-4 (Information in Shared Resources) Security Control

The SC-4 security control states, "The information system prevents unauthorized and unintended information transfer via shared system resources". OpenShift makes use of features of the underlying OS to isolate the workloads to their own processor, memory, and storage spaces. OpenShift provides security context constraints (SCCs) that control the actions that a pod can perform and what it can access.

By default, OpenShift assigns the restricted SCC to pods. The restricted SCC limits the capabilities available to the pod, does not allow the pod to run as the root user, and defines the SELinux context for the pod. Editing the restricted SCC could expose the OpenShift cluster to vulnerabilities. Therefore, one practice that helps you comply with the SC-4 security control is to make sure that the restricted SCC does not change.

Let's create a restricted-scc-policy resource named policy-securitycontextconstraints-restricted and deploy it in the rhacm-policies namespace on the hub cluster. The policy remediation action is set to inform, so the security administrator is notified if the default restricted SCC changes in any of the clusters:

<hub cluster> $ ./deploy.sh --url https://github.com/<your-username>/rhacm-blog.git --path policies/SC-4 --branch master --name sc-4 --namespace rhacm-policies
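The following is a minimal sketch of policy-securitycontextconstraints-restricted with the inform remediation action. With the musthave compliance type, the controller compares only the fields listed in the object definition. The subset of restricted SCC fields shown here is therefore an assumption; the repository's policy may list the full default definition. The template name is hypothetical, and placement resources are omitted.

```yaml
# Hypothetical sketch of policy-securitycontextconstraints-restricted; placement resources omitted.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-securitycontextconstraints-restricted
  namespace: rhacm-policies
spec:
  remediationAction: inform        # report drift only
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: scc-restricted     # hypothetical template name
        spec:
          remediationAction: inform
          severity: high
          object-templates:
            # musthave compares only the fields listed below (an assumed subset)
            - complianceType: musthave
              objectDefinition:
                apiVersion: security.openshift.io/v1
                kind: SecurityContextConstraints
                metadata:
                  name: restricted
                allowPrivilegedContainer: false
                allowHostNetwork: false
                allowHostPID: false
                allowHostIPC: false
                runAsUser:
                  type: MustRunAsRange   # the field edited in the example that follows
                seLinuxContext:
                  type: MustRunAs
```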

After you apply the resources, it appears that both clusters are compliant with the newly defined policy in the Governance dashboard:

Let's try editing the default restricted SCC in cluster-a, and see how the Governance dashboard displays the violation. For this example, change the runAsUser type from MustRunAsRange to RunAsAny. Changing the value allows pods to run as the root user:

```
<cluster-a> $ oc edit scc restricted
...
runAsUser:
  type: RunAsAny
...
```

As soon as the value changes, the Governance dashboard updates and reports a violation:

To have the policy automatically restore the restricted SCC on all clusters, change the remediation action value in the policy definition to enforce, as described next.

To change the remediation action of the policy for this example, replace the contents of the policies/SC-4/restricted-scc-policy.yml file in your forked repository with the following policy. Make sure to commit the changes to GitHub.
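The updated file is not reproduced in this text. Assuming the policy follows the standard RHACM Policy structure, the essential change is a single field in the Policy spec. The Policy-level remediationAction overrides the remediationAction of each policy template. The following hedged fragment shows only that part; the rest of the policy is unchanged.

```yaml
# Fragment of the Policy resource; only the remediation action changes.
spec:
  remediationAction: enforce   # was: inform; overrides the action in each policy template
  disabled: false
```

With enforce and the musthave compliance type, the controller patches the listed fields of the restricted SCC back to the declared values.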

As soon as the policy is applied, the violation is cleared from the Governance dashboard. View the following image:

Log in to cluster-a again to verify that the default restricted SCC is restored on the cluster. Pods can no longer use the restricted SCC to run as the root user:

```
<cluster-a> $ oc get scc restricted -o yaml
...
runAsUser:
  type: MustRunAsRange
...
```

Integrating RHACM with the Compliance Operator

So far, compliance with many of the security controls I described in this series has required custom organizational decisions. For example, the organization needs to decide which user permissions to enforce to comply with the AC-3 security control. It needs to create custom LimitRange and ResourceQuota objects per namespace to comply with the SC-6 security control, and custom NetworkPolicy objects to comply with the SC-7 (5) security control.

As I explained at the beginning of this series, compliance with some security controls does not require custom organizational attention. The Compliance Operator in OpenShift 4.6+ clusters can evaluate some NIST 800-53 security controls automatically, and in some cases remediate them. Continue reading to learn how you can use RHACM to distribute the Compliance Operator and its capabilities to multicluster environments. With the operator on every cluster, you can evaluate all of your OpenShift clusters against NIST 800-53 security controls.

Two Policy resources are deployed in the rhacm-policies namespace on the hub cluster to integrate the Compliance Operator with RHACM. The first policy deploys the Compliance Operator on the managed clusters, while the second policy monitors ComplianceCheckResult objects to identify any violations found by compliance scans. Note that while RHACM provides a high-level summary of compliance results, RHACS integrates with the Compliance Operator to provide a detailed drill-down view of compliance results.

Deploy the Compliance Operator

A compliance-operator-policy is created to deploy the Compliance Operator on the managed clusters. The policy consists of three ConfigurationPolicy definitions:

- The first ConfigurationPolicy ensures that the openshift-compliance namespace exists on the managed clusters. The policy remediation action is set to enforce, so the namespace is created as soon as the policy is deployed.

- The second and third ConfigurationPolicy objects ensure that the Compliance Operator is installed. They validate that the OperatorGroup and Subscription resources are present in the openshift-compliance namespace on the managed clusters. The remediation action in these ConfigurationPolicy objects is set to enforce, so the Compliance Operator is installed as soon as the policy is created.

Run the following command to deploy the Compliance Operator:

<hub cluster> $ ./deploy.sh --url https://github.com/<your-username>/rhacm-blog.git --path policies/CA-2 --branch master --name ca-2 --namespace rhacm-policies
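The following hedged sketch shows the three ConfigurationPolicy templates described above, assuming the standard RHACM and Operator Lifecycle Manager (OLM) APIs. The Policy and template names are hypothetical, and placement resources are omitted. The Subscription channel is an assumption; check which channels your catalog offers for the compliance-operator package.

```yaml
# Hypothetical sketch of the Compliance Operator install policy; placement resources omitted.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-comp-operator       # hypothetical name
  namespace: rhacm-policies
spec:
  remediationAction: enforce
  disabled: false
  policy-templates:
    # 1) The openshift-compliance namespace must exist
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: comp-operator-ns   # hypothetical
        spec:
          remediationAction: enforce
          severity: high
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: v1
                kind: Namespace
                metadata:
                  name: openshift-compliance
    # 2) An OperatorGroup scoped to that namespace
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: comp-operator-operatorgroup   # hypothetical
        spec:
          remediationAction: enforce
          severity: high
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: operators.coreos.com/v1
                kind: OperatorGroup
                metadata:
                  name: compliance-operator
                  namespace: openshift-compliance
                spec:
                  targetNamespaces:
                    - openshift-compliance
    # 3) A Subscription that installs the operator through OLM
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: comp-operator-subscription   # hypothetical
        spec:
          remediationAction: enforce
          severity: high
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: operators.coreos.com/v1alpha1
                kind: Subscription
                metadata:
                  name: compliance-operator
                  namespace: openshift-compliance
                spec:
                  name: compliance-operator
                  channel: release-0.1   # assumption: verify the channel in your catalog
                  source: redhat-operators
                  sourceNamespace: openshift-marketplace
                  installPlanApproval: Automatic
```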

After you apply the Policy resource, the Governance dashboard shows that both clusters are compliant with the newly created policy. The Compliance Operator is now installed on both clusters. View the following image:

Monitor ComplianceCheckResults using RHACM

A compliance-operator-moderate-scan-policy is created to initiate a compliance scan on the managed clusters. The policy informs you of any violations found in the compliance scans of the Compliance Operator. The policy consists of three ConfigurationPolicy definitions:

- The first ConfigurationPolicy is named compliance-moderate-scan, and it ensures that a ScanSettingBinding resource is created on the managed clusters. The ScanSettingBinding resource associates ScanSetting objects with compliance profiles. Creating a ScanSettingBinding initiates a compliance scan on the managed clusters. In our case, the policy starts a scan against the ocp4-moderate and rhcos4-moderate compliance profiles. The policy remediation action is set to enforce, so the ScanSettingBinding resource is created as soon as the policy is deployed.

- The second ConfigurationPolicy makes sure that the compliance scan completes successfully. After the previous ConfigurationPolicy creates the ScanSettingBinding, a ComplianceSuite resource named moderate is created automatically on the managed clusters. The ComplianceSuite resource holds the status of the scan and its result. The ConfigurationPolicy checks that the status of the ComplianceSuite object shows the DONE phase. The policy remediation action is set to inform, so a violation is reported in the Governance dashboard as long as the ComplianceSuite is not DONE.

- The third ConfigurationPolicy checks the scan results and reports a violation in the Governance dashboard if any rule associated with the moderate ComplianceSuite fails. As soon as the scan finishes, the Compliance Operator creates multiple ComplianceCheckResult objects, each showing the scan result for a specific compliance rule. The ConfigurationPolicy monitors the ComplianceCheckResult resources and their status. It states that managed clusters must not have (mustnothave) ComplianceCheckResult resources with the check-status: FAIL label. Therefore, if any ComplianceCheckResult resource indicates that a managed cluster is non-compliant with a compliance rule, a violation is reported in the Governance dashboard.

Run the following command:

<hub cluster> $ ./deploy.sh --url https://github.com/<your-username>/rhacm-blog.git --path policies/CM-6 --branch master --name cm-2 --namespace rhacm-policies
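The following sketch shows the three templates of the moderate scan policy, assuming the Compliance Operator's compliance.openshift.io/v1alpha1 APIs. The names policy-moderate-scan, compliance-moderate-scan, and compliance-suite-moderate-results come from this article; the second template's name is hypothetical. The ScanSettingBinding is named moderate because the generated ComplianceSuite takes the binding's name. The Policy-level remediationAction is deliberately omitted so that each template keeps its own action. Placement resources are omitted.

```yaml
# Hypothetical sketch of policy-moderate-scan; placement resources omitted.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-moderate-scan
  namespace: rhacm-policies
spec:
  disabled: false                  # no Policy-level remediationAction: templates decide
  policy-templates:
    # 1) Start the scan: bind the moderate profiles to the default ScanSetting
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: compliance-moderate-scan
        spec:
          remediationAction: enforce
          severity: high
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: compliance.openshift.io/v1alpha1
                kind: ScanSettingBinding
                metadata:
                  name: moderate
                  namespace: openshift-compliance
                profiles:
                  - apiGroup: compliance.openshift.io/v1alpha1
                    kind: Profile
                    name: ocp4-moderate
                  - apiGroup: compliance.openshift.io/v1alpha1
                    kind: Profile
                    name: rhcos4-moderate
                settingsRef:
                  apiGroup: compliance.openshift.io/v1alpha1
                  kind: ScanSetting
                  name: default
    # 2) Report a violation until the generated ComplianceSuite reaches DONE
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: compliance-suite-moderate   # hypothetical
        spec:
          remediationAction: inform
          severity: high
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: compliance.openshift.io/v1alpha1
                kind: ComplianceSuite
                metadata:
                  name: moderate
                  namespace: openshift-compliance
                status:
                  phase: DONE
    # 3) Report a violation while any check in the moderate suite has failed
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: compliance-suite-moderate-results
        spec:
          remediationAction: inform
          severity: high
          object-templates:
            - complianceType: mustnothave
              objectDefinition:
                apiVersion: compliance.openshift.io/v1alpha1
                kind: ComplianceCheckResult
                metadata:
                  namespace: openshift-compliance
                  labels:
                    compliance.openshift.io/check-status: FAIL
                    compliance.openshift.io/suite: moderate
```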

Immediately after you apply the Policy resource, it appears that both clusters are non-compliant with the newly defined policy in the Governance dashboard:

The policy takes some time to initiate a scan and aggregate violations. If you log in to a managed cluster, you can see the scan in progress. Run the following command:

```
<cluster-a> $ oc get ComplianceSuite -n openshift-compliance
NAME       PHASE     RESULT
moderate   RUNNING   NOT-AVAILABLE
```

As soon as the status of the scan changes to DONE, you can find an evaluation of the scan result for both clusters in the Governance dashboard. To understand which compliance rules each cluster violated, navigate to the Governance dashboard, and click on policy-moderate-scan. Afterward, navigate to the Status tab, and examine the findings. You can see that both clusters are non-compliant with the compliance-suite-moderate-results ConfigurationPolicy:

To validate which compliance rules are violated by the managed clusters, select View details next to the ConfigurationPolicy definition. The page presents a list of ComplianceCheckResult resources with the FAIL status. Each entry in the list represents a Compliance Operator benchmark that the managed cluster does not pass. View the following image:

To clear these violations, resolve the underlying configuration issue on each managed cluster so that the corresponding ComplianceCheckResult resources no longer report FAIL after the next scan. Each failing ComplianceCheckResult resource includes a description of the rule and instructions in its definition. You can view them by clicking the View yaml button next to the violating ComplianceCheckResult name in the Governance dashboard.

Subscribe to all aforementioned policies

In case you want to deploy all of the previously described policies at once, run the following command against your forked GitHub repository:

<hub cluster> $ ./deploy.sh --url https://github.com/<your-username>/rhacm-blog.git --path policies --branch master --name all-policies --namespace rhacm-policies

The following image shows the policy status for all of the policies in this three-part blog series:

It’s not all about security

Although most of this series describes security controls and practices, security teams are not the only teams that can take advantage of the governance policy framework that RHACM offers. System administrators and software engineers can also use governance, risk, and compliance as part of their day-to-day work. They can use it to ensure that resiliency and software engineering aspects are configured according to enterprise best practices.

System administrators can use the governance policy framework to configure and monitor DNS zones. They can configure policies that initiate an alert if one of the cluster operators is degraded. They can also install and configure operators by applying subscriptions with the governance policy framework.

Software engineers can use the governance policies to configure and monitor Kubernetes resources that might affect their application, like Deployments and ConfigMaps. Furthermore, software engineers can use policies to deploy third-party tools that can integrate with their application, like Red Hat AMQ, or 3scale API management.

More examples and demonstrations regarding software engineering and resiliency while using governance framework policies can be found in the following article - Implement Policy-based Governance Using Configuration Management of Red Hat Advanced Cluster Management for Kubernetes.

Conclusion

In this three-part blog series, I went through some practices that you can apply in a multicluster OpenShift environment to bring it closer to compliance with the NIST 800-53 standard. Most of the policies used in the series take an OpenShift security practice and distribute it across all OpenShift clusters using RHACM.

Even though I did not go through all the controls in NIST 800-53, the examples show the value RHACM can bring to an organization. If implemented and maintained according to global security and organizational standards, RHACM can become a central component of an organization's security stack.

Furthermore, RHACM provides integration with many third-party tools in order to help harden and validate security compliance in managed clusters. RHACM can be integrated with the following third-party tools:

- Sysdig Secure deployments on the managed clusters

- OpenSCAP scans using the OpenShift Compliance Operator

- Image scan results from images managed in Quay with the Container Security Operator

- Gatekeeper policies

- Integrity Shield implementations.

And it does not end there

The policies discussed throughout the series present just a glimpse of what you can achieve by integrating an environment with RHACM. As explained toward the end of this article, governance, risk, and compliance is not only about security teams. Software engineers and system administrators can benefit from it as well!

Other policy examples can be found in the policy-collection GitHub repository, and even more policies are described in blogs such as:

- How to Integrate Open Policy Agent with Red Hat Advanced Cluster Management for Kubernetes policy framework

- Securing Kubernetes Clusters with Sysdig and Red Hat Advanced Cluster Management

- Implement Policy-based Governance Using Configuration Management of Red Hat Advanced Cluster Management for Kubernetes

- Address CVEs Using Red Hat Advanced Cluster Management Governance Policy Framework

The community keeps growing, and new policies that address security controls in different compliance standards are added regularly. Get started and join the community at Open Cluster Management. More information can be found on the Red Hat Advanced Cluster Management for Kubernetes and Red Hat Advanced Cluster Security for Kubernetes product pages.

Special thanks

Thanks to my reviewers, who supported me through the writing process of this article. Much appreciated!
