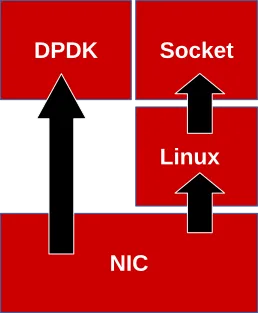

The Linux networking stack has many features that are essential for IoT (Internet of Things) and data center networking, such as filtering, connection tracking, memory management, VLANs, overlay, and process isolation. These features come at a small cost in latency and throughput, which becomes noticeable when handling tiny packets at line rate.

DPDK (Data Plane Development Kit) allows access to the hardware directly from applications, bypassing the Linux networking stack. This reduces latency and allows more packets to be processed. However, many features that Linux provides are not available with DPDK.

What if there were a way to have ultra low latency and high throughput for some traffic, and the full feature set of Linux networking, all at the same time? This “utopia” is now possible with Queue Splitting (Bifurcated Driver).

Introduction

Queue Splitting (Bifurcated Driver) is a design that directs some traffic to DPDK while keeping the remaining traffic in the traditional Linux networking stack. The filtering (splitting) is done in hardware, by the Network Interface Card (NIC). The software (driver) is only involved in configuring the split, so this approach adds no software overhead in the critical path of packet movement.

Filters that match flows by IP address and by TCP or UDP port can be configured on the NIC. Applications written with DPDK may either process those flows or forward them to other network ports. In both cases, reduced latency and higher throughput can be achieved.

Selected packets go through the Linux networking stack while others go directly to a DPDK application in userspace, yielding the proverbial best of both worlds.

Some packets go through the traditional Linux networking stack while others head directly to a DPDK application in userspace.


How to Use It

The beta release of Red Hat Enterprise Linux 7.2 includes support for Queue Splitting (Bifurcated Driver). Applications written using DPDK libraries and drivers will be able to use the Virtual Function ports to which traffic is directed.

Flow direction is set up through the ethtool NTUPLE/NFC interface, with the Virtual Function as the target.

The following example creates two Virtual Functions, one on each port, configures the flow director for all TCP flows between two hosts, and starts a DPDK application which forwards packets between those two ports.

    # Creates Virtual Functions
    echo 1 > /sys/bus/pci/devices/0000:01:00.0/sriov_numvfs
    echo 1 > /sys/bus/pci/devices/0000:01:00.1/sriov_numvfs

    # Enable and set flow director
    ethtool -K em1 ntuple on
    ethtool -K em2 ntuple on
    ethtool -N em1 flow-type tcp4 src-ip 192.0.2.2 dst-ip 198.51.100.2 \
            action $(($VF << 32))
    ethtool -N em2 flow-type tcp4 src-ip 198.51.100.2 dst-ip 192.0.2.2 \
            action $(($VF << 32))

    # Enable hugepages for DPDK
    echo 1024 > /proc/sys/vm/nr_hugepages

    # Load and use vfio-pci driver
    modprobe vfio-pci
    dpdk_nic_bind.py -b vfio-pci 01:10.0
    dpdk_nic_bind.py -b vfio-pci 01:10.1

    # Use MAC forwarder and the peers MAC addresses
    testpmd -c 0xff -n 4 -d /usr/lib64/librte_pmd_ixgbe.so -- -a \
            --eth-peer=0,ec:f4:bb:d3:ec:92 \
            --eth-peer=1,ec:f4:bb:d7:ae:12 \
            --forward-mode=mac

In this example, any TCP packets with those source and destination IP addresses go through the DPDK forwarder (which uses the assigned peer MAC address when forwarding to the other port). All other traffic, from different hosts or using different protocols, goes through the Linux networking stack.
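The `action` argument in the commands above shifts the Virtual Function identifier into the upper 32 bits of a 64-bit value. The arithmetic can be sketched as a small helper. The name `fdir_action` is hypothetical, and the optional queue index in the lower 32 bits is an assumption based on the kernel's ethtool ring cookie layout. The source does not say which numeric value of `VF` selects which Virtual Function, so check the driver documentation for your adapter.

```shell
# Hypothetical helper (not part of ethtool): build the 64-bit value that is
# passed to "ethtool -N ... action".
#   $1 = VF identifier, the same value the example uses for $VF
#   $2 = queue index inside that function (assumed to occupy the low 32 bits)
fdir_action() {
    vf=$1
    queue=${2:-0}
    echo $(( ($vf << 32) | $queue ))
}

# Same value as $(($VF << 32)) with VF=1; prints 4294967296
fdir_action 1
```

Keeping the shift in one place makes it harder to pass a plain queue number by mistake, which would leave the flow in a Physical Function queue.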

Another example is directing packets with a specific UDP destination port to a DPDK application, which, in turn, processes them and sends responses by pushing packets to the same port using DPDK.

    # Creates Virtual Function
    echo 1 > /sys/bus/pci/devices/0000:01:00.0/sriov_numvfs

    # Enable and set flow director
    ethtool -K em1 ntuple on
    ethtool -N em1 flow-type udp4 dst-ip $EM1_IP_ADDRESS dst-port $UDP_PORT action $(($VF << 32))

    # Enable hugepages for DPDK
    echo 1024 > /proc/sys/vm/nr_hugepages

    # Load and use vfio-pci driver
    modprobe vfio-pci
    dpdk_nic_bind.py -b vfio-pci 01:10.0

    # Start UDP echo application
    udp_echo -c 0xff -n 4 -d /usr/lib64/librte_pmd_ixgbe.so
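The UDP example relies on three variables (`$EM1_IP_ADDRESS`, `$UDP_PORT`, and `$VF`) that must be set beforehand. As a rough sketch, the filter command can be composed and printed for review before it touches the NIC. The function name `udp_filter_cmd` and every value passed to it here are placeholders chosen for illustration.

```shell
# Print (do not run) the flow director command for one UDP destination port.
# Hypothetical helper; all argument values below are illustrative placeholders.
#   $1 = PF interface name     $2 = local IPv4 address
#   $3 = UDP destination port  $4 = VF identifier (as used for $VF above)
udp_filter_cmd() {
    echo "ethtool -N $1 flow-type udp4 dst-ip $2 dst-port $3 action $(( $4 << 32 ))"
}

# Prints: ethtool -N em1 flow-type udp4 dst-ip 192.0.2.10 dst-port 5000 action 4294967296
udp_filter_cmd em1 192.0.2.10 5000 1
```

Once the printed command looks right, it can be piped to `sh` as root. Afterwards, `ethtool -n em1` lists the installed rules and `ethtool -N em1 delete <rule id>` removes one.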

How it Works

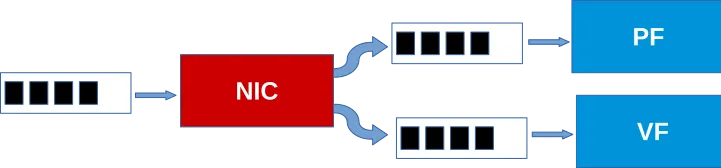

The Queue Splitting (Bifurcated Driver) design combines the technology of SR-IOV and packet flow directors.

SR-IOV is a PCI standard that allows the same physical adapter to present multiple virtual functions. For network adapters, this usually means directing traffic with a specific destination MAC address that goes into a port to the Virtual Function. The adapter acts like a switch.
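Each Virtual Function appears as its own PCI device. On Linux, the Physical Function's sysfs directory holds `virtfnN` symbolic links that point to those devices, which is one way to find an address such as the `01:10.0` used in the examples. The following is a minimal sketch. The helper name `vf_pci_addr` is hypothetical, and its third argument exists only so the function can be exercised against a fake directory tree.

```shell
# Resolve the PCI address of a Virtual Function from its Physical Function.
# Hypothetical helper.
#   $1 = PF PCI address (e.g. 0000:01:00.0)
#   $2 = VF index, counted from 0
#   $3 = sysfs device directory (optional; added here only to ease testing)
vf_pci_addr() {
    sysfs=${3:-/sys/bus/pci/devices}
    link="$sysfs/$1/virtfn$2"
    if [ ! -L "$link" ]; then
        echo "no virtfn$2 under $1" >&2
        return 1
    fi
    # The link target looks like ../0000:01:10.0; keep only the last component.
    basename "$(readlink "$link")"
}

# Usage, after Virtual Functions have been created:
#   vf_pci_addr 0000:01:00.0 0
```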

Most modern network adapters have configurable packet flow directors, which allow the operating system to direct specific flows to a given packet queue. Queue Splitting (Bifurcated Driver) relies on the capability of some adapters to direct flows to a queue belonging to a Virtual Function.

The desired traffic therefore arrives at a separate PCI function. Linux drivers such as VFIO allow user space applications to bypass the kernel when accessing PCI devices, so DPDK applications may use the same driver that is already used for some adapters' Virtual Functions.
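Before starting a DPDK application, it can help to confirm which kernel driver currently owns the Virtual Function. On Linux, each PCI device directory in sysfs has a `driver` symbolic link when a driver is bound. A small sketch, with the hypothetical helper name `pci_driver` and an optional directory argument added only for testing:

```shell
# Print the kernel driver bound to a PCI device, or "none" if it is unbound.
# Hypothetical helper.
#   $1 = PCI address (e.g. 0000:01:10.0)
#   $2 = sysfs device directory (optional; added here only to ease testing)
pci_driver() {
    sysfs=${2:-/sys/bus/pci/devices}
    if [ -L "$sysfs/$1/driver" ]; then
        # The link target ends with the driver name, e.g. .../drivers/vfio-pci
        basename "$(readlink "$sysfs/$1/driver")"
    else
        echo none
    fi
}

# Usage, after binding the Virtual Function:
#   pci_driver 0000:01:10.0
```

A result other than `vfio-pci` after running the bind step suggests the binding did not take effect.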

A network adapter directing some packets to a Physical Function queue and other packets to a Virtual Function queue.


Supported Hardware and Software

Queue Splitting (Bifurcated Driver) is included as Technology Preview in the beta release of Red Hat Enterprise Linux 7.2. It requires a system with SR-IOV support and has only been tested with an Intel 82599 adapter, although newer models, including some X540 and X550 models, should be supported as well.

Limitations

As of now, only one driver, ixgbe, supports the Queue Splitting (Bifurcated Driver) design. The limitations described below apply to the adapters supported by this driver. Other drivers may have different limitations.

Also, as of this posting, only IPv4 is supported, with UDP, TCP, and SCTP as the supported transport protocols. Filters may include VLAN ID, IPv4 source and destination addresses, and source and destination ports for the specific transport protocol.

All filters must include the same set of fields. So, if one filter uses IPv4 source addresses, all other filters need to use them as well. A new filter cannot be inserted if it uses a field that is not used by the other (already inserted) filters.
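The same-fields rule can be checked before any filter is handed to the NIC. The sketch below compares the match fields used by a list of filter specifications. The function names are hypothetical, and the field keywords (`vlan`, `src-ip`, `dst-ip`, `src-port`, `dst-port`) are assumed to follow ethtool's rule syntax. It illustrates the constraint only and does not reproduce the driver's own validation.

```shell
# Print the sorted match-field names found in one filter specification.
# Only the fields named in this article are recognised.
filter_fields() {
    for word in $1; do
        case $word in
            vlan|src-ip|dst-ip|src-port|dst-port) echo "$word" ;;
        esac
    done | sort | tr '\n' ' '
}

# Succeed only if every specification given as an argument uses the same fields.
same_fields() {
    first=$(filter_fields "$1")
    for spec in "$@"; do
        [ "$(filter_fields "$spec")" = "$first" ] || return 1
    done
}

# The second filter omits src-ip, so this prints "field sets differ".
same_fields "src-ip 192.0.2.2 dst-port 80" "dst-port 53" || echo "field sets differ"
```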

Since it uses a Virtual Function, the receipt and transmission of some packets may be restricted, depending on configuration. For example, MAC addresses may not be spoofed unless the interface is set to allow it. It will receive broadcast and multicast packets, and it will receive packets to different destination MAC addresses if the interface is in promiscuous mode and the packet matches the filter. It will also receive all traffic that has the VF’s own MAC address as destination.

Note that fragmented packets are not directed to the Virtual Function either.

While we plan to work with our partners to eliminate some of these limitations in future hardware and software releases, nothing is currently “set in stone”. To this end, thoughts, questions, and comments are welcome; we look forward to your feedback.
