Testing environment

In this performance analysis, we investigate various configurations and testing scenarios to showcase IPsec throughput on the latest RHEL 9 platform. Our choice of a modern multicore CPU and the latest stable RHEL aims to represent today's technological capabilities.

Hardware configuration

- Two Intel 4th Generation Xeon Scalable processors (Xeon Gold 5420+), 28 cores per socket

- Hyper-threading enabled (56 logical CPUs per socket, 112 in total)

- Directly connected (back-to-back) 100 Gbit/s Intel E810-C network cards

Software information

- Distribution: RHEL-9.4.0

- Kernel: 5.14.0-427.13.1.el9_4.x86_64

- NetworkManager: 1.46.0-1.el9.x86_64

- libreswan: 4.12-1.el9.x86_64

- iperf3: 3.9-10.el9_2.x86_64

- Tuned profile: throughput-performance

Testing methodology

In this paper, we focus solely on IPsec in transport mode, as it closely resembles the operation of a VPN concentrator, a common use case. To optimize performance and stability, we set the replay-window parameter to zero, which disables anti-replay protection. Whether to disable replay protection at the IPsec layer is a decision left to the end user; we disabled it to keep our testing simple.

No IPsec hardware acceleration (e.g., Intel QAT or NIC hardware offloads) was involved. Other NIC offloads were left at their defaults (see the ethtool -k output at the end of this document).
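One way to double-check that no ESP offload is active is to look at the ESP-related lines of `ethtool -k`. The following is a minimal sketch, not part of the original test framework. The function name `esp_offload_disabled` is hypothetical. It reads `ethtool -k` output on stdin, prints the ESP feature lines, and fails if any of them is `on`:

```bash
#!/bin/bash
# Hypothetical helper: verify that no ESP (IPsec) offload feature is enabled.
# Input: the output of `ethtool -k <iface>` on stdin.
# Prints every ESP-related feature line; returns 1 if any of them is "on".
esp_offload_disabled() {
    local line status=0
    while IFS= read -r line; do
        case "$line" in
            *esp-*)
                echo "$line"
                # Feature lines look like "esp-hw-offload: off [fixed]"
                case "$line" in
                    *": on"*) status=1 ;;
                esac
                ;;
        esac
    done
    return "$status"
}
```

For example, `ethtool -k ice_0 | esp_offload_disabled && echo "no ESP offload"`. With the ethtool output listed in the artifacts, all three ESP features (`tx-esp-segmentation`, `esp-hw-offload`, `esp-tx-csum-hw-offload`) are reported as `off [fixed]`.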

We conducted tests with two cipher suites: legacy AES-SHA1 and AES-GCM.

In the last part of the testing, we focus on the parallelism and scalability of our setup.

- IPsec configuration was set to transport mode.

- The replay-window was set to 0 for higher throughput.

- The results were obtained for both IPv4 and IPv6.

- Cipher suites: AES-SHA1 and AES-GCM

- Single and multiple security associations (SAs)

Process and NIC interrupt CPU pinning

We also configure CPU pinning. The iperf3 process is always pinned to the first CPU core of the socket to which the NIC is connected (--affinity 0,0, which pins both the client and the server process to CPU 0). In parallel tests, each iperf3 process is pinned to its own CPU core.
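To know which socket is the right one, you need the NIC's NUMA locality. The kernel exposes it in sysfs for PCI devices (`numa_node` and `local_cpulist`). The following is a minimal sketch. The function name `nic_locality` and the `SYSFS_ROOT` override (used only so the function can be tested outside a real system) are hypothetical:

```bash
#!/bin/bash
# Hypothetical helper: print the NUMA node and the CPUs local to a NIC,
# so that iperf3 --affinity can target a core on the same socket.
# SYSFS_ROOT is an assumed override for testing; it defaults to /sys.
nic_locality() {
    local iface=$1
    local dev="${SYSFS_ROOT:-/sys}/class/net/${iface}/device"
    if [[ ! -r "$dev/numa_node" ]]; then
        echo "cannot read $dev/numa_node" >&2
        return 1
    fi
    # numa_node is -1 when the platform does not report locality
    echo "numa_node=$(<"$dev/numa_node")"
    echo "local_cpus=$(<"$dev/local_cpulist")"
}
```

On the systems described here, `nic_locality ice_0` would be expected to report node 0 and the socket-0 CPUs listed by lscpu (0-27,56-83).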

The interrupts of the Intel NIC are also pinned. By disabling irqbalance and using tuna, we ensure the IRQs are isolated on socket 0 only and spread across all of its CPUs. We disabled irqbalance to keep the configuration simple; however, it could also make sense to keep irqbalance enabled and exclude the NIC interrupts with the --banirq option. For more details, see the irqbalance(8) manual page.

$ tuna isolate --socket 0

If the pinning is not configured correctly, the results are unstable. If the interrupts are spread across the second NUMA node, to which the NIC is not connected, throughput can drop by up to 35%.
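At the lowest level, IRQ pinning means writing a CPU list to `/proc/irq/<N>/smp_affinity_list`. Tools such as tuna automate this. The following is a minimal sketch for illustration only, not the procedure used for the measurements above.

It makes several assumptions:

- The NIC's IRQ names in `/proc/interrupts` contain the interface name (the ice driver names them like `ice-<iface>-TxRx-<n>`).
- The function name `spread_irqs` and the `PROC_INTERRUPTS` and `DRY_RUN` variables are hypothetical.
- irqbalance is stopped; otherwise it may rewrite the affinities.

```bash
#!/bin/bash
# Hypothetical helper: spread a NIC's IRQs round-robin over the given CPUs.
# Usage: spread_irqs <iface> <cpu> [<cpu> ...]
# DRY_RUN=1 (the default) only prints the commands instead of running them.
# PROC_INTERRUPTS is an assumed override for testing (default /proc/interrupts).
spread_irqs() {
    local iface=$1; shift
    local cpus=("$@")
    local irqs i=0 irq cpu
    (( ${#cpus[@]} > 0 )) || return 1
    # Assumption: the IRQ name (last column) contains the interface name.
    irqs=$(awk -v dev="$iface" '$NF ~ dev { sub(":", "", $1); print $1 }' \
        "${PROC_INTERRUPTS:-/proc/interrupts}")
    for irq in $irqs; do
        cpu=${cpus[i % ${#cpus[@]}]}
        if [[ ${DRY_RUN:-1} == 1 ]]; then
            echo "echo $cpu > /proc/irq/$irq/smp_affinity_list"
        else
            echo "$cpu" > "/proc/irq/$irq/smp_affinity_list"
        fi
        i=$((i + 1))
    done
}
```

For example, `spread_irqs ice_0 $(seq 0 27)` prints one command per NIC IRQ. This spreads the IRQs over the physical cores of socket 0 on the test systems. Run it with `DRY_RUN=0` as root to apply.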

For further information on hardware locality, please refer to the following image illustrating the hardware topology:

System configuration

Apply the following configuration symmetrically on both machines:

- Install the libreswan package: dnf install -y libreswan

- Disable and stop the firewall: systemctl disable --now firewalld.service

- Configure the IP addresses on the ice_0 interface on both machines. In the case of multiple IPsec security associations, configure multiple addresses. In our case, we configure 14 IPv4 and 14 IPv6 addresses on each side. The configuration is done via NetworkManager. See the example of ip command output at the end of this document.

- Test the network setup: ping -I <local_addr> <remote_addr>

- Create the libreswan configuration files in /etc/ipsec.d/. You can use the configuration at the end of this document as an example; just change the IP addresses accordingly.

- Enable and start libreswan: systemctl enable --now ipsec.service

- Use the following commands to debug established SAs: journalctl -u ipsec.service, ip xfrm policy, ip xfrm state

- On the server side, start multiple iperf3 server instances using iperf3 -s --port <port>. The number of instances should match the number of IP addresses configured for each address family (14 in our case).

- On the client machine, the tests are performed using the following iperf3 command. For parallel tests, these commands must be run in parallel. Each instance uses a different iperf3 port, and its addresses must match the libreswan configuration.

iperf3 --port <iperf_server_port> \
    --bind "<local_bind_address>" \
    --affinity <local_cpu_num>,<remote_cpu_num> \
    --client "<iperf_server_address>" \
    --time 30 \
    --len <message_size>

In our environment, the configuration, testing, and reporting are completely automated using an internal testing framework. However, you can also use the PoC script at the end of this document as a quick proof of concept for running multiple instances of iperf3.
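The IP address step in the configuration list is done through NetworkManager. The following is a minimal sketch that prints the nmcli commands instead of running them, so they can be reviewed first.

It assumes:

- the NetworkManager connection profile is named `ice_0` (check with `nmcli connection show`);
- the address ranges are the ones shown in the artifacts (client 172.20.0.1-14 and fd04::1-e, server 172.20.0.15-28 and fd04::f-1c).

The function name `nm_address_cmds` is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: print nmcli commands that assign <count> consecutive
# IPv4 (172.20.0.<n>/24) and IPv6 (fd04::<hex n>/64) addresses to a profile.
# Usage: nm_address_cmds <connection> <first_n> <count>
nm_address_cmds() {
    local con=${1:-ice_0} first=${2:-1} count=${3:-14}
    local v4="" v6="" n
    for ((n = first; n < first + count; n++)); do
        v4+="${v4:+,}172.20.0.${n}/24"
        v6+="${v6:+,}fd04::$(printf '%x' "$n")/64"
    done
    # Setting ipv4.addresses/ipv6.addresses replaces the profile's address list
    echo "nmcli connection modify $con ipv4.method manual ipv4.addresses $v4"
    echo "nmcli connection modify $con ipv6.method manual ipv6.addresses $v6"
    echo "nmcli connection up $con"
}
```

On the client, this would be `nm_address_cmds ice_0 1 14`; on the server, `nm_address_cmds ice_0 15 14`. After checking the output, it can be piped to `bash`.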

0) Single Stream TCP Test over AES-SHA1 Security Association:

AES-SHA1 performs at around 4 Gbit/s, which is significantly lower (by approximately 40%) than, for example, AES-GCM, an AEAD (Authenticated Encryption with Associated Data) algorithm that performs encryption and integrity protection in a single combined operation. AES-SHA1 combines AES encryption with SHA1-based (HMAC) integrity protection, and the two operations are performed separately. This separation creates a performance bottleneck and results in lower throughput.
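The document only lists the AES-GCM connection files. An AES-SHA1 connection would presumably differ mainly in the `esp=` proposal. The following is a minimal sketch that prints such a connection, modeled on the AES-GCM example in the artifacts.

The connection name, the function name `aes_sha1_conn`, and the `esp=aes128-sha1` spelling are assumptions, not copied from the tested configuration.

```bash
#!/bin/bash
# Hypothetical helper: print a libreswan transport-mode connection using
# AES-CBC (128-bit) encryption with HMAC-SHA1 integrity.
# Usage: aes_sha1_conn <left_addr> <right_addr>
aes_sha1_conn() {
    local left=$1 right=$2
    cat <<EOF
conn transport_aes128-sha1_encap-no_${left}_${right}
    type=transport
    authby=secret
    left=${left}
    right=${right}
    phase2=esp
    esp=aes128-sha1
    auto=start
    encapsulation=no
    replay-window=0
    nic-offload=no
EOF
}
```

For example, `aes_sha1_conn 172.20.0.1 172.20.0.15 > /etc/ipsec.d/aes_sha1.conf` would write one connection. A matching PSK line is still needed in a `.secrets` file.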

IPsec AES-SHA1 IPv4 results

IPsec AES-SHA1 IPv6 results

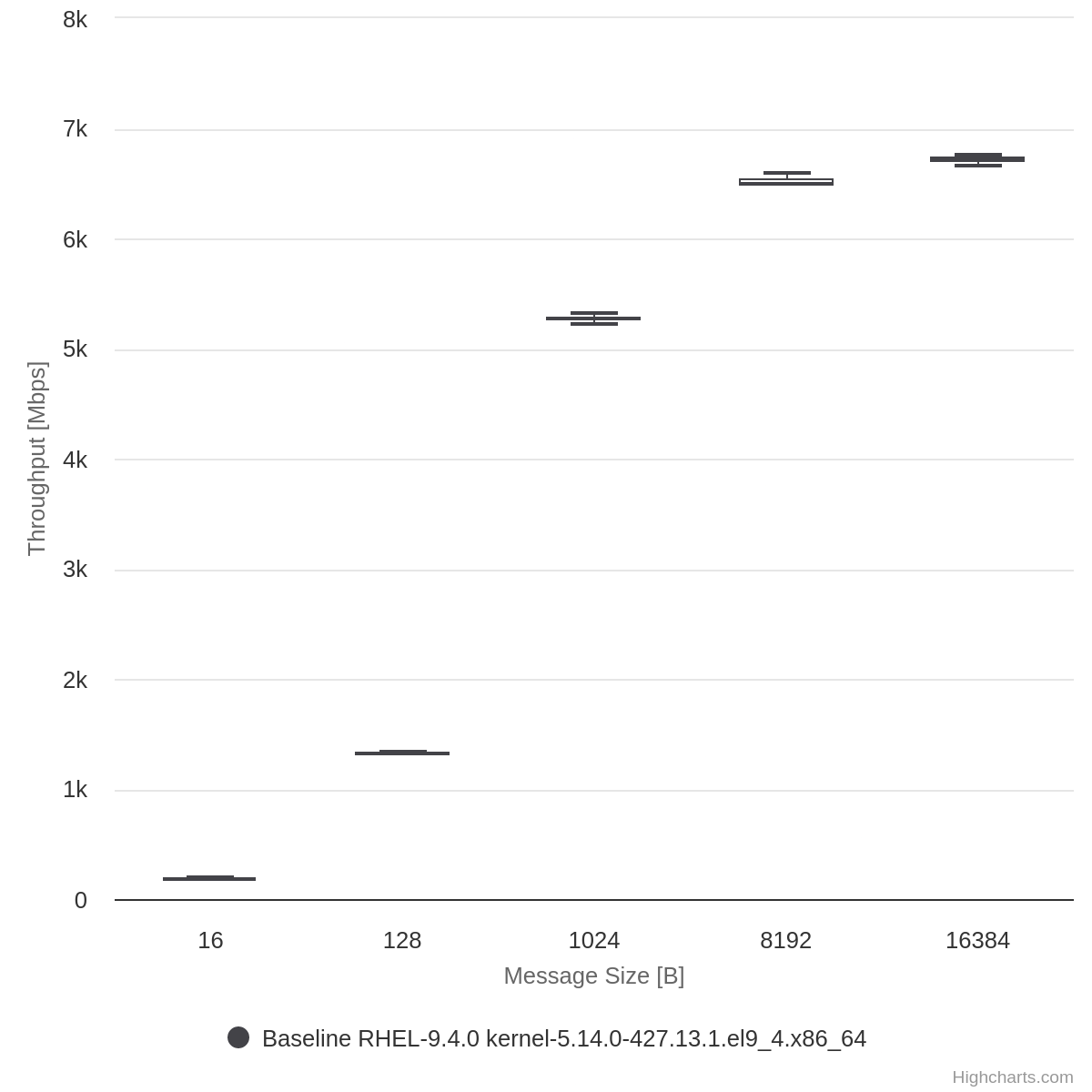

1) Single Stream TCP Test over AES-GCM Security Association:

The configuration for IPv4 and IPv6 can be found at the end of this document. It is the same for both machines.

As the figures below show, we achieved a throughput of around 6 Gbit/s, approaching a 10 Gbit/s line rate. This used only a single CPU core for iperf3 (other cores mostly handled interrupts) and required no specialized hardware or NIC offloads.

IPsec AES-GCM IPv4 results

IPsec AES-GCM IPv6 results

CPU usage and NIC interrupt allocation

As the htop outputs show, only the CPUs of socket 0 (CPUs 0-27 and 56-83) were used during testing. The CPUs of socket 1 (CPUs 28-55 and 84-111) were omitted from the mpstat output because they were almost idle during testing, handling only basic processes such as htop or systemd.

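In the mpstat tables below, the Number of CPUs row shows how many CPUs were used for IRQs (%irq), software IRQs (%soft), user space processes (%usr), and kernel tasks (%sys), and how many were idle. Each value in that row is the SUM value divided by 100, because mpstat reports utilization as a percentage of a single CPU.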

Sender (cidic1)

| | %usr | %nice | %sys | %iowait | %irq | %soft | %steal | %guest | %gnice | %idle | SUM of used CPUs |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SUM | 0.6 | 0 | 100.92 | 0 | 1.41 | 12.74 | 0 | 0 | 0 | 5484.34 | 1.1567 |
| Number of CPUs | 0.01 | 0.00 | 1.01 | 0.00 | 0.01 | 0.13 | 0.00 | 0.00 | 0.00 | 54.84 | |

Receiver (cidic2)

| | %usr | %nice | %sys | %iowait | %irq | %soft | %steal | %guest | %gnice | %idle | SUM of used CPUs |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SUM | 0.9 | 0 | 97.37 | 0 | 1.52 | 53.57 | 0 | 0 | 0 | 5446.65 | 1.5336 |
| Number of CPUs | 0.01 | 0.00 | 0.97 | 0.00 | 0.02 | 0.54 | 0.00 | 0.00 | 0.00 | 54.47 | |
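The "SUM of used CPUs" value is the sum of all non-idle columns divided by 100. For the sender: (0.6 + 100.92 + 1.41 + 12.74) / 100 = 1.1567. The following is a minimal sketch that reproduces these values from `mpstat -P ALL <interval> <count>` output by summing the `Average:` lines of the socket-0 CPUs.

It assumes:

- mpstat's default column order (`CPU %usr %nice %sys %iowait %irq %soft %steal %guest %gnice %idle`);
- a C numeric locale.

The function name `mpstat_socket_sum` is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: sum mpstat "Average:" lines for socket-0 CPUs
# (0-27 and 56-83 on the test systems) and report used/idle percentages
# plus the number of used CPUs (used / 100).
mpstat_socket_sum() {
    awk '
        $1 == "Average:" && $2 ~ /^[0-9]+$/ {
            cpu = $2
            if (!((cpu >= 0 && cpu <= 27) || (cpu >= 56 && cpu <= 83))) next
            # Columns 3..11 are %usr..%gnice (all non-idle time); column 12 is %idle
            for (c = 3; c <= 11; c++) used += $c
            idle += $12
        }
        END {
            printf "used=%.2f%% idle=%.2f%% used_cpus=%.4f\n", used, idle, used / 100
        }'
}
```

A typical invocation would be `LC_ALL=C mpstat -P ALL 1 30 | mpstat_socket_sum`. The CPU ranges are hard-coded for this testbed and would need adjusting for a different topology.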

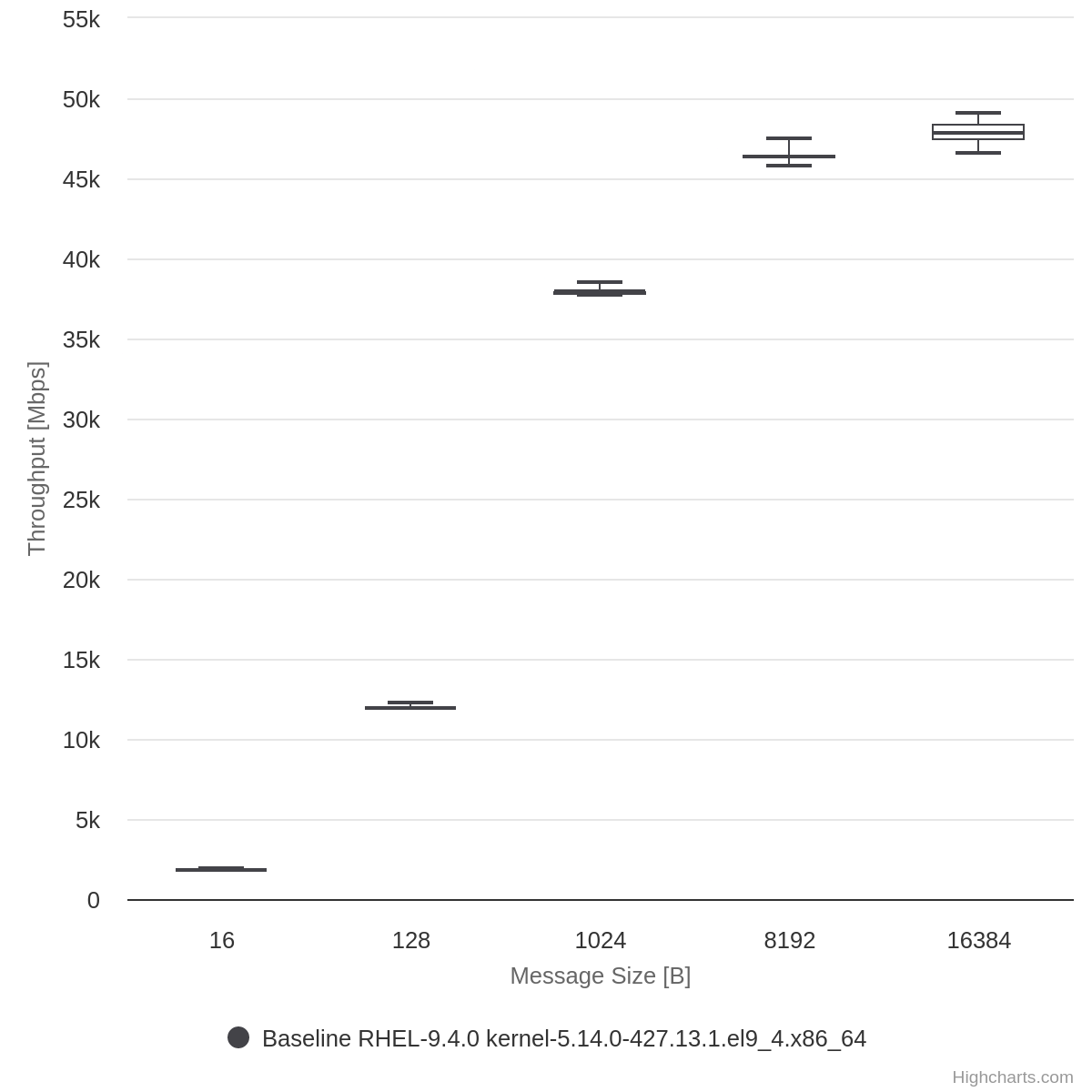

2) Parallel TCP Multistream Test over Multiple AES-GCM Security Associations:

- Similar IPsec configuration as the single stream test (1).

- The number of IPsec SAs equals half the number of CPU cores per socket.

The IPsec configuration mirrors that of the single-stream test (1). However, in this scenario, we configured additional IPv4 and IPv6 addresses on the enp61s0f0 interface (the alternative name of ice_0) and created a separate IPsec connection for each address pair. The number of security associations was half the number of CPU cores per socket, resulting in 14 associations for IPv4 and another 14 for IPv6.
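Writing 14 near-identical connection files by hand is error-prone. The following is a minimal sketch that generates one transport-mode connection and PSK file per address pair, using the same options as the AES-GCM example in the artifacts.

It assumes:

- client 172.20.0.<n> is paired with server 172.20.0.<n+14>, which matches how the addresses in the artifacts line up;
- the output directory and the PSK are supplied by you.

The function name `gen_ipv4_conns` is hypothetical. Review the files before copying them to /etc/ipsec.d.

```bash
#!/bin/bash
# Hypothetical helper: write one libreswan connection (.conf) and PSK
# (.secrets) per SA, pairing 172.20.0.<n> with 172.20.0.<n + count>.
# Usage: gen_ipv4_conns <outdir> [count] [psk]
gen_ipv4_conns() {
    local outdir=$1 count=${2:-14} psk=${3:-your-secret-key}
    local n left right name
    mkdir -p "$outdir"
    for ((n = 1; n <= count; n++)); do
        left=172.20.0.$n
        right=172.20.0.$((n + count))
        name=IPv4_transport_aes_gcm128_encap-no_${left}_${right}
        cat > "$outdir/conn_${name}.conf" <<EOF
conn ${name}
    type=transport
    authby=secret
    left=${left}
    right=${right}
    phase2=esp
    esp=aes_gcm128
    auto=start
    encapsulation=no
    replay-window=0
    nic-offload=no
EOF
        echo "${left} ${right} : PSK \"${psk}\"" > "$outdir/conn_${name}.secrets"
    done
}
```

Because libreswan determines which side is local from the configured addresses, the same generated files can be used on both machines. The file names follow the pattern shown in the artifacts, so the PoC client script can extract the address pairs from them.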

As the figures below show, our dedicated server would be able to saturate a 40 Gbit/s link or even two 25 Gbit/s links.

Parallel IPsec AES-GCM IPv4 results

Parallel IPsec AES-GCM IPv6 results

CPU usage and NIC interrupt allocation

The CPU and interrupt allocation in the parallel tests is very similar to the single-stream test. Only the CPUs of socket 0 (CPUs 0-27 and 56-83) were used during testing. The CPUs of socket 1 (CPUs 28-55 and 84-111) were omitted from the mpstat output because they were almost idle.

From the mpstat output analysis in the tables below (the Number of CPUs row), you can see how many CPUs were used for handling IRQs, software IRQs, user space processes, kernel tasks, and how many were idle.

Sender (cidic1)

| | %usr | %nice | %sys | %iowait | %irq | %soft | %steal | %guest | %gnice | %idle | SUM of used CPUs |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SUM | 5.9 | 0 | 1175.6 | 0 | 7.35 | 165.29 | 0 | 0 | 0 | 4245.65 | 13.5414 |
| Number of CPUs | 0.06 | 0.00 | 11.76 | 0.00 | 0.07 | 1.65 | 0.00 | 0.00 | 0.00 | 42.46 | |

Receiver (cidic2)

| | %usr | %nice | %sys | %iowait | %irq | %soft | %steal | %guest | %gnice | %idle | SUM of used CPUs |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SUM | 16.89 | 0 | 1051.57 | 0.1 | 9.38 | 699.23 | 0 | 0 | 0 | 3822.82 | 17.7717 |
| Number of CPUs | 0.17 | 0.00 | 10.52 | 0.00 | 0.09 | 6.99 | 0.00 | 0.00 | 0.00 | 38.23 | |

Results summary

- Single-stream IPsec AES-GCM tests achieved around 6 Gbit/s (IPv4 and IPv6) using only a single CPU core for the iperf3 process, with a few other cores on the same socket handling interrupts. No expensive additional hardware or separate servers are required; for instance, a common cloud instance would suffice.

- Parallel tests showcased remarkable performance, reaching speeds of around 50 Gbit/s for IPv4 and IPv6.

- Notably, these results were achieved without IPsec hardware acceleration or other offloading mechanisms.

- With a dedicated mainstream server, we can max out a 40 Gbit/s link or even two 25 Gbit/s links. This performance level competes with high-end industrial devices.

The obtained results highlight the impressive performance capabilities of IPsec on RHEL 9, especially in parallel testing scenarios. Despite the absence of expensive hardware accelerators or NIC ESP offloads, IPsec over AES-GCM associations emerged as a compelling alternative to dedicated hardware, delivering high throughput and secure communication.

As demonstrated in this paper, the performance of IPsec on Red Hat Enterprise Linux 9 is already quite good with a nearly out-of-the-box configuration. However, additional optimizations and specialized tuning can push its performance even higher.

The table below summarizes the performance of different cryptographic functions at various link line rates, indicating the required CPU resources for each scenario:

| Cryptographic function(s) | 1G line rate | 10G line rate | 50G line rate |
|---|---|---|---|
| AES-SHA1 (single stream) | 1 core | 1 core (max ~3.75 Gbit/s) | 1 core (max ~3.75 Gbit/s) |
| AES-GCM (single stream) | 1 core | almost possible with 2 cores (~6.8 Gbit/s) | 2 cores (max ~6.8 Gbit/s) |
| AES-GCM (14 parallel SAs) | not necessary to configure multiple SAs | possible with 2 SAs: 1.94 cores needed for the sender and 2.79 for the receiver | dedicated server needed: 13.54 cores for the sender and 17.77 for the receiver |

Artifacts

CPU Info

$ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 46 bits physical, 57 bits virtual

Byte Order: Little Endian

CPU(s): 112

On-line CPU(s) list: 0-111

Vendor ID: GenuineIntel

BIOS Vendor ID: Intel(R) Corporation

Model name: Intel(R) Xeon(R) Gold 5420+

BIOS Model name: Intel(R) Xeon(R) Gold 5420+

CPU family: 6

Model: 143

Thread(s) per core: 2

Core(s) per socket: 28

Socket(s): 2

Stepping: 8

CPU(s) scaling MHz: 99%

CPU max MHz: 4100.0000

CPU min MHz: 800.0000

BogoMIPS: 4000.00

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf tsc_known_freq pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb cat_l3 cat_l2 cdp_l3 intel_ppin cdp_l2 ssbd mba ibrs ibpb stibp ibrs_enhanced tpr_shadow flexpriority ept vpid ept_ad fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid cqm rdt_a avx512f avx512dq rdseed adx smap avx512ifma clflushopt clwb intel_pt avx512cd sha_ni avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local split_lock_detect avx_vnni avx512_bf16 wbnoinvd dtherm ida arat pln pts hwp hwp_act_window hwp_epp hwp_pkg_req vnmi avx512vbmi umip pku ospke waitpkg avx512_vbmi2 gfni vaes vpclmulqdq avx512_vnni avx512_bitalg tme avx512_vpopcntdq la57 rdpid bus_lock_detect cldemote movdiri movdir64b enqcmd fsrm md_clear serialize tsxldtrk pconfig arch_lbr ibt amx_bf16 avx512_fp16 amx_tile amx_int8 flush_l1d arch_capabilities

Virtualization: VT-x

L1d cache: 2.6 MiB (56 instances)

L1i cache: 1.8 MiB (56 instances)

L2 cache: 112 MiB (56 instances)

L3 cache: 105 MiB (2 instances)

NUMA node(s): 2

NUMA node0 CPU(s): 0-27,56-83

NUMA node1 CPU(s): 28-55,84-111

Vulnerability Gather data sampling: Not affected

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Mmio stale data: Not affected

Vulnerability Retbleed: Not affected

Vulnerability Spec rstack overflow: Not affected

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Enhanced / Automatic IBRS, IBPB conditional, RSB filling, PBRSB-eIBRS SW sequence

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

NIC Info

$ lspci | grep QSFP

0000:3d:00.0 Ethernet controller: Intel Corporation Ethernet Controller E810-C for QSFP (rev 02)

0000:3d:00.1 Ethernet controller: Intel Corporation Ethernet Controller E810-C for QSFP (rev 02)

$ ethtool -i ice_0

driver: ice

version: 5.14.0-427.4.1.el9_4.x86_64

firmware-version: 4.20 0x80017785 1.3346.0

expansion-rom-version:

bus-info: 0000:3d:00.0

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: yes

$ ethtool -k ice_0

Features for ice_0:

rx-checksumming: on

tx-checksumming: on

tx-checksum-ipv4: on

tx-checksum-ip-generic: off [fixed]

tx-checksum-ipv6: on

tx-checksum-fcoe-crc: off [fixed]

tx-checksum-sctp: on

scatter-gather: on

tx-scatter-gather: on

tx-scatter-gather-fraglist: off [fixed]

tcp-segmentation-offload: on

tx-tcp-segmentation: on

tx-tcp-ecn-segmentation: on

tx-tcp-mangleid-segmentation: off

tx-tcp6-segmentation: on

generic-segmentation-offload: on

generic-receive-offload: on

large-receive-offload: off [fixed]

rx-vlan-offload: on

tx-vlan-offload: on

ntuple-filters: on

receive-hashing: on

highdma: on

rx-vlan-filter: on

vlan-challenged: off [fixed]

tx-lockless: off [fixed]

netns-local: off [fixed]

tx-gso-robust: off [fixed]

tx-fcoe-segmentation: off [fixed]

tx-gre-segmentation: on

tx-gre-csum-segmentation: on

tx-ipxip4-segmentation: on

tx-ipxip6-segmentation: on

tx-udp_tnl-segmentation: on

tx-udp_tnl-csum-segmentation: on

tx-gso-partial: on

tx-tunnel-remcsum-segmentation: off [fixed]

tx-sctp-segmentation: off [fixed]

tx-esp-segmentation: off [fixed]

tx-udp-segmentation: on

tx-gso-list: off [fixed]

fcoe-mtu: off [fixed]

tx-nocache-copy: off

loopback: off

rx-fcs: off

rx-all: off [fixed]

tx-vlan-stag-hw-insert: off

rx-vlan-stag-hw-parse: off

rx-vlan-stag-filter: on

l2-fwd-offload: off [fixed]

hw-tc-offload: off

esp-hw-offload: off [fixed]

esp-tx-csum-hw-offload: off [fixed]

rx-udp_tunnel-port-offload: on

tls-hw-tx-offload: off [fixed]

tls-hw-rx-offload: off [fixed]

rx-gro-hw: off [fixed]

tls-hw-record: off [fixed]

rx-gro-list: off

macsec-hw-offload: off [fixed]

rx-udp-gro-forwarding: off

hsr-tag-ins-offload: off [fixed]

hsr-tag-rm-offload: off [fixed]

hsr-fwd-offload: off [fixed]

hsr-dup-offload: off [fixed]

IP Address Configuration

Client

$ ip address show dev ice_0

6: ice_0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether b4:96:91:db:db:e0 brd ff:ff:ff:ff:ff:ff

altname enp61s0f0

inet 172.20.0.1/24 brd 172.20.0.255 scope global noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.2/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.3/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.4/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.5/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.6/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.7/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.8/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.9/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.10/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.11/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.12/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.13/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.14/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet6 fd04::1/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::2/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::3/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::4/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::5/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::6/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::7/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::8/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::9/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::a/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::b/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::c/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::d/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::e/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::b696:91ff:fedb:dbe0/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Server

$ ip address show dev ice_0

5: ice_0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 6c:fe:54:3d:a5:c8 brd ff:ff:ff:ff:ff:ff

altname enp61s0f0

inet 172.20.0.15/24 brd 172.20.0.255 scope global noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.16/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.17/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.18/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.19/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.20/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.21/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.22/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.23/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.24/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.25/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.26/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.27/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet 172.20.0.28/24 brd 172.20.0.255 scope global secondary noprefixroute ice_0

valid_lft forever preferred_lft forever

inet6 fd04::f/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::10/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::11/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::12/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::13/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::14/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::15/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::16/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::17/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::18/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::19/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::1a/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::1b/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fd04::1c/64 scope global noprefixroute

valid_lft forever preferred_lft forever

inet6 fe80::6efe:54ff:fe3d:a5c8/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Libreswan IPsec configuration (IPv4 and IPv6)

$ cat /etc/ipsec.d/conn_IPv4_transport_aes_gcm128_encap-no_172.16.2.6_172.16.2.8.conf

conn IPv4_transport_aes_gcm128_encap-no

type=transport

authby=secret

left=172.16.2.6

right=172.16.2.8

phase2=esp

esp=aes_gcm128

auto=start

encapsulation=no

replay-window=0

nic-offload=no

$ cat /etc/ipsec.d/conn_IPv4_transport_aes_gcm128_encap-no_172.16.2.6_172.16.2.8.secrets

172.16.2.6 172.16.2.8 : PSK "your-secret-key"

$ cat /etc/ipsec.d/conn_IPv6_transport_aes_gcm128_encap-no_fd00:0:0:2::6_fd00:0:0:2::8.conf

conn IPv6_transport_aes_gcm128_encap-no_fd00:0:0:2::6_fd00:0:0:2::8

type=transport

authby=secret

left=fd00:0:0:2::6

right=fd00:0:0:2::8

phase2=esp

esp=aes_gcm128

auto=start

encapsulation=no

replay-window=0

nic-offload=no

$ cat /etc/ipsec.d/conn_IPv6_transport_aes_gcm128_encap-no_fd00:0:0:2::6_fd00:0:0:2::8.secrets

fd00:0:0:2::6 fd00:0:0:2::8 : PSK "your-secret-key"

PoC script to run multiple parallel iperf3 clients

#!/bin/bash
# PoC: run N parallel iperf3 clients (one per IPsec SA) and sum their throughput.
# Requires iperf3, jq, and awk; expects one conn file per SA in /etc/ipsec.d.
number_of_tests=14
echo "Number of tests: $number_of_tests"
pids=()
# Loop to run N parallel iperf3 tests
for ((i = 1; i <= number_of_tests; i++)); do
    # Extract the local (bind) and remote (server) addresses from the i-th filename
    # IPv4
    file=$(ls /etc/ipsec.d/conn_IPv4_transport_aes_gcm128*_encap-no_*.conf | sed -n "${i}p")
    bind_address=$(echo "$file" | grep -oP '(\d+\.){3}\d+' | head -n 1)
    server_address=$(echo "$file" | grep -oP '(\d+\.){3}\d+' | tail -n 1)
    # IPv6
    #file=$(ls /etc/ipsec.d/conn_IPv6_transport_aes_gcm128*_encap-no_fd04*.conf | sed -n "${i}p")
    #bind_address=$(echo "$file" | grep -oP 'fd04::[a-f0-9:]*' | head -n 1)
    #server_address=$(echo "$file" | grep -oP 'fd04::[a-f0-9:]*' | tail -n 1)
    #echo "Address: ${bind_address} <-> ${server_address}"
    # Each client talks to its own server port (5201, 5202, ...)
    port=$((5200 + i))
    # Run iperf3 pinned to CPU i locally and on the server side
    iperf3 --json --port "$port" --bind "$bind_address" --affinity "$i,$i" --interval 10 \
        --client "$server_address" --time 30 --len 16384 > "/tmp/iperf_output_$i.json" &
    # Store the process ID of the background task
    pids[i]=$!
done
# Wait for all clients to finish
for pid in "${pids[@]}"; do
    wait "$pid"
done
# Sum the sender-side throughput (bits per second) of all clients and convert
# to Mbps (1 Mbps = 1,000,000 bit/s); awk also copes with exponent notation
total_throughput_mbps=$(for ((i = 1; i <= number_of_tests; i++)); do
    jq -r '.end.sum_sent.bits_per_second' "/tmp/iperf_output_$i.json"
    rm "/tmp/iperf_output_$i.json"
done | awk '{ sum += $1 } END { printf "%.2f", sum / 1000000 }')
echo "Total network throughput of all clients: $total_throughput_mbps Mbps"
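The client script above expects one iperf3 server per SA on ports 5201 to 5214. The following is a minimal sketch of the server-side counterpart. The function name `start_iperf3_servers` and the `DRY_RUN` variable are hypothetical.

Server-side pinning is left to the client: according to the iperf3 manual, the `n,m` form of `--affinity` used by the client overrides the server's CPU affinity for that test.

```bash
#!/bin/bash
# Hypothetical helper: start <count> daemonized iperf3 servers on ports
# 5201..5200+count, one per IPsec SA, matching the PoC client's port scheme.
# DRY_RUN=1 only prints the commands.
start_iperf3_servers() {
    local count=${1:-14} i port
    for ((i = 1; i <= count; i++)); do
        port=$((5200 + i))
        if [[ ${DRY_RUN:-0} == 1 ]]; then
            echo "iperf3 --server --port $port --daemon"
        else
            iperf3 --server --port "$port" --daemon
        fi
    done
}
```

Run `start_iperf3_servers 14` on the server before starting the client script. The servers listen on all addresses by default, so one server per port is enough for both the IPv4 and IPv6 runs.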

About the authors

I started at Red Hat in 2014 as an intern, learning much about Linux and open source technology. My main focus is improving the Linux kernel's performance, especially network performance testing with IPsec and MACsec.

I also have experience in test automation, creating tools and services, and working with CI/CD systems like GitLab, Jenkins and OpenShift. I am skilled in maintaining Linux-based systems and configuring services to ensure they run smoothly.

I joined Red Hat as an intern in 2010. After graduating from Brno University of Technology, I started at Red Hat full time in 2012. I focus on kernel network performance testing and on network card drivers and their testing. I also maintain an onsite hardware lab environment for testing network setups.
