LoRA vs. QLoRA

LoRA (Low-Rank Adaptation) and QLoRA (Quantized Low-Rank Adaptation) are both techniques for training AI models. More specifically, they are forms of parameter-efficient fine-tuning (PEFT), a family of fine-tuning techniques that has gained popularity because it is more resource-efficient than other methods of training large language models (LLMs).

LoRA and QLoRA both help fine-tune LLMs more efficiently, but they differ in how they modify the model and how much memory they use to reach the intended results.

How are LoRA and QLoRA different from traditional fine-tuning?

LLMs are complex models made up of large numbers of parameters, in some cases billions of them. These parameters are the numerical values (weights) that the model learns during training and uses to generate its output. More parameters means more memory to store the model and, generally, a more capable model.

Traditional fine-tuning requires the refitting (updating or adjusting) of each individual parameter in order to update the LLM. This can mean fine-tuning billions of parameters, which takes a large amount of compute time and money.

Updating every parameter can also lead to “overfitting,” a term used to describe an AI model that learns “noise,” or unhelpful details, from its training data instead of only the general patterns.

Imagine a teacher and their classroom. The class has learned math all year long. Just before the test, the teacher emphasizes the importance of long division. Now, during the test, many of the students find themselves overly preoccupied with long division and have forgotten key mathematical equations for questions that are just as important. This is what overfitting can do to an LLM during traditional fine-tuning.

In addition to issues with overfitting, traditional fine-tuning also presents a significant cost when it comes to resources.
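As a rough illustration of that cost: a commonly cited rule of thumb is that full fine-tuning with the Adam optimizer in mixed precision needs about 16 bytes of GPU memory per parameter, before counting activations. The sketch below applies that assumption to two hypothetical model sizes; real numbers vary with the framework, optimizer, and settings.

```python
# Rule-of-thumb assumption (not a measured figure): full fine-tuning with Adam
# in mixed precision keeps roughly 16 bytes per parameter:
#   2 bytes  for the 16-bit weights
#   2 bytes  for the 16-bit gradients
#   12 bytes for fp32 master weights plus two fp32 Adam moment estimates
# Activations and framework overhead are ignored.
BYTES_PER_PARAM_FULL_FT = 2 + 2 + 12


def full_finetune_memory_gb(num_params: int) -> float:
    """Approximate GPU memory in GB (1 GB = 1e9 bytes) to fully fine-tune a model."""
    return num_params * BYTES_PER_PARAM_FULL_FT / 1e9


# Hypothetical model sizes: 7 billion and 70 billion parameters.
for n in (7_000_000_000, 70_000_000_000):
    print(f"{n / 1e9:.0f}B params -> ~{full_finetune_memory_gb(n):.0f} GB")
```

Even the smaller model in this estimate needs more memory than a single typical GPU offers, which is the gap that LoRA and QLoRA aim to close.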

QLoRA and LoRA are both fine-tuning techniques that provide shortcuts to improve the efficiency of full fine-tuning. Instead of training all of the parameters, they freeze the original weights and train only a small set of additional parameters, organized as small matrices, that capture the new information.

To follow our metaphor, these fine-tuning techniques are able to introduce new topics efficiently, without distracting the model from other topics on the test.
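To get a sense of the savings, the sketch below compares trainable parameter counts for one weight matrix when every entry is updated and when only a low-rank update is trained (two thin matrices, as LoRA does). The matrix size and rank are hypothetical.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when every weight of a d x k matrix is updated."""
    return d * k


def low_rank_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r update B @ A, with B: d x r and A: r x k."""
    return d * r + r * k


d = k = 4096  # hypothetical size of one weight matrix
r = 8         # hypothetical rank

print(full_params(d, k))         # 16777216
print(low_rank_params(d, k, r))  # 65536
print(f"{low_rank_params(d, k, r) / full_params(d, k):.2%}")  # 0.39%
```

The low-rank count grows with the sum `d + k` rather than the product `d * k`, which is why the savings get larger as the matrices get bigger.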


How does LoRA work?

The LoRA technique adds a small number of new parameters and trains only those on the new data.

Instead of training the entire model and all of the pre-trained weights, those weights are set aside, or “frozen,” and a much smaller set of new parameters is trained instead. These new parameters form “low-rank” adaptation matrices, which give LoRA its name.

They are called low-rank because the update they produce has a low rank: two thin matrices, one tall and one wide, multiply together into an update the same size as the original weight matrix while containing far fewer parameters. Once trained, the update is combined with the original parameters, and the result acts as one single matrix. This allows fine-tuning to be done much more efficiently.

In the simplest case, it’s easiest to think of the LoRA update as one column and one row that are multiplied together and then added to the matrix.
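The same idea can be written as a formula. The notation below is common in descriptions of LoRA but is an assumption here, since the article does not define symbols: W_0 is the frozen pretrained matrix, and B and A are the small trainable matrices with rank r.

```latex
% W_0 (d x k): frozen pretrained weights; B (d x r) and A (r x k): trainable
W' = W_0 + \Delta W = W_0 + BA,
\qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)

% The update is "low-rank" because its rank is at most r
\operatorname{rank}(BA) \le r

% Forward pass: separate terms during training, one merged matrix afterwards
h = W_0 x + B(Ax) = W' x

% Trainable parameters per matrix: LoRA vs. full fine-tuning
r(d + k) \quad \text{vs.} \quad dk
```

With r = 1, B is a single column and A is a single row, which matches the “one column and one row” picture. Many implementations also multiply BA by a scaling factor; it is omitted here for simplicity.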

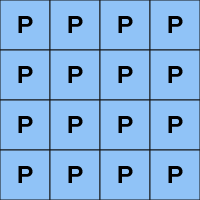

Think of this as the whole parameter that needs to be trained:

Training all of the weights in the parameter takes significant time, money, and memory. When it’s done, you may still have more training to do, and wasted a lot of resources along the way.

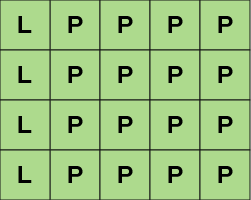

This column represents a low-rank weight:

When the new low-rank parameters have been trained, the single “row” or “column” is added into the original matrix. This allows it to apply its new training to the whole parameter.

Now the AI model can operate together with the newly fine-tuned weights.

Training the low-rank weight takes less time, memory, and cost. Once the sample size is trained, it can apply what it’s learned within the larger matrix, without taking up any extra memory.
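The merge step can be sketched in a few lines of plain Python. This is an illustrative toy, not how any particular library implements LoRA: the matrix `W0`, the rank-1 factors `B` and `A`, and the input `x` are all hypothetical values. It checks that applying the frozen weights and the adapter separately gives the same output as applying the single merged matrix.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def add(X, Y):
    """Element-wise sum of two matrices of the same shape."""
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]


# Frozen pretrained weights (3 x 3); never modified during training.
W0 = [[1.0, 0.0, 2.0],
      [0.0, 1.0, 0.0],
      [3.0, 0.0, 1.0]]

# Rank-1 adapter: B is one column (3 x 1), A is one row (1 x 3).
# In real training these values would be learned; here they are fixed examples.
B = [[1.0], [0.0], [2.0]]
A = [[0.5, 0.0, -1.0]]

delta = matmul(B, A)       # full 3 x 3 update built from only 6 trainable numbers
W_merged = add(W0, delta)  # merged once after training: a single matrix again

x = [[1.0], [2.0], [3.0]]  # example input column vector

# Frozen weights plus adapter, computed separately (as during training) ...
unmerged = add(matmul(W0, x), matmul(B, matmul(A, x)))
# ... equals the single merged matrix (as at inference time).
merged = matmul(W_merged, x)
print(merged)  # [[4.5], [2.0], [1.0]]
```

Because the merged matrix has the same shape as `W0`, the fine-tuned model needs no extra matrices at inference time.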

Benefits of LoRA

LoRA is a technique that allows the model to be fine-tuned with less time, fewer resources, and less effort. Benefits include:

- Fewer parameters need to be trained.

- Lower risk of overfitting.

- Faster training time.

- Less memory is used.

- Flexible adjustments (training can be applied to some parts of the model and not others).
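The last benefit, applying training to some parts of the model and not others, can be illustrated by counting trainable parameters when adapters are attached only to chosen layers. The layer names, shapes, and rank below are hypothetical and do not describe any real model or library API.

```python
# Hypothetical layer names and (rows, columns) shapes for a toy model.
LAYERS = {
    "attn.q_proj": (1024, 1024),
    "attn.k_proj": (1024, 1024),
    "attn.v_proj": (1024, 1024),
    "mlp.up_proj": (1024, 4096),
}


def lora_trainable_params(layers, targets, r):
    """Count trainable parameters when rank-r adapters are added only to `targets`."""
    total = 0
    for name, (d, k) in layers.items():
        if name in targets:
            total += r * (d + k)  # B is d x r, A is r x k
    return total


# Full fine-tuning would train every weight in every layer.
full = sum(d * k for d, k in LAYERS.values())
# Adapters only on two of the attention layers, with rank 8.
partial = lora_trainable_params(LAYERS, {"attn.q_proj", "attn.v_proj"}, r=8)
print(full, partial)  # 7340032 32768
```

Layers that are not listed as targets keep their frozen weights and receive no adapter at all.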

How does QLoRA work?

QLoRA is an extension of LoRA. It is a similar technique with an additional perk: less memory.

The “Q” in “QLoRA” stands for “quantized.” In this context, quantizing the model means compressing very precise parameters (numbers with many decimal places that take a lot of memory) into smaller, less precise ones (fewer decimal places and less memory).
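Here is a toy example of what quantization does to numbers. The sketch below uses simple absolute-maximum (“absmax”) rounding to 4-bit integers, which is not the NF4 scheme QLoRA uses, to map a few hypothetical weights to integer codes and back. The restored values are close to, but not exactly, the originals, and the smallest weight rounds to zero.

```python
def quantize_absmax(values, bits=4):
    """Map floats to signed integer codes using one absolute-maximum scale.

    A simple illustrative scheme, not NF4. Codes fall in
    [-(2**(bits-1) - 1), 2**(bits-1) - 1], i.e. [-7, 7] for 4 bits.
    """
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(v) for v in values) / qmax
    return [round(v / scale) for v in values], scale


def dequantize(codes, scale):
    """Recover approximate floats from integer codes."""
    return [c * scale for c in codes]


weights = [0.8123, -0.4371, 0.0529, -0.9987, 0.3316]  # hypothetical weights
codes, scale = quantize_absmax(weights)
restored = dequantize(codes, scale)

print(codes)                           # [6, -3, 0, -7, 2]
print([round(v, 4) for v in restored])
```

Each weight now needs only a small integer code plus one shared scale, at the cost of a rounding error of at most half a step.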

QLoRA’s goal is to fine-tune a portion of the model using the memory of a single graphics processing unit (GPU). It does this using 4-bit NormalFloat (NF4), a new data type that stores the original model’s frozen weights in just 4 bits each, so they take even less memory than they do with LoRA. By compressing the parameters into smaller, more manageable data, it can reduce the memory needed to store them by up to 4 times (for example, going from 16 bits to 4 bits per weight).

After the model has been quantized, it is much easier to fine-tune because of its small size.
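The “up to 4 times” figure follows from simple arithmetic on bits per weight. The sketch below assumes a hypothetical 7-billion-parameter model and counts only the memory for the weights themselves. It ignores the small per-block scaling constants that 4-bit formats also store, as well as activations and the trainable adapters.

```python
def weight_memory_gb(num_params: int, bits_per_param: float) -> float:
    """Memory to store the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


n = 7_000_000_000  # hypothetical 7-billion-parameter model
fp16 = weight_memory_gb(n, 16)  # 16-bit weights
nf4 = weight_memory_gb(n, 4)    # 4-bit weights (scaling constants ignored)
print(f"16-bit: {fp16:.1f} GB, 4-bit: {nf4:.1f} GB, ratio: {fp16 / nf4:.0f}x")
```

Under these assumptions, the weights shrink from about 14 GB to about 3.5 GB, small enough to leave room on a single GPU for the adapters and training state.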

Think of this as the original model’s parameters:

Within the 12 parameters, 3 are green, 6 are blue, 2 are yellow, and 1 is pink. When the model is quantized, it is compressed into a representation of the previous model.

After quantization, we are left with a smaller set: 1 green, 2 blue, and 1 yellow.

During quantization, there is a risk that some data is so small that it is lost during the compression. For example, the pink parameter is missing: it made up such a small fraction of the whole that it did not carry over into the compressed version.

In this example, we compress the parameters from 12 to 4. In reality, quantization keeps the same number of parameters but stores each one with fewer bits, which is how billions of parameters can fit in the memory of a single GPU and be fine-tuned there.
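The risk of losing small values, as with the pink parameter, can be shown with simple absmax rounding (again, not NF4). When one large outlier sets the scale for every value, all of the small values round to zero. QLoRA quantizes weights in small blocks, each with its own scale, which limits the damage an outlier can do; the block size of 4 and the weights below are hypothetical choices for illustration.

```python
def quantize_block(values, bits=4):
    """Absmax-quantize one block with its own scale; return dequantized values."""
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(v) for v in values) / qmax or 1.0  # avoid dividing by zero
    return [round(v / scale) * scale for v in values]


def quantize_blockwise(values, block_size, bits=4):
    """Quantize consecutive blocks of `block_size` values, each with its own scale."""
    out = []
    for start in range(0, len(values), block_size):
        out.extend(quantize_block(values[start:start + block_size], bits))
    return out


# One large outlier followed by small values (hypothetical numbers).
weights = [8.0, 0.5, -0.3, 0.1, 0.02, -0.05, 0.03, 0.01]

single_scale = quantize_block(weights)                # one scale for all 8 values
blockwise = quantize_blockwise(weights, block_size=4)  # one scale per 4 values


def count_nonzero(vals):
    """Number of values that survived quantization (did not round to zero)."""
    return sum(1 for v in vals if v != 0)


print(count_nonzero(single_scale), count_nonzero(blockwise))  # 1 5
```

With a single scale, only the outlier survives. With per-block scales, the outlier still flattens its own block, but the second block keeps all four of its small values.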

Ideally, any lost data can be recovered when the newly trained matrices are added back to the original, full-precision matrices, so that no precision or accuracy is lost along the way. However, this is not guaranteed.

This technique combines strong fine-tuning results with a small memory footprint. The aim is to keep the model highly accurate while working with limited resources.

Benefits of QLoRA

QLoRA is a technique that emphasizes low memory requirements. Like LoRA, it prioritizes efficiency, allowing a faster, easier fine-tuning process. Benefits include:

- Requires less memory than LoRA

- Helps avoid overfitting

- Maintains high levels of accuracy

- Fast and lightweight model tuning


How are LoRA and QLoRA different?

LoRA can be used all by itself as an efficient fine-tuning technique. QLoRA is an extension that adds further techniques to LoRA, such as 4-bit quantization of the original model, to increase efficiency. As a result, QLoRA uses significantly less memory.

If you’re struggling to decide which technique to use for your needs, consider how much memory and other resources you have. If you have limited GPU memory, QLoRA will be easier to run.

How Red Hat can help

Red Hat® AI is a portfolio of products and services that can help your enterprise at any stage of the AI journey—whether you’re at the very beginning or ready to scale across the hybrid cloud.

With small, purpose-built models and flexible customization techniques, it lets you develop and deploy anywhere.
