In our previous article, we discussed the GAMES approach for application architecture. Based on interactions with customers and companies over many years, we've found that the following 5 components and principles provide a simple, scalable, and flexible architecture for all things software:

- GitOps (config-as-code)

- API

- Microservices

- Event-driven functions through a broker

- Shared Services Platform

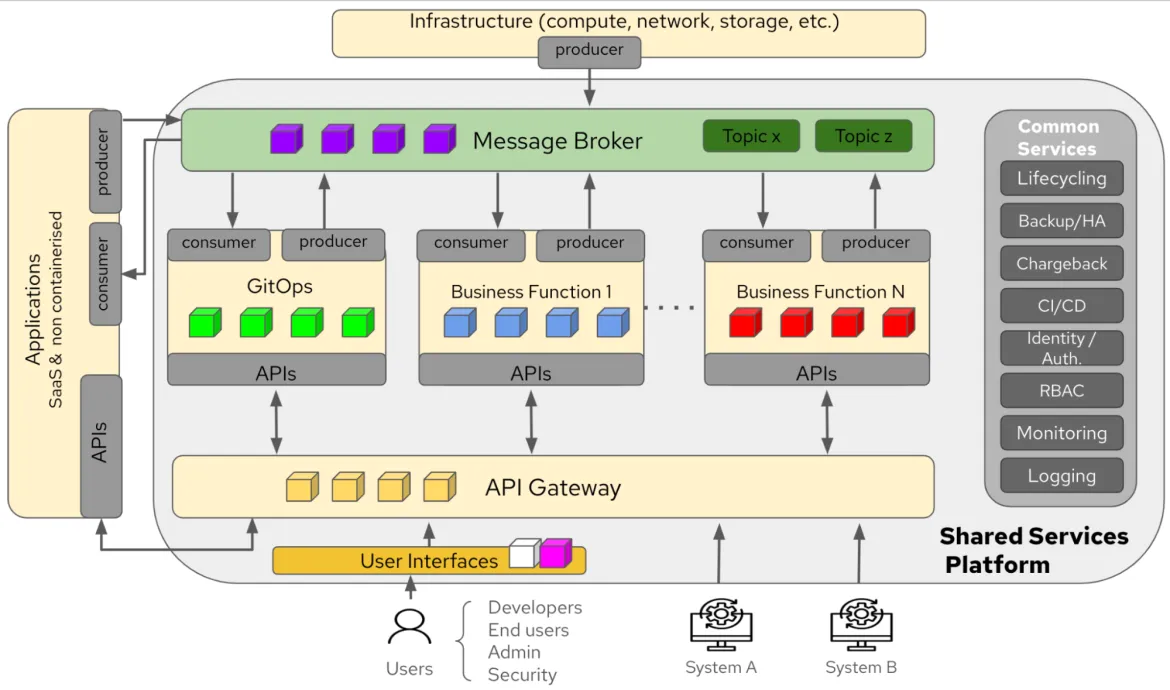

A GAMES architecture is event-driven, and incorporates GitOps, an API gateway, microservices, and event brokers to provide a shared services platform. Used together, these concepts produce the architecture illustrated in the diagram above.

There are two important components (the Applications box on the left, and the Infrastructure box along the top) in this diagram. The first addresses applications not on the shared platform. These represent SaaS applications, as well as applications that haven't been containerized (these typically run in a VM or dedicated server). As long as those applications can use an API and a message broker, they fit into this overall integration pattern.

In other words, all applications in this environment can easily be transitioned in and out of the platform.

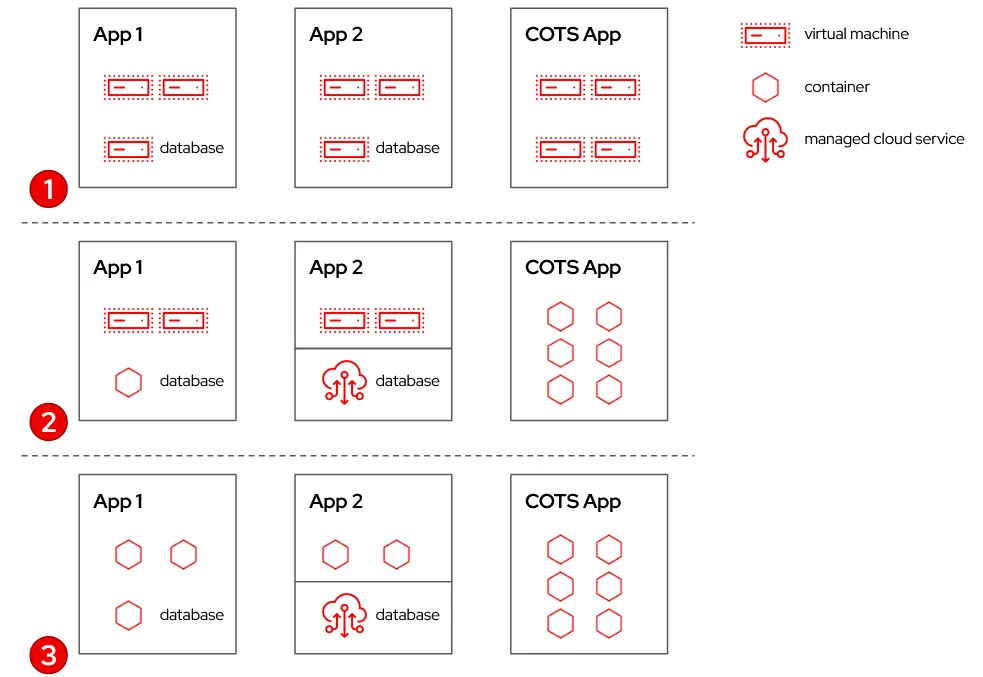

For example, an initial implementation of a business function can be done in containers, while the next iteration might use SaaS (or the other way around). Similarly, a COTS application in a group of VMs could be brought into the platform as it is migrated to containers as part of its lifecycle. Many COTS applications support either VM-based or containerized deployment.

It's not just software applications being handled in this architecture. Infrastructure can also be integrated using the same two patterns: an API gateway and a message broker. Hardware and infrastructure components (compute, storage, or networking management tools) can also use event-driven functions through the event broker to provide visibility, or to take proactive actions using a tool like Red Hat Ansible Automation Platform. This use case is discussed later in the VM self-management section of this article.
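
As a rough illustration of the broker half of this pattern, the sketch below uses a toy in-memory broker rather than a real product. The class name `InMemoryBroker`, the topic name `infra.events`, and the event fields are all assumptions made up for this example. It shows a single infrastructure event reaching two independent subscribers, neither of which the publisher knows about.

```python
from collections import defaultdict


class InMemoryBroker:
    """Toy stand-in for a message broker: each topic maps to subscriber callbacks."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        """Register a callback to receive every event published to the topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        """Deliver the event to every subscriber and return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


broker = InMemoryBroker()
seen_by_automation = []  # stands in for an automation tool
seen_by_dashboard = []   # stands in for a visibility tool

broker.subscribe("infra.events", seen_by_automation.append)
broker.subscribe("infra.events", seen_by_dashboard.append)

# Hypothetical event from a storage management tool
delivered = broker.publish(
    "infra.events", {"source": "storage-array", "type": "disk.degraded"}
)
```

Because the publisher only knows the topic name, either subscriber could be replaced or removed without touching the event source.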

Kubernetes operators and operational model

Kubernetes is a container orchestration system used to deploy, scale and manage modern applications at an enterprise level. The Kubernetes platform can be extended with the operator pattern, which defines a method of managing the entire lifecycle of a software component. An operator can be thought of as encoding all of the best practices for deploying and managing a component.
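
The core idea behind an operator is a reconcile loop: compare the desired state with the observed state, then act on the difference. The sketch below is a simplified illustration in Python, not code from any real operator. The `State` fields and the action strings are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class State:
    replicas: int
    version: str


def reconcile(desired: State, observed: State) -> list:
    """Return the actions needed to move the observed state toward the desired state."""
    actions = []
    if observed.version != desired.version:
        actions.append(f"upgrade {observed.version} -> {desired.version}")
    if observed.replicas < desired.replicas:
        actions.append(f"scale up by {desired.replicas - observed.replicas}")
    elif observed.replicas > desired.replicas:
        actions.append(f"scale down by {observed.replicas - desired.replicas}")
    return actions


# One pass of the loop; a real operator repeats this whenever state changes
pending = reconcile(
    desired=State(replicas=3, version="1.2"),
    observed=State(replicas=1, version="1.1"),
)
```

When the two states already match, `reconcile` returns an empty list and the operator has nothing to do.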

In all of the functions described above (microservices, brokers, API gateways and GitOps agents), the resultant systems can be deployed as containerized applications, and managed by operators. You can build an entire application platform that scales, and is managed automatically, communicating using events and API calls.

Taking this model further, you can outsource some functions to managed application platforms, and concentrate on operating only the software functions relevant to your particular business.

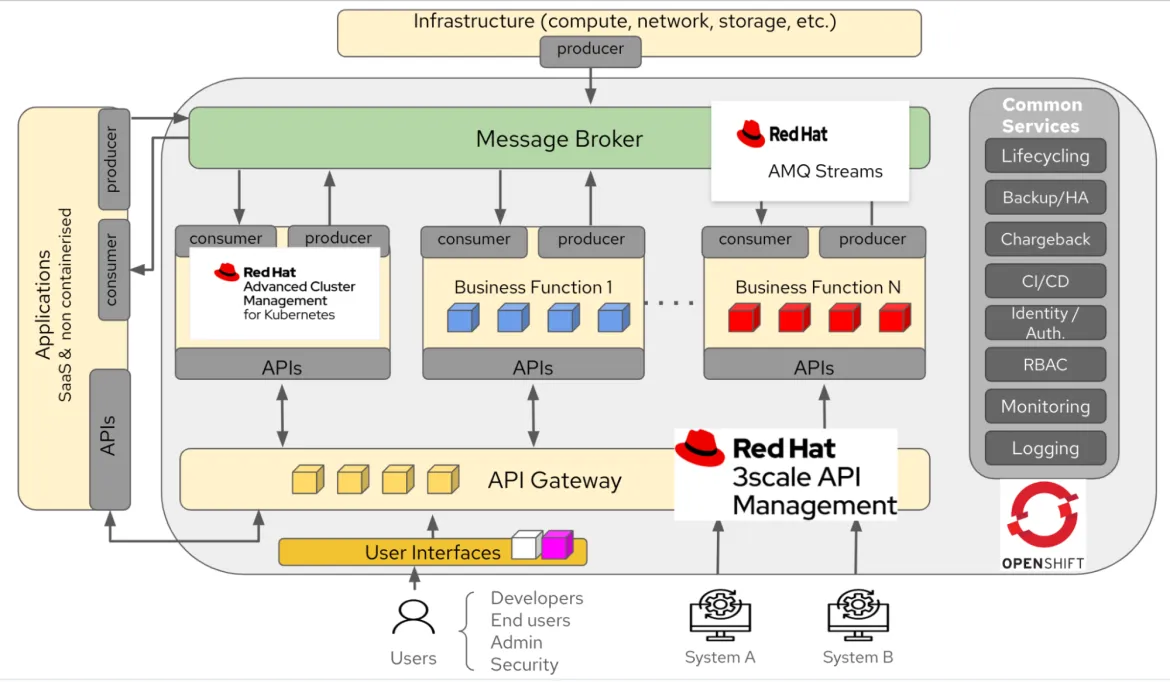

Red Hat and event-driven architecture use cases

Many Red Hat products are built using the operator model of containerized deployment, and feature API-first design and event sources and sinks. These architecture choices make a real difference in how modern organizations run. Here are some examples of how these concepts can be used.

Use case: Virtual machine self-management

With OpenShift Virtualization, a VM can be deployed alongside a containerized application in a single Kubernetes environment. Because VMs are described using Kubernetes Custom Resources (CRs), they can be deployed in a number of ways: using DevOps pipelines and GitOps, from the command line, or through a console user interface. If VMs are only deployed using pipelines, then the pipeline can be configured so that deployment images are fully patched and updated, and so that each deployed VM is automatically registered with a Configuration Management Database (CMDB). However, if a VM is deployed in a less controlled fashion, these steps may be missed, leading to potential security and compliance risks.

This is where event-driven architecture can make a difference. By using events generated in the OpenShift environment to trigger automations, you can ensure compliance for all of your VMs, regardless of deployment method.

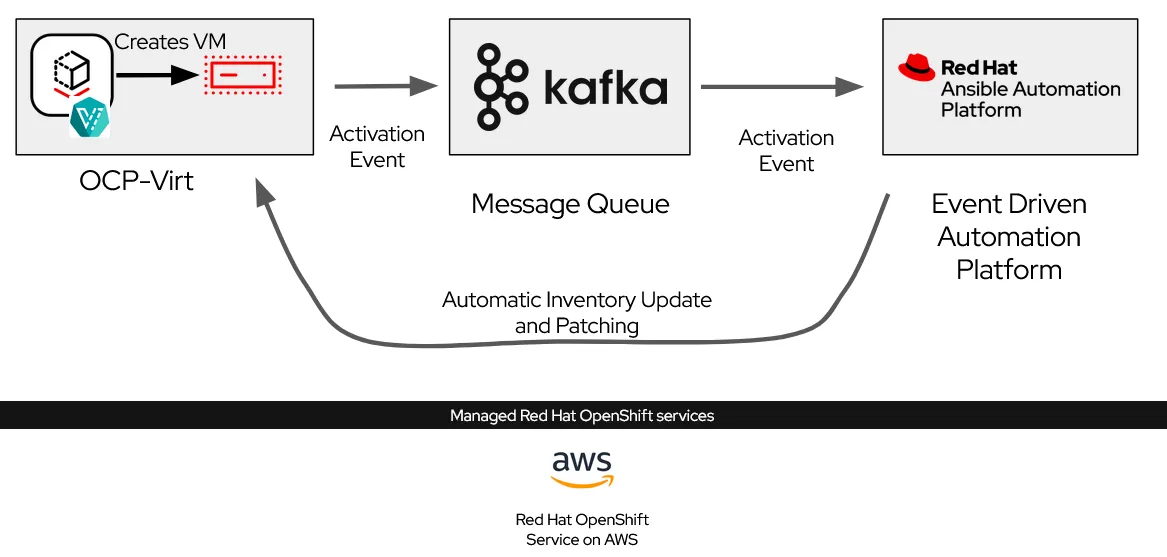

In the architecture shown above, state messages are forwarded from the OpenShift Virtualization environment in the OpenShift cluster to a Kafka topic, with each message sent as an individual event. An Event-Driven Ansible rulebook subscribes to the same Kafka topic and applies business rules to each event to determine whether it indicates the creation of a virtual machine. When this condition is met, Event-Driven Ansible triggers an automation workflow within Ansible Automation Platform.
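
The rule evaluation described here can be sketched in a few lines. Real Event-Driven Ansible rulebooks are written in YAML, so the Python below only illustrates the logic of matching and triggering. The event fields (`kind`, `action`, `name`) are hypothetical and do not reflect the actual OpenShift Virtualization message format, and a plain list stands in for the Kafka topic.

```python
def is_vm_creation(event: dict) -> bool:
    """Hypothetical business rule: the event reports a newly created VM."""
    return event.get("kind") == "VirtualMachine" and event.get("action") == "created"


def process(events, launch) -> int:
    """Apply the rule to each event and launch the workflow on every match."""
    matches = 0
    for event in events:
        if is_vm_creation(event):
            launch(event)
            matches += 1
    return matches


# A list stands in for the stream of events on the Kafka topic
topic = [
    {"kind": "VirtualMachine", "action": "created", "name": "vm-a"},
    {"kind": "VirtualMachine", "action": "stopped", "name": "vm-b"},
    {"kind": "Pod", "action": "created", "name": "web-1"},
]

launched = []  # records which events would have triggered the workflow
count = process(topic, launched.append)
```

Only the first event satisfies the rule, so the workflow would be launched once, for `vm-a`.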

The Ansible Automation Platform workflow triggered by the event can take any number of steps based on user requirements. These might include:

- Refresh an Ansible Automation Platform dynamic inventory so the new VM is managed by any ongoing automation schedules (for security or compliance scanning or scheduled patching, for example)

- Register the new VM with a CMDB in an Information Technology Service Management (ITSM) tool, such as ServiceNow

- Automatically run a patching task on the new VM so it's brought up to the latest patch level, regardless of the age of the image used to start the VM
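
The steps above can be thought of as an ordered list of jobs that each receive the new VM. The following sketch models that with stub functions. The function names and return strings are placeholders invented for this example, and a real workflow would call Ansible Automation Platform job templates instead.

```python
# Each step is a stub standing in for a real automation job.
def refresh_inventory(vm: str) -> str:
    return f"inventory refreshed to include {vm}"


def register_in_cmdb(vm: str) -> str:
    return f"{vm} registered in CMDB"


def patch_vm(vm: str) -> str:
    return f"{vm} patched to latest level"


WORKFLOW = [refresh_inventory, register_in_cmdb, patch_vm]


def run_workflow(vm: str, steps=WORKFLOW) -> list:
    """Run every step in order for the new VM and collect the results."""
    return [step(vm) for step in steps]


results = run_workflow("vm-a")
```

Keeping the steps in a list makes it easy to add, remove, or reorder them as user requirements change.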

The advantages of using an event-driven architecture for this process include:

- The event sources may change. For example, the VM creation events may be generated by another monitoring application

- Multiple event sources can feed the same automations. For example, additional events may be used to trigger automations when security monitoring tools detect connections to the running VMs

- Multiple systems can respond to a single event. For example, VM creation events may also be monitored by a Security Information and Event Management (SIEM) tool

- The event sinks may change. For example, a different event-driven automation tool may be swapped in without reconfiguring the event sources. In fact, a rolling transition between tools can take place without any change to the sources

Use case: Automated security remediation

When dealing with security-related incidents, you often want to take some immediate action to solve a problem, potentially with some sort of isolation so a post-mortem investigation can take place. Again, an event-driven architecture lends itself to this scenario.

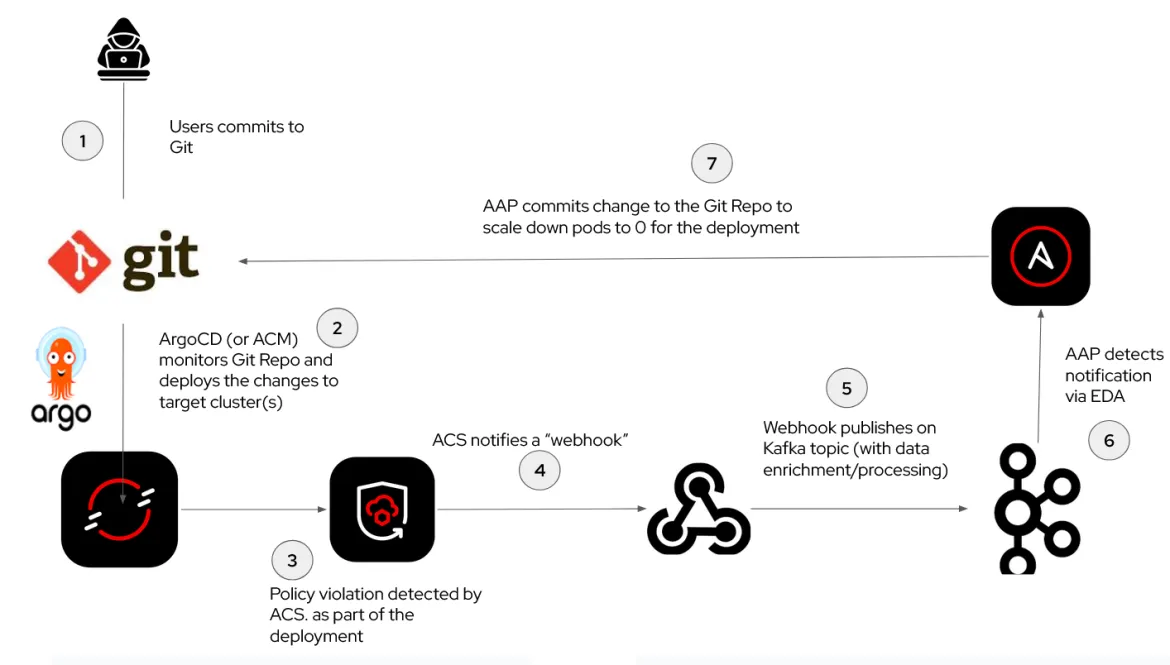

Consider the architecture shown above, which uses an event-driven system to respond to security events.

As with the previous use case, a Kafka topic brokers sources and sinks of events. This time, the source of events is a Kubernetes security monitoring tool, Red Hat Advanced Cluster Security for Kubernetes. With Red Hat Advanced Cluster Security, you can configure compliance rules to detect anomalous workloads running in a Kubernetes environment, and then send an event with details of the breach to a Kafka topic. Event-Driven Ansible reads the event and, if the event matches a rule pattern, triggers an automation play to remediate the security incident.

For example, a compliance rule may be created to detect a Kubernetes deployment that uses incorrect or invalid labels. The non-compliance event is sent to the Kafka topic. Event-Driven Ansible receives the event, whose payload includes details about the workload, such as the deployment name and namespace. An Ansible job is launched in Ansible Automation Platform, which connects to the OpenShift API and scales the deployment down to zero. This prevents a malicious workload from executing, but leaves the deployment in place for further investigation. The Ansible job can also raise a ServiceNow incident, and post a notification message to a security operations centre team for review.
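
To make the remediation step more concrete, the sketch below builds the request that scales a deployment to zero through the Kubernetes scale subresource. In the scenario described, an Ansible job performs this action, so the Python here only illustrates the shape of the underlying API call and sends nothing. The event field names (`deployment`, `namespace`) and the sample values are assumptions, not the actual Red Hat Advanced Cluster Security payload.

```python
import json


def build_scale_to_zero_request(event: dict) -> dict:
    """Describe a PATCH against the deployment's scale subresource (not sent here)."""
    namespace = event["namespace"]
    name = event["deployment"]
    return {
        "method": "PATCH",
        "path": f"/apis/apps/v1/namespaces/{namespace}/deployments/{name}/scale",
        "headers": {"Content-Type": "application/merge-patch+json"},
        # Zero replicas stops the pods but keeps the deployment object itself
        "body": json.dumps({"spec": {"replicas": 0}}),
    }


# Hypothetical non-compliance event read from the Kafka topic
violation = {"deployment": "suspicious-app", "namespace": "team-a"}
request = build_scale_to_zero_request(violation)
```

Scaling to zero rather than deleting leaves the deployment definition available for the post-mortem investigation.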

Conclusion

In this article, you've seen the benefits of adopting an event-driven architecture, and two concrete examples of where this can benefit platform designs in the automation and security domains.

For more information, watch full demos of the two use cases described above here:

Further details on how these demos were deployed can be found in the following git repositories:


About the authors

Simon Delord is a Solution Architect at Red Hat. He works with enterprises on their container/Kubernetes practices and driving business value from open source technology. The majority of his time is spent on introducing OpenShift Container Platform (OCP) to teams and helping break down silos to create cultures of collaboration. Prior to Red Hat, Simon worked with many Telco carriers and vendors in Europe and APAC specializing in networking, data-centres and hybrid cloud architectures. Simon is also a regular speaker at public conferences and has co-authored multiple RFCs in the IETF and other standard bodies.

Derek Waters is an experienced technology professional with over 25 years of experience in software development, solution architecture and team leadership. He has worked on IT projects as diverse as mine clearance simulations, TV ratings prediction, pathology lab information management and highly secure information management systems. He brings an entire career of scars from doing things the wrong way to his role as a Solution Architect at Red Hat helping people do them the right way instead.
