Introduction

When developing a machine learning (ML) workflow in a local environment such as a laptop or desktop, practitioners are free to install and test any tools they like. This freedom causes environment problems later, when the work has to be shared with or delivered to other team members who may not have all the packages needed to run the workflow. This article applies to ML projects written in Python. Other languages that are common for ML workflows, such as R and Scala, may not see this issue.

A common solution is to set up a virtual environment for the Python project, so that all dependencies are captured in a file and the environment can be delivered as part of the project. On OpenShift this approach becomes even more relevant, because the environment is immutable and has to be rebuilt every time it runs. The development environment on OpenShift therefore needs to support installing and testing packages at runtime, so that practitioners get an experience similar to working locally.
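As a minimal sketch of the "dependencies captured in a file" idea, a teammate could compare a shared requirements file against what is installed in their own environment. The helper names below are hypothetical, and the parser is an assumption that only handles simple lines such as `torch==1.8.0`; it is not a full implementation of pip's requirements format.

```python
import re
from importlib import metadata


def parse_requirement_names(text):
    """Return the distribution names listed in requirements-style text.

    Only simple lines such as ``torch==1.8.0`` or ``scikit-learn>=0.24`` are
    handled. Comments, blank lines, and pip options (lines starting with
    ``-``) are skipped.
    """
    names = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if not line or line.startswith("-"):
            continue
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.append(match.group(0))
    return names


def missing_packages(names):
    """Return the names that are not installed in the current environment."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing
```

For example, reading a `requirements.txt` that ships with the project and passing its contents through `parse_requirement_names` and then `missing_packages` lists what still has to be installed before the workflow can run.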

Red Hat CodeReady Workspaces (CRW) is a development environment native to OpenShift for developers who want an IDE experience on the platform. It is a strong option for any ML practitioner who prefers to work in an IDE such as PyCharm or Visual Studio Code. However, the out-of-the-box CRW configuration limits how freely the practitioner can try out different tools and run a workflow.

The configuration steps in this article were tested by running ML workflows with TensorFlow, PyTorch, and scikit-learn.

Configuration

Expanding Sidecar Memory and Waiting Time for PIP Installation

When starting from an empty Python environment on CRW, every package and library the workflow requires has to be installed. Depending on the size of the packages, the default sidecar memory limit (128 MB) that supports the installation is sometimes not enough:

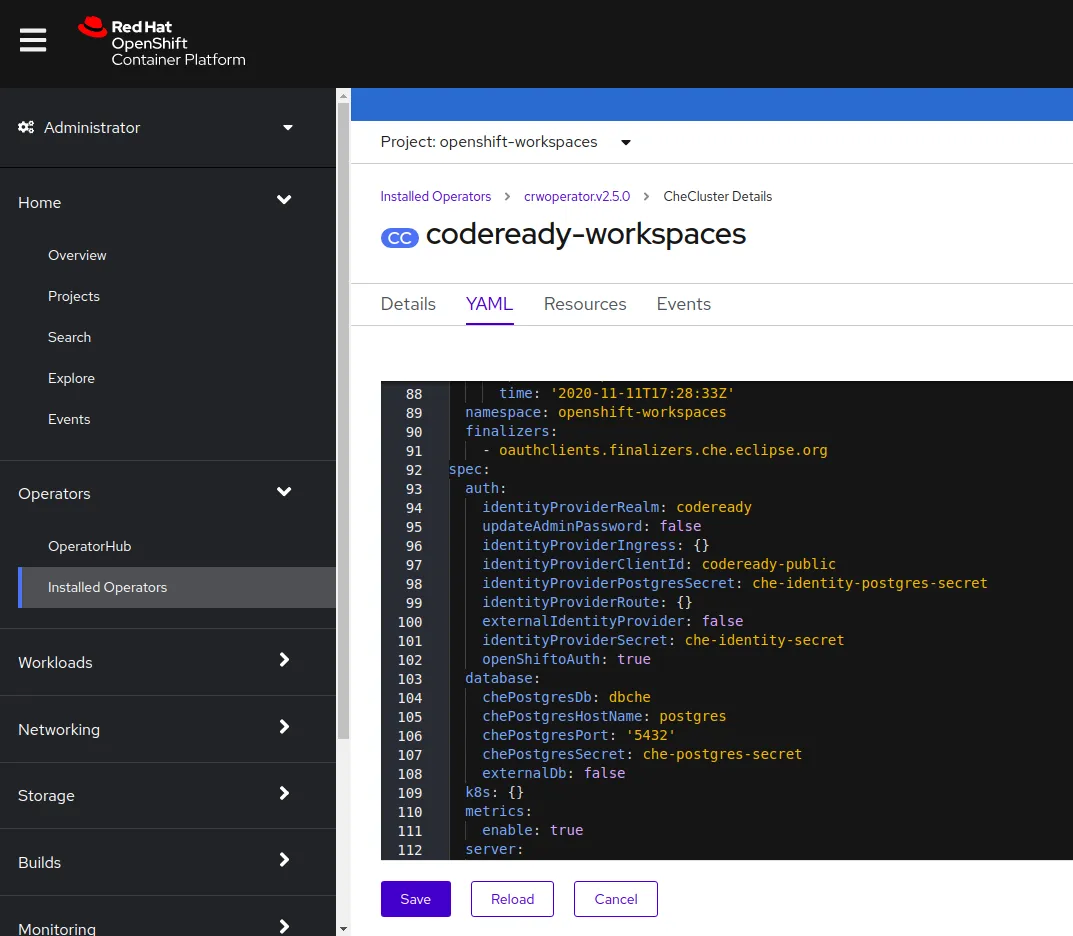

From the OpenShift Console in the browser, the user can access “RedHat CodeReady Workspaces” in the “OpenShift Workspaces” project.

In the YAML definition of the deployed cluster, the user can add the following properties:

    spec:
      ...
      server:
        ...
        customCheProperties:
          CHE_WORKSPACE_ACTIVITY__CHECK__SCHEDULER__PERIOD__S: '14400'
          CHE_WORKSPACE_ACTIVITY__CLEANUP__SCHEDULER__PERIOD__S: '14400'
          CHE_WORKSPACE_SIDECAR_DEFAULT__MEMORY__LIMIT__MB: '2048'
        ...
      ...

- The “CHE_WORKSPACE_ACTIVITY__CHECK__SCHEDULER__PERIOD__S” and “CHE_WORKSPACE_ACTIVITY__CLEANUP__SCHEDULER__PERIOD__S” properties keep an opened workspace and its state alive for 14400 seconds (4 hours), even if the developer does not interact with it during that time. If there is still no activity in the workspace after this period, the CRW controller shuts the workspace down and releases its resources back to the OpenShift resource pool.

- The “CHE_WORKSPACE_SIDECAR_DEFAULT__MEMORY__LIMIT__MB” property raises the memory limit available to the pip installation process. One of the required packages is PyTorch, which needs more than 780 MB of cache memory during installation, so the default 128 MB limit is not enough for pip to complete.
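One way to confirm that a new memory limit has actually reached a container is to read the limit from inside it. The sketch below assumes a Linux container that exposes the standard cgroup files; which of the two paths exists depends on the cgroup version of the cluster nodes. The function names are hypothetical.

```python
from pathlib import Path

# Standard cgroup locations for the memory limit of the current container.
CGROUP_V2_LIMIT = Path("/sys/fs/cgroup/memory.max")
CGROUP_V1_LIMIT = Path("/sys/fs/cgroup/memory/memory.limit_in_bytes")


def parse_limit_mb(raw):
    """Convert a raw cgroup value in bytes to MiB; return None when unlimited.

    cgroup v2 writes the literal string "max" when no limit is set.
    cgroup v1 writes a very large number instead, which is returned as-is.
    """
    value = raw.strip()
    if value == "max":
        return None
    return int(value) // (1024 * 1024)


def container_memory_limit_mb():
    """Return the container memory limit in MiB, or None if unknown or unlimited."""
    for path in (CGROUP_V2_LIMIT, CGROUP_V1_LIMIT):
        if path.exists():
            return parse_limit_mb(path.read_text())
    return None
```

Calling `container_memory_limit_mb()` from a terminal in the workspace before running pip should report a value close to the configured limit if the property took effect.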

For more information about CRW properties, see the official CRW documentation.

Expanding Build and Runtime Container Memory Limit

Traditionally, practitioners run their ML workflows on a local machine with modern hardware specifications. Resources may not become an issue until the workload moves to a constrained environment such as a container. CRW runs the IDE through five different containers within one pod, each responsible for different components of the IDE:

- Tools
  - Editor
  - Plug-ins
  - Commands
  - VCS
- Build
  - Dependency Management
  - Compilers
  - Build Configuration
- Runtime
  - Runtime Dependencies
  - Environment Variables
  - Common Libraries
- Test
  - Testing Tools
  - Target Environment
  - Logging Services
- Debug
  - Debugger
  - Debug Configuration
  - Environment Variables

The ‘Build’ container is the main environment in which the workflow runs. Depending on its complexity, the workflow may go through several transformation, testing, and training steps using PyTorch or TensorFlow to create different models. The default 512 MB memory limit may not be enough for this intensive process.

In CRW, a devfile is a template that captures all the configuration of a workspace the practitioner works with. The devfile below describes a basic environment for a machine learning project using the default Python base image that comes with CRW:

    metadata:
      generateName: ml-workflow-
    projects:
      - name: ml-workflow
        source:
          location: 'http://<GIT_USERNAME>:<GIT_PASSWORD>@<GIT_URL>'
          type: git
          branch: master
    components:
      - id: ms-python/python/latest
        memoryLimit: 10Gi
        cpuLimit: 1
        preferences:
          python.globalModuleInstallation: true
        type: chePlugin
      - mountSources: true
        memoryLimit: 10Gi
        cpuLimit: 1
        type: dockerimage
        image: registry.redhat.io/codeready-workspaces/plugin-java8-rhel8@sha256:bf9c2de38c7f75654d6d9789fb22215f55fef0787ef6fd59b354f95883cf5e95
        alias: python
    apiVersion: 1.0.0
    commands:
      - name: 1. Run
        actions:
          - workdir: '${CHE_PROJECTS_ROOT}/<PROJECT_FOLDER>'
            type: exec
            command: python run.py
            component: python
      - name: Debug current file
        actions:
          - referenceContent: |
              {
                "version": "0.2.0",
                "configurations": [
                  {
                    "name": "Python: Current File",
                    "type": "python",
                    "request": "launch",
                    "program": "${file}",
                    "console": "internalConsole"
                  }
                ]
              }
            type: vscode-launch

The memoryLimit and cpuLimit properties are applied to the runtime and build containers. They allow each running container to use up to 10 GiB of memory and up to 1 CPU core. Depending on the complexity of the ML workload, these values may or may not be enough for it to complete. If the memory limit is too low, the workload process will be killed while it is running.
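To judge whether a given memoryLimit leaves enough headroom, it can help to compare the peak memory of a trial run against the configured value. The sketch below is an illustration under stated assumptions: the helper names are hypothetical, the quantity parser covers only the binary suffixes (`Ki`, `Mi`, `Gi`, `Ti`) rather than the full Kubernetes quantity syntax, and the `resource` module is available only on Unix-like systems.

```python
import resource
import sys

# Binary suffixes as used in values such as "10Gi".
_UNITS = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3, "Ti": 1024 ** 4}


def parse_memory_quantity(quantity):
    """Convert a quantity such as '10Gi' or '512Mi' to bytes.

    A value without a suffix is treated as a plain number of bytes.
    """
    for suffix, factor in _UNITS.items():
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * factor)
    return int(quantity)


def peak_memory_mib():
    """Return the peak resident memory of this process in MiB."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # Linux reports ru_maxrss in kilobytes, macOS reports it in bytes.
    divisor = 1024 * 1024 if sys.platform == "darwin" else 1024
    return peak / divisor


def usage_ratio(memory_limit):
    """Return peak memory as a fraction of the given limit, e.g. '10Gi'."""
    peak_bytes = peak_memory_mib() * 1024 * 1024
    return peak_bytes / parse_memory_quantity(memory_limit)
```

Printing `usage_ratio("10Gi")` at the end of a training script run on a smaller data sample gives a rough indication of how close the process came to the limit. A ratio approaching 1.0 suggests the full workload is at risk of being killed.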

More configuration information can be found in the official Red Hat CodeReady Workspaces documentation.

Conclusion

This article described the steps that ML practitioners need to take to run an ML workflow on Red Hat CodeReady Workspaces (CRW). The setup is intended only for the development process, since the environment ties up a lot of resources to run a single workflow.

This configuration also limits the number of workspaces that can run on the OpenShift cluster. If each user requires a large amount of memory and CPU per workspace, the number of active workspaces is bounded by the capacity of the cluster. The best practice in this scenario is to configure only the resource limits and not the resource requests. This allows each container to burst up to its limit only when it needs to.

For production ML workloads on OpenShift, the practitioner will need to look at pipeline technologies such as Kubeflow Pipelines, Argo CD, or Tekton to optimize and automate operations.
