Red Hat OpenShift Operators are an extension mechanism of Red Hat OpenShift. They automate custom workflows around native OpenShift resources by defining custom resources and implementing custom logic for them; the platform then treats these resources as first-class citizens. This article covers the basic concepts of Operators and provides an example in Go using the Operator SDK. The example implements a realistic use case: an application deployment that is updated when a native OpenShift configmap resource is modified.

What is an Operator?

Operators extend the Kubernetes API, so understanding them starts with the Kubernetes container orchestration platform itself. The Kubernetes architecture is based on a declarative programming paradigm: you declare the desired state of the system, and a logical component called the controller ensures that the actual state matches it. In other words, the system observes, analyzes, and updates the current state in a continuous loop to match the desired state. Several architectural elements combine to implement this pattern. The control plane of OpenShift and the node agents that work with it include:

- API Server: This is the central nervous system of the control plane. It manages the platform logic through REST APIs exposed to applications and clients such as the CLI tool, and all elements of the platform interact with it through these APIs. The API server also provides extension points for adding APIs that manage user-defined resources, which address application management requirements unique to your environment.

- Scheduler: Schedules pod resources on the nodes.

- Kubelet: An agent running on each node that deploys a container using the container runtime.

- kube-proxy: An agent running on each node that configures the node's network settings.

- etcd database: Persistent storage for the cluster state, including the resources. It can only be accessed by the API server.

Note: Both kube-proxy and the kubelet communicate with the API server.

The Operator is a user-implemented controller that watches a user-defined state (a custom resource) and implements custom logic, which usually involves acting on (creating, updating, or deleting) native OpenShift resources such as pods, deployments, services, routes, secrets, configmaps, and nodes. The Operator involves the following parts:

- Custom Resource Definition (CRD): This defines the state that the logic of the Operator is interested in. Defining a CRD effectively adds an endpoint to the API server for that custom resource. What can be captured as state is limited only by imagination and the requirements of the specific use case. The only constraint is that the state be captured in the format described by the schema requirements of the CRD. You rarely have to handwrite the CRD manifests, as tools like the Operator SDK can generate them from code.

- Custom Resource (CR): This is a specific resource for a particular application and is based on a CRD. A CR is to a CRD what a container is to an image. There is a one-to-many relationship between a CRD and its CRs, as numerous CRs can be created from the same CRD.

- Controller: This is where the logic of the Operator is implemented. The controller watches for events related to a specific CR and runs its reconciliation logic in response to them. The controller is deployed as a container on OpenShift and, like any container, is created from an image.

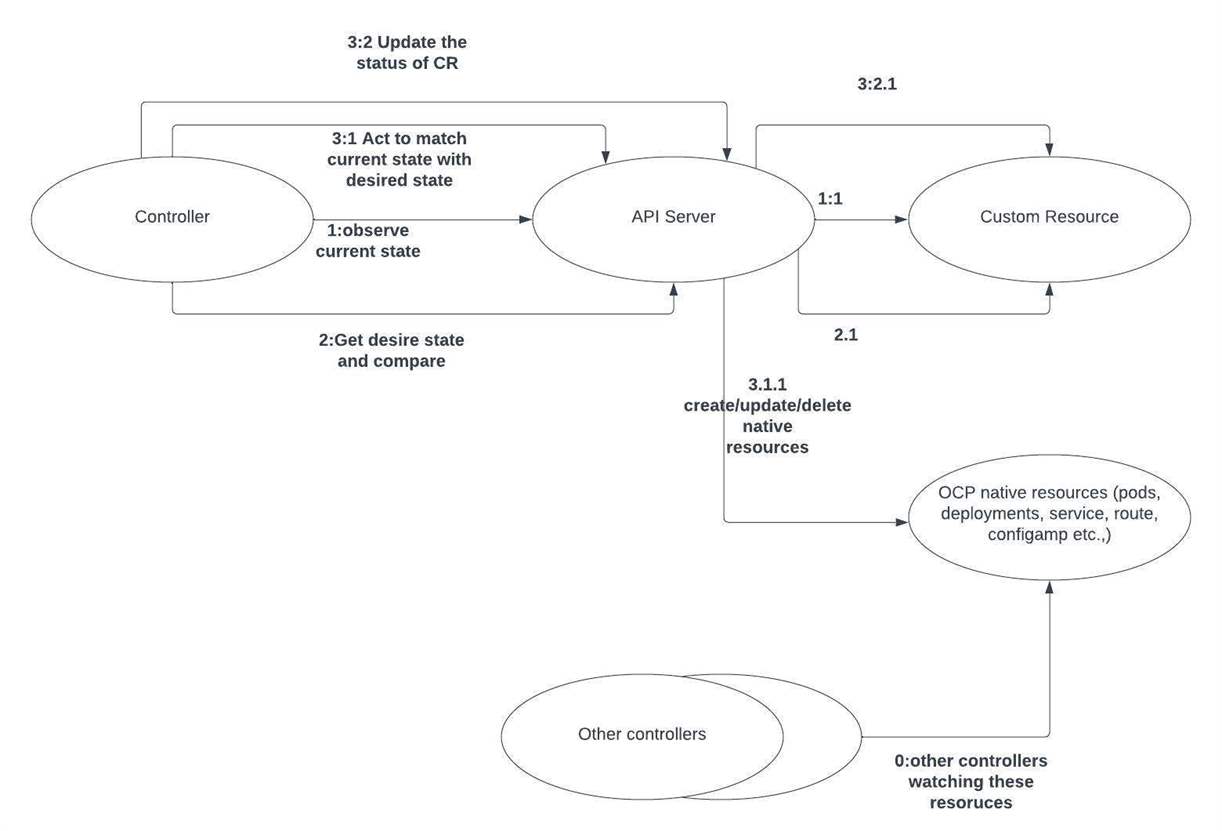

The logical parts of the Operator pattern are schematically presented below:

As the diagram shows, the Operator is based on an observe-analyze-act pattern.
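To make the observe-analyze-act pattern concrete, here is a minimal sketch in plain Go. It is not Operator SDK code: the State type, the replica counter, and the reconcile and converge functions are hypothetical names chosen for illustration, and the "cluster" is just a value in memory.

```go
package main

import "fmt"

// State is a deliberately tiny stand-in for cluster state:
// the number of replicas of a single workload (an assumption
// made for this sketch, not a Kubernetes type).
type State struct {
	Replicas int
}

// reconcile performs one observe-analyze-act pass. It compares the
// actual state with the desired state and returns the corrected
// actual state plus a description of the action taken.
func reconcile(desired, actual State) (State, string) {
	switch {
	case actual.Replicas < desired.Replicas:
		actual.Replicas++ // act: create one missing replica
		return actual, "created replica"
	case actual.Replicas > desired.Replicas:
		actual.Replicas-- // act: remove one surplus replica
		return actual, "deleted replica"
	default:
		return actual, "in sync"
	}
}

// converge calls reconcile until the two states match and returns
// the final state and the number of corrective actions taken.
func converge(desired, actual State) (State, int) {
	steps := 0
	for {
		next, action := reconcile(desired, actual)
		if action == "in sync" {
			return next, steps
		}
		actual = next
		steps++
	}
}

func main() {
	final, steps := converge(State{Replicas: 3}, State{Replicas: 1})
	fmt.Printf("replicas=%d after %d actions\n", final.Replicas, steps)
	// prints: replicas=3 after 2 actions
}
```

A real controller does not loop on its own like converge does; it is invoked whenever a watched resource changes. The comparison of desired and actual state inside each pass is the part that carries over.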

Implementation example

The code for the example implementation using the Operator SDK and instructions on how to run it are provided in this GitHub repo.

This example is a realistic scenario that calls for automation best implemented with an Operator. It is a web application that uses a configmap mounted in its container. All is fine until you modify the configmap: OpenShift has no native mechanism that restarts the application when a configmap it uses is modified, so the application must be restarted manually to pick up the change. In this example, the CRD is defined with a configmap name and a map of labels that selects the pods to delete when the configmap is modified.

I'll demonstrate the steps to create the Operator code below.

Prerequisites

The following tools are required to implement this Operator locally and deploy it on OpenShift:

- OpenShift local installation

- Operator-sdk for Golang

- Podman installation, with the PATH configured for it

Bootstrap Operator code using the Operator SDK

The Operator SDK can bootstrap the Operator project, providing templates and code for the basic functionality.

To bootstrap the project, create a directory on the local machine and then cd to it. Run the following command from that directory:

operator-sdk init --project-name configwatcher-go-operator --domain github.com --repo github.com/hsaid4327/configwatcher-go-operator

Note that --project-name, --domain, and --repo are specific to your project.

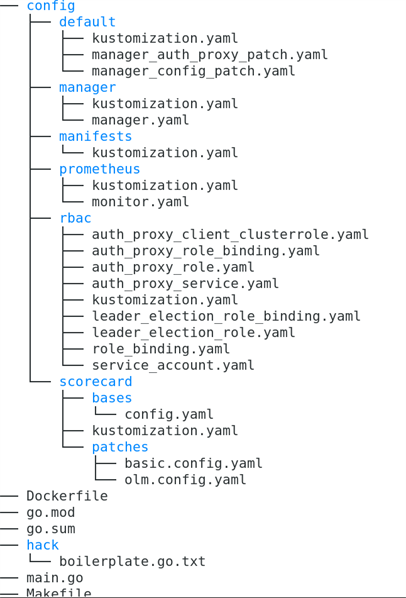

The file structure created is:

The config/default/kustomization.yaml file sets the default namespace for the OpenShift project, derived from the project name. The go.mod file contains the module path based on the --repo flag. The Dockerfile contains the instructions to build the controller image. The main.go file is the entry point: it starts the manager that loads and runs the controller logic. You don't need to modify any files yet. At this point, you have the manager (main.go) to run, but it has no controller logic linked to it. If you want to run the manager locally, you can do it by running this command:

make run

Note: Make sure you are logged on to your local cluster before running this command.

Run the following command to implement the skeleton logic for the controller:

operator-sdk create api --group tutorials --version v1 --kind ConfigWatcher --resource --controller

Here is a brief description of the domain, group, and kind settings:

- kind: The name of the resource. For example, ConfigWatcher.

- group: The combination of <group>.<domain>. For example, tutorials.github.com.

- CRD name: The lowercase plural of the kind followed by the full group, <plural>.<group>.<domain>. For example, configwatchers.tutorials.github.com.
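The naming rules in this list can be expressed as two small helper functions. This is only an illustrative sketch: apiGroup and crdName are hypothetical names, and the plural is formed by appending "s" to the lowercased kind, a simplification that happens to be right for ConfigWatcher but is not how the tooling pluralizes irregular nouns.

```go
package main

import (
	"fmt"
	"strings"
)

// apiGroup joins the --group and --domain values into the full API group.
func apiGroup(group, domain string) string {
	return group + "." + domain
}

// crdName builds "<plural>.<group>.<domain>". The plural is formed by
// lowercasing the kind and appending "s" (an assumption that only
// holds for regular nouns such as ConfigWatcher).
func crdName(kind, group, domain string) string {
	plural := strings.ToLower(kind) + "s"
	return plural + "." + apiGroup(group, domain)
}

func main() {
	fmt.Println(apiGroup("tutorials", "github.com"))
	// prints: tutorials.github.com
	fmt.Println(crdName("ConfigWatcher", "tutorials", "github.com"))
	// prints: configwatchers.tutorials.github.com
}
```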

Create manifests for CRD

In this step, you can modify the created files with your custom state and create CRD manifests and sample CR resource files. The file to modify is: api/v1/configwatcher_types.go. Modify this file to add the following:

Configwatcher_types.go

    type ConfigWatcherSpec struct {
        // Name of the ConfigMap to monitor for changes
        ConfigMap string `json:"configMap,omitempty"`

        // PodSelector defines the label selector for the pods to delete,
        // if the given configmap changes
        PodSelector map[string]string `json:"podSelector,omitempty"`
    }
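The json struct tags determine the field names that appear in the CR's spec section. The following standalone sketch uses only the standard library to show that mapping; it repeats the two spec fields so that it compiles on its own, and the encode helper is a hypothetical name introduced for the example.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ConfigWatcherSpec repeats the two spec fields so that this
// sketch is self-contained.
type ConfigWatcherSpec struct {
	ConfigMap   string            `json:"configMap,omitempty"`
	PodSelector map[string]string `json:"podSelector,omitempty"`
}

// encode returns the JSON form of a spec, using the names
// given in the struct tags.
func encode(spec ConfigWatcherSpec) string {
	b, err := json.Marshal(spec)
	if err != nil {
		return "error: " + err.Error()
	}
	return string(b)
}

func main() {
	full := ConfigWatcherSpec{
		ConfigMap:   "webapp-config",
		PodSelector: map[string]string{"app": "webapp"},
	}
	fmt.Println(encode(full))
	// prints: {"configMap":"webapp-config","podSelector":{"app":"webapp"}}

	// With omitempty, unset fields are left out entirely.
	fmt.Println(encode(ConfigWatcherSpec{}))
	// prints: {}
}
```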

To create manifests, type:

make manifests

The manifest file is created at this location:

config/crd/bases/tutorials.github.com_configwatchers.yaml

Implement controller logic

The controller logic is implemented in the reconcile loop in the file controllers/configwatcher_controller.go.

You can copy the file from this GitHub repository.

There are the following important things to note about the file:

1. Since the controller needs to delete pods and watch configmaps, the corresponding permissions must be added to the role that is generated when you run make manifests. This is done with the following RBAC markers:

    //+kubebuilder:rbac:groups=core,resources=pods,verbs=get;list;watch;delete;deletecollection
    //+kubebuilder:rbac:groups=core,resources=configmaps,verbs=get;list;watch

2. Since the controller needs to watch for events when the configmap associated with the CR is modified, it requires the following initialization step:

    func (r *ConfigWatcherReconciler) SetupWithManager(mgr ctrl.Manager) error {
        return ctrl.NewControllerManagedBy(mgr).
            For(&tutorialsv1.ConfigWatcher{}).
            Watches(
                &source.Kind{Type: &corev1.ConfigMap{}},
                handler.EnqueueRequestsFromMapFunc(r.findCrWithReferenceToResource),
                builder.WithPredicates(predicate.ResourceVersionChangedPredicate{}),
            ).
            Complete(r)
    }
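The watch setup relies on two ideas: a predicate that lets an update event through only when the resource version has changed, and a map function that turns a changed configmap into reconcile requests for the CRs that reference it. The sketch below models both with plain Go types. It does not use controller-runtime, and it is not the repository's findCrWithReferenceToResource implementation: Object, Watcher, Request, resourceVersionChanged, and requestsFor are hypothetical names, and matching only within the configmap's namespace is an assumption.

```go
package main

import "fmt"

// Object is a hypothetical, minimal stand-in for a Kubernetes object.
type Object struct {
	Namespace       string
	Name            string
	ResourceVersion string
}

// Watcher is a simplified ConfigWatcher CR: its identity plus the
// configmap name taken from spec.configMap.
type Watcher struct {
	Namespace string
	Name      string
	ConfigMap string
}

// Request identifies one CR that should be reconciled.
type Request struct {
	Namespace string
	Name      string
}

// resourceVersionChanged models the predicate idea: an update event
// passes only when the resource version differs between old and new.
func resourceVersionChanged(oldObj, newObj Object) bool {
	return oldObj.ResourceVersion != newObj.ResourceVersion
}

// requestsFor maps a changed configmap to one request per Watcher
// that references it. Restricting the match to the same namespace
// is an assumption of this sketch.
func requestsFor(cm Object, watchers []Watcher) []Request {
	var reqs []Request
	for _, w := range watchers {
		if w.Namespace == cm.Namespace && w.ConfigMap == cm.Name {
			reqs = append(reqs, Request{Namespace: w.Namespace, Name: w.Name})
		}
	}
	return reqs
}

func main() {
	oldCM := Object{Namespace: "demo", Name: "webapp-config", ResourceVersion: "1"}
	newCM := Object{Namespace: "demo", Name: "webapp-config", ResourceVersion: "2"}
	watchers := []Watcher{
		{Namespace: "demo", Name: "configwatcher-sample", ConfigMap: "webapp-config"},
		{Namespace: "demo", Name: "other-watcher", ConfigMap: "other-config"},
	}
	if resourceVersionChanged(oldCM, newCM) {
		fmt.Println(requestsFor(newCM, watchers))
		// prints: [{demo configwatcher-sample}]
	}
}
```

Each returned request leads to one run of the reconcile loop for that CR, which is where the pods selected by the CR are deleted.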

Build and push the controller image to a registry

Pushing the image to a registry requires the following steps:

- Use Podman to log in to the registry where you want to push the image, such as quay.io.

- Modify the Makefile to replace docker with podman in the docker-build and docker-push targets.

- Build and push the controller image to the registry:

make docker-build docker-push IMG=quay.io/<repo>/<image-name>:<image-tag>

Deploy the Operator on OpenShift

To deploy and test the Operator in an OpenShift project other than the default one, create the project in OpenShift, set up a secret to access the registry that holds the controller image, and modify the auto-generated service account manifest to use that secret:

oc new-project <your-project-name>

Next, modify the file config/default/kustomization.yaml to change the project name to the one created in the previous step.

Create a secret with your credentials to access the registry:

oc create secret docker-registry my-secret --docker-server=quay.io --docker-username=<uname> --docker-password=<password> --docker-email=<email>

Install the CRD on OpenShift. Note that CRD is a non-namespaced resource:

make install

Deploy the controller on the targeted namespace:

make deploy IMG=quay.io/<controller-image>

Create a CR from the sample file. You might have to modify the sample file config/samples/tutorials_v1_configwatcher.yaml to add the fields for the state:

    apiVersion: tutorials.github.com/v1
    kind: ConfigWatcher
    metadata:
      labels:
        app.kubernetes.io/name: configwatcher
        app.kubernetes.io/instance: configwatcher-sample
        app.kubernetes.io/part-of: configwatcher-go-operator
        app.kubernetes.io/managed-by: kustomize
        app.kubernetes.io/created-by: configwatcher-go-operator
      name: configwatcher-sample
    spec:
      configMap: webapp-config
      podSelector:
        app: webapp

Note that the configMap field points to the name of the configmap the controller is watching, and the podSelector field has a label for the pods to be deleted when the configmap is modified:

oc apply -f config/samples/tutorials_v1_configwatcher.yaml
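The podSelector field is a plain map of labels, so selecting the pods to delete comes down to checking that a pod carries every key/value pair in that map. The sketch below shows this check with the standard library only. Pod, matches, and podsToDelete are hypothetical names, the pod list is hard-coded instead of being read from the cluster, and the real controller would go through the Kubernetes client instead.

```go
package main

import "fmt"

// Pod is a hypothetical, minimal stand-in for a Kubernetes pod.
type Pod struct {
	Name   string
	Labels map[string]string
}

// matches reports whether labels contains every key/value pair of
// selector. Note that an empty selector matches every pod here.
func matches(selector, labels map[string]string) bool {
	for k, v := range selector {
		if got, ok := labels[k]; !ok || got != v {
			return false
		}
	}
	return true
}

// podsToDelete returns the names of the pods selected by selector,
// in the order they were given.
func podsToDelete(selector map[string]string, pods []Pod) []string {
	var names []string
	for _, p := range pods {
		if matches(selector, p.Labels) {
			names = append(names, p.Name)
		}
	}
	return names
}

func main() {
	pods := []Pod{
		{Name: "webapp-1", Labels: map[string]string{"app": "webapp"}},
		{Name: "webapp-2", Labels: map[string]string{"app": "webapp", "tier": "frontend"}},
		{Name: "db-1", Labels: map[string]string{"app": "db"}},
	}
	fmt.Println(podsToDelete(map[string]string{"app": "webapp"}, pods))
	// prints: [webapp-1 webapp-2]
}
```

Extra labels on a pod do not prevent a match; only the pairs listed in the selector are checked.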

Test the Operator by deploying a web application

The manifest to deploy a basic web application with a configmap and service is provided in the extra folder in the project repo. It is reproduced here. You can save the following as a YAML manifest file and then apply it with oc apply -f <manifest-file>:

    # ConfigMap which holds the value of the data to serve by
    # the webapp
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: webapp-config
    data:
      message: "Welcome to Kubernetes Patterns !"
    ---
    # Deployment for a super simple HTTP server which
    # serves the value of an environment variable to the browser.
    # The env-var is picked up from a config map
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: webapp
      labels:
        app: webapp
    spec:
      selector:
        matchLabels:
          app: webapp
      template:
        metadata:
          labels:
            app: webapp
        spec:
          containers:
          - name: app
            image: k8spatterns/mini-http-server
            ports:
            - containerPort: 8080
            env:
            # Message to print is taken from the ConfigMap as env var.
            # Note that changes to the ConfigMap require a restart of the Pod
            - name: MESSAGE
              valueFrom:
                configMapKeyRef:
                  name: webapp-config
                  key: message
    ---
    # Service for accessing the web server via port 8080
    apiVersion: v1
    kind: Service
    metadata:
      name: webapp
    spec:
      ports:
      - name: http
        port: 8080
        protocol: TCP
        targetPort: 8080
      selector:
        app: webapp
      type: ClusterIP

You can expose the service to create a route for the web app and then send a request to it:

oc expose svc/<svc-name>

curl http://$(oc get route -o jsonpath='{.items[0].spec.host}')

The response shows the message from the unmodified configmap.

Now modify the configmap:

oc patch configmap webapp-config -p '{"data":{"message":"Greets from your smooth operator!"}}'

This command triggers the controller logic: the controller deletes the web application pods, and the replacement pods created by the deployment pick up the updated configmap. Access the route again to confirm that the change to the configmap is reflected in the application.

Wrap up

Operators are a critical component of OpenShift, allowing administrators to automate workflows. This article covered the key concepts surrounding Operators and provided a realistic use case involving a web app deployment as an example.

Resources

OpenShift Operators are a complex subject with many moving parts. Below is a list of links and resources that can be useful in understanding the Operator ecosystem and tooling:

- Go documentation for the controller runtime used to build controllers/operators with Golang for the Kubernetes platform.

- Go references for Kubernetes APIs

- Additional Go references for Kubernetes APIs

- Kubernetes

- Kubernetes API reference

- Kubernetes source code and examples

- Go client library for Kubernetes

- Operator SDK framework

- Operator SDK GitHub repo

- Operator SDK framework community

- Kubebuilder (a tool that is used by the Operator SDK to generate manifests and some controller code)

About the author

Hammad Said has a background in enterprise application development, with more than 3 years in cloud applications focusing on Red Hat OpenShift and DevOps methodologies.
