Virtualization continues to play an increasingly critical role in the enterprise technology landscape. As part of this evolution, Red Hat OpenShift Virtualization offers a pathway for organizations looking to modernize and consolidate workloads. Finding tools to support migration from existing hypervisors to OpenShift Virtualization is an essential step in this journey.

I have written in the past on how you can use the migration toolkit for virtualization (MTV) to help migrate virtual machines (VMs) to OpenShift Virtualization, but one important aspect of that toolkit is how to automate certain commands or tasks immediately before or after the VM is migrated. This type of automation is made possible through the migration toolkit for virtualization’s migration hooks.

Applications running in virtual machines in many organizations can become complex and intertwined with other applications and processes. Stopping an application that is running on a server could involve multiple steps on the virtual machine guest or even on other servers in the environment. With migration hooks, you can run Ansible playbooks directly from within the migration toolkit for virtualization, eliminating many of those otherwise manual tasks.

In this article, I will discuss migration hooks, how to use them, and how they work, which will provide you with the knowledge you need to make using them a success as you migrate virtual machine workloads into your OpenShift Virtualization environment.

What are migration hooks?

When creating a migration plan in the migration toolkit for virtualization, you may have noticed a tab labeled "Hooks." Let's take some time to demonstrate how to create hooks and how they work.

There are two kinds of migration hooks whose names are generally self-explanatory:

- Pre migration hook: an Ansible playbook that runs against the guest VM prior to any migration attempt by MTV

- Post migration hook: an Ansible playbook that runs against the guest VM immediately after the migration to OpenShift Virtualization has completed

When the migration plan is started, MTV creates a pod in the migration project where the Ansible playbook will be run. If multiple virtual machines are configured in the migration plan, a separate pod is created for each one. You might want to use pre migration hooks for things like stopping a database, clearing a data queue, or sending a notification before the virtual machine is shut down for migration. Automating these pre-migration tasks helps ensure a successful migration.

Once the migration is complete, the post migration hook can be used to automatically run any tasks that you would normally run to bring an application back up, such as starting services or validating application communication with other components. Basically, if a task can be incorporated into an Ansible playbook, then the pre and post migration hooks can automate those tasks for you.

Migration hook prerequisites

Before we move on to creating migration hooks, there are a few tasks that we need to do. First, an OpenShift service account is required to run the playbooks inside the hook pods that will be created. The service account name can be any name you like, but our example here uses the name "forklift-hook".

    $ oc create sa forklift-hook
    serviceaccount/forklift-hook created

Once the service account is created, you need to ensure that it has sufficient access in the namespace to run commands inside the hook pod. The example I show later requires write access to the pod filesystem, so the service account must have at least Edit access in the namespace.

    $ oc policy add-role-to-user edit system:serviceaccount:openshift-mtv:forklift-hook \
        --rolebinding-name forklift-hook
    clusterrole.rbac.authorization.k8s.io/edit added: "system:serviceaccount:openshift-mtv:forklift-hook"

Now that the service account has been created and granted appropriate permissions, we also need some credentials that the hook playbook uses to access the guest virtual machine. In general, Ansible playbooks rely on SSH keys for access to the managed operating system, and this case is no different.

We use our existing RSA private key to create an OpenShift secret in the openshift-mtv namespace, which the Ansible playbook uses to access the virtual machines during migration.

    $ oc create secret generic privkey --from-file key=~/.ssh/id_rsa -n openshift-mtv
    secret/privkey created

Some aspects of the guest configuration are also required, but those are largely out of scope for this article. Obvious examples include that the virtual machine needs to have SSH access enabled, that the public key portion of the keypair must already be installed on the virtual machine, and that virtual machines running Microsoft Windows Server require Remote Execution to be enabled. If Ansible is already among the tools you use to manage your environment, this may already be done.
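For readers who prefer to keep prerequisites in version control, the three commands above can be approximated declaratively. The following is a minimal sketch rather than a tested manifest: it assumes everything lives in the openshift-mtv namespace, and the file name prerequisites.yaml used in the note below is hypothetical. The private key value is a placeholder that you must supply yourself.

```yaml
# Sketch only: declarative approximation of the oc commands shown above.
# Assumption: all objects live in the openshift-mtv namespace.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: forklift-hook
  namespace: openshift-mtv
---
# Grants the built-in "edit" cluster role to the service account,
# scoped to this namespace only.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: forklift-hook
  namespace: openshift-mtv
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: edit
subjects:
  - kind: ServiceAccount
    name: forklift-hook
    namespace: openshift-mtv
---
apiVersion: v1
kind: Secret
metadata:
  name: privkey
  namespace: openshift-mtv
type: Opaque
stringData:
  # Placeholder: replace with the contents of your private key file.
  # Avoid committing the real key to version control.
  key: |
    <contents of the private key>
```

Assuming the manifest is saved as prerequisites.yaml, it could be applied with `oc apply -f prerequisites.yaml`. Creating the secret from the command line, as shown earlier, avoids writing the key into a manifest at all, which may be preferable.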

Creating migration hooks

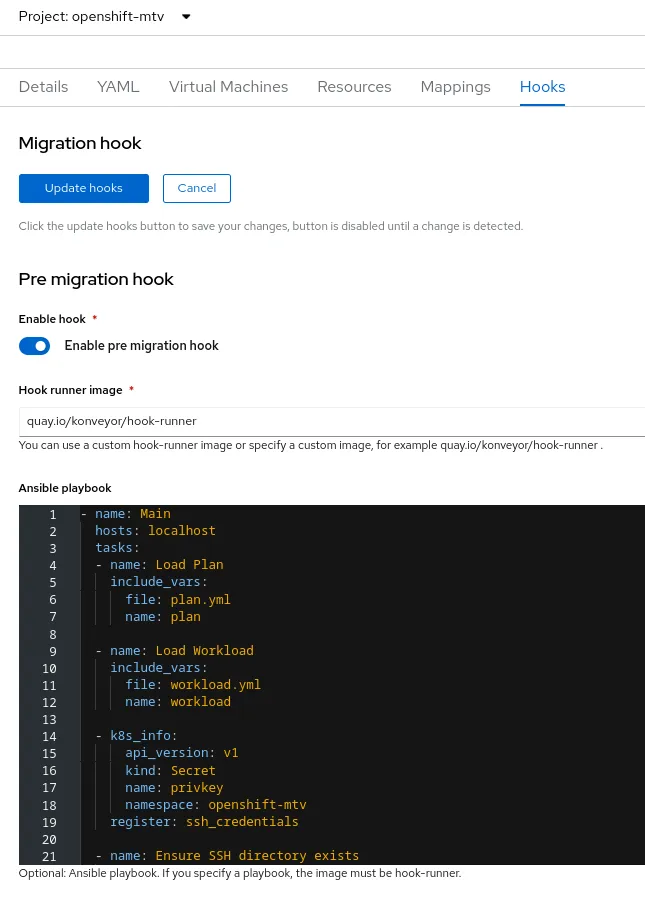

Once the prerequisites are in place and the migration plan is created, navigate to the Hooks tab in the Console. After enabling a hook with the toggle switch, you can copy and paste an existing Ansible playbook directly into the migration plan, or create a new one.

Once you've completed the playbooks and clicked the Update Hooks button, a new OpenShift object is created for each hook, which can be viewed using the OpenShift command-line tool.

    $ oc get hooks
    NAME                      READY   IMAGE                          AGE
    rhel8-01-plan-post-hook   True    quay.io/konveyor/hook-runner   18s
    rhel8-01-plan-pre-hook    True    quay.io/konveyor/hook-runner   19s

The hook names contain the migration plan name and indicate whether the hook is a pre migration hook or a post migration hook. The playbook you entered is automatically base64-encoded and stored in the hook object.
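For orientation, a hook object might look roughly like the sketch below. The apiVersion and the playbook field name are assumptions based on the upstream Forklift API, not something shown in the output above, and the playbook value is a placeholder. Running `oc get hook rhel8-01-plan-pre-hook -o yaml` shows the real object in your cluster.

```yaml
# Sketch only: approximate shape of a hook object.
# Assumption: apiVersion and the "playbook" field follow the upstream Forklift API.
apiVersion: forklift.konveyor.io/v1beta1
kind: Hook
metadata:
  name: rhel8-01-plan-pre-hook
  namespace: openshift-mtv
spec:
  # Container image that runs the playbook (matches the IMAGE column above).
  image: quay.io/konveyor/hook-runner
  # Placeholder: the Console stores the playbook here, base64-encoded.
  playbook: <base64-encoded playbook>
```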

As of the time of writing, there is no way in the OpenShift Console to associate the service account we created earlier with the migration hooks that are created in the migration plan. Therefore, it is necessary to add the service account name to the hooks manually by editing them from the command-line interface.

    $ oc -n openshift-mtv patch hook rhel8-01-plan-pre-hook \
        -p '{"spec":{"serviceAccount":"forklift-hook"}}' --type merge

Repeat the command for rhel8-01-plan-post-hook so that both hooks use the service account.

Migration hook example

Now that all of that work has been done, let’s look at what components should be in the Ansible playbook that makes up the migration hook.

Inside the pod that MTV creates, there are three files:

- playbook.yml: a file that contains a copy of the playbook that you typed in the OpenShift Console

- plan.yml: a YAML file that contains variables about the migration plan that can be used by the Ansible playbook

- workload.yml: a YAML file that contains variables about the virtual machine that will be migrated in the playbook. Since a new pod is created for every virtual machine in a plan, this file only ever contains information for a single virtual machine

The first step in either a pre or post migration hook playbook is to import the plan.yml and the workload.yml files so that the variables inside them are available to the playbook as it runs.

    - name: Main
      hosts: localhost
      tasks:
        - name: Load Plan
          include_vars:
            file: plan.yml
            name: plan
        - name: Load Workload
          include_vars:
            file: workload.yml
            name: workload
    …

Next, we need to get the private key from the secret we created earlier and store it in the ~/.ssh directory inside the pod, which also requires creating the ~/.ssh directory.

    …
        - k8s_info:
            api_version: v1
            kind: Secret
            name: privkey
            namespace: openshift-mtv
          register: ssh_credentials
        - name: Ensure SSH directory exists
          file:
            path: ~/.ssh
            state: directory
            mode: 0750
        - name: Create SSH key
          copy:
            dest: ~/.ssh/id_rsa
            content: "{{ ssh_credentials.resources[0].data.key | b64decode }}"
            mode: 0600
    …

Once the SSH key is in the correct location, we extract the IP address of the virtual machine and put it in a temporary Ansible inventory that gets used later to run any tasks we want on the virtual machine being migrated. The IP address comes from the workload.yml file that was imported at the beginning of the playbook.

    …
        - add_host:
            name: "{{ workload.vm.ipaddress }}"
            ansible_user: root
            groups: vms
    …

It's important to note that if the virtual machine IP address changes during migration, then the post migration hook using the code above will not be able to access the virtual machine and the job will fail. Also, if the virtual machine has more than one IP address, only the IP address of the first interface is used with the playbook example provided above. If you need to use a different IP address, then you can access it in the playbook by changing {{ workload.vm.ipaddress }} to {{ workload.vm.guestnetworks[X].ip }}, where "X" is the instance of the IP address you want to use.
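To find the right value for "X", it can help to print the guest network list before choosing an entry. The following is a minimal sketch built on the excerpts above: the debug task is my addition, and index 1 is a hypothetical choice used only for illustration.

```yaml
# Sketch only: inspect the guest networks, then target a specific entry.
- name: Main
  hosts: localhost
  tasks:
    - name: Load Workload
      include_vars:
        file: workload.yml
        name: workload

    # Prints every guest network entry so the index can be read from the pod log.
    - name: Show guest networks reported for this VM
      debug:
        var: workload.vm.guestnetworks

    # Hypothetical choice: use the second entry (index 1) instead of
    # workload.vm.ipaddress. Adjust the index for your environment.
    - add_host:
        name: "{{ workload.vm.guestnetworks[1].ip }}"
        ansible_user: root
        groups: vms
```

The output of the debug task appears in the hook pod log, so a first run with only the debug task is a low-risk way to confirm which entry you need.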

The next play in the playbook is the fun part, where you can add any tasks that you need to run on the virtual machine before or after the migration. In this example, I have included excerpts from both a pre and post migration hook.

Pre Migration Hook

    …
    - hosts: vms
      tasks:
        - name: Stop MariaDB
          service:
            name: mariadb
            state: stopped
        - name: Create Test File
          copy:
            dest: /test1.txt
            content: "Hello World"
            mode: 0644

Post Migration Hook

    …
    - hosts: vms
      gather_facts: no
      tasks:
        - name: Wait for VM to boot
          wait_for_connection:
            timeout: 300
        - name: Start MariaDB
          service:
            name: mariadb
            state: started
        - name: Create Test File
          copy:
            dest: /test2.txt
            content: "Hello World"
            mode: 0644

The two examples above are almost identical, but the post migration hook needs two additional settings because the virtual machine will not be fully booted by the time the post migration hook runs: the gather_facts: no option, which disables fact collection, and the wait_for_connection task. If either of these is missing in a post migration hook, then the post migration hook fails immediately after starting. Because the virtual machine is already running before the migration, these options are not required for a pre migration hook.

Finally, the last two tasks in the excerpts above are merely simple examples that show where your customized tasks can be added. My examples stop/start a MariaDB database and then create a file with some text in it. When you create your playbook, modify and add to these tasks with the steps needed in your environment.
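If later tasks in a post migration hook depend on Ansible facts, one option is to collect them explicitly once the virtual machine is reachable. The following is a minimal sketch, not part of the original playbook: the setup task is my addition, and the delay and sleep values are illustrative assumptions that you should tune for your environment.

```yaml
# Sketch only: post migration play that gathers facts after the VM is reachable.
- hosts: vms
  gather_facts: no            # the VM may still be booting when the play starts
  tasks:
    - name: Wait for VM to boot
      wait_for_connection:
        delay: 10             # assumed: wait 10 seconds before the first check
        sleep: 5              # assumed: pause 5 seconds between checks
        timeout: 300          # give up after 300 seconds, as in the example above

    # Facts were skipped at play start, so collect them now if tasks need them.
    - name: Gather facts now that the VM is reachable
      setup:

    - name: Start MariaDB
      service:
        name: mariadb
        state: started
```

Keeping gather_facts: no at the play level and calling setup afterward preserves the behavior described above while still making facts available to the tasks that follow.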

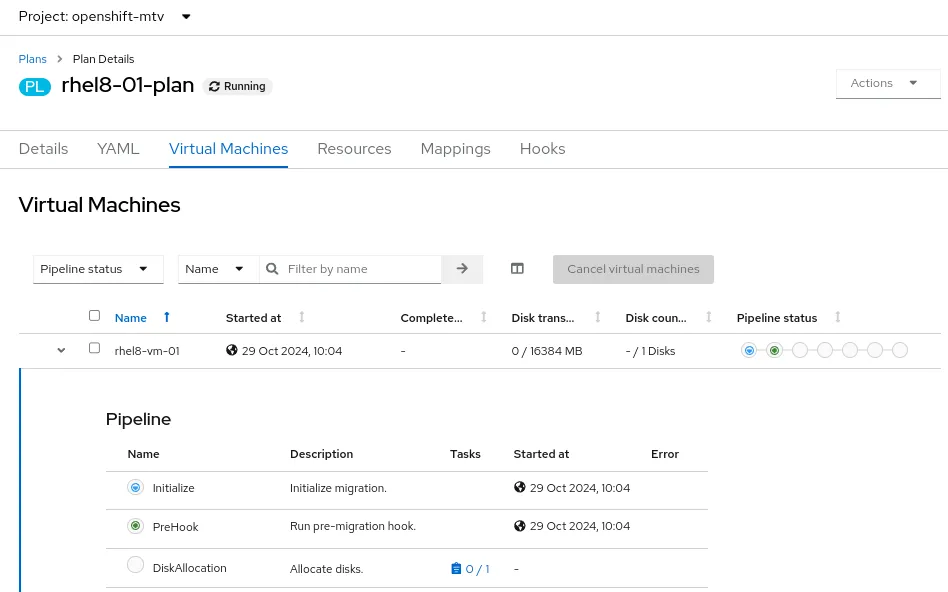

Running the migration plan with migration hooks

Once the migration hooks are created and the service account has been associated with them, you can run the migration plan. The pre migration hook is one of the first steps that runs and can be seen in the migration plan details.

From the OpenShift command-line tools, we can also see that a pod has been created for the pre migration hook, and you can view the pod logs like you would for any other pod. This is very useful for troubleshooting errors in the migration hooks, should they occur.

    $ oc logs -f rhel8-01-plan-vm-1002-prehook-9hqwp-khdx5
    [WARNING]: No inventory was parsed, only implicit localhost is available
    [WARNING]: provided hosts list is empty, only localhost is available. Note that
    the implicit localhost does not match 'all'

    PLAY [Main] ********************************************************************

    TASK [Gathering Facts] *********************************************************
    ok: [localhost]

    TASK [Load Plan] ***************************************************************
    ok: [localhost]

    TASK [Load Workload] ***********************************************************
    ok: [localhost]

    TASK [k8s_info] ****************************************************************
    ok: [localhost]

    TASK [Ensure SSH directory exists] *********************************************
    changed: [localhost]

    TASK [Create SSH key] **********************************************************
    changed: [localhost]

    TASK [add_host] ****************************************************************
    changed: [localhost]

    PLAY [vms] *********************************************************************

    TASK [Gathering Facts] *********************************************************
    ok: [192.168.0.152]

    TASK [Stop MariaDB] ************************************************************
    changed: [192.168.0.152]

    TASK [Create Test File] ********************************************************
    changed: [192.168.0.152]

    PLAY RECAP *********************************************************************
    192.168.0.152 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
    localhost     : ok=7 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

As you can see in the log output above, all Ansible tasks ran successfully, including stopping the MariaDB database and creating the test file.

The migration plan continues to run. Once the virtual machine has been relocated to OpenShift Virtualization, the post migration hook runs. As before, the post migration hook runs inside a pod, and you can view the logs as it is running or after it has completed.

Hooked on migrations

Migrating from one hypervisor to another can be a daunting task. Red Hat's migration toolkit for virtualization can help make this process easier, and migration hooks are a key part of automating the migration journey. As this article has demonstrated, migration hooks, coupled with Red Hat Ansible, can make the complex tasks that must be performed when migrating virtual machines to OpenShift Virtualization more reliable and easier to manage.

For more information about the migration toolkit for virtualization, you can check out another blog post of mine about the topic, or have a look at the product on our website. Quick and easy migrations are within your reach and Red Hat is here to help.


About the author

Matthew Secaur is a Red Hat Senior Technical Account Manager (TAM) for Canada and the Northeast United States. He has expertise in Red Hat OpenShift Platform, Red Hat OpenStack Platform, and Red Hat Ceph Storage.
