Introduction

The peer-pods solution is supported in Red Hat OpenShift sandboxed containers 1.5 on Red Hat OpenShift 4.14. With this release, peer-pods deployments on the AWS and Azure public clouds are fully supported by Red Hat. The peer-pods solution is also the foundation for confidential containers on Red Hat OpenShift.

Currently, Container Storage Interface (CSI) persistent volumes are not supported for the peer-pods solution. However, there are alternatives available depending on your environment and use cases. For example, if you deploy peer-pods on AWS and have a workload that needs to process data stored in Amazon S3 (Simple Storage Service), then this blog is for you.

This blog demonstrates how to access object data stored in Amazon S3 in your workloads deployed using OpenShift sandboxed containers.

Topology overview

Amazon S3 is an object storage service that stores objects in buckets. Mountpoint for Amazon S3 is an application that mounts an Amazon S3 bucket as a local directory on a Linux operating system.

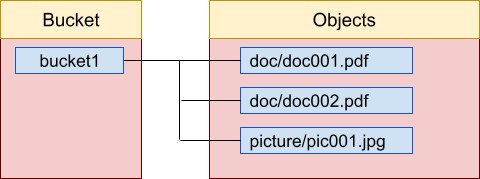

Amazon S3 topology

In Amazon S3, a bucket is a storage container for objects, so you need to create a bucket before you can store any data. The bucket and object topology looks like this:

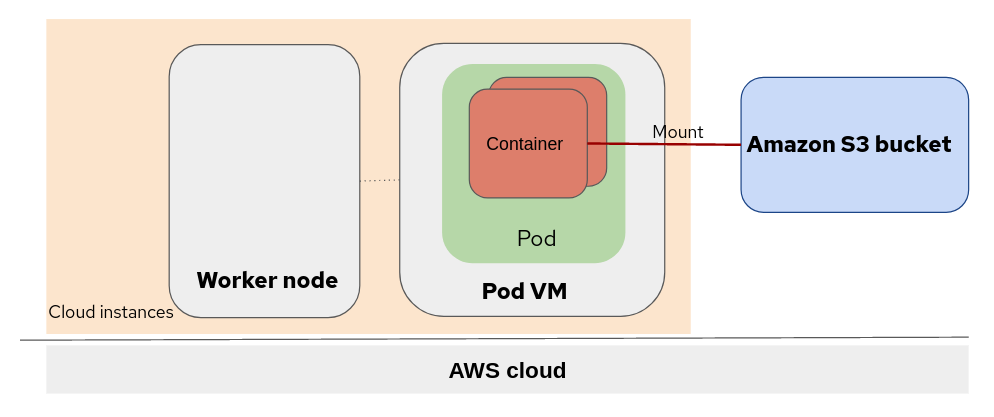

Peer-pods with Amazon S3 bucket topology

The chart below illustrates the topology of OpenShift sandboxed container peer-pods with an Amazon S3 bucket mounted using Mountpoint for Amazon S3.

The pod runs in an AWS cloud instance (a virtual machine). The OpenShift worker nodes and the pod virtual machine (VM) run at the same virtualization level. The Amazon S3 bucket is mounted in the container running inside the pod VM.

Configuring an S3 bucket for use with peer-pods

If you want to follow along with this example, you must have the OpenShift sandboxed containers operator installed with peer-pods on AWS. Refer to Red Hat documentation for setup instructions. Configuration for Amazon S3 is described below.

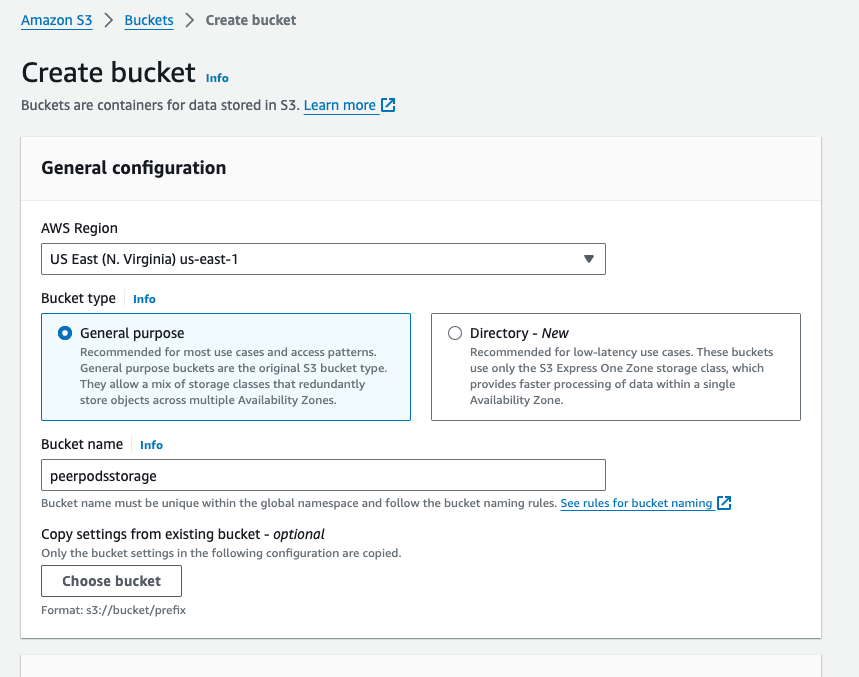

Create an S3 bucket

First, you need to log into AWS and create a bucket.

- Select S3 under Storage

- Click the + Create bucket button

- Create a bucket by setting up the Bucket name (for example, peerpodsstorage), Region, and other options

- Click the Create button
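If you prefer the command line, the console steps above can be approximated with the AWS CLI. The following is a minimal sketch, not part of the original walkthrough: the helper name `build_create_bucket_cmd` and the `AWS_REGION` default are assumptions for illustration. It only prints the command so you can review it before running it. Outside `us-east-1`, S3 expects an explicit `LocationConstraint` that matches the region.

```shell
#!/bin/sh
# Hypothetical defaults; adjust to your environment.
S3_BUCKET="${S3_BUCKET:-peerpodsstorage}"
AWS_REGION="${AWS_REGION:-us-east-1}"

# build_create_bucket_cmd BUCKET REGION
# Prints an "aws s3api create-bucket" command line for the given bucket.
# Regions other than us-east-1 need a LocationConstraint.
build_create_bucket_cmd() {
    bucket="$1"
    region="$2"
    cmd="aws s3api create-bucket --bucket $bucket --region $region"
    if [ "$region" != "us-east-1" ]; then
        cmd="$cmd --create-bucket-configuration LocationConstraint=$region"
    fi
    printf '%s\n' "$cmd"
}

# Print the command for review; nothing is created at this point.
build_create_bucket_cmd "$S3_BUCKET" "$AWS_REGION"
```

After reviewing the printed command, you can paste it into a shell where the AWS CLI is already configured.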

Create a secret with auth details

You need valid AWS credentials (AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY) to access your bucket. In this blog, we use a Kubernetes secret object to store the AWS credentials and make the details available to the pod as environment variables.

# export S3_BUCKET=peerpodsstorage
# export AWS_ACCESS_KEY_ID=$(aws configure get aws_access_key_id)
# export AWS_SECRET_ACCESS_KEY=$(aws configure get aws_secret_access_key)

You must explicitly specify the S3 bucket name to use. In this blog, we use the peerpodsstorage bucket (S3_BUCKET) created in the previous step.
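An empty variable here would produce a secret with blank values, and the mount would fail only later inside the pod. As a precaution, you could check the three variables before generating the secret. This is a small sketch; the function name `check_vars` is hypothetical and not part of any tool used in this blog.

```shell
#!/bin/sh
# check_vars NAME...
# Reports every named variable that is unset or empty.
# Returns 0 when all are set, 1 otherwise.
check_vars() {
    missing=0
    for name in "$@"; do
        # Indirectly read the variable whose name is stored in $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}

if check_vars S3_BUCKET AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY; then
    echo "all variables set"
fi
```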

# cat > storage-secret.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: s3-secret
type: Opaque
stringData:
  S3_BUCKET: "${S3_BUCKET}"
  AWS_ACCESS_KEY_ID: "${AWS_ACCESS_KEY_ID}"
  AWS_SECRET_ACCESS_KEY: "${AWS_SECRET_ACCESS_KEY}"
EOF

Apply the configuration with the oc command:

# oc apply -f storage-secret.yaml

Start the pod

There are two common approaches to using a mounted Amazon S3 bucket in peer-pods containers. One is to mount the bucket in the container command, before the application workload starts, as demonstrated in Example 1 below. The other is to mount the bucket in a container lifecycle hook, as demonstrated in Example 2 below.

Example 1: Use Amazon S3 bucket before the application workload

In this example, the Amazon S3 bucket is mounted before the application workload starts, and secrets supply the AWS auth details. Save the following YAML configuration as test-s3.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: test-s3
  labels:
    app: test-s3
spec:
  runtimeClassName: kata-remote
  containers:
    - name: test-s3
      image: quay.io/openshift_sandboxed_containers/s3mountpoint
      command: ["sh", "-c"]
      args:
        - mknod /dev/fuse -m 0666 c 10 229 && mkdir /mycontainer && mount-s3 "$S3_BUCKET" /mycontainer && sleep infinity
      securityContext:
        privileged: true
      env:
        - name: S3_BUCKET
          valueFrom:
            secretKeyRef:
              name: s3-secret
              key: S3_BUCKET
        - name: AWS_ACCESS_KEY_ID
          valueFrom:
            secretKeyRef:
              name: s3-secret
              key: AWS_ACCESS_KEY_ID
        - name: AWS_SECRET_ACCESS_KEY
          valueFrom:
            secretKeyRef:
              name: s3-secret
              key: AWS_SECRET_ACCESS_KEY

Apply the configuration with the oc command:

# oc apply -f test-s3.yaml

Log in to the container to verify that the S3 bucket has been mounted successfully:

# oc rsh test-s3

# mount

...

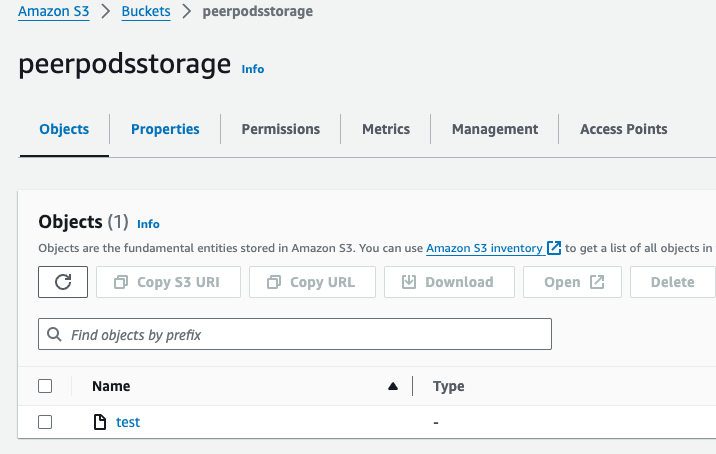

mountpoint-s3 on /mycontainer type fuse (rw,nosuid,nodev,noatime,user_id=0,group_id=0,default_permissions)On the Buckets > peerpodsstorage page, create a file named test in the /mycontainer directory.

Verify that the new directory is visible:

# ls

testSuccess!
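The manual check above can also be scripted, for example as part of a startup test. The sketch below reads the kernel mount table, whose fields are device, mount point, file system type, and options, and reports whether a path is a FUSE mount. The function name `is_fuse_mounted` and its optional second argument are assumptions made for illustration.

```shell
#!/bin/sh
# is_fuse_mounted PATH [TABLE]
# Succeeds if PATH is listed as a fuse mount in TABLE.
# TABLE defaults to /proc/mounts.
is_fuse_mounted() {
    target="$1"
    table="${2:-/proc/mounts}"
    # Field 2 is the mount point, field 3 is the file system type.
    awk -v t="$target" \
        '$2 == t && $3 ~ /^fuse/ { found = 1 } END { exit !found }' \
        "$table"
}

if is_fuse_mounted /mycontainer; then
    echo "/mycontainer is mounted"
else
    echo "/mycontainer is not mounted"
fi
```

Passing the table as an argument keeps the function easy to exercise against a saved copy of the mount table.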

Example 2: Use an S3 bucket with a container lifecycle hook

In this example, the Amazon S3 bucket is mounted in the container's postStart lifecycle hook, and secrets supply the AWS auth details. Save the following YAML configuration as test-s3-2.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: test-s3-2
  labels:
    app: test-s3-2
spec:
  runtimeClassName: kata-remote
  containers:
    - name: test-s3-2
      image: quay.io/openshift_sandboxed_containers/s3mountpoint
      lifecycle:
        postStart:
          exec:
            command: ["/bin/sh", "-c", "mkdir /mycontainer && mknod /dev/fuse -m 0666 c 10 229 && mount-s3 \"$S3_BUCKET\" /mycontainer"]
      securityContext:
        privileged: true
      env:
        - name: S3_BUCKET
          valueFrom:
            secretKeyRef:
              name: s3-secret
              key: S3_BUCKET
        - name: AWS_ACCESS_KEY_ID
          valueFrom:
            secretKeyRef:
              name: s3-secret
              key: AWS_ACCESS_KEY_ID
        - name: AWS_SECRET_ACCESS_KEY
          valueFrom:
            secretKeyRef:
              name: s3-secret
              key: AWS_SECRET_ACCESS_KEY

Apply the configuration with the oc command:

# oc apply -f test-s3-2.yaml

Log in to the container to verify that the S3 bucket has been mounted successfully:

# oc rsh test-s3-2
# df -a
Filesystem     1K-blocks     Used Available Use% Mounted on
overlay         81106868 10939832  70150652  14% /
...
mountpoint-s3          0        0         0    - /mycontainer

Note that there are no stats. This is expected behavior, because Mountpoint doesn't yet report file system stats.

Summary

In this blog post, we demonstrated how to access Amazon S3 data from a Red Hat OpenShift sandboxed containers peer-pods workload by mounting an S3 bucket with Mountpoint for Amazon S3. We hope you find this information helpful.

About the authors

Pei Zhang has been a quality engineer at Red Hat since 2015. She has made testing contributions to the NFV Virt, Virtual Network, SR-IOV, and KVM-RT features. She is working on the Red Hat OpenShift sandboxed containers project.

Pradipta is working in the area of confidential containers to enhance the privacy and security of container workloads running in the public cloud. He is one of the project maintainers of the CNCF confidential containers project.
