Containers are Linux. The operating system that revolutionized the data center over the past two decades is now aiming to revolutionize how we package, deploy and manage applications in the cloud. Of course you'd expect a Red Hatter to say that, but the facts speak for themselves. Interest in container technology continues to grow as more organizations realize the benefits containers can provide for how they manage applications and infrastructure. But it’s easy to get lost in all the hype and forget what containers are really about. Ultimately, containers are a feature of Linux. Containers have been a part of the Linux operating system for more than a decade, and go back even further in UNIX. That’s why, despite the very recent introduction of Windows containers, the majority of containers we see are in fact Linux containers. That also means that if you’re deploying containers, your Linux choices matter a lot.

We’ve talked about this before, including last year at Red Hat Summit. As we gear up for Red Hat Summit 2017, you can expect to hear more from us on this over the next few weeks and more importantly from enterprise customers deploying containers in production today. So when we say containers are Linux, let’s break down what that actually means and why this should matter to you.

Containers are Linux

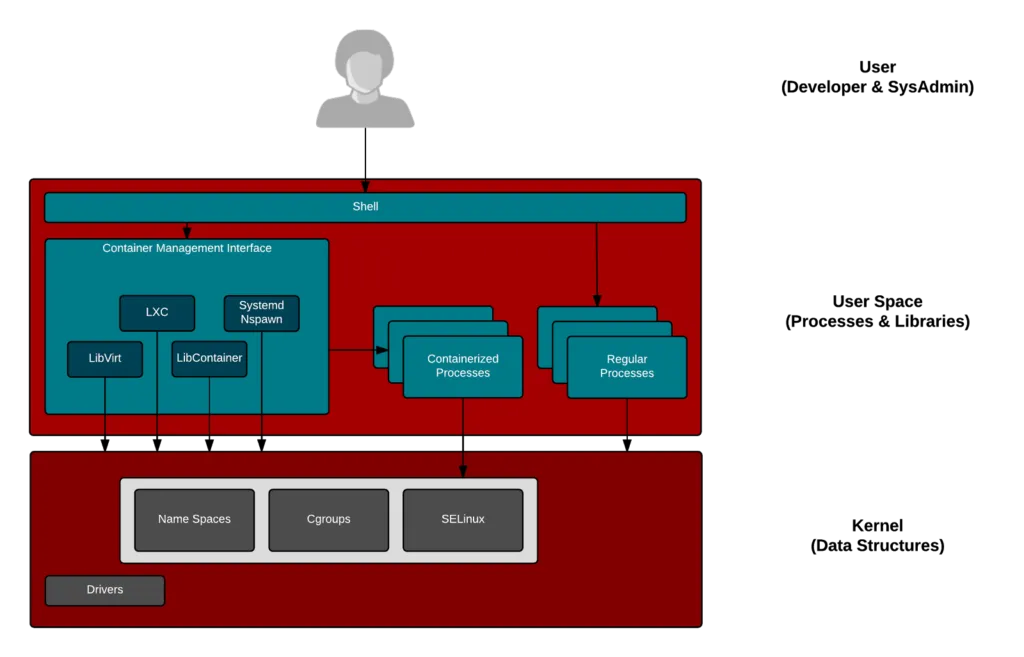

A Linux container is nothing more than a process that runs on Linux. It shares a host kernel with other containerized processes. So what actually makes this process a “container”?

First, each containerized process is isolated from other processes running on the same Linux host, using kernel namespaces. Kernel namespaces provide a virtualized world for the container processes to run in. For example, the “PID” namespace causes a containerized process to see only the other processes inside that container, and not processes from other containers on the shared host. Additional security isolation is provided by kernel features like dropped capabilities, read-only mounts and seccomp. Additional file system security isolation is provided by SELinux in distributions like Red Hat Enterprise Linux. This isolation helps ensure that one container cannot exploit other containers or take down the underlying host.
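To make the namespace idea concrete, here is a minimal Python sketch that lists the namespaces a process belongs to. It assumes a Linux host with `/proc` mounted, where each entry under `/proc/<pid>/ns` is a symbolic link such as `pid:[4026531836]`. Two processes that show the same number for a given entry share that namespace. The helper names (`parse_ns_link`, `namespace_ids`, `shares_namespace`) are ours, chosen for illustration only.

```python
import os
import re

# A namespace link target looks like "pid:[4026531836]".
_NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target):
    """Split a namespace link target into (kind, inode number)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"not a namespace link: {target!r}")
    return match.group("kind"), int(match.group("inode"))


def namespace_ids(pid="self"):
    """Return {entry name: inode} for a process, or {} if /proc is unavailable."""
    ns_dir = f"/proc/{pid}/ns"
    ids = {}
    try:
        entries = os.listdir(ns_dir)
    except OSError:
        return ids  # not Linux, or no permission to inspect this process
    for name in entries:
        try:
            _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, name)))
        except (OSError, ValueError):
            continue  # skip entries we cannot read or do not recognize
        ids[name] = inode
    return ids


def shares_namespace(a, b, kind):
    """True when both maps contain `kind` and point at the same inode."""
    return kind in a and kind in b and a[kind] == b[kind]


if __name__ == "__main__":
    for name, inode in sorted(namespace_ids().items()):
        print(f"{name:20} {inode}")
```

Comparing the output for a process on the host with the output for a process inside a container would typically show different numbers for entries such as `pid`, `mnt` and `net`, which is the isolation described above.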

Second, the resources consumed by each container process (memory, CPU, I/O, etc.) are confined to specified limits, using Linux control groups (or “cgroups”). This helps eliminate noisy neighbor issues by keeping one container from over-consuming Linux host resources and starving other containers.
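As a rough illustration of how cgroup membership and limits surface on a Linux host, the Python sketch below parses the lines of `/proc/<pid>/cgroup`, which take the form `hierarchy-ID:controller-list:cgroup-path`. It also interprets the contents of a cgroup v2 limit file such as `memory.max`, which holds either a byte count or the word `max`. The function names are ours, and the sketch assumes a Linux host; elsewhere `read_membership` simply returns an empty list.

```python
def parse_cgroup_line(line):
    """Parse one line of /proc/<pid>/cgroup into its three fields."""
    hierarchy, controllers, path = line.rstrip("\n").split(":", 2)
    return {
        "hierarchy": int(hierarchy),
        # cgroup v2 reports hierarchy 0 with an empty controller list.
        "controllers": [c for c in controllers.split(",") if c],
        "path": path,
    }


def parse_limit(raw):
    """Interpret a cgroup v2 limit value: an int, or None for 'max' (no limit)."""
    value = raw.strip()
    return None if value == "max" else int(value)


def read_membership(pid="self"):
    """Return the parsed cgroup membership of a process, or [] if unavailable."""
    try:
        with open(f"/proc/{pid}/cgroup") as handle:
            return [parse_cgroup_line(line) for line in handle if line.strip()]
    except OSError:
        return []


if __name__ == "__main__":
    for entry in read_membership():
        print(entry)
```

A process started inside a container will usually report a different cgroup path than a shell on the host, and the limit files under that path are where the memory and CPU ceilings are recorded.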

This ability to both isolate containerized processes and confine the resources they consume is what enables multiple application containers to run more securely on a shared Linux host. The combination of isolation and resource confinement is what makes a Linux process a Linux container. In other words, containers are Linux. Let’s explore some of the implications of this.

Container security is Linux security

Once you understand how containers work, it’s easy to understand that container security is Linux security. The ability for multiple containers to run safely on a shared host is only as good as the kernel’s ability to provide multi-tenant isolation between containers and the underlying host operating system. This includes the Linux kernel namespaces and additional security features like SELinux, provided by the kernel and your chosen Linux host distribution, as well as the security and reliability of the Linux distribution itself. Ultimately, that means your containers are only as secure as the Linux host they run on.

Another important factor is ensuring that the content that runs inside your container is trusted. The docker open source project introduced a layered packaging format for immutable container images, but users still need to ensure that the images they are running are safe. Each container image consists of a base Linux user space layer and then additional layers depending on the application. For example, Red Hat provides base images for Red Hat Enterprise Linux 7 and Red Hat Enterprise Linux 6, and also provides a large number of certified images for various language runtimes, middleware, databases and more via our certified container registry. Red Hat employs a large number of engineers who package image content from known source code and seek to ensure that this content is free from vulnerabilities. Red Hat also provides security monitoring: when a new issue is detected, a fix is identified and updated container images are released, so customers can in turn update the applications that rely on them.

Container performance is Linux performance

It’s also easy to see how container performance is tied to Linux performance. A container image is a layered Linux filesystem that is used to instantiate container instances. The choice of Linux filesystem used - OverlayFS, Device Mapper, BTRFS, AUFS, etc. - can impact the ability to build, store and run those images efficiently. Performance and related issues in this area typically involve troubleshooting the Linux host filesystem.
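The layering idea can be shown with a toy model. The Python sketch below is not how OverlayFS or any other storage driver is implemented. It only illustrates the rule that upper layers shadow lower ones and that a layer can hide a file from a lower layer. Layers are plain dictionaries of path to content, and deletions use the `.wh.` file name prefix, the whiteout convention used in OCI image layers. The `flatten` function and the sample paths are hypothetical.

```python
WHITEOUT_PREFIX = ".wh."  # a file named ".wh.<name>" hides "<name>" from lower layers


def _basename(path):
    return path.rpartition("/")[2]


def flatten(layers):
    """Merge layers, lowest first, into the single view a container would see."""
    view = {}
    for layer in layers:
        # First apply this layer's deletions to what the lower layers provided.
        for path in layer:
            name = _basename(path)
            if name.startswith(WHITEOUT_PREFIX):
                target = path[: -len(name)] + name[len(WHITEOUT_PREFIX):]
                for existing in list(view):
                    if existing == target or existing.startswith(target + "/"):
                        del view[existing]
        # Then add or replace the files this layer carries.
        for path, content in layer.items():
            if not _basename(path).startswith(WHITEOUT_PREFIX):
                view[path] = content
    return view


if __name__ == "__main__":
    base = {"/etc/os-release": "base", "/etc/motd": "hello", "/usr/bin/python": "py"}
    runtime = {"/etc/.wh.motd": "", "/app/run.sh": "v1"}
    app = {"/app/run.sh": "v2"}
    for path, content in sorted(flatten([base, runtime, app]).items()):
        print(path, content)
```

In this example the merged view keeps the base layer's `/etc/os-release` and `/usr/bin/python`, loses `/etc/motd` to the whiteout, and sees the top layer's version of `/app/run.sh`. A real filesystem has to do the equivalent work on every lookup, which is one reason the choice of storage backend affects build and run performance.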

Red Hat has worked with the Cloud Native Computing Foundation (CNCF) to test container deployments on Kubernetes in OpenShift at scale. In our most recent performance benchmark, we tested containers on a bare metal cluster consisting of 100 physical servers and on a virtual machine cluster consisting of 2048 VMs. As you read the results of these tests, you can quickly see how closely tied container performance is to Linux performance. At Red Hat, we rely on the skills and experience of our Linux performance and scale engineering team, and work with customers and the community, to seek to identify the best configuration for running containers at massive scale.

Container reliability is Linux reliability

Ultimately, organizations that are adopting containers for their production applications need to know that their containers run reliably. Whether the question is about security, performance, scalability or quality in general, the reliability of your containers has a lot to do with the reliability of the Linux distribution they run on and the vendor that stands behind it. Containers represent a new way to package and run applications on Linux. Red Hat has a long history of supporting Linux for mission-critical applications, across both commercial and public sector organizations. Reliability is a hallmark of Red Hat Enterprise Linux and why it has become a de facto standard for Linux in the enterprise. That reputation for reliability is also why Red Hat Enterprise Linux is becoming a standard for running Linux containers in enterprise environments.

So what is Docker?

So if containers are Linux, then what is Docker? That depends on what you are referring to. Most people know of docker as the open source software project, launched in March 2013, that automates the deployment of application containers on Linux. The docker project has been one of the most popular open source projects of the last few years, with the docker/docker GitHub repository garnering over 1,600 contributors and 41,000 stars.

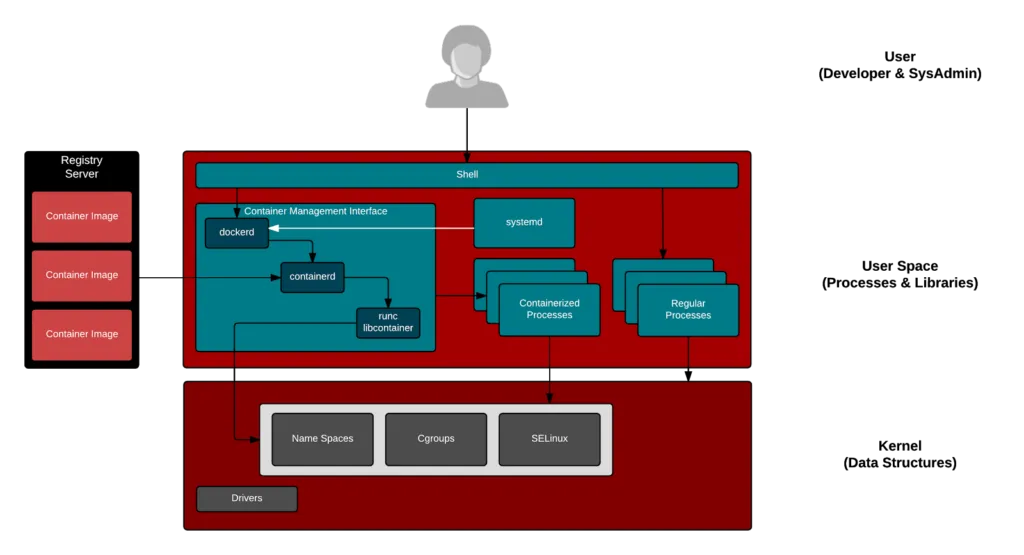

The docker container engine manages the configuration of Linux kernel namespaces, additional security features and cgroups, and it introduced a layered packaging format for content that runs inside containers. This made it easy for developers to run containers on their local machines and create immutable images that would run consistently across other machines and in different environments. The runtime for those containers isn’t docker, it’s Linux.

The Open Container Initiative (OCI) was subsequently launched to create open industry standard specifications for the container format (image-spec) and runtime (runtime-spec). Over the past couple of years, the docker project maintainers have begun spinning out the lower level container runtime plumbing as separate projects, including OCI runc and, most recently, containerd, which was spun out and donated to the CNCF.
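To show how the kernel features discussed earlier appear in a standardized runtime configuration, here is a small Python sketch that builds a partial configuration in the style of the OCI runtime-spec. The field names used (`process.args`, `root.readonly`, `linux.namespaces`, `linux.resources`) come from that specification, but this is only a fragment for illustration. It omits required fields such as `ociVersion` and should not be read as a complete or validated config. The function name and the example values are ours.

```python
import json


def runtime_config_fragment(args, memory_limit_bytes, cpu_shares):
    """Build a partial, illustrative runtime-spec style configuration."""
    return {
        # The containerized process is an ordinary command line.
        "process": {"args": list(args)},
        # A read-only root filesystem is one of the extra isolation measures.
        "root": {"path": "rootfs", "readonly": True},
        "linux": {
            # Isolation: one new kernel namespace of each listed type.
            "namespaces": [
                {"type": kind} for kind in ("pid", "network", "ipc", "uts", "mount")
            ],
            # Confinement: cgroup limits for memory and CPU.
            "resources": {
                "memory": {"limit": memory_limit_bytes},
                "cpu": {"shares": cpu_shares},
            },
        },
    }


if __name__ == "__main__":
    fragment = runtime_config_fragment(["sleep", "60"], 256 * 1024 * 1024, 512)
    print(json.dumps(fragment, indent=2))
```

The point of the fragment is that the configuration names Linux kernel features directly: namespaces for isolation and cgroup resources for confinement.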

Docker Inc. is also a private company that initiated the docker project and sells commercial products under the same name. Docker EE combines the docker container engine (including runc and containerd) with additional orchestration, management and security capabilities. Docker Inc.’s products compete in a growing landscape of vendors trying to provide best-of-breed solutions for building, deploying and managing containers. That includes vendors like Red Hat, with solutions like OpenShift, Red Hat Enterprise Linux and Red Hat Enterprise Linux Atomic Host, and other independent software vendors who provide container management platforms (CoreOS, Rancher, VMware, etc.), as well as major cloud providers like Amazon, Microsoft Azure and Google, which provide hosted container services in the public cloud.

Are container vendors Linux vendors?

If at their core containers are Linux, is it a stretch to say that container vendors are just Linux vendors? Perhaps not.

The Linux operating system is fundamental to the operation of containerized applications - from the kernel, to the filesystem, to networking and more. Containers provide a faster, more efficient and portable abstraction to run applications on Linux across different infrastructure footprints - including physical servers, virtualization platforms, private clouds and public clouds.

As more container deployments move to production, many organizations are asking Red Hat about moving from do-it-yourself container solutions to supported container platforms. Security concerns are often cited as one of the main barriers to container adoption in production. This is the case with any new technology. The same concerns you hear about containers today are what you heard about virtualization in the early 2000s. Many an IT manager back then, when talking about virtualization, would say, “Great for development, but I’ll never run it in production!” How times have changed. Today, many organizations run virtualization in production, and not just for simple applications, but for their most complex and mission-critical systems. That same evolution in mindset is beginning to happen with containers.

As more and more organizations deploy containers for their own mission-critical applications, they need to ask the same questions of their container platform vendor as they do of their Linux vendor. The same considerations and criteria apply in either case:

- Is this vendor’s platform reliable?

- How secure is it?

- Will it scale?

- And ultimately, can I trust it for my most important applications?

Your container platform vendor and your Linux vendor are in fact one and the same.

So why Red Hat for containers?

Red Hat’s contributions to containers go back to the creation of those core container primitives of Linux namespaces and cgroups in the early 2000s. Containers have been a core feature of Red Hat Enterprise Linux over multiple major releases and have been the foundation of Red Hat OpenShift since its initial release in 2011. Since 2013, Red Hat has contributed to the docker open source project, becoming the #2 contributor overall. Red Hat was one of the first major companies to announce, back in September 2013, that it would be collaborating on the docker project, and it has worked with Docker Inc. and a number of other vendors and individual contributors to help make this project what it is today.

Red Hat packages and ships a fully supported binary version of the docker container engine project as part of Red Hat Enterprise Linux 7 and Red Hat Enterprise Linux Atomic Host. This is the foundation for OpenShift, which provides a robust, production-grade container platform that also includes container orchestration with Kubernetes and additional application lifecycle management capabilities, fully supported runtimes, middleware and database services, as well as integrated operational management solutions and developer tools. Red Hat provides support for leading projects in the container ecosystem and delivers a comprehensive container platform product. We enable customers to build on their existing investments in Linux and evolve them to take advantage of Linux containers. We use the power of Kubernetes to move them from running individual containers on individual servers to running complex multi-container applications across clusters of servers, in their data centers or in the public cloud. We connect that to their applications and their application development processes by adding capabilities to automate and integrate with their build, continuous integration and continuous deployment processes. Finally, we provide operational management capabilities to manage their container platform on the public cloud, private cloud, virtualization platform or physical infrastructure of their choice.

At Red Hat, we saw early on both the potential for containers and the importance of standardizing how people build and run containers. That’s why we’ve committed to being a leader in the upstream container communities and governance organizations. Most importantly, Red Hat has a well-earned reputation as a leader in Linux over the past 15+ years and a proven track record with customers. As more of these customers move from running applications on Linux, to running applications on Linux containers - those same organizations and more are again trusting Red Hat to lead the way.

About the author

Joe Fernandes is Vice President and General Manager of the Artificial Intelligence (AI) Business Unit at Red Hat, where he leads product management, product marketing, and technical marketing for Red Hat's AI platforms, including Red Hat Enterprise Linux AI (RHEL AI) and Red Hat OpenShift AI.
