In an earlier post, we provided hands-on instructions using the vdpa_sim simulator. As the virtio/vDPA project continues to evolve, we have changed the process for creating vDPA devices. We have also introduced a new vDPA software device called vp_vdpa, which can carry real traffic, unlike the loopback-only mode provided by the earlier vdpa_sim software device.

In this article we explain the different vDPA software simulators and provide detailed instructions for hands-on use cases you can try out.

We now have two vDPA software simulators: vdpa_sim and vp_vdpa.

In Part 1 of this article we’ll focus on hands-on examples with vdpa_sim, and in Part 2 we’ll look at hands-on examples with vp_vdpa.

vDPA software simulators

vdpa_sim is a simulated vDPA device with a software implementation of an on-chip input–output memory management unit (IOMMU). vdpa_sim loops the traffic it transmits (Tx) back to its receive (Rx) path. The main use cases for this simulated device are feature testing, prototyping and development. The drawback of such a device is that it only offers a loopback function instead of real traffic.

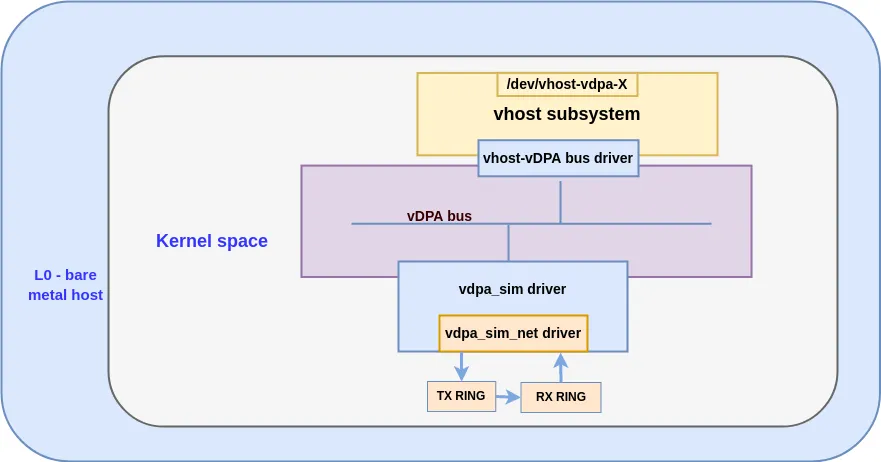

The following diagram shows the building blocks of a vdpa_sim device:

Figure 1: abstraction for vdpa_sim

We will use two vDPA bus drivers to set up the environment: the vhost_vdpa bus driver and the virtio_vdpa bus driver. For additional information on these bus drivers, refer to vDPA bus drivers for kernel subsystem interactions.

We will provide hands-on instructions for two use cases:

- Use case #1: vdpa_sim + vhost_vdpa works as a network backend for a virtual machine (VM) with the guest kernel virtio-net driver
- Use case #2: vdpa_sim + virtio_vdpa bus works as a virtio device (which can be consumed by a container workload)

General requirements and preparation

To run the vDPA setups, you need:

- A computer (physical machine, VM or container) running a Linux distribution. This guide focuses on Fedora 36, but the commands should not change significantly on other Linux distributions
- A user with sudo permissions
- About 50GB of free space in your home directory
- At least 8GB of RAM
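A quick way to check the disk and memory requirements above is a small Bash sketch like the following. The function names (`mem_total_gb`, `free_space_gb`, `check_requirements`) are hypothetical helpers, not part of any tool used in this guide; they only read `/proc/meminfo` and the output of `df`, and they work in whole GiB, so the thresholds are approximate.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helpers for the requirements listed above.

# Print total memory in whole GiB, parsed from a meminfo-style file.
mem_total_gb() {
    local meminfo="${1:-/proc/meminfo}"
    # MemTotal is reported in kB; 1048576 kB = 1 GiB. The result is rounded down.
    awk '/^MemTotal:/ { print int($2 / 1048576) }' "$meminfo"
}

# Print free space in whole GiB for the file system that holds a directory.
free_space_gb() {
    local dir="${1:-$HOME}"
    # -P: one POSIX-format line per file system; -k: 1024-byte blocks.
    # Column 4 of the second line is the available space.
    df -Pk "$dir" | awk 'NR == 2 { print int($4 / 1048576) }'
}

check_requirements() {
    local mem disk
    mem=$(mem_total_gb)
    disk=$(free_space_gb "$HOME")
    # MemTotal excludes memory reserved by firmware and the kernel, so a
    # nominal 8GB machine may report 7 GiB after rounding down.
    [ "$mem" -ge 7 ] || echo "warning: only ${mem} GiB of RAM (8GB recommended)"
    [ "$disk" -ge 50 ] || echo "warning: only ${disk} GiB free in $HOME (50GB recommended)"
}
```

Running `check_requirements` on L0 prints nothing when both thresholds are met.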

Also, when describing nested guests, we will use the following terminology:

- L0: the bare metal host, running KVM
- L1: a VM running on L0, also called the "guest hypervisor" as it itself is capable of running KVM
- L2: a VM running on L1, also called the "nested guest"
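An L1 guest can only act as a guest hypervisor if nested virtualization is enabled in the KVM module on L0. The following sketch checks the module's `nested` parameter in sysfs; the function name is hypothetical, and it assumes an Intel (`kvm_intel`) or AMD (`kvm_amd`) host.

```shell
#!/usr/bin/env bash
# Succeed if any of the given KVM "nested" parameter files reports nesting on.
# With no arguments, check the standard Intel and AMD module parameters.
nested_enabled() {
    local params=("$@") param
    if [ "${#params[@]}" -eq 0 ]; then
        params=(/sys/module/kvm_intel/parameters/nested
                /sys/module/kvm_amd/parameters/nested)
    fi
    for param in "${params[@]}"; do
        [ -r "$param" ] || continue
        # The parameter reads "Y" (kvm_intel) or "1" (kvm_amd) when enabled.
        case "$(cat "$param")" in
            Y|y|1) return 0 ;;
        esac
    done
    return 1
}
```

On L0, `nested_enabled && echo enabled` should print `enabled` before you start an L1 guest that is meant to run L2.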

Prepare the general environment

Treat the following section as a checklist to confirm that everything is in place in your environment (some items may already be installed, in which case you can skip them).

Install related packages (if required)

Install the following packages if they are not already present:

- kernel-modules-internal (required by pktgen)
- libmnl-devel (required by the vdpa tool)
- qemu-kvm
- libvirt-daemon-qemu
- libvirt-daemon-kvm
- libvirt
- virt-install
- libguestfs-tools-c
- kernel-tools (required by libvirt/create qcow2)
- driverctl (required to override the vDPA bus driver binding)
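To see which of the tools from these packages are still missing, you can check for the commands this guide calls. The sketch below is an assumption-based shortcut: it checks command names on `$PATH` rather than package names, so mapping a missing command back to its package (for example `virsh` to `libvirt`, or `vdpa` to `iproute`) is up to you. `GUIDE_COMMANDS` and `missing_commands` are hypothetical names.

```shell
#!/usr/bin/env bash
# Commands invoked later in this guide (hypothetical list, adjust as needed).
GUIDE_COMMANDS=(vdpa qemu-kvm virsh virt-install virt-sysprep virt-resize
                virt-filesystems driverctl ethtool)

# Print every command from the arguments that is not found on $PATH.
missing_commands() {
    local cmd
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
    done
}
```

`missing_commands "${GUIDE_COMMANDS[@]}"` prints nothing once everything is installed.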

Prepare the qcow2 image

The nested environment loads each VM from a qcow2 image. The following steps create an L1/L2 qcow2 image (in this case, L1.qcow2).

Download the latest Fedora-Cloud-Base image

If the download link below is not available, go to the Fedora Cloud website and get a new one:

```
[user@L0 ~]# wget https://download-cc-rdu01.fedoraproject.org/pub/fedora/linux/releases/36/Cloud/x86_64/images/Fedora-Cloud-Base-36-1.5.x86_64.qcow2
```

Use the following command to install the related packages and set the root password (replace “changeme” with your own password):

```
[user@L0 ~]# sudo virt-sysprep --root-password password:changeme --uninstall cloud-init --network --install ethtool,pciutils --selinux-relabel -a Fedora-Cloud-Base-36-1.5.x86_64.qcow2
```

Enlarge the qcow2 disk size (if required)

If you need a larger disk, follow these steps to resize it (this example creates a 30GB disk):

```
[user@L0 ~]# sudo yum install libguestfs-tools guestfs-tools
[user@L0 ~]# qemu-img create -f qcow2 -o preallocation=metadata L1.qcow2 30G
Formatting 'L1.qcow2', fmt=qcow2 cluster_size=65536 extended_l2=off preallocation=metadata compression_type=zlib size=53687091200 lazy_refcounts=off refcount_bits=16
```

Expand the file system:

```
[user@L0 ~]# virt-filesystems --long --parts --blkdevs -h -a Fedora-Cloud-Base-36-1.5.x86_64.qcow2
Name       Type       MBR  Size   Parent
/dev/sda1  partition  -    1.0M   /dev/sda
/dev/sda2  partition  -    1000M  /dev/sda
/dev/sda3  partition  -    100M   /dev/sda
/dev/sda4  partition  -    4.0M   /dev/sda
/dev/sda5  partition  -    3.9G   /dev/sda
/dev/sda   device     -    5.0G   -
[user@L0 ~]# virt-resize --resize /dev/sda2=+500M --expand /dev/sda5 Fedora-Cloud-Base-36-1.5.x86_64.qcow2 L1.qcow2
```

You now have an L1.qcow2 image with a 30GB disk. You can create the L2.qcow2 image by repeating these steps.

Install vdpa tool (if required)

The vdpa tool is used to set up vDPA devices. It is included in iproute-5.15.0-2.fc36.x86_64.rpm, which Fedora 36 installs by default. We suggest updating iproute to version 5.18.0; if your iproute is already newer, you can skip this step.

Note: install libmnl-devel before you install the vdpa tool:

```
[user@L0 ~]# sudo dnf install libmnl-devel
```

Clone, build, and install the vdpa tool:

```
[user@L0 ~]# git clone git://git.kernel.org/pub/scm/network/iproute2/iproute2-next.git
[user@L0 ~]# cd iproute2-next
[user@L0 iproute2-next]# ./configure
[user@L0 iproute2-next]# make
[user@L0 iproute2-next]# sudo make install
```

Check that it is installed correctly:

```
[user@L0 ~]# vdpa -V
vdpa utility, iproute2-5.18.0
[user@L0 ~]# vdpa --help
Usage: vdpa [ OPTIONS ] OBJECT { COMMAND | help }
where  OBJECT := { mgmtdev | dev }
       OPTIONS := { -V[ersion] | -n[o-nice-names] | -j[son] | -p[retty] }
```
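If you script this step, you can compare the version reported by `vdpa -V` against the suggested 5.18.0. This is a small sketch: `vdpa_version` and `version_ge` are hypothetical helper names, and `version_ge` relies on GNU `sort -V` (provided on Fedora by coreutils).

```shell
#!/usr/bin/env bash
# Extract the iproute2 version from "vdpa -V" output read on stdin,
# e.g. "vdpa utility, iproute2-5.18.0" -> "5.18.0".
vdpa_version() {
    sed -n 's/.*iproute2-\([0-9][0-9.]*\).*/\1/p'
}

# Succeed if version $1 is greater than or equal to version $2.
# sort -V orders version strings numerically, field by field, so the
# smaller of the two ends up first.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

For example, `version_ge "$(vdpa -V | vdpa_version)" 5.18.0 || echo "consider updating iproute"`.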

Install libvirt (if required)

If QEMU and libvirt are not installed on your system, you can use the following commands to set up the environment. libvirt version 6.9.0 or newer and QEMU version 5.1.0 or newer are required.

```
[user@L0 ~]# sudo yum install qemu-kvm libvirt-daemon-qemu libvirt-daemon-kvm libvirt virt-install libguestfs-tools-c kernel-tools
[user@L0 ~]# sudo usermod -a -G libvirt $(whoami)
[user@L0 ~]# sudo systemctl enable libvirtd
[user@L0 ~]# sudo systemctl start libvirtd
[user@L0 ~]# virsh net-define /usr/share/libvirt/networks/default.xml
Network default defined from /usr/share/libvirt/networks/default.xml
[user@L0 ~]# virsh net-start default
Network default started
[user@L0 ~]# virsh net-list
 Name      State    Autostart   Persistent
--------------------------------------------
 default   active   no          yes
```
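The `virsh net-list` output above shows the default network as active but with Autostart set to `no`, so it will not come back on its own after a reboot; `virsh net-autostart default` makes it start together with libvirtd. To check the state from a script, a minimal sketch like the following parses the `virsh net-list` table (`net_state` is a hypothetical helper).

```shell
#!/usr/bin/env bash
# Print the State column for one network from "virsh net-list" output on stdin.
# The header and separator lines are skipped because their first field is
# not the network name.
net_state() {
    local name="$1"
    awk -v n="$name" '$1 == n { print $2 }'
}
```

For example, `[ "$(virsh net-list --all | net_state default)" = active ] || virsh net-start default`.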

Use case 1: Experimenting with vdpa_sim_net + vhost_vdpa bus driver

Overview of the datapath

The vhost_vdpa bus driver connects the vDPA bus to the vhost subsystem and presents a vhost char device to userspace. With the help of QEMU, the vhost-vdpa device can be used as a network backend for VMs. For more information, see vDPA kernel framework part 3: usage for VMs and containers.

We will use QEMU/libvirt to set up this environment.

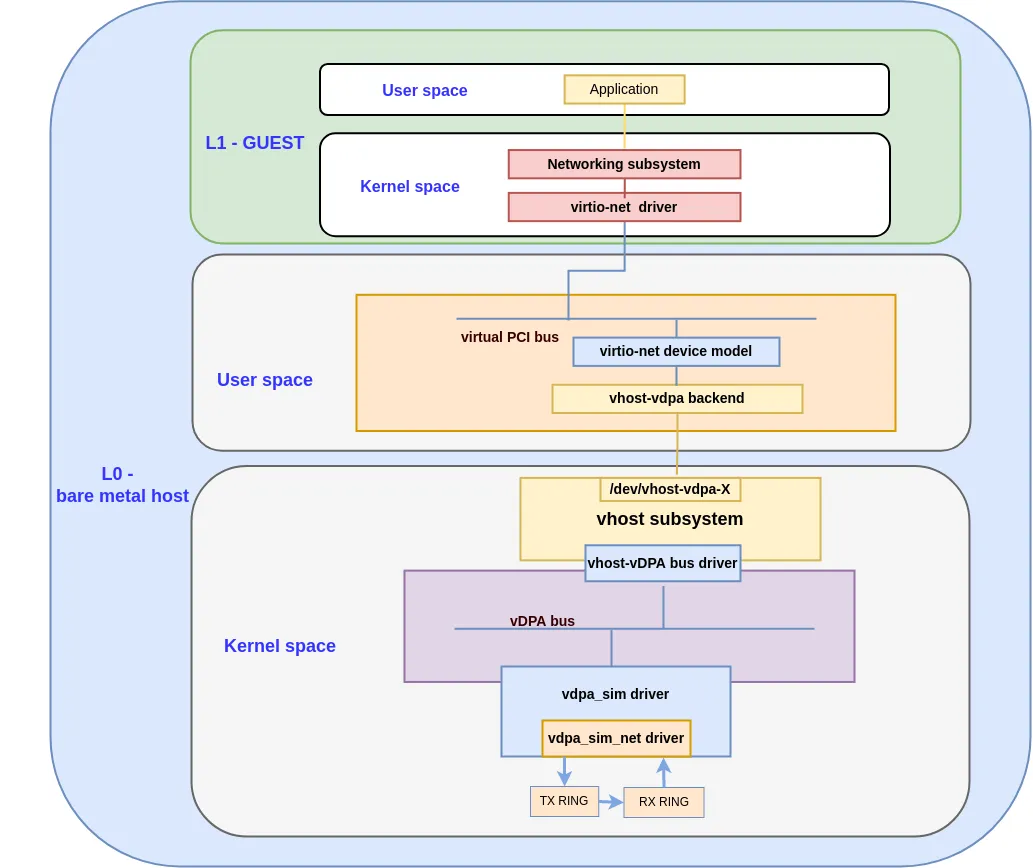

The following diagram shows the datapath for this solution:

Figure 2: the datapath for the vdpa_sim with vhost_vdpa

Create the vdpa device

Load the related kernel modules with the following commands:

```
[user@L0 ~]# modprobe vdpa
[user@L0 ~]# modprobe vhost_vdpa
[user@L0 ~]# modprobe vdpa_sim
[user@L0 ~]# modprobe vdpa_sim_net
```

Check that the vdpa management device was created successfully; vdpasim_net should appear in the output of the following command:

```
[user@L0 ~]# vdpa mgmtdev show
vdpasim_net:
  supported_classes net
  max_supported_vqs 2
  dev_features MAC ANY_LAYOUT VERSION_1 ACCESS_PLATFORM
```

Create the vdpa device:

```
[user@L0 ~]# vdpa dev add name vdpa0 mgmtdev vdpasim_net mac 00:e8:ca:33:ba:05
[user@L0 ~]# vdpa dev show -jp
{
    "dev": {
        "vdpa0": {
            "type": "network",
            "mgmtdev": "vdpasim_net",
            "vendor_id": 0,
            "max_vqs": 3,
            "max_vq_size": 256
        }
    }
}
```

Verify that the device is bound to the vhost_vdpa bus driver (you should see the driver name in the output):

```
[user@L0 ~]# ls -l /sys/bus/vdpa/devices/vdpa0/driver
lrwxrwxrwx. 1 root root 0 Jun 12 07:33 /sys/bus/vdpa/devices/vdpa0/driver -> ../../bus/vdpa/drivers/vhost_vdpa
[user@L0 ~]# sudo driverctl -b vdpa list-devices
vdpa0 vhost_vdpa [*]
```

If the device is bound to the wrong driver, you can fix it with the following steps:

```
[user@L0 ~]# sudo driverctl -b vdpa list-devices
vdpa0 virtio_vdpa [*]
[user@L0 ~]# sudo driverctl -b vdpa set-override vdpa0 vhost_vdpa
[user@L0 ~]# sudo driverctl -b vdpa list-devices
vdpa0 vhost_vdpa [*]
```
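The same binding check can be scripted by resolving the `driver` symlink that the `ls -l` output above shows. The sketch below is hypothetical (`vdpa_bound_driver` is not an existing tool); it only uses `readlink` on sysfs.

```shell
#!/usr/bin/env bash
# Print the name of the bus driver bound to a vDPA device.
# Returns non-zero if the device has no driver symlink (unbound or absent).
vdpa_bound_driver() {
    local dev_dir="${1:-/sys/bus/vdpa/devices/vdpa0}"
    [ -L "$dev_dir/driver" ] || return 1
    # The link points at .../bus/vdpa/drivers/<name>; keep only <name>.
    basename "$(readlink "$dev_dir/driver")"
}
```

Combined with the override above: `[ "$(vdpa_bound_driver)" = vhost_vdpa ] || sudo driverctl -b vdpa set-override vdpa0 vhost_vdpa`.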

Load the L1 guest

We provide two methods to load the VM: a QEMU command line or the libvirt API (both are valid).

Method 1: Load the VM with a QEMU cmdline

Set ulimit -l to unlimited. ulimit -l sets the maximum amount of memory that may be locked into RAM, and vhost_vdpa needs to lock pages to make sure the hardware DMA works correctly. Here we set it to unlimited, but you can choose the size you need.

```
[user@L0 ~]# ulimit -l unlimited
```

If you forget to set this value, QEMU may fail with the following error messages:

```
qemu-system-x86_64: failed to write, fd=12, errno=14 (Bad address)
qemu-system-x86_64: vhost vdpa map fail!
qemu-system-x86_64: vhost-vdpa: DMA mapping failed, unable to continue
```
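Before launching QEMU, you can check whether the current memlock limit covers the guest's memory. This is a rough sketch under stated assumptions: `memlock_ok` is a hypothetical helper, the limit is the value printed by Bash's `ulimit -l` (in KiB), and only guest RAM is counted, not QEMU's own overhead, so a pass is a necessary rather than sufficient condition.

```shell
#!/usr/bin/env bash
# Succeed if a memlock limit (KiB, or "unlimited") can hold guest_mib MiB.
# Assumption: only guest RAM is pinned; QEMU overhead is ignored.
memlock_ok() {
    local limit="$1" guest_mib="$2"
    [ "$limit" = "unlimited" ] && return 0
    # 1 MiB = 1024 KiB.
    [ "$limit" -ge $((guest_mib * 1024)) ]
}
```

For the 4G guest used below: `memlock_ok "$(ulimit -l)" 4096 || echo "raise ulimit -l first"`.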

Now launch the guest VM.

The device /dev/vhost-vdpa-0 is the vhost-vdpa device we can use. The following is a simple example of using QEMU to launch a VM with vhost_vdpa:

```
[user@L0 ~]# sudo qemu-kvm \
    -machine q35 \
    -drive file=/home/test/L1.qcow2,media=disk,if=virtio \
    -net nic,model=virtio \
    -net user,hostfwd=tcp::2226-:22 \
    -netdev type=vhost-vdpa,vhostdev=/dev/vhost-vdpa-0,id=vhost-vdpa0 \
    -device virtio-net-pci,netdev=vhost-vdpa0,bus=pcie.0,addr=0x7,disable-modern=off,page-per-vq=on \
    -nographic \
    -m 4G \
    -smp 4 \
    -cpu host \
    2>&1 | tee vm.log
```

The -machine q35 option is included because bus=pcie.0 refers to the PCI Express root bus, which exists only on the q35 machine type.
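This guide uses /dev/vhost-vdpa-0 because vdpa0 is the only vDPA device on the host. If you create several devices, the char device index may not match the vDPA device name. The following sketch looks up the char device for a given vDPA device, assuming that vhost_vdpa registers its char device as a child of the vDPA device, so it appears as a `vhost-vdpa-N` entry in the device's sysfs directory. `vhost_vdpa_chardevs` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# List /dev paths of the vhost-vdpa char devices that belong to a vDPA device.
# Assumption: each char device shows up as <vdpa dev>/vhost-vdpa-N in sysfs.
vhost_vdpa_chardevs() {
    local dev_dir="${1:-/sys/bus/vdpa/devices/vdpa0}"
    local entry
    for entry in "$dev_dir"/vhost-vdpa-*; do
        # Skip the unexpanded pattern when nothing matches.
        [ -e "$entry" ] && echo "/dev/$(basename "$entry")"
    done
}
```

On the setup above, `vhost_vdpa_chardevs /sys/bus/vdpa/devices/vdpa0` is expected to print /dev/vhost-vdpa-0, which is the value passed to vhostdev=.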

Method 2: Load the VM using libvirt

First, use virt-install to install an L1 guest VM:

```
[user@L0 pktgen]# virt-install -n L1 \
    --ram 4096 \
    --vcpus 4 \
    --nographics \
    --virt-type kvm \
    --disk path=/home/test/L1.qcow2,bus=virtio \
    --noautoconsole \
    --import \
    --os-variant fedora36
Starting install...
Creating domain...    |    0 B  00:00:00
Domain creation completed.
```

Use virsh edit to add the vdpa device to the L1 guest.

Here is a sample vdpa device definition; you can find more information on the libvirt website.

```
<devices>
  <interface type='vdpa'>
    <source dev='/dev/vhost-vdpa-0'/>
  </interface>
</devices>
```

Alternatively, attach the vdpa device to the L1 guest with virsh attach-device (you can also use virsh edit to edit L1.xml directly):

```
[root@L0 test]# cat vdpa.xml
<interface type='vdpa'>
  <source dev='/dev/vhost-vdpa-0'/>
  <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/>
</interface>
[root@L0 test]# virsh attach-device --config L1 vdpa.xml
Device attached successfully
```
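When attaching several devices, writing vdpa.xml by hand gets repetitive. The sketch below generates the same snippet from a char device path and a PCI slot; `write_vdpa_xml` is a hypothetical helper, and the XML it writes mirrors the vdpa.xml shown above.

```shell
#!/usr/bin/env bash
# Write a libvirt <interface type='vdpa'> snippet.
#   $1 = vhost-vdpa char device, $2 = PCI slot (e.g. 0x07), $3 = output file
write_vdpa_xml() {
    local chardev="$1" slot="$2" out="$3"
    cat > "$out" <<EOF
<interface type='vdpa'>
  <source dev='${chardev}'/>
  <address type='pci' domain='0x0000' bus='0x00' slot='${slot}' function='0x0'/>
</interface>
EOF
}
```

For example: `write_vdpa_xml /dev/vhost-vdpa-0 0x07 vdpa.xml && virsh attach-device --config L1 vdpa.xml`.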

The following is a workaround that locks the guest's memory (vhost_vdpa needs to lock the guest's pages, as explained in Method 1):

```
[user@L0 ~]# virt-xml L1 --edit --memorybacking locked=on
Domain 'L1' defined successfully.
```

Start the L1 guest:

```
[root@L0 dev]# virsh start L1
Domain 'L1' started
[root@L0 dev]# virsh console L1
Connected to domain 'L1'
Escape character is ^] (Ctrl + ])
```

After the guest boots up, log in to it.

Verify that the port has been bound successfully by checking its PCI address. The bus-info should match the PCI address we configured (addr=0x7 on the QEMU command line, or slot='0x07' in the XML file), that is, 0000:00:07.0:

```
guest login: root
Password:
Last login: Tue Sep 29 12:03:03 on ttyS0
[root@L1 ~]# ethtool -i eth0
driver: virtio_net
version: 1.0.0
firmware-version:
expansion-rom-version:
bus-info: 0000:00:07.0
supports-statistics: yes
supports-test: no
supports-eeprom-access: no
supports-register-dump: no
supports-priv-flags: no
[root@L1 ~]# ip -s link show eth0
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT gro0
    link/ether fa:46:73:e7:7d:78 brd ff:ff:ff:ff:ff:ff
    RX: bytes  packets  errors  dropped overrun mcast
    12006996   200043   0       0       0       0
    TX: bytes  packets  errors  dropped carrier collsns
    12006996   200043   0       0       0       0
```
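To make the PCI address comparison scriptable inside the guest, you can extract the bus-info field from `ethtool -i`. In this hypothetical helper, `awk` splits each line on `: ` (colon plus space) so that the colons inside the PCI address are preserved.

```shell
#!/usr/bin/env bash
# Print the bus-info value from "ethtool -i" output read on stdin.
# Splitting on ": " keeps the colons inside a PCI address intact.
bus_info() {
    awk -F': ' '$1 == "bus-info" { print $2 }'
}
```

For example: `[ "$(ethtool -i eth0 | bus_info)" = 0000:00:07.0 ] && echo "vdpa port found"`.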

Running traffic with vhost_vdpa in Guest

Now that we have a vdpa_sim port with the loopback function, we can generate traffic using pktgen to verify it. Pktgen is a packet generator integrated with the Linux kernel. For more information, refer to the pktgen.txt documentation and the sample test script:

```
[root@L1 ~]# modprobe pktgen
[  198.003981] pktgen: Packet Generator for packet performance testing. Version: 2.75
[root@L1 ~]# ip -s link show eth0
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT gro0
    link/ether fa:46:73:e7:7d:78 brd ff:ff:ff:ff:ff:ff
    RX: bytes  packets  errors  dropped overrun mcast
    18013078   300081   0       0       0       0
    TX: bytes  packets  errors  dropped carrier collsns
    18013078   300081   0       0       0       0
[root@L1 ~]# ./pktgen_sample01_simple.sh -i eth0 -m fa:46:73:e7:7d:78
[root@L1 ~]# ip -s link show eth0
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT gro0
    link/ether fa:46:73:e7:7d:78 brd ff:ff:ff:ff:ff:ff
    RX: bytes  packets  errors  dropped overrun mcast
    24013078   400081   0       0       0       0
    TX: bytes  packets  errors  dropped carrier collsns
    24013078   400081   0       0       0       0
```

The RX packet counter increases together with the TX packet counter, which means vdpa_sim is looping the traffic back as expected.
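Instead of comparing the `ip -s link` tables by eye, you can read the packet counters from sysfs before and after a pktgen run. The sketch below (hypothetical helper names `read_counters` and `loopback_ok`) treats the run as successful when RX grew by exactly as many packets as TX, which is what a loopback-only device such as vdpa_sim is expected to show on an otherwise idle port.

```shell
#!/usr/bin/env bash
# Print "rx tx" packet counters for an interface's sysfs statistics directory.
read_counters() {
    local stats="${1:-/sys/class/net/eth0/statistics}"
    echo "$(cat "$stats/rx_packets") $(cat "$stats/tx_packets")"
}

# Given two "rx tx" snapshots, succeed if RX grew, and grew by the same
# number of packets as TX.
loopback_ok() {
    local rx0 tx0 rx1 tx1
    read -r rx0 tx0 <<< "$1"
    read -r rx1 tx1 <<< "$2"
    [ $((rx1 - rx0)) -gt 0 ] && [ $((rx1 - rx0)) -eq $((tx1 - tx0)) ]
}

# Example (inside the L1 guest):
#   before=$(read_counters /sys/class/net/eth0/statistics)
#   ./pktgen_sample01_simple.sh -i eth0 -m fa:46:73:e7:7d:78
#   after=$(read_counters /sys/class/net/eth0/statistics)
#   loopback_ok "$before" "$after" && echo "loopback OK"
```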

Use case 2: Experimenting with vdpa_sim_net + virtio_vdpa bus driver

Overview of the datapath

The virtio_vdpa driver is a transport implementation for kernel virtio drivers on top of vDPA bus operations. virtio_vdpa creates a virtio device on the virtio bus. For more information on the virtio_vdpa bus, see vDPA kernel framework part 3: usage for VMs and containers.

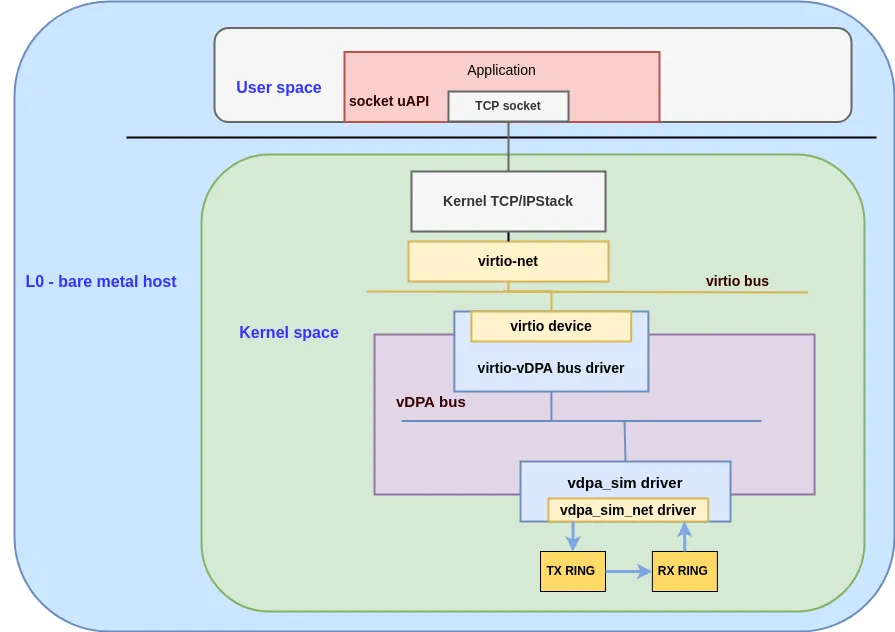

This diagram shows the datapath for virtio-vDPA using a vdpa_sim (the vDPA simulator device):

Figure 3 : the datapath for the vdpa_sim with virtio_vdpa

Create the vdpa device

Let’s start by loading the modules:

```
[user@L0 ~]# modprobe vdpa
[user@L0 ~]# modprobe vdpa_sim
[user@L0 ~]# modprobe vdpa_sim_net
[user@L0 ~]# modprobe virtio_vdpa
```

Note: if the vDPA-related modules are not compiled, follow the instructions in Use case #1 (vhost_vdpa bus driver) to compile them.

Let’s create the vDPA device:

```
[user@L0 ~]# vdpa mgmtdev show
vdpasim_net:
  supported_classes net
  max_supported_vqs 2
  dev_features MAC ANY_LAYOUT VERSION_1 ACCESS_PLATFORM
[user@L0 ~]# vdpa dev add name vdpa0 mgmtdev vdpasim_net mac 00:11:22:33:44:55
[user@L0 ~]# vdpa mgmtdev show -jp
{
    "mgmtdev": {
        "vdpasim_net": {
            "supported_classes": [ "net" ],
            "max_supported_vqs": 2,
            "dev_features": [ "MAC","ANY_LAYOUT","VERSION_1","ACCESS_PLATFORM" ]
        }
    }
}
```

Let’s verify that the device is bound to the virtio_vdpa driver (the driver name should appear in the output):

```
[root@L0 ~]# ls -l /sys/bus/vdpa/devices/vdpa0/driver
lrwxrwxrwx. 1 root root 0 Jun 12 07:44 /sys/bus/vdpa/devices/vdpa0/driver -> ../../bus/vdpa/drivers/virtio_vdpa
[root@L0 ~]# driverctl -b vdpa list-devices
vdpa0 virtio_vdpa [*]
```

Now confirm that everything is configured properly by checking that the new port (eth3 on this system; the name may differ on yours) reports a bus-info of vdpa0:

```
[user@L0 ~]# ip -s link show eth3
7: eth3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
    link/ether 00:11:22:33:44:55 brd ff:ff:ff:ff:ff:ff
    RX: bytes  packets  errors  dropped missed  mcast
    5965       36       0       0       0       0
    TX: bytes  packets  errors  dropped carrier collsns
    5965       36       0       0       0       0
[user@L0 ~]# ethtool -i eth3
driver: virtio_net
version: 1.0.0
firmware-version:
expansion-rom-version:
bus-info: vdpa0
supports-statistics: yes
supports-test: no
supports-eeprom-access: no
supports-register-dump: no
supports-priv-flags: no
```
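Because the interface name (eth3 here) is assigned by the host, it can differ between systems. For the vdpa_sim case, a sketch like the following finds the netdev through sysfs. It assumes that the virtio device created by virtio_vdpa sits under the vDPA device directory and that virtio_net places its netdev under the virtio device's `net/` directory; `vdpa_netdevs` is a hypothetical helper, and the `bus-info: vdpa0` check above remains the reference.

```shell
#!/usr/bin/env bash
# Print the network interface(s) created for a vDPA device bound to virtio_vdpa.
# Assumption: the layout is <vdpa dev>/virtioN/net/<ifname>, as with vdpa_sim.
vdpa_netdevs() {
    local dev_dir="${1:-/sys/bus/vdpa/devices/vdpa0}"
    local entry
    for entry in "$dev_dir"/virtio*/net/*; do
        # Skip the unexpanded pattern when nothing matches.
        [ -e "$entry" ] && basename "$entry"
    done
}
```

On the host above, `vdpa_netdevs` is expected to print eth3.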

Running traffic on the virtio_vdpa

Now that we have a vdpa_sim port with the loopback function, we can generate traffic using pktgen to verify it. If pktgen is not installed, you can install it with the following command:

```
[user@L0 ~]# dnf install kernel-modules-internal
```

For more information please refer to the pktgen doc and sample test script.
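pktgen is controlled by writing text commands to files under /proc/net/pktgen, and the sample scripts wrap that interface. The following minimal sketch shows roughly what a single-device run looks like; the function names, the `DRY_RUN` switch, the default count, and the destination IP 198.18.0.42 are illustrative choices, not a line-for-line copy of pktgen_sample01_simple.sh. Writing to /proc/net/pktgen requires root and the pktgen module loaded (`modprobe pktgen`).

```shell
#!/usr/bin/env bash
# Minimal pktgen driver (illustrative). Set DRY_RUN=1 to print the commands
# instead of writing them, e.g. when not root or pktgen is not loaded.
PGDIR="${PGDIR:-/proc/net/pktgen}"

# Write one pktgen command ($2) to one control file ($1).
pg_write() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$1: $2"
    else
        echo "$2" > "$1"
    fi
}

# Send $3 (default 100000) packets out of interface $1 to MAC address $2.
pktgen_run() {
    local dev="$1" dst_mac="$2" count="${3:-100000}"
    # Attach the device to the first pktgen kernel thread (CPU 0).
    pg_write "$PGDIR/kpktgend_0" "rem_device_all"
    pg_write "$PGDIR/kpktgend_0" "add_device $dev"
    # Per-device settings.
    pg_write "$PGDIR/$dev" "count $count"
    pg_write "$PGDIR/$dev" "pkt_size 60"
    pg_write "$PGDIR/$dev" "dst 198.18.0.42"
    pg_write "$PGDIR/$dev" "dst_mac $dst_mac"
    # Start all threads; the write returns when the run has finished.
    pg_write "$PGDIR/pgctrl" "start"
}
```

As root on L0, `pktgen_run eth3 00:11:22:33:44:55` followed by `cat /proc/net/pktgen/eth3` shows the parameters and result of the run.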

The commands and their output are shown below:

```
[user@L0 ~]# ip -s link show eth3
7: eth3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
    link/ether 00:11:22:33:44:55 brd ff:ff:ff:ff:ff:ff
    RX: bytes  packets  errors  dropped missed  mcast
    9172       55       0       0       0       0
    TX: bytes  packets  errors  dropped carrier collsns
    9172       55       0       0       0       0
[user@L0 ~]# ./pktgen_sample01_simple.sh -i eth3 -m 00:11:22:33:44:55
[user@L0 ~]# ip -s link show eth3
7: eth3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
    link/ether 00:11:22:33:44:55 brd ff:ff:ff:ff:ff:ff
    RX: bytes  packets  errors  dropped missed  mcast
    6011992    100072   0       0       0       0
    TX: bytes  packets  errors  dropped carrier collsns
    6011992    100072   0       0       0       0
```

The pktgen_sample01_simple.sh script comes from the sample test scripts mentioned above.

Again, the RX packet counter increases together with the TX packet counter, which means vdpa_sim is working as expected.

Summary

In this article we've covered the vdpa_sim device and presented two use cases for using it with the vhost_vdpa and virtio_vdpa bus drivers. In our next article, we will present the vp_vdpa driver, which enables running real traffic on the devices.

About the authors

Cindy is a Senior Software Engineer with Red Hat, specializing in Virtualization and Networking.

Experienced Senior Software Engineer working for Red Hat with a demonstrated history of working in the computer software industry. Maintainer of the QEMU networking subsystem. Co-maintainer of the Linux virtio, vhost and vdpa drivers.
