A while ago I got involved in a customer project to test automation for a broad set of use cases. One of the first groups involved was the database team—they had a project where they wanted to automate the provisioning of Windows servers on top of vSphere and then install and configure Microsoft SQL server on top of that Windows server. They had 50 servers they wanted to deploy with SQL server. I thought this would be an excellent opportunity to also show them an alternative, namely to run the SQL databases on top of Red Hat Enterprise Linux (RHEL) instead.

RHEL subscriptions come with great tools and automation capabilities including RHEL System Roles. System Roles use Red Hat Ansible Automation Platform to simplify the setup, which was perfect for this use case and to use as a demonstration.

The demo I created specifically focused on the automated setup of RHEL and SQL with relevant monitoring with Grafana and Red Hat Insights. It doesn’t include the entire ordering process with things like IP Address Management (IPAM) and DNS, etc.

This workflow was my end goal as a demo for the project team.

Some parts of the environment were already in place. VMware infrastructure, Red Hat Ansible Automation Platform, Red Hat Insights and a monitoring server in the environment to monitor both SQL servers and other systems from a central point.

Figure 2

The preferred method for collecting metrics in RHEL is to use Performance Co-Pilot (PCP), and Grafana is a great tool to visualize the metrics. So I used that combination for my use case.

What you see in figure 2 was the starting point. If you want more information on how to use the Metrics System Role to deploy and configure Grafana, read this blog post about the setup of Grafana, by Brian Smith.

Now we can start provisioning the database server and configure monitoring. I am using Red Hat Ansible Automation Platform and a workflow job template to link different playbooks together in a workflow. By doing so, playbooks can be kept smaller, more focused and easy to reuse in other workflows.

For the entire workflow I am using the following playbooks, which are available on GitHub if you want to give it a try.

1. deploy_rhel_on_vsphere.yml

--- - name: Create a VM from a template hosts: localhost connection: local gather_facts: no vars: vcenter_server: vcenter_user: vcenter_pass: vm_name: vm_template: guest_domain: tasks: - name: Clone the template vmware_guest: hostname: "{{ vcenter_server }}" username: "{{ vcenter_user }}" password: "{{ vcenter_pass }}" validate_certs: False name: "{{ vm_name }}" template: "{{ vm_template }}" datacenter: "{{ vm_datacenter }}" folder: "{{ vm_folder }}" disk: - size_gb: "{{ vm_disk_size }}" type: default datastore: "{{ vm_datastore }}" hardware: memory_mb: "{{ vm_memory }}" num_cpus: "{{ vm_cpu }}" customization_spec: "{{ vm_cuztomization_spec }}" state: poweredon wait_for_ip_address: yes - name: Pass variables to Tower Workflows set_stats: data: vm_name: "{{ vm_name }}" vm_cpu: "{{ vm_cpu }}" vm_memory: "{{ vm_memory }}" vm_disk_size: "{{ vm_disk_size }}" vm_datastore: "{{ vm_datastore }}"

This playbook targets the vCenter server to clone a VM template in VMware and we can set some specifications for the VM through variables during the order process via a survey in the Ansible Controller Node (we get to that later). In the last task (Pass variables to Tower Workflows), I use the set_stats module to pass on my variables from this play to the remaining playbooks in the workflow.

2. wf_rhsm.yml

--- - name: "Post configuration of VM" hosts: "{{ vm_name }}" become: yes tasks: - name: Register VM with Red Hat subscription redhat_subscription: state: present username: "{{ rhsm_username }}" password: "{{ rhsm_password }}" pool_ids: "{{ rhsm_pool_id }}" consumer_name: "{{ vm_name }}" auto_attach: true force_register: yes - name: Enable relevant RHEL8 repos rhsm_repository: name: "{{ item }}" with_items: "{{ rhsm_repos }}"

Here we register the server with Red Hat Subscription Management and add repos. In this playbook, I am adding the following repos.

rhsm_repos:

- rhel-8-for-x86_64-baseos-rpms

- rhel-8-for-x86_64-appstream-rpms

- ansible-2.9-for-rhel-8-x86_64-rpms

You only want to add the Ansible 2.9 repo if you are going to use Red Hat connector.

I added the Ansible repo here to be able to use Red Hat Connector in a later stage of the workflow.

3. wf_sql_prereqs.yml

--- - name: Solve prereqs for sql installation on the host hosts: "{{ vm_name }}" become: yes tasks: - name: Permit mssql traffic in fw on port 1443/tcp firewalld: port: 1433/tcp permanent: yes immediate: yes state: enabled - name: Permit monitoring traffic firewalld: service: "{{ item }}" permanent: yes immediate: yes state: enabled with_items: - pmcd - pmproxy

This playbook opens firewall ports for SQL Server and monitoring.

4. wf_mssql_server.yml

--- - hosts: "{{ vm_name }}" become: yes vars: mssql_accept_microsoft_odbc_driver_17_for_sql_server_eula: true mssql_accept_microsoft_cli_utilities_for_sql_server_eula: true mssql_accept_microsoft_sql_server_standard_eula: true mssql_password: "{{ secret_sql_passwd }}" mssql_edition: Developer mssql_tcp_port: 1433 roles: - microsoft.sql.server

This playbook installs and configures mssql on the server using microsoft.sql.server Ansible role. The role is jointly developed and maintained by Red Hat and Microsoft and fully supported by Red Hat with a RHEL subscription. It simplifies and automates the installation and configuration of SQL Server, but also the configuration of your RHEL host with recommended settings to run the SQL Server workloads. You can get more instructions about how to use it on the Red Hat Customer Portal RHEL documentation.

5. wf_metrics.yml

--- - name: Use metrics system role to configure PCP metrics recording hosts: "{{ vm_name }}" vars: metrics_retention_days: 7 metrics_from_mssql: yes mssql_agent_trusted: mssql_agent_username: mssql_agent_password: roles: - redhat.rhel_system_roles.metrics - name: Use metrics system role to configure Grafana hosts: "{{ monitor_name }}" vars: metrics_monitored_hosts: ["{{ vm_name }}"] roles: - redhat.rhel_system_roles.metrics - name: Ensure remote monitoring is possible hosts: "{{ vm_name }}" tasks: - name: Make sure pmproxy is running service: name: pmproxy state: started enabled: yes

In the first task, “Use metrics system role to configure PCP metrics recording,” applies to your newly created rhel-vm with SQL Server. The variables configure PCP to retain metrics for both OS and SQL server for seven days. In order for the PCP Performance Monitoring Domain Agent (PMDA) for SQL to connect to the SQL database, there are authentication variables to set in the metrics system role, mssql_agent_username and mssql_agent_password.

The variable mssql_agent_trusted needs to be in there as well, but without a value. The next task, “Use metrics system role to configure Grafana”, is addressing the central monitoring server. The variable here tells PCP to monitor the new SQL server.

The final task is making sure the pmproxy service is running on the SQL server. This is needed in order to get the metrics over to the central monitoring server.

6. wf_rhc

--- - name: "Post configuration of VM" hosts: "{{ vm_name }}" become: yes tasks: - name: Install necessary packages ansible.builtin.package: name: - ansible - rhc-worker-playbook state: latest - name: Register with rhc shell: | rhc connect expect "User:" send "{{ rhsm_username }}\n" expect "Password:" send "{{ rhsm_password }}\n" exit 0

This last playbook installs Ansible and the rhc-worker-playbook, prerequisites for Red Hat connector which offers an improved way of connecting systems (the new VM) to Red Hat Insights as well as enabling Red Hat Cloud Connector.

Please note that Red Hat Cloud Connector requires a Smart Management subscription, and enables you to remediate problems directly from Red Hat Insights. If you would rather use the traditional method for connecting to Insights (e.g. if you don’t want to install Ansible in every host or you already have a Satellite server), you can use the playbook wf_insights.yml from the same repository.

Now we have all the playbooks to use for the demo. Next we have to add and use them in the Ansible Controller Node (formerly known as Ansible Tower).

As mentioned before, I am using a workflow job template to connect the playbooks together in a workflow. Before they can be put into the workflow job template, the playbooks need to be associated with Job Templates. See the Job Template example figure.

Job Template example

As you can see above, a Job Template glues a playbook together with an inventory holding the machines you are managing, adding the credentials needed for the playbook and much more.

You can then set access permissions on the Job Template as well as the objects in it to control who can use it. To get more information about Job Templates, you can read more in the Ansible Controller user guide.

A Workflow Job Template consists of Job Templates and other types of tasks, such as inventory syncs or approval steps, etc. Here is an example from the demo.

You create the workflow in the visualizer, and it looks like this.

So from the start you create “nodes.” Deploy VM is my first node from where I point to a Job Template holding the credentials, playbook, etc. to clone my VM template in vSphere.

Then you work like this to define your entire workflow.

I also added a survey to my template which gives the person using the template a chance to set variables in a simple way that is controlled and predefined.

To learn more about Workflow Job Templates, you can read up on it here.

After the survey is done and you have chosen your configuration for the DB server, you can launch the provisioning workflow. The result will be a new VM optimized for SQL server running RHEL 8.5 (in my case) and SQL server in your vSphere cluster.

You can connect to it via Azure Data Studio as you would have done if it were running on a Windows machine.

Necessary metrics from the OS and from SQL server are sent to a central monitoring server where you can do performance troubleshooting or just see how it performs.

You can read more about how to setup and use the Grafana dashboards provided by RHEL System Roles, and more about performance monitoring of SQL server on RHEL in this blog post written by Don Pinto and Louis Imershein.

Last but not least, your SQL server is monitored by the Red Hat Insights service.

Red Hat Insights is included with most RHEL subscriptions and is a managed service using predictive analytics and deep domain expertise to assess IT environments and identify and prioritize operational and security risks, while simplifying subscription tracking across hybrid cloud deployments. Red Hat Insights not only focuses on the RHEL OS, but also on things like underlying infrastructure such as Azure and AWS, and applications like Microsoft SQL Server and others. Insights helps teams stay ahead of critical operational issues.

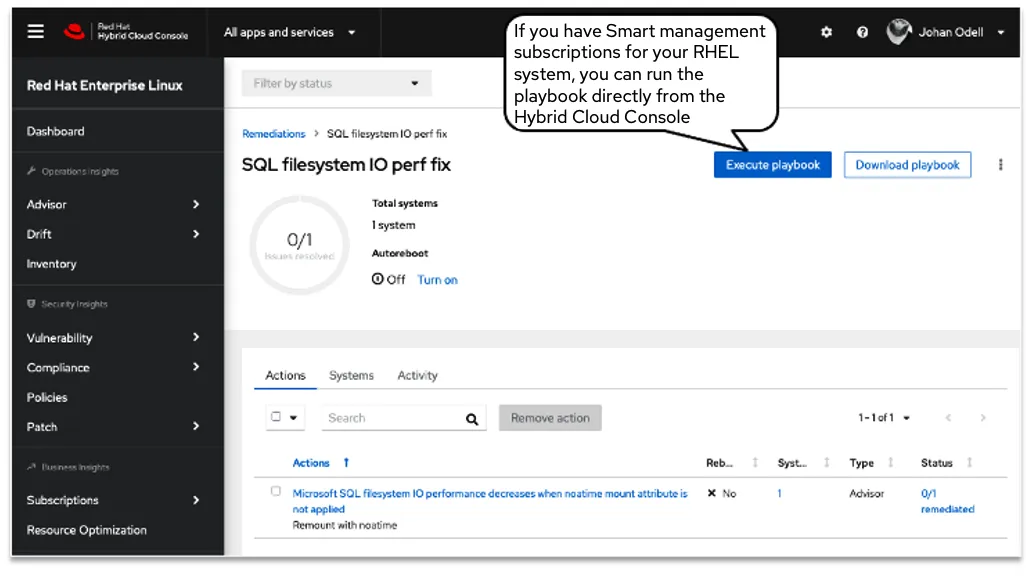

As you can see in the picture, Insights has found a configuration setting in my SQL server that can cause a performance decrease over time. Exactly the sort of thing that might be hard to find when users are complaining about performance and you have tons of other things to do.

In Insights, SQL on RHEL best practices are codified into our recommendations, helping you be more confident that your MS SQL deployment is running properly. The recommendations can be read in Insights, but Insights can even produce an Ansible playbook to get you to an optimal state by automating the steps needed to get there.

If this is interesting and you want to test it yourself, all the playbooks used in this demonstration can be found here.

This blog is only one example of testing automation with SQL Server on Red Hat Enterprise Linux for a broad set of use cases. Check out our recent case study if you are interested to learn more.

Sobre o autor

Johan Odell is a Solution Architect working with Enterprise Accounts at Red Hat with a focus on Linux, automation and Kubernetes. He has been at Red Hat since 2017, previously working in similar roles for over 20 years.

Navegue por canal

Automação

Últimas novidades em automação de TI para empresas de tecnologia, equipes e ambientes

Inteligência artificial

Descubra as atualizações nas plataformas que proporcionam aos clientes executar suas cargas de trabalho de IA em qualquer ambiente

Nuvem híbrida aberta

Veja como construímos um futuro mais flexível com a nuvem híbrida

Segurança

Veja as últimas novidades sobre como reduzimos riscos em ambientes e tecnologias

Edge computing

Saiba quais são as atualizações nas plataformas que simplificam as operações na borda

Infraestrutura

Saiba o que há de mais recente na plataforma Linux empresarial líder mundial

Aplicações

Conheça nossas soluções desenvolvidas para ajudar você a superar os desafios mais complexos de aplicações

Programas originais

Veja as histórias divertidas de criadores e líderes em tecnologia empresarial

Produtos

- Red Hat Enterprise Linux

- Red Hat OpenShift

- Red Hat Ansible Automation Platform

- Red Hat Cloud Services

- Veja todos os produtos

Ferramentas

- Treinamento e certificação

- Minha conta

- Suporte ao cliente

- Recursos para desenvolvedores

- Encontre um parceiro

- Red Hat Ecosystem Catalog

- Calculadora de valor Red Hat

- Documentação

Experimente, compre, venda

Comunicação

- Contate o setor de vendas

- Fale com o Atendimento ao Cliente

- Contate o setor de treinamento

- Redes sociais

Sobre a Red Hat

A Red Hat é a líder mundial em soluções empresariais open source como Linux, nuvem, containers e Kubernetes. Fornecemos soluções robustas que facilitam o trabalho em diversas plataformas e ambientes, do datacenter principal até a borda da rede.

Selecione um idioma

Red Hat legal and privacy links

- Sobre a Red Hat

- Oportunidades de emprego

- Eventos

- Escritórios

- Fale com a Red Hat

- Blog da Red Hat

- Diversidade, equidade e inclusão

- Cool Stuff Store

- Red Hat Summit