Peer-pods, also known as the Kata remote hypervisor, enable the creation of Kata virtual machines (VMs) in any environment, on-premises or in the cloud, without requiring bare metal servers or nested virtualization support. This is accomplished by extending the Kata Containers runtime to manage the VM lifecycle using cloud provider APIs (such as AWS or Azure) or third-party hypervisor APIs (such as VMware vSphere).

Since peer-pods are separate VMs alongside the Kubernetes node, traditional Container Storage Interface (CSI) drivers cannot function properly within them, and different solutions are required. This post digs into these challenges and discusses solutions for overcoming them in the context of CSI and peer-pods.

A refresher on peer-pods

In peer-pods, the workload runs in a separate VM adjacent to the Kubernetes worker node's VM. The peer-pod VM connects to the Kubernetes overlay network through a tunnel setup between the Kubernetes node VM and the peer-pod VM.

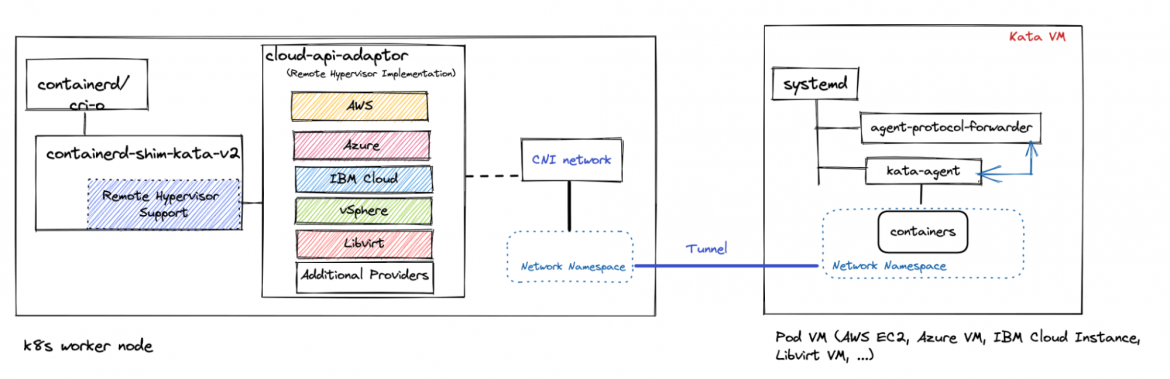

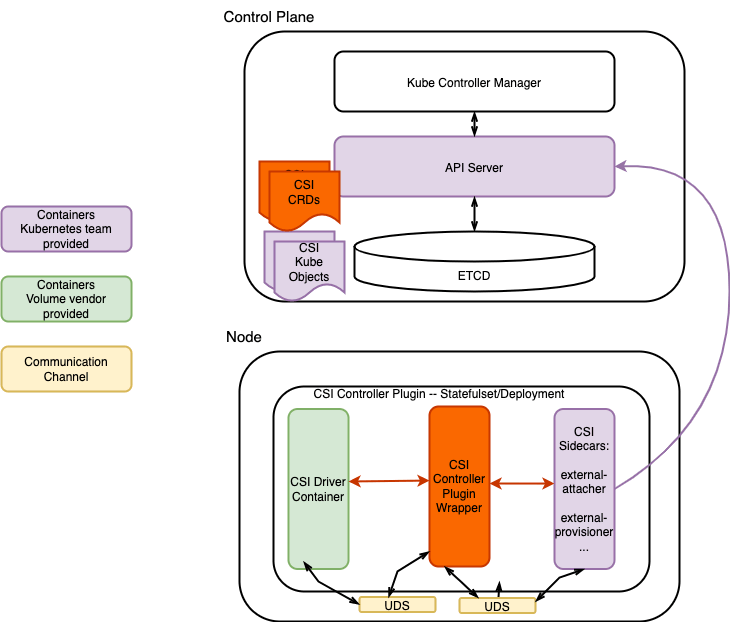

The following diagram from Red Hat OpenShift sandboxed containers: Peer-pods technical deep dive shows the overall peer-pods architecture, including the Kubernetes worker node and the Pod VM.

Figure 1: peer-pods architecture

The main components of a peer-pods architecture are:

- Remote hypervisor support: Enhances the Kata shim layer to interact with the cloud-api-adaptor instead of directly calling local hypervisor APIs.

- cloud-api-adaptor: Enables the creation of a Kata VM in a cloud environment and on third-party hypervisors by invoking their respective public APIs.

- agent-protocol-forwarder: Enables the kata-agent to communicate with the worker node over TCP.

The Container Storage Interface (CSI) in Kubernetes

The Container Storage Interface (CSI) exposes various persistent volumes to a pod. The Kubernetes community defines these interfaces and provides helpful sidecars to simplify the development and deployment of CSI volume drivers on Kubernetes. CSI volume drivers implement the CSI interface and consist of the CSI Controller Plugin and the CSI Node Plugin (explained in the next section). Storage developers leverage these sidecars, implement the CSI interface, and supply it to customers as CSI volume drivers. Here is a look at the details of the CSI interface.

The CSI interface

CSI volume drivers implement the CSI interface, which consists of two parts:

- CSI Controller Plugin: A cluster-level StatefulSet or Deployment to facilitate communication with the Kubernetes controllers.

- CSI Node Plugin: A node-level DaemonSet to facilitate communication with the Kubelet on each node.

The term CSI Plugins refers to both the CSI Controller Plugin and the CSI Node Plugin when there is no need to distinguish between them.
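The two-part split can be sketched as a pair of abstract interfaces. This is a minimal illustration, not the real CSI API: actual CSI drivers expose these operations as gRPC services, and only the RPC names mentioned in the docstrings (CreateVolume, ControllerPublishVolume, NodePublishVolume) come from the CSI specification. The Python method signatures and the `InMemoryDriver` class are hypothetical.

```python
from abc import ABC, abstractmethod


class ControllerPlugin(ABC):
    """Cluster-level half of a CSI driver (StatefulSet or Deployment)."""

    @abstractmethod
    def create_volume(self, name):
        """Provision a volume and return its ID (CSI RPC: CreateVolume)."""

    @abstractmethod
    def controller_publish_volume(self, volume_id, node_id):
        """Attach a volume to a node (CSI RPC: ControllerPublishVolume)."""


class NodePlugin(ABC):
    """Node-level half of a CSI driver (DaemonSet)."""

    @abstractmethod
    def node_publish_volume(self, volume_id, target_path):
        """Mount a volume at a path (CSI RPC: NodePublishVolume)."""


class InMemoryDriver(ControllerPlugin, NodePlugin):
    """Hypothetical toy driver that only records what it was asked to do."""

    def __init__(self):
        self.attached = {}  # volume_id -> node_id
        self.mounts = {}    # target_path -> volume_id

    def create_volume(self, name):
        return f"vol-{name}"

    def controller_publish_volume(self, volume_id, node_id):
        self.attached[volume_id] = node_id

    def node_publish_volume(self, volume_id, target_path):
        # Mounting only makes sense once the volume is attached somewhere.
        if volume_id not in self.attached:
            raise RuntimeError(f"{volume_id} is not attached to any node")
        self.mounts[target_path] = volume_id


driver = InMemoryDriver()
vol = driver.create_volume("data")
driver.controller_publish_volume(vol, "worker-1")
driver.node_publish_volume(vol, "/var/lib/kubelet/pods/example/volumes/data")
```

In a real deployment the controller and node halves run in different pods; they are combined in one class here only to keep the example short.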

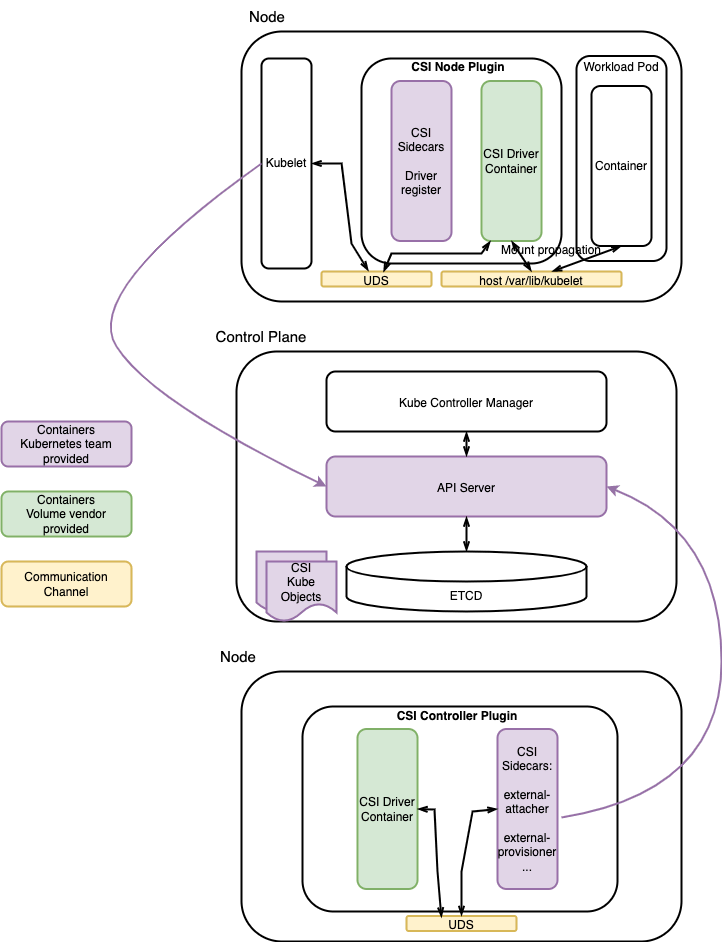

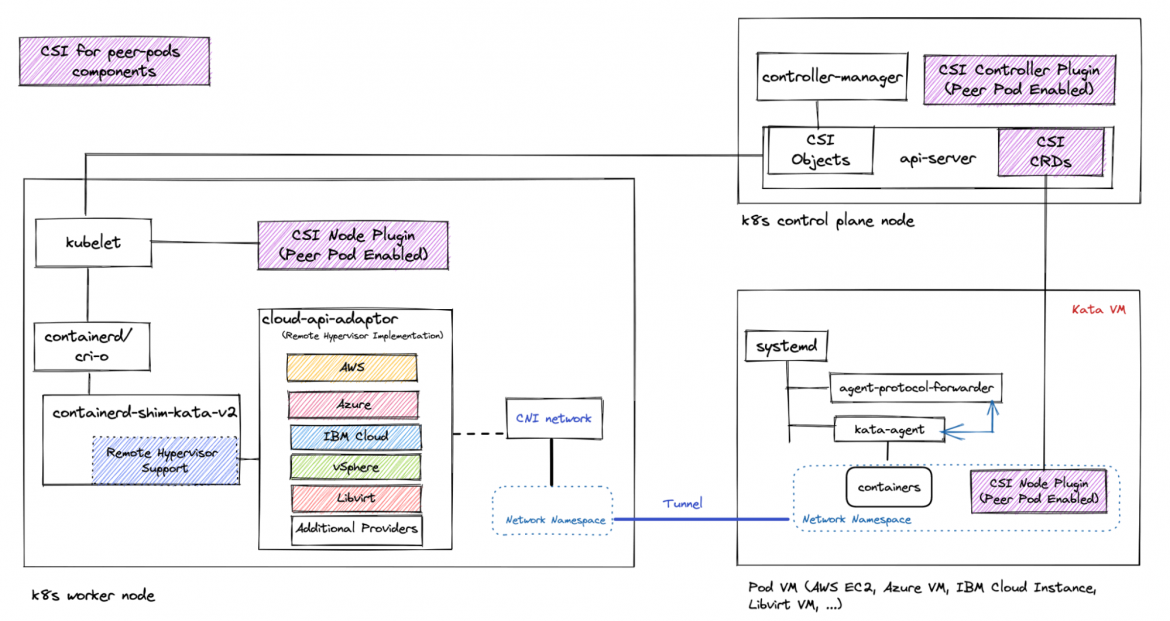

Figure 2 shows the high-level architecture of the CSI Controller Plugin:

Figure 2: CSI Controller Plugin

The figure shows that the volume vendor implements the CSI APIs within the CSI Driver Container (green) to create/delete or attach/detach volumes. The CSI Driver Container communicates with the CSI Sidecar (purple) via a Unix Domain Socket so that the CSI Sidecar can save the desired and actual status in the CSI Kube Objects inside the controller node (purple). The CSI Node Plugin can read the intermediate status within the CSI Kube Objects and take corresponding actions, such as mounting or unmounting a volume to the pod.

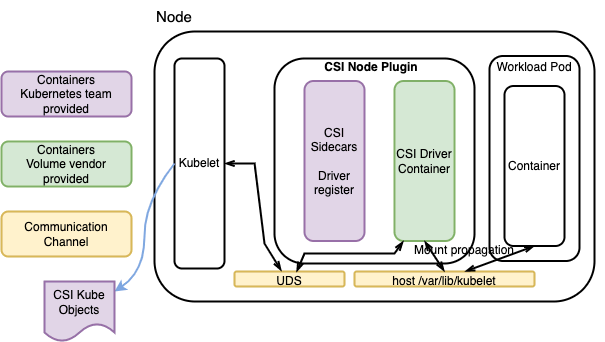

Figure 3 shows the high-level architecture of the CSI Node Plugin:

Figure 3: CSI Node Plugin

Figure 3 shows how the volume vendor implements the CSI APIs within the CSI Node Plugin and communicates with Kubelet via a Unix Domain Socket. Kubelet reads the intermediate status in the CSI Kube Objects, which is saved by the CSI Controller Plugin (illustrated in Figure 2), and calls the CSI Node Plugin to take actions (such as mounting or unmounting a volume to a pod) to make the actual state match the desired state.

The CSI Controller Plugin facilitates the communication with the control plane node, while the CSI Node Plugin facilitates the communication with the worker node's Kubelet instance and multiple CSI Kube Objects.

The following figure shows how the CSI node plugin and the CSI controller plugin interact with the control plane node:

Figure 4: CSI Controller Plugin and CSI Node Plugin

Use CSI with peer-pods

Let's look more closely at using CSI with a peer-pod.

For a standard pod, the use of a persistent volume includes the following steps:

- The volume is attached to the worker node.

- The volume is mounted to a path on the worker node.

- The mount path is propagated to the namespace of the pod from the worker node host.

- The mount path is mounted to a container in the pod.

This is more complicated with peer-pods. A volume attached to a worker node is not visible to peer-pods, as they are external VMs. Thus, for peer-pod workloads, you need to change how volumes are attached and mounted, and you need to do so without changing the CSI driver code itself.
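The visibility problem can be illustrated with a small simulation. This is a sketch under simplifying assumptions: the `Machine` class, the volume ID, and the paths are hypothetical, and real attach and mount operations go through the IaaS and the kernel rather than Python sets.

```python
from dataclasses import dataclass, field


@dataclass
class Machine:
    """Hypothetical model of a VM that can have volumes attached and mounted."""
    name: str
    attached: set = field(default_factory=set)
    mounts: dict = field(default_factory=dict)  # path -> volume_id

    def attach(self, volume_id):
        self.attached.add(volume_id)

    def mount(self, volume_id, path):
        # A VM can only mount a block device that is attached to it.
        if volume_id not in self.attached:
            raise RuntimeError(f"{volume_id} is not attached to {self.name}")
        self.mounts[path] = volume_id


def standard_flow(worker, volume_id):
    """Steps 1 and 2 for a standard pod: attach to the worker, then mount on it."""
    worker.attach(volume_id)
    worker.mount(volume_id, "/var/lib/kubelet/vol")
    # Steps 3 and 4 (propagating the path into the pod and its container)
    # work because a standard pod runs on the worker node itself.


worker = Machine("worker-node-vm")
peer_pod = Machine("peer-pod-vm")
standard_flow(worker, "vol-1")

# A peer-pod workload runs in a different VM, where the volume was never attached.
try:
    peer_pod.mount("vol-1", "/data")
    visible_in_peer_pod = True
except RuntimeError:
    visible_in_peer_pod = False
```

The volume ends up mounted on the worker node, while the attempt to use it from the peer-pod VM fails because that VM never had the volume attached.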

Challenges with CSI when using peer-pods

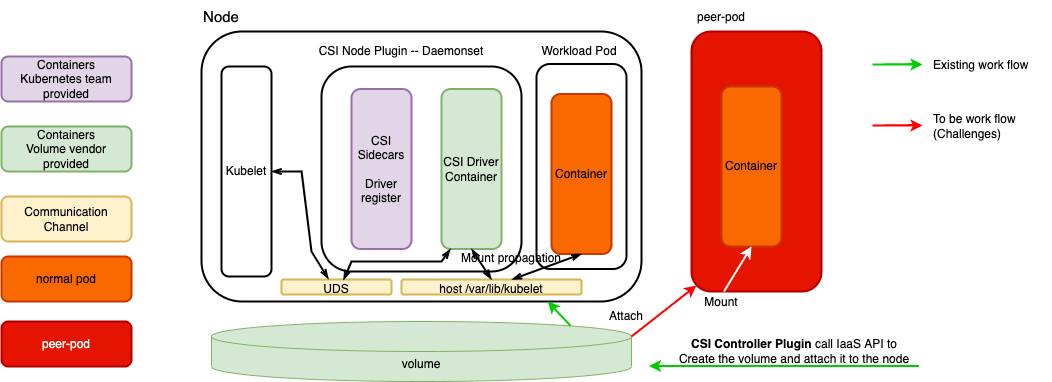

Figure 5 shows the challenges faced when using persistent volumes in peer-pods:

Figure 5: Challenges of using persistent volumes in peer-pods

You need to address the following questions:

- How do you interpret (that is, intercept and adapt) the calls to the CSI Controller Plugin and CSI Node Plugin so that they achieve the desired storage state, such as attaching a volume to the peer-pod VM rather than to the worker node VM?

- How do you solve the ordering of CSI actions? For example, attaching a volume happens before a standard pod is created, but for a peer-pod it needs to happen after the peer-pod VM is created.

CSI peer-pods solution overview

Interpret the CSI Controller Plugin and CSI Node Plugin

Figure 6 shows how to interpret the CSI Controller Plugin:

Figure 6: Interpret CSI Controller Plugin

Note the following:

- CSI Controller Plugin Wrapper: This is added to the flow between the CSI sidecars and the CSI Driver Container in the CSI Controller Plugin pod. It can manipulate the CSI API requests as needed. For example, the CSI Controller Plugin Wrapper can call an IaaS API to attach a volume to the peer-pod VM rather than to the worker node.

- Custom Resource Definition (CRD): A CSI CRD is added, which can cache and replay the intermediate CSI actions. More details on this later.
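A rough sketch of the controller-side wrapper idea follows. It assumes, purely for illustration, that the wrapper can look up which peer-pod VM a volume belongs to and redirect the attach request there. The class names, the lookup table, and the instance IDs are all hypothetical and do not reflect the project's actual implementation.

```python
class VendorControllerDriver:
    """Stand-in for the unmodified vendor CSI Driver Container."""

    def __init__(self):
        self.attachments = []  # (volume_id, node_id) pairs requested from the IaaS

    def controller_publish_volume(self, volume_id, node_id):
        self.attachments.append((volume_id, node_id))


class ControllerPluginWrapper:
    """Sits between the CSI sidecars and the vendor driver."""

    def __init__(self, driver, peer_pod_vm_for_volume):
        self.driver = driver
        # Hypothetical lookup: volume_id -> peer-pod VM instance ID.
        self.peer_pod_vm_for_volume = peer_pod_vm_for_volume

    def controller_publish_volume(self, volume_id, node_id):
        # Redirect the attach to the peer-pod VM when the volume belongs
        # to a peer-pod; otherwise pass the request through unchanged.
        target = self.peer_pod_vm_for_volume.get(volume_id, node_id)
        self.driver.controller_publish_volume(volume_id, target)
        return target


vendor_driver = VendorControllerDriver()
wrapper = ControllerPluginWrapper(vendor_driver, {"vol-peer": "peer-pod-vm-1"})
wrapper.controller_publish_volume("vol-peer", "worker-1")
wrapper.controller_publish_volume("vol-std", "worker-1")
```

The vendor driver stays untouched: only the request it receives changes, which is the point of placing a wrapper in front of it.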

Similar to Figure 6, Figure 7 shows how to interpret the CSI Node Plugin.

Figure 7: Interpret CSI Node Plugin

Note the following:

- CSI Node Plugin Wrapper: This is added to the CSI Node Plugin pod. Messages between Kubelet and the CSI Driver Container now flow through the CSI Node Plugin Wrapper. This allows the CSI API calls to be amended as needed. For example, the CSI Node Plugin Wrapper can skip the CSI mount call on the worker node and cache it in the CSI CRD instead.
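The node-side wrapper can be sketched in the same spirit. This is a minimal illustration, assuming the wrapper knows which volumes belong to peer-pods. The class names, the `peer_pod_volumes` set, and the list used as a stand-in for the CSI CRD are hypothetical.

```python
class VendorNodeDriver:
    """Stand-in for the unmodified vendor CSI Driver Container on the worker node."""

    def __init__(self):
        self.mounted = {}  # target_path -> volume_id

    def node_publish_volume(self, volume_id, target_path):
        self.mounted[target_path] = volume_id


class NodePluginWrapper:
    """Receives Kubelet's calls first and decides whether to forward them."""

    def __init__(self, driver, peer_pod_volumes, crd_cache):
        self.driver = driver
        self.peer_pod_volumes = peer_pod_volumes  # hypothetical set of volume IDs
        self.crd_cache = crd_cache                # hypothetical stand-in for the CSI CRD

    def node_publish_volume(self, volume_id, target_path):
        if volume_id in self.peer_pod_volumes:
            # Do not mount on the worker node; remember the request instead.
            self.crd_cache.append({
                "action": "NodePublishVolume",
                "volume_id": volume_id,
                "target_path": target_path,
            })
            return "cached"
        self.driver.node_publish_volume(volume_id, target_path)
        return "mounted"


node_driver = VendorNodeDriver()
crd_cache = []
node_wrapper = NodePluginWrapper(node_driver, {"vol-peer"}, crd_cache)
peer_result = node_wrapper.node_publish_volume("vol-peer", "/pods/a/data")
std_result = node_wrapper.node_publish_volume("vol-std", "/pods/b/data")
```

Requests for standard pods still reach the vendor driver, so the wrapper does not change behavior for workloads that are not peer-pods.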

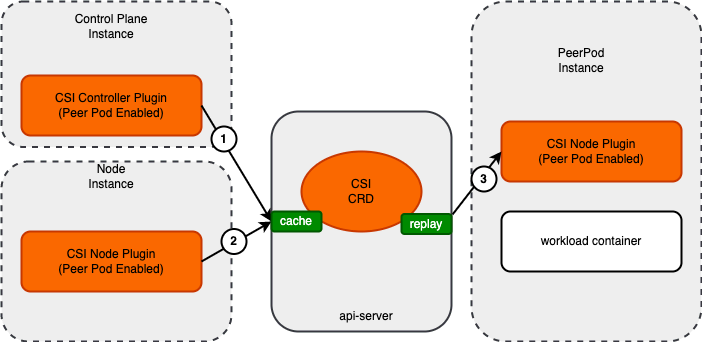

Solve the sequence of the CSI actions challenge

Figure 8 shows how the CSI CRD is used to cache and replay CSI actions. As discussed in the previous sections, some CSI actions in the CSI Controller Plugin and the CSI Node Plugin need to be postponed when using peer-pods. For example, attaching a volume, which happens before a standard pod is created, must now occur after the peer-pod VM is created. The Peer Pod Enabled CSI Controller Plugin and CSI Node Plugin generate the CSI CRD and cache it in the api-server. A Peer Pod Enabled CSI Node Plugin is also added inside the peer-pod VM. It retrieves the CSI CRD from the api-server and replays the CSI actions.

Figure 8: CSI actions cache and replay

Note the following:

- The CSI Controller Plugin caches the intermediate status and actions in the CSI CRD.

- The CSI Node Plugin on the worker node caches intermediate status and actions in the CSI CRD.

- A CSI Node Plugin is added to the peer-pod VM, where it retrieves and replays the actions cached in the CSI CRD.
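The cache-and-replay flow in the three points above can be sketched as follows. This is an illustration only: `CsiActionCache` is a hypothetical in-memory stand-in for the CSI CRD stored in the api-server, and the handler functions merely return strings where a real plugin would call the IaaS API or perform a mount.

```python
class CsiActionCache:
    """Hypothetical in-memory stand-in for the CSI CRD in the api-server."""

    def __init__(self):
        self._actions = {}  # volume_id -> list of (action, params)

    def record(self, volume_id, action, **params):
        self._actions.setdefault(volume_id, []).append((action, params))

    def pending(self, volume_id):
        return list(self._actions.get(volume_id, []))


def replay(cache, volume_id, handlers):
    """Run the cached actions for a volume in the order they were recorded."""
    return [handlers[action](volume_id, **params)
            for action, params in cache.pending(volume_id)]


cache = CsiActionCache()

# Before the peer-pod VM exists, the plugins only record what should happen.
cache.record("vol-1", "ControllerPublishVolume")
cache.record("vol-1", "NodePublishVolume", target_path="/data")

# Once the peer-pod VM is created, the plugin inside it replays the actions.
peer_pod_vm_id = "peer-pod-vm-1"
handlers = {
    "ControllerPublishVolume": lambda vol: f"attach {vol} to {peer_pod_vm_id}",
    "NodePublishVolume": lambda vol, target_path: f"mount {vol} at {target_path}",
}
replayed = replay(cache, "vol-1", handlers)
```

Keeping the actions in recorded order matters here, since the attach has to complete before the mount can succeed inside the peer-pod VM.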

Returning to Figure 1, the following figure shows the new components added to support persistent volumes:

Figure 9: CSI in peer-pods solution overview

Note the following:

- The Peer Pod Enabled CSI Controller Plugin on the control plane node.

- The Peer Pod Enabled CSI Node Plugin on the worker node.

- The Peer Pod Enabled CSI Node Plugin on the peer-pods VM.

- The CSI custom resource definition (CRD).

Summary

This blog post provided a high-level overview of persistent storage in the peer-pods solution.

It looked at the traditional CSI architecture, its workflow, and the unique challenges of using CSI with peer-pods. It also outlined solutions for resolving these challenges.

In addition, the post discussed the Peer Pod Enabled CSI Plugins and the peer-pods volume CRD, and examined how they are used together to provide persistent storage in a cloud-api-adaptor implementation.

About the authors

Qi Feng is an architect of cloud-native infrastructure and Confidential Computing in IBM Cloud and Systems. He is a maintainer of the cloud-api-adaptor project in Confidential Containers (CoCo). He is a big fan of open source and has contributed to various CNCF communities in addition to CoCo.

Da Li works in the area of confidential containers, is a maintainer of the CNCF Confidential Containers cloud-api-adaptor project, and focuses on csi-wrapper, podvm image builds, and e2e test pipelines.

Yohei works on performance and security enhancements of software stacks for IBM Z. He has contributed to various open source projects related to cloud and security, and has recently been contributing to the Confidential Containers project, a CNCF sandbox project.
