Confidential computing strengthens application security by providing isolation, encryption and attestation so data remains protected while in use. By integrating these security features with a scalable, high-performance artificial intelligence (AI) and machine learning (ML) ecosystem, organizations can adopt a defense-in-depth approach. This is especially critical for regulated industries handling sensitive data, such as Personally Identifiable Information (PII), Protected Health Information (PHI), and financial information, enabling them to leverage AI with confidence.

In this article, we explore how Red Hat OpenShift AI delivers a scalable, performant trusted execution environment (TEE) to deploy Kubernetes applications through confidential containers (CoCo). This is done by leveraging Azure confidential virtual machines (CVMs), powered by NVIDIA H100 GPUs with confidential computing capabilities.

We also showcase a proof of concept (PoC) for running NVIDIA NIM™ within the CoCo environment, demonstrating an end-to-end AI inference deployment. This approach enables fine-tuned, optimized AI applications to run seamlessly on OpenShift AI, leveraging the power of NVIDIA Hopper H100 GPUs in a confidential computing framework.

This article continues the work Red Hat and NVIDIA are doing together to support confidential GPUs, which was described in a previous article.

Red Hat OpenShift AI

OpenShift AI is a flexible, scalable AI and ML platform that enables enterprises to create and deliver AI applications at scale across hybrid cloud environments. It’s based on the community Open Data Hub project and common open source projects such as Jupyter, PyTorch and Kubeflow.

It provides trusted, operationally consistent capabilities for teams to experiment, serve models and deliver innovative applications.

OpenShift AI also supports creating, training and experimenting with ML models, and integrates with common AI frameworks. It includes tools for deploying AI models in production environments, providing scalability, stronger security and streamlined manageability. Building on Red Hat OpenShift, OpenShift AI also offers infrastructure management to orchestrate containers and cloud-native infrastructure for deploying AI workloads on different footprints. It also gives different company functions (data scientists, developers, IT teams) an efficient way to collaborate on developing, integrating and deploying models with specific business applications.

NVIDIA NIM

NVIDIA NIM is a set of cloud-native microservices that simplifies and accelerates the deployment of AI models, especially for generative AI (gen AI) applications, across diverse infrastructures such as the cloud, data centers and workstations.

NVIDIA NIM architecture

NIM is an optimizing inference engine leveraging NVIDIA® TensorRT™ and NVIDIA TensorRT-LLM for low-latency, high-throughput inferencing. NVIDIA NIM is built on a containerized microservice architecture including APIs, runtime layers and prebuilt model engines, which are deployed on Kubernetes clusters for enhanced scalability. NVIDIA NIM supports multiple AI models such as large language models (LLMs), vision language models (VLMs), domain-specific models and more.

NVIDIA NIM can be deployed on multiple footprints including on-premises, cloud and hybrid clouds. It’s designed as an easy-to-use solution where developers can integrate AI functionality into apps while performing minimal code changes and using standard APIs. NVIDIA NIM is also a production-grade solution strengthening data security, providing observability and monitoring, autoscaling using Kubernetes and continuous security updates to production deployments.
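As a rough illustration of the "standard APIs" point: NIM microservices for LLMs expose an OpenAI-compatible HTTP interface. The sketch below only builds such a request with Python's standard library and does not send it. The endpoint URL and model name are placeholders, not values taken from this PoC.

```python
import json
import urllib.request

# Hypothetical values: replace with the route and model of your own NIM deployment.
NIM_URL = "https://nim.example.com/v1/chat/completions"
MODEL_NAME = "example-llm"


def build_chat_request(prompt: str, url: str = NIM_URL,
                       model: str = MODEL_NAME) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("Summarize confidential computing in one sentence.")
    print(req.get_method(), req.full_url)
    # To actually send it, pass req to urllib.request.urlopen() against a reachable endpoint.
```

Because the request shape is the common chat-completions one, an application already written against that style of API would typically only need its base URL and model name changed.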

Red Hat OpenShift Confidential Containers

Red Hat OpenShift sandboxed containers, built on Kata Containers, now provide the additional capability to run confidential containers. Confidential containers are containers deployed within an isolated hardware enclave that help protect data and code from privileged users such as cloud or cluster administrators. The CNCF Confidential Containers project is the foundation for the OpenShift CoCo solution.

Confidential computing helps protect your data in use by leveraging dedicated hardware-based solutions. Using hardware, you can create isolated environments which are owned by you and help protect against unauthorized access or changes to your workload's data while it’s being executed (data in use).

CoCo enables cloud-native confidential computing using a number of hardware platforms and supporting technologies. CoCo aims to standardize confidential computing at the pod level and simplify its consumption in Kubernetes environments. By doing so, Kubernetes users can deploy CoCo workloads using their familiar workflows and tools without needing a deep understanding of the underlying confidential computing technologies.
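To make the "familiar workflows" point concrete, here is a minimal sketch of what a CoCo pod definition might look like, generated from Python. The runtime class name (`kata-remote`), the instance-size annotation and the Azure VM size are assumptions for illustration and are not stated in this article; check the OpenShift sandboxed containers documentation for the values that apply to your cluster.

```python
import json

# Assumptions (not stated in this article): the runtime class name and the
# annotation used to pick a GPU-capable confidential VM size.
RUNTIME_CLASS = "kata-remote"
INSTANCE_ANNOTATION = "io.katacontainers.config.hypervisor.machine_type"
INSTANCE_SIZE = "Standard_NCC40ads_H100_v5"


def coco_pod_manifest(name: str, image: str) -> dict:
    """Return an ordinary Pod manifest.

    Only runtimeClassName and one annotation differ from a regular pod.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            "annotations": {INSTANCE_ANNOTATION: INSTANCE_SIZE},
        },
        "spec": {
            "runtimeClassName": RUNTIME_CLASS,
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    # The image reference is a placeholder. `oc apply -f -` accepts JSON manifests.
    manifest = coco_pod_manifest("nim-demo", "registry.example.com/nim:latest")
    print(json.dumps(manifest, indent=2))
```

The point of the sketch is how little changes: the rest of the manifest is what a Kubernetes user would write for any other pod.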

OpenShift AI with NVIDIA NIM and confidential containers

Integrating NVIDIA NIM with OpenShift AI provides a unique value proposition, offering a scalable, flexible and performance-optimized platform for deploying gen AI applications.

NVIDIA NIM's microservices architecture integrates seamlessly with OpenShift AI, creating a smooth pathway for deploying AI models within a unified workflow. This enhances consistency and simplifies management across different AI deployments. The integration also facilitates the scaling and monitoring of NVIDIA NIM deployments alongside other AI models in hybrid cloud environments.

Incorporating CoCo into NIM and OpenShift AI improves security, provides enhanced data protection and unlocks additional avenues for AI usage in regulated industries such as financial services and healthcare.

Specifically, CoCo helps address the following Open Worldwide Application Security Project (OWASP) top 10 security issues for LLM applications, helping improve the trust and adoption of OpenShift AI with NVIDIA NIM across different industries:

- Prompt Injection (LLM01:2025): Helps prevent privileged entities in the infrastructure from modifying prompts without authorization by running inference workloads in protected and isolated environments, reducing susceptibility to manipulated inputs.

- Sensitive Information Disclosure (LLM02:2025): Encrypting sensitive data, including cryptographic keys, AI model parameters and user data during processing reduces the risk of exposure via memory dumping attacks.

- Data and Model Poisoning (LLM04:2025): Using a trusted execution environment minimizes data and model poisoning from compromised infrastructure or malicious privileged entities.

- Supply Chain Attacks on LLMs (LLM03:2025): Helps mitigate risks of deploying tampered or backdoored AI models through attestation and integrity checks before execution. The model can be encrypted at source and only decrypted inside the secure environment.

- Model Theft and Extraction (LLM10 in the 2023-24 OWASP list; covered under Unbounded Consumption, LLM10:2025): Protects proprietary and open source models from being extracted or replicated by making sure inferencing happens within secure and isolated environments.

- Excessive Agency (LLM06:2025): Restricts the execution of AI agents and plugins to trusted environments so they operate within predefined policies, mitigating the impact of potential privilege escalation vulnerabilities.

- Unbounded Consumption (LLM10:2025): Controls execution environments to mitigate abuse of AI infrastructure, such as denial-of-service threats to the complete infrastructure, by restricting impact to the isolated execution environment.

Use case: Confidential GPUs with NVIDIA NIM workloads in OpenShift AI

Now that we have a clear understanding of what the various pieces are and what they do, let's bring them all together.

To enable a confidential GPU use case we bring together the following building blocks:

- Azure public cloud: Supporting confidential virtual machines (CVMs) used for confidential containers and providing NVIDIA H100 GPUs used for confidential GPUs

- OpenShift: The workloads' orchestration platform running on Azure

- OpenShift AI: For managing the lifecycle of AI workloads (deployed via an operator)

- NVIDIA NIM: For running inference using confidential GPUs (enabled via OpenShift AI)

- Confidential containers: For provisioning confidential workloads connected to confidential GPUs (deployed via an operator)

- Attestation solution: Based on the Trustee project, for attesting both CPUs and GPUs in a confidential environment (deployed via an operator)

Architecture overview

Let's review the solution architecture.

TEEs, attestation and secret management

CoCo integrates TEE infrastructure with the cloud-native world. A TEE sits at the heart of a confidential computing solution: it is an isolated environment with enhanced security (e.g. runtime memory encryption, integrity protection) provided by confidential computing-capable hardware. The CVM that executes inside the TEE is the foundation for the OpenShift CoCo solution.

When you create a CoCo workload, a CVM is created and the workload is deployed within it. The CVM prevents anyone who isn’t the workload's rightful owner from accessing or even viewing what happens inside it.

Attestation is the process used to verify that a TEE, where the workload will run or where you want to send confidential information, is trusted. The combination of TEEs and attestation capability enables the CoCo solution to provide a trusted environment to run workloads and enforce the protection of code and data from unauthorized access by privileged entities.

In the CoCo solution, the Trustee project provides the capability of attestation. It’s responsible for performing the attestation operations and delivering secrets after successful attestation.
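The "attest first, then release secrets" idea can be sketched as a toy model. This is not the Trustee API: the measurement here is a plain SHA-256 hash standing in for hardware-signed evidence, and every name (`measure`, `release_secret`, the reference value, the secret store) is hypothetical.

```python
import hashlib
import hmac

# Toy stand-ins: real evidence is a hardware-signed report, not a bare hash.
REFERENCE_MEASUREMENT = hashlib.sha256(b"approved-cvm-image").hexdigest()
SECRETS = {"model-decryption-key": b"not-a-real-key"}


def measure(image: bytes) -> str:
    """Stand-in for the launch measurement a TEE would report."""
    return hashlib.sha256(image).hexdigest()


def release_secret(evidence: str, secret_id: str) -> bytes:
    """Release a secret only if the reported measurement matches the reference value."""
    if not hmac.compare_digest(evidence, REFERENCE_MEASUREMENT):
        raise PermissionError("attestation failed: measurement mismatch")
    return SECRETS[secret_id]


if __name__ == "__main__":
    # A workload booted from the approved image gets the key.
    print(release_secret(measure(b"approved-cvm-image"), "model-decryption-key"))
    # A tampered image produces a different measurement and is refused.
    try:
        release_secret(measure(b"tampered-cvm-image"), "model-decryption-key")
    except PermissionError as err:
        print(err)
```

The design choice worth noting is that the secret never leaves the attestation side unless verification succeeds, so a workload started in an altered environment simply has nothing to decrypt.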

Operators for supporting CoCo and attestation

The Red Hat CoCo solution is based on two key operators:

- Red Hat OpenShift confidential containers: A feature added to the Red Hat OpenShift sandboxed containers operator, which is responsible for deploying the building blocks that connect workloads (pods) to CVMs running inside the TEE provided by the hardware. This also enables support for confidential GPUs.

- Confidential compute attestation operator: Responsible for deploying and managing the Trustee service in an OpenShift cluster. Please note that the attestation operator should be deployed in a separate secure cluster the user is responsible for (more details in the following section).

Deploying OpenShift AI and enabling NVIDIA NIM

For our end-to-end flow to work, we also need to install and enable the following components:

- Red Hat OpenShift AI operator: This deploys OpenShift AI's components

- NVIDIA NIM: NVIDIA NIM is enabled from the OpenShift AI dashboard as described in the documentation. The NIM OpenShift AI application simplifies the user experience by bringing all the images available from NVIDIA's image registry into OpenShift.

Attesting CPU and GPU resources

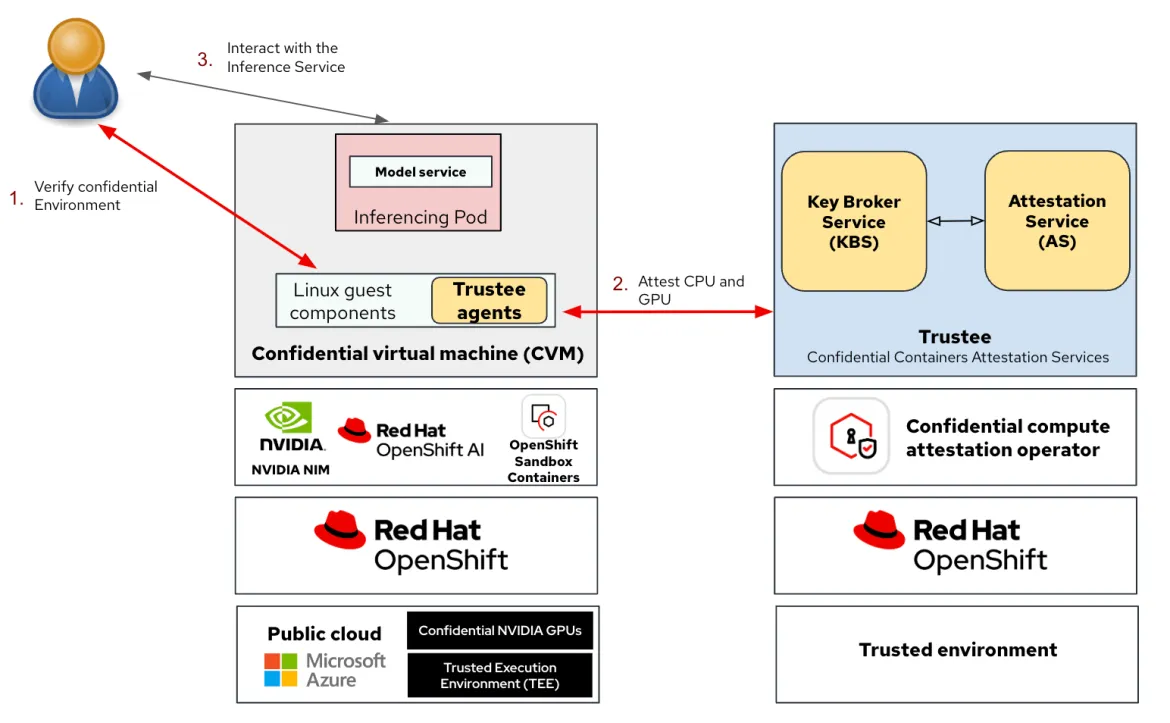

The following diagram shows how the different building blocks come together to offer confidential containers with confidential GPU capabilities:

Attestation for CPUs and GPUs across two OpenShift clusters (left and right)

A key point shown in this diagram is that the confidential GPUs solution is composed of two separate OpenShift clusters: a cluster running outside the reach of the public cloud (where attestation is securely deployed) and a cluster running on the public cloud for consuming the cloud’s resources.

The confidential compute attestation operator (right side), working as attester and secret resource provider, runs on the cluster outside the reach of the cloud provider. In this example, it runs on an OpenShift cluster located in a trusted environment (which the user is responsible for).

We deploy the OpenShift sandboxed containers and OpenShift AI operators, which enable us to create confidential containers and NVIDIA NIM workloads in the cluster running on the public cloud (left side).

The confidential containers are then deployed on the OpenShift cluster (left side) with an NVIDIA NIM workload; in this PoC, that is a NIM inference workload. The confidential compute attestation operator running in the trusted environment provides the required functionality to attest the CoCo environment to make sure nothing was altered by any privileged entity in the cloud environment.

For additional information on these building blocks we recommend reading our previous article: AI meets security: POC to run workloads in confidential containers using NVIDIA accelerated computing

Workflow overview

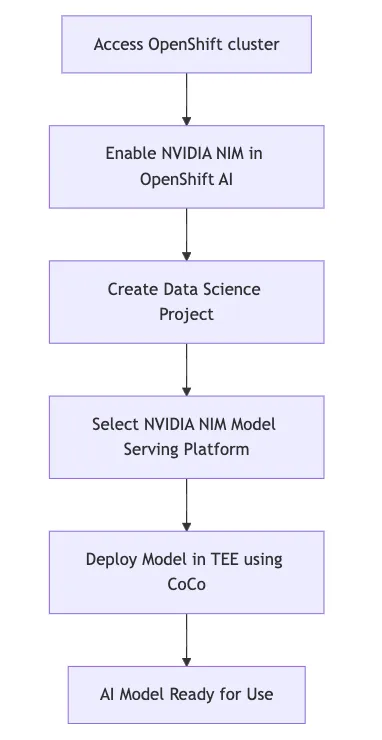

This illustration shows a high level workflow for deploying a model using NIM as a CoCo workload:

High-level workflow of NIM deployed model in OpenShift AI with CoCo

Before sending inference requests to the AI model, a user verifies the trustworthiness of the CoCo environment via remote attestation. Note that the attestation process must verify both the CPU and the GPU device.
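The client-side rule described here (no prompt leaves the client until both devices are attested) can be sketched as a small guard. The `AttestationResult` type and `guarded_inference` function are hypothetical names for illustration; how the verdicts are actually obtained depends on the attestation service in use.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AttestationResult:
    """Hypothetical summary of a remote attestation verdict."""
    cpu_trusted: bool
    gpu_trusted: bool

    @property
    def trusted(self) -> bool:
        # Both devices must pass; a trusted CPU alone is not enough.
        return self.cpu_trusted and self.gpu_trusted


def guarded_inference(result: AttestationResult,
                      send: Callable[[str], str],
                      prompt: str) -> str:
    """Call send(prompt) only when both CPU and GPU attestation succeeded."""
    if not result.trusted:
        raise RuntimeError("refusing to send prompt: environment not fully attested")
    return send(prompt)


def fake_send(prompt: str) -> str:
    """Placeholder for a real call to the NIM endpoint."""
    return f"(model reply to: {prompt})"


if __name__ == "__main__":
    print(guarded_inference(AttestationResult(True, True), fake_send, "hello"))
    try:
        guarded_inference(AttestationResult(True, False), fake_send, "hello")
    except RuntimeError as err:
        print(err)
```

Keeping the check in one wrapper means every inference path in the client goes through the same gate, rather than relying on each caller to remember it.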

Wrap up

By running NVIDIA NIM microservices in a confidential TEE provided by CoCo on OpenShift AI, we can accelerate the deployment of foundation models in the cloud and help keep confidential data secure.

This creates opportunities for regulated industries to adopt AI inferencing use cases with a stronger security posture. Stay tuned as we work on productizing the AI application support with CoCo, and follow the Red Hat confidential containers, Red Hat OpenShift AI and NVIDIA NIM projects for updates.

About the authors

Pradipta works in the area of confidential containers to enhance the privacy and security of container workloads running in the public cloud. He is one of the project maintainers of the CNCF Confidential Containers project.

Emanuele Giuseppe Esposito is a Software Engineer at Red Hat, with a focus on Confidential Computing, QEMU and KVM. He joined Red Hat in 2021, right after getting a Master's degree in CS at ETH Zürich. Emanuele is passionate about the whole virtualization stack, ranging from OpenShift sandboxed containers to low-level features in QEMU and KVM.
