Most general-purpose large language models (LLMs) are trained on a wide range of generic data from the internet. They often lack domain-specific knowledge, which makes it challenging for them to generate accurate or relevant responses in specialized fields. They can also struggle with new or technical terms, leading to misunderstandings or incorrect information.

"AI hallucination" is the term for output in which an AI model presents false or misleading information as fact. This is a direct result of the training goal: the model always predicts the next token, whether or not it has reliable knowledge about the question. It can be difficult to tell whether information provided by AI contains learned facts or a hallucination. This is a problem when you use an LLM for critical applications, such as those in healthcare or finance.

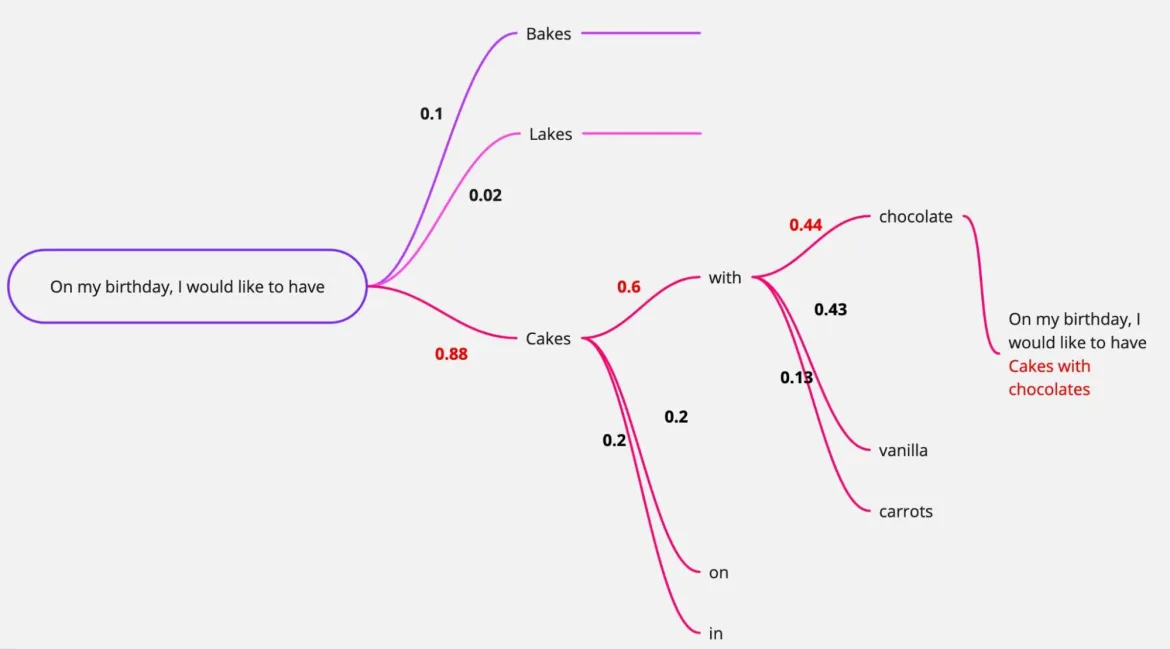

The diagram above demonstrates how an LLM predicts the next word in a sentence based on its training. In most cases, a "greedy algorithm" is used: the word with the highest probability is chosen and appended to the output.
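As a minimal sketch of the greedy step described above, the following Python turns a set of candidate-word scores into probabilities and picks the most likely one. The candidate words and their scores are hypothetical values chosen for illustration, not output from any real model.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def greedy_next_token(logits):
    """Return the single most probable token and its probability."""
    probs = softmax(logits)
    token = max(probs, key=probs.get)
    return token, probs[token]


# Hypothetical scores for the word following "The capital of France is".
example_logits = {"Paris": 5.1, "London": 2.3, "Lyon": 1.9, "banana": -3.0}

token, prob = greedy_next_token(example_logits)
print(f"{token} (p={prob:.2f})")
```

Note that `greedy_next_token` always returns some word, even when every candidate is poorly supported. Nothing in this step lets the model decline to answer, which is one way to see why a fluent but false continuation can appear.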

Hallucinations can cause security problems

Research has demonstrated that LLM output, when used verbatim, can lead to long-lasting, hard-to-detect security issues, including supply chain security problems. A few months ago, researchers exposed a new attack technique known as AI Package Hallucination. In this technique, an LLM tool recommends a package that does not exist, and an attacker publishes a malicious package under that hallucinated name, which end users then install on the strength of the model's output.

Code generation LLMs are often trained on code repositories from the internet. Sometimes these repositories contain intentionally malicious code, such as typosquatted or hijacked package dependencies. If a developer uses tainted code generated by a model, it can compromise their own systems and spread to their customers, causing widespread supply chain security issues.
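One way to avoid using LLM output verbatim is to review suggested dependencies before installing them. The sketch below assumes the organization keeps an allowlist of vetted packages. The allowlist contents, the function name, and the 0.8 similarity cutoff are all hypothetical choices for illustration. Names that closely resemble a vetted package are flagged as possible typosquats, and anything else is treated as unknown and left for a human to check.

```python
import difflib

# Hypothetical allowlist of dependencies the organization has already vetted.
VETTED_PACKAGES = frozenset({"requests", "numpy", "cryptography", "flask"})


def review_suggested_packages(suggested, vetted=VETTED_PACKAGES):
    """Sort LLM-suggested package names into approved, lookalike, and unknown."""
    report = {"approved": [], "lookalike": [], "unknown": []}
    for name in suggested:
        normalized = name.strip().lower()
        if normalized in vetted:
            report["approved"].append(normalized)
            continue
        # A near match to a vetted name is a possible typosquat.
        close = difflib.get_close_matches(
            normalized, sorted(vetted), n=1, cutoff=0.8
        )
        if close:
            report["lookalike"].append((normalized, close[0]))
        else:
            report["unknown"].append(normalized)
    return report


report = review_suggested_packages(["requests", "reqeusts", "fastjsonx"])
print(report)
```

A check like this does not prove that a package is safe. It only keeps unreviewed or suspicious names from being installed automatically.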

Hallucinations can cause financial losses and lawsuits

The hallucination issue with LLMs is especially problematic in finance, where precision is crucial. Recent research by Haoqiang Kang and Xiao-Yang Liu marks a pivotal effort in exploring LLM hallucination within financial contexts. When the outputs generated by LLMs are used directly to make financial decisions, they can lead to significant financial losses for companies and potential legal consequences.

Hallucination mitigation 101

Hallucinations are a reality with LLM output. While researchers attempt to figure out the best way to eliminate them, mitigating them is currently the most appropriate solution for enterprises. To do so, enterprises should:

1. Fine-tune the model with domain-specific knowledge

The primary source of LLM hallucinations is the model’s lack of training with domain-specific data. During inference, an LLM simply tries to account for knowledge gaps by inventing probable phrases. Training a model on more relevant and accurate information improves its responses and reduces the chance of hallucination.

InstructLab is an open source initiative by Red Hat and IBM that provides a platform for easy engagement with LLMs. It allows companies and contributors to easily fine-tune and align models with domain-specific knowledge using a taxonomy-based curation process.

2. Use Retrieval Augmented Generation (RAG)

RAG addresses AI hallucinations by grounding responses in retrieved facts. It searches an organization's private data sources for relevant information and uses it to supplement the LLM's public knowledge.

User prompts are converted to a vector representation using embedding models such as BERT. These vectors are used to search a vector database, which stores domain-specific information for this purpose. Finally, the output is generated from the original prompt together with the retrieved information to produce a more accurate response.
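The retrieval flow above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions. The `embed` function uses plain word counts in place of a real embedding model such as BERT, an in-memory list stands in for the vector database, and the documents and function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=1):
    """Return the top_k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, embed(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query, documents, top_k=1):
    """Combine the retrieved context with the original question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical internal documents standing in for a vector database.
DOCUMENTS = [
    "Refunds are processed within 14 days of the return request.",
    "Support is available on weekdays from 9:00 to 17:00 UTC.",
    "Passwords must be rotated every 90 days.",
]

prompt = build_prompt("How long do refunds take?", DOCUMENTS)
print(prompt)
```

The resulting prompt is what would be sent to the LLM. The instruction to answer only from the supplied context is what steers the model away from filling gaps with invented details.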

3. Use advanced prompting techniques

Advanced prompting techniques, such as chain of thought prompting, can considerably reduce hallucinations. Chain of thought prompting helps enable complex reasoning capabilities through intermediate reasoning steps. Further, it can be combined with other techniques, such as few-shot prompting, to get better results on complex tasks that require the model to reason before responding.
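As an illustration of combining few-shot and chain of thought prompting, the sketch below builds a prompt in which each worked example shows its reasoning before its answer, and the final question ends with a cue to reason step by step. The examples, the prompt layout, and the function name are hypothetical. Real applications would tune the wording for their model.

```python
# Hypothetical worked examples; each shows the reasoning before the answer.
FEW_SHOT_EXAMPLES = [
    {
        "question": "A server handles 120 requests per minute. "
                    "How many requests does it handle in 5 minutes?",
        "reasoning": "120 requests per minute times 5 minutes is 600 requests.",
        "answer": "600",
    },
    {
        "question": "A license costs 40 dollars per user. "
                    "What do 12 users cost?",
        "reasoning": "40 dollars per user times 12 users is 480 dollars.",
        "answer": "480 dollars",
    },
]


def build_cot_prompt(question, examples=FEW_SHOT_EXAMPLES):
    """Build a few-shot prompt that asks for reasoning before the answer."""
    parts = [
        f"Q: {ex['question']}\n"
        f"Reasoning: {ex['reasoning']}\n"
        f"Answer: {ex['answer']}"
        for ex in examples
    ]
    # Leave the reasoning open so the model writes its own intermediate steps.
    parts.append(f"Q: {question}\nReasoning: Let's think step by step.")
    return "\n\n".join(parts)


cot_prompt = build_cot_prompt(
    "A backup job copies 3 GB per hour. How much does it copy in 8 hours?"
)
print(cot_prompt)
```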

4. Use guardrails

Guardrails are the set of safety controls that monitor and dictate a user's interaction with an LLM application. They are programmable, rule-based systems that sit between users and foundation models to make sure the AI model operates within an organization's defined principles.

Using modern guardrails, especially those that support contextual grounding, can help reduce hallucinations. These guardrails check whether the model response is factually accurate based on the source and make sure the output is grounded in that source. Any new information introduced in the response is considered ungrounded.
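To make the idea of contextual grounding concrete, here is a deliberately crude sketch that flags response sentences whose content words mostly do not appear in the source. Word overlap is only a stand-in chosen for illustration. Production guardrails typically rely on stronger semantic checks, and the function names, the 0.6 threshold, and the sample texts are all hypothetical.

```python
import re


def split_sentences(text):
    """Split text into sentences at ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(text):
    """Lowercased words longer than three characters, a crude proxy for meaning."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if len(w) > 3}


def ungrounded_sentences(response, source, threshold=0.6):
    """Return response sentences whose content words are mostly absent from the source."""
    source_words = content_words(source)
    flagged = []
    for sentence in split_sentences(response):
        words = content_words(sentence)
        if not words:
            continue
        support = len(words & source_words) / len(words)
        if support < threshold:
            flagged.append(sentence)
    return flagged


SOURCE = (
    "The policy covers water damage up to 5000 dollars. "
    "Claims must be filed within 30 days."
)
RESPONSE = (
    "The policy covers water damage up to 5000 dollars. "
    "Earthquake losses are reimbursed in full."
)

flagged = ungrounded_sentences(RESPONSE, SOURCE)
print(flagged)
```

Here the second sentence of the response introduces information that the source never mentions, so it is the one that gets flagged. A guardrail could then block the response, remove the sentence, or ask the model to regenerate it.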

Understanding and mitigating the causes of hallucinations is critical for deploying an LLM in high-stakes domains such as healthcare, legal, and financial services, where accuracy and reliability are paramount. Though hallucinations cannot currently be completely eliminated, the way you implement solutions relying on an LLM can help mitigate them.

You can make setting up your own LLM solution for your organization easy with RHEL AI, an image specially designed for deploying AI.

About the author

Huzaifa Sidhpurwala is a Senior Principal Product Security Engineer for AI security, safety, and trustworthiness, working on the Red Hat Product Security team.
