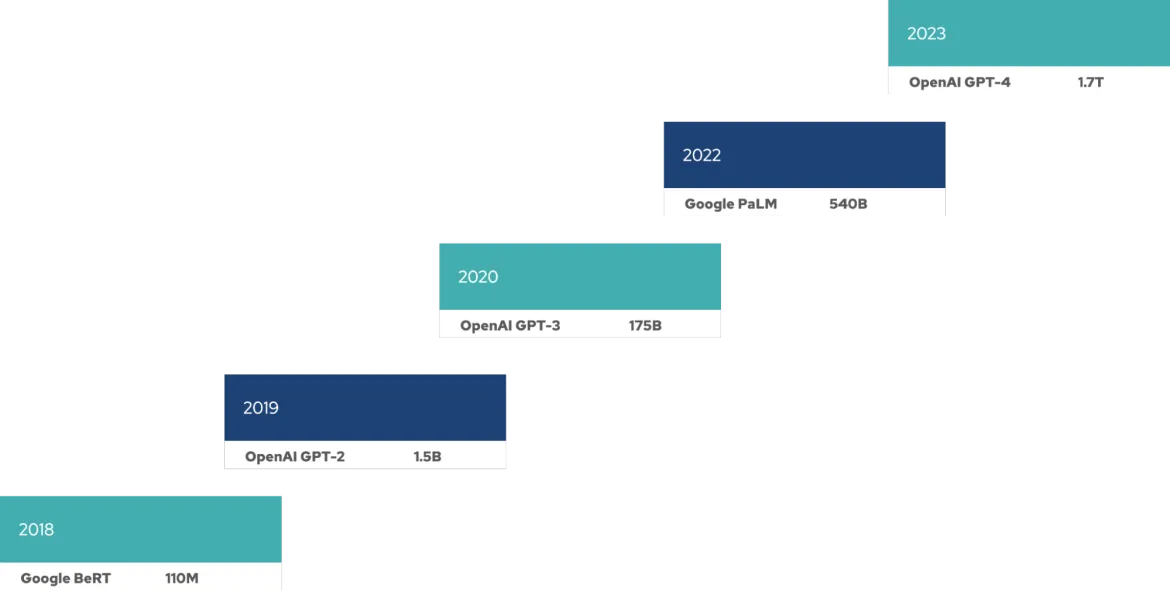

Large language models (LLMs) seem to get larger with each release. Training them takes a large number of GPUs, and more resources are needed throughout the model lifecycle for fine-tuning, inference and so on. There’s a new Moore’s law for LLMs: model size, measured by number of parameters, is doubling every four months.

LLMs are costly

It's expensive in terms of resources, time and money to train and operate an LLM. Resource requirements have a direct impact on companies deploying an LLM, whether they run it on their own infrastructure or through a hyperscaler. And LLMs are getting even bigger.

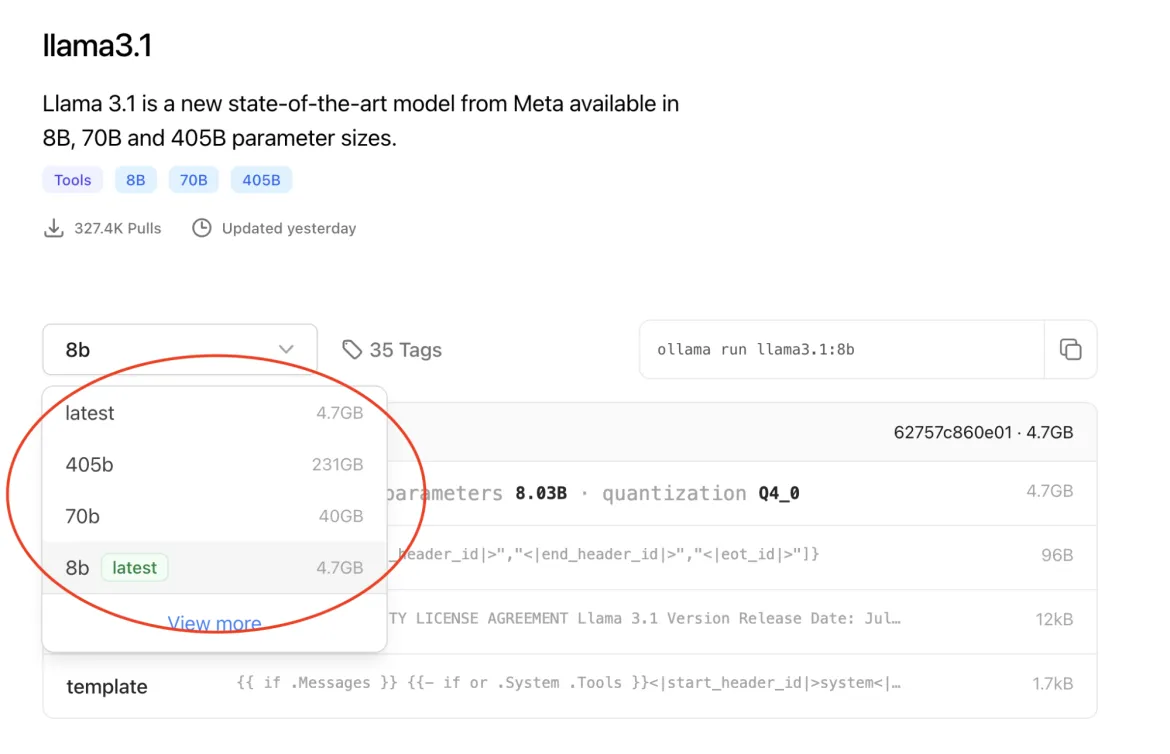

Operating an LLM also requires a great deal of resources. The largest Llama 3.1 model has 405 billion parameters, requiring 810GB of memory (FP16) for inference alone, and fine-tuning it requires 3.25TB of memory. The Llama 3.1 model family was trained on 15 trillion tokens, using 39 million GPU hours on a GPU cluster. With LLM size increasing exponentially, the computing and memory requirements for training and operating these models are growing as well.
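The 810GB figure follows directly from the parameter count and the number of bits used per parameter. As a minimal sketch (the function name is illustrative, and it counts the weights only, ignoring activations, caches and other runtime overhead):

```python
def model_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Memory needed to hold the weights alone, in GB (1 GB = 10**9 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


LLAMA_3_1_PARAMS = 405_000_000_000  # 405 billion parameters, as quoted above

# Weight memory at a few common precisions.
for label, bits in [("FP32", 32), ("FP16", 16), ("INT8", 8), ("INT4", 4)]:
    print(f"{label}: {model_memory_gb(LLAMA_3_1_PARAMS, bits):,.1f} GB")
```

At 16 bits per parameter this gives the 810GB quoted above. Halving the bits per parameter halves the weight memory.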

There was a severe shortage of GPUs last year, and just as the gap between supply and demand is narrowing, the next bottleneck is expected to be power. With more data centers coming online and electricity consumption for each data center doubling to almost 150MW, it’s easy to see why this would become a problem for the AI industry.

How to make LLMs less expensive

Before we discuss how to make LLMs less costly, consider an example we’re all familiar with. Cameras are constantly improving, with new models taking higher resolution pictures than ever before, but the raw image files can be 40MB or larger each. Apart from media professionals who need to manipulate these images, most people are happy with a JPEG version of the image, which shrinks the file size by 80%. Sure, JPEG compression decreases the image quality compared to the raw original, but for most purposes JPEG is good enough. Additionally, it usually takes special applications to process and view a raw image, so dealing with raw images has a higher computation cost than dealing with JPEG images.

Now let’s go back to talking about LLMs. The model size depends on the number of parameters, so one approach is to use a model with fewer parameters. All popular open source models come in a range of parameter counts, allowing you to choose the one that best suits a specific application.

However, an LLM with a greater number of parameters generally outperforms one with fewer parameters across most benchmarks. To lower the resource requirements, it may be better to use a larger model but compress it to a smaller size. Tests have shown that GAN compression can reduce computation by almost 20 times.

There are dozens of approaches to compressing an LLM, including quantization, pruning, knowledge distillation and layer reduction.

Quantization

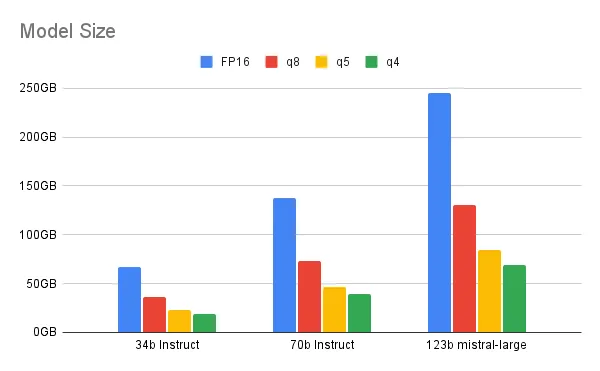

Quantization changes the numerical values in a model from 32-bit floating point format to a lower precision data type: 16-bit floating point, 8-bit integer, 4-bit integer or even 2-bit integer. Because each value is stored and processed with fewer bits, the model needs less memory and computation. Quantization can be done after the model is trained or during the training process.
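To make the idea concrete, here is a minimal sketch of post-training quantization using a single shared scale factor (symmetric quantization). The function names and the sample weights are made up for illustration. Production toolchains typically use per-channel or per-group scales and more careful rounding.

```python
def quantize(values: list[float], bits: int = 8) -> tuple[list[int], float]:
    """Map floats to signed integers of the given width using one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit, 7 for 4-bit, 1 for 2-bit
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.82, -0.31, 0.05, -1.27, 0.64]  # illustrative values only

for bits in (8, 4, 2):
    q, scale = quantize(weights, bits)
    restored = dequantize(q, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"{bits}-bit: ints={q} max error={worst:.4f}")
```

Running this prints the stored integers and the worst-case reconstruction error for each bit width. The error grows as the bit width shrinks, which is the accuracy cost of a more compact representation.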

As we move to lower bits, there is a tradeoff involved between quantization and performance. This paper highlights the ideal range offered by 4-bit quantization for larger (at least 70 billion parameters) models. Anything lower shows a noticeable performance discrepancy between the LLM and its quantized counterpart. For a smaller model, 6-bit or 8-bit quantization may be a better choice.

Thanks to quantization, I can run this LLM RAG demo on my laptop.

Pruning

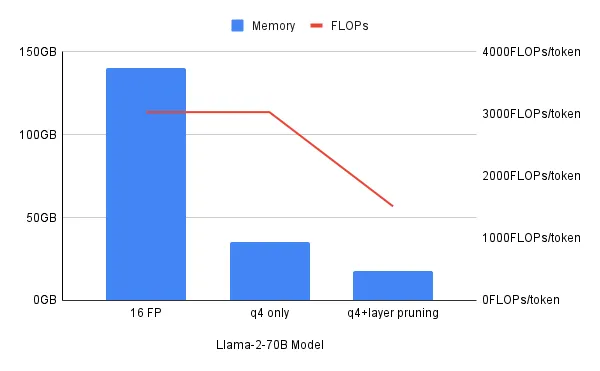

Pruning reduces model size by eliminating less important weights or neurons, which requires a delicate balance between reducing model size and preserving accuracy. Pruning can be done before, during or after model training. Layer pruning takes this idea further by removing whole blocks of layers. In this paper, the authors report that up to 50% of layers can be removed with minimal degradation of performance.
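One simple way to decide which weights are "less important" is to look at their magnitude. The sketch below assumes that criterion (the text above does not prescribe one) and zeroes out the smallest weights until a target sparsity is reached. The function name and sample values are illustrative.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the given fraction of weights, smallest magnitudes first."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    num_to_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_indices = set(order[:num_to_prune])
    return [0.0 if i in pruned_indices else w for i, w in enumerate(weights)]


weights = [0.82, -0.03, 0.05, -1.27, 0.64, 0.01, -0.40, 0.09]  # illustrative
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)  # half of the entries are now zero
```

Zeroed weights only save memory and computation when they are stored and executed in a sparse format, or when whole neurons or layers are removed. That is part of why layer pruning is attractive.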

Knowledge distillation

Knowledge distillation transfers knowledge from a large model (the teacher) to a smaller model (the student). The smaller model is trained on the larger model’s outputs instead of on a larger training dataset.

This paper shows how distilling Google’s BERT model into DistilBERT reduced the model size by 40% and increased inference speed by 60%, while retaining 97% of its language understanding capabilities.
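Training a student on a teacher’s outputs is commonly done by softening both models’ output distributions with a temperature and penalizing the difference between them. The following is a generic sketch of such a loss for a single example, not the exact recipe used for DistilBERT. The logits, the temperature value and the function names are illustrative assumptions.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float],
                      student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL divergence from the softened teacher distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


teacher = [4.0, 1.5, 0.2]        # illustrative logits for one input
good_student = [3.6, 1.4, 0.3]   # close to the teacher
poor_student = [0.5, 2.5, 1.0]   # disagrees with the teacher

print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

The loss is near zero when the student reproduces the teacher’s distribution and grows as the two diverge. In practice this term is usually combined with a standard loss on the ground-truth labels.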

Hybrid approach

While each of these compression techniques is helpful on its own, sometimes a hybrid approach that combines different techniques works best. This paper points out how 4-bit quantization reduces memory needs by 4 times, but not computation resources as measured by FLOPS (floating-point operations per second). Quantization combined with layer pruning helps reduce both memory and computation resources.
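A back-of-the-envelope model shows why the two techniques complement each other. The sketch below rests on simplifying assumptions: a hypothetical 70-billion-parameter model with 80 identical layers, a 16-bit baseline, weight memory only, and the common rule of thumb of roughly 2 floating-point operations per parameter per generated token.

```python
from dataclasses import dataclass


@dataclass
class ModelCost:
    layers: int
    params_per_layer: int
    bits_per_param: int

    @property
    def memory_gb(self) -> float:
        """Weight memory in GB (1 GB = 10**9 bytes)."""
        return self.layers * self.params_per_layer * self.bits_per_param / 8 / 1e9

    @property
    def gflops_per_token(self) -> float:
        # Rule-of-thumb assumption: ~2 operations per parameter per token.
        # The operation count does not depend on the bit width.
        return 2 * self.layers * self.params_per_layer / 1e9


# Hypothetical model: 80 layers x 875 million parameters = 70 billion parameters.
baseline = ModelCost(layers=80, params_per_layer=875_000_000, bits_per_param=16)
quantized = ModelCost(layers=80, params_per_layer=875_000_000, bits_per_param=4)
hybrid = ModelCost(layers=40, params_per_layer=875_000_000, bits_per_param=4)

for name, m in [("baseline", baseline), ("4-bit", quantized), ("4-bit + half the layers", hybrid)]:
    print(f"{name}: {m.memory_gb:.1f} GB, {m.gflops_per_token:.0f} GFLOPs per token")
```

In this simplified model, quantization alone cuts memory by 4 times and leaves the operation count unchanged, while removing half of the layers halves both.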

Benefits of a smaller model

Using smaller models can significantly reduce computational requirements, while maintaining a high level of performance and accuracy.

- Lower computation costs: Smaller models lower CPU and GPU requirements, which can result in significant cost savings. Considering that high-end GPUs can cost as much as $30,000 each, any reduction in computational cost is good news.

- Decreased memory use: Smaller models require less memory than their larger counterparts. This helps when deploying models to resource-constrained systems like IoT devices or mobile phones.

- Faster inference: Smaller models load and execute quickly, which reduces inference latency. Faster inference can make a big difference for real-time applications like autonomous vehicles.

- Reduced carbon footprint: The reduced computational requirements of smaller models help improve energy efficiency, thereby reducing environmental impact.

- Deployment flexibility: Lower computational requirements increase flexibility for deploying models where they’re needed. Models can be deployed to fit dynamically changing user needs or system constraints, including at the edge of the network.

Smaller and less expensive models are gaining popularity, as evidenced by the recent releases of GPT-4o mini (60% cheaper than GPT-3.5 Turbo) and the open source innovations of SmolLM and Mistral NeMo:

- Hugging Face SmolLM: A family of small models with 135 million, 360 million, and 1.7 billion parameters

- Mistral NeMo: A small model with 12 billion parameters, built in collaboration with Nvidia

This trend towards small language models (SLMs) is driven by the benefits discussed above. There are lots of options available with smaller models: use a pre-built model, or use compression techniques to shrink an existing LLM. Your use case should drive which approach you take when choosing a small model, so consider your options carefully.

About the author

Ishu Verma is a Technical Evangelist at Red Hat focused on emerging technologies like edge computing, IoT and AI/ML. He and fellow open source hackers work on building solutions with next-gen open source technologies. Before joining Red Hat in 2015, Verma worked at Intel on IoT gateways and on building end-to-end IoT solutions with partners. He has been a speaker and panelist at IoT World Congress, DevConf, Embedded Linux Forum, Red Hat Summit and other on-site and virtual forums. He lives in the Valley of the Sun, Arizona.
