TrustyAI is an open source community dedicated to providing a diverse toolkit for responsible artificial intelligence (AI) development and deployment. TrustyAI was founded in 2019 as part of Kogito, an open source business automation community, as a response to growing demand from users in highly regulated industries such as financial services and healthcare.

With increasing global regulation of AI technologies, toolkits for responsible AI are an invaluable and necessary asset to any MLOps platform. Since 2021, TrustyAI has been independent of Kogito and has grown in size and scope amid the recent surge of interest in AI. As a major contributor to TrustyAI, Red Hat is dedicated to helping the community continue to grow and evolve.

As an open source community, TrustyAI is uniquely positioned in the responsible AI space. The TrustyAI community believes that democratizing the design and research of responsible AI tooling through an open source model is key to empowering those affected by AI decisions (nowadays, basically everyone) to have a say in what it means to use AI responsibly.

TrustyAI use cases

The TrustyAI community maintains a number of responsible AI projects spanning model explainability, model monitoring, and responsible model serving. To understand the value of these projects, let’s look at two example use cases. The first investigates a “traditional” machine learning (ML) model that predicts insurance claim acceptance, and the second deploys a generative AI (gen AI) model as an algebra tutor.

Predictive model: Insurance claim acceptance

In this scenario, imagine an insurance company that wants to use an ML model to help process claims; perhaps the model produces some estimate of how likely a claim is to be approved, where high-likelihood claims are then automatically approved.

First, let’s perform a granular analysis of the predictive model by reviewing a specific insurance claim that the model has marked as likely to be denied. Using an explainability algorithm, we can see which features of the application contributed most to the denial. If any potentially discriminatory factors, such as race or gender, played a role in the model’s reasoning, we could flag this particular decision for manual review.
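As a rough illustration of feature attribution, the sketch below scores a claim with a hypothetical linear model. It then measures how much each feature moves the score when that feature is reset to a baseline value. The model, its weights, the feature names, the baseline, and the review threshold are all illustrative assumptions. This is a simple baseline-substitution attribution, not TrustyAI’s explainability API or any of its specific algorithms.

```python
from typing import Callable, Dict, List, Tuple

Features = Dict[str, float]

# Hypothetical linear approval model: higher scores mean the claim is more likely approved.
WEIGHTS: Features = {
    "claim_amount": -0.002,
    "prior_claims": -0.8,
    "policy_years": 0.3,
    "gender": 0.5,  # a sensitive attribute the model should not rely on
}


def approval_score(x: Features) -> float:
    return sum(w * x[name] for name, w in WEIGHTS.items())


def baseline_attributions(
    model: Callable[[Features], float], x: Features, baseline: Features
) -> List[Tuple[str, float]]:
    """Attribute the score to each feature by resetting that feature to a baseline value.

    A feature's attribution is the change in score caused by moving it from its
    baseline value to the applicant's actual value. Results are sorted by magnitude.
    """
    full = model(x)
    attributions = []
    for name in x:
        perturbed = dict(x)
        perturbed[name] = baseline[name]
        attributions.append((name, full - model(perturbed)))
    return sorted(attributions, key=lambda item: abs(item[1]), reverse=True)


SENSITIVE_FEATURES = {"gender", "race"}


def flag_for_review(attributions: List[Tuple[str, float]], threshold: float = 0.1) -> bool:
    """Flag the decision if any sensitive feature moved the score by more than `threshold`."""
    return any(
        name in SENSITIVE_FEATURES and abs(value) > threshold for name, value in attributions
    )


claim = {"claim_amount": 5000.0, "prior_claims": 2.0, "policy_years": 1.0, "gender": 0.0}
baseline = {"claim_amount": 2000.0, "prior_claims": 0.0, "policy_years": 5.0, "gender": 0.5}
attributions = baseline_attributions(approval_score, claim, baseline)
print(attributions)
print(flag_for_review(attributions))
```

For a linear model, this attribution reduces to each weight times the feature’s distance from its baseline. That makes it easy to check by hand, but it is far less robust than the model-agnostic explainers used on real models.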

Next, we use an anomaly detection algorithm to confirm that the model’s training data adequately covers claims like this one, so that the model can process it accurately. If this claim is sufficiently different from the model’s training data, the model will likely not produce trustworthy results, and its decision would again warrant manual review.
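One simple way to approximate this check is to measure how many standard deviations each feature of the new claim lies from the training data. The sketch below does exactly that. The per-feature z-score approach, the 3.0 threshold, and the example data are illustrative assumptions, not the specific anomaly detection algorithm that TrustyAI uses.

```python
import math
import statistics
from typing import Dict, List, Tuple

Row = Dict[str, float]
Profile = Dict[str, Tuple[float, float]]


def fit_profile(training_rows: List[Row]) -> Profile:
    """Record the mean and sample standard deviation of every feature in the training data."""
    profile = {}
    for name in training_rows[0]:
        values = [row[name] for row in training_rows]
        profile[name] = (statistics.mean(values), statistics.stdev(values))
    return profile


def anomalous_features(profile: Profile, x: Row, z_threshold: float = 3.0) -> List[str]:
    """Return the features of `x` lying more than `z_threshold` std devs from the training mean."""
    flagged = []
    for name, (mean, std) in profile.items():
        if std == 0:
            # A constant training feature: any different value is maximally unusual.
            z = 0.0 if x[name] == mean else math.inf
        else:
            z = abs(x[name] - mean) / std
        if z > z_threshold:
            flagged.append(name)
    return flagged


training = [
    {"age": 30.0, "claim_amount": 1000.0},
    {"age": 35.0, "claim_amount": 1500.0},
    {"age": 40.0, "claim_amount": 2000.0},
    {"age": 45.0, "claim_amount": 2500.0},
    {"age": 50.0, "claim_amount": 3000.0},
]
profile = fit_profile(training)

typical = {"age": 42.0, "claim_amount": 2200.0}
unusual = {"age": 95.0, "claim_amount": 2000.0}
print(anomalous_features(profile, typical))
print(anomalous_features(profile, unusual))
```

Any claim with a non-empty result would be routed to manual review. Per-feature z-scores miss unusual *combinations* of individually ordinary values, which is one reason real deployments tend to use multivariate methods.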

If both checks confirm that the model’s prediction was fair and reliable, we might finally turn to a counterfactual algorithm. This provides a report describing what the applicant might be able to do differently to get their claim approved, from which we could give them actionable feedback on their application.
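A counterfactual search can be sketched as a greedy loop. At each step, it applies the single allowed change that most improves the model’s score, and it stops once the claim crosses the approval threshold. The model, feature names, step sizes, and bounds below are hypothetical. Greedy search is a deliberately simple stand-in here, not TrustyAI’s counterfactual explainer.

```python
from typing import Callable, Dict, Optional, Tuple

Features = Dict[str, float]
# Actionable features only: name -> (step size, lower bound, upper bound).
Actions = Dict[str, Tuple[float, float, float]]


def approval_score(x: Features) -> float:
    """Hypothetical model: a claim is approved when its score is at least 0."""
    return 0.3 * x["policy_years"] - 0.002 * x["claim_amount"] + 2.0 * x["has_documentation"]


def greedy_counterfactual(
    model: Callable[[Features], float],
    x: Features,
    actions: Actions,
    threshold: float = 0.0,
    max_steps: int = 50,
) -> Optional[Features]:
    """Return a modified copy of `x` that the model approves, or None if none is found."""
    current = dict(x)
    for _ in range(max_steps):
        current_score = model(current)
        if current_score >= threshold:
            return current
        best, best_score = None, current_score
        for name, (step, low, high) in actions.items():
            candidate = dict(current)
            candidate[name] = min(max(current[name] + step, low), high)
            if candidate[name] == current[name]:
                continue  # this feature is already at its bound
            score = model(candidate)
            if score > best_score:
                best, best_score = candidate, score
        if best is None:
            return None  # no allowed change improves the score
        current = best
    return current if model(current) >= threshold else None


def describe_changes(original: Features, counterfactual: Features) -> Dict[str, Tuple[float, float]]:
    """List each feature the applicant would need to change, as (before, after)."""
    return {k: (original[k], counterfactual[k]) for k in original if original[k] != counterfactual[k]}


claim = {"policy_years": 2.0, "claim_amount": 4000.0, "has_documentation": 0.0}
actions = {
    "claim_amount": (-500.0, 0.0, float("inf")),  # the applicant could claim a smaller amount
    "has_documentation": (1.0, 0.0, 1.0),  # the applicant could supply supporting documents
}
cf = greedy_counterfactual(approval_score, claim, actions)
print(describe_changes(claim, cf))
```

Restricting the search to actionable features (here, `policy_years` is deliberately left out) is what turns the result into feedback that the applicant can actually act on.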

Next, let’s look at our model on a broader scale to understand larger patterns in how it treats our users. We can use a bias algorithm, which monitors disparities in the model’s treatment of various demographic groups and how those disparities change over time. For example, we might want to monitor the rate of claim acceptance across the different racial groups among our applicants and ensure that those rates do not differ in any statistically significant way. If we notice the rates diverging, that is likely a sign that we need to retrain our model or rethink our architecture.
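To make “statistically significant” concrete, the sketch below works on one window of decisions. It computes the statistical parity difference, which is the gap in acceptance rates between two groups, and then runs a two-proportion z-test. The group data and the choice of z-test are assumptions for illustration, and this is not TrustyAI’s bias-monitoring API. Running the same check on successive time windows would give the over-time monitoring described above.

```python
import math
from typing import Sequence, Tuple


def acceptance_rate(outcomes: Sequence[int]) -> float:
    """Fraction of claims accepted, where each outcome is 1 (accepted) or 0 (denied)."""
    return sum(outcomes) / len(outcomes)


def statistical_parity_difference(group: Sequence[int], reference: Sequence[int]) -> float:
    """Acceptance rate of `group` minus that of `reference`; 0 means parity."""
    return acceptance_rate(group) - acceptance_rate(reference)


def two_proportion_z_test(a: Sequence[int], b: Sequence[int]) -> Tuple[float, float]:
    """Return (z, two-sided p-value) under the null hypothesis of equal acceptance rates."""
    n1, n2 = len(a), len(b)
    pooled = (sum(a) + sum(b)) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.0, 1.0  # every claim had the same outcome, so there is no disparity
    z = (acceptance_rate(a) - acceptance_rate(b)) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


group_a = [1] * 60 + [0] * 40  # 60% of this group's claims accepted
group_b = [1] * 80 + [0] * 20  # 80% of this group's claims accepted
spd = statistical_parity_difference(group_a, group_b)
z, p = two_proportion_z_test(group_a, group_b)
if p < 0.05:
    print(f"Acceptance rates differ significantly (SPD={spd:.2f}, p={p:.4f}); investigate or retrain.")
```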

This analysis can be complemented by a drift algorithm, which analyzes the inbound data and checks that the general distribution of data the model is seeing aligns with the data it was trained on. For example, we might notice that the age distribution of our users is significantly different from that of our training data. In that case, our model is unlikely to produce trustworthy predictions and needs to be retrained on more representative data. Both of these analyses could be hooked into an automated model deployment pipeline, so that any concerning metrics are immediately flagged and trigger the appropriate remediation protocols.
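One common way to compare an inbound feature distribution against the training distribution is the two-sample Kolmogorov-Smirnov (KS) test. It measures the largest gap between the two empirical cumulative distribution functions. The sketch below applies it to a single feature such as age. The use of the KS test, the 1.358 coefficient (the asymptotic critical value for a 5% significance level), and the sample ages are assumptions, not a description of TrustyAI’s drift implementation.

```python
import math
from typing import Sequence


def ks_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Largest absolute difference between the empirical CDFs of samples `a` and `b`."""
    a, b = sorted(a), sorted(b)
    n, m = len(a), len(b)
    i = j = 0
    d = 0.0
    while i < n and j < m:
        x = min(a[i], b[j])
        # Step both empirical CDFs past every sample equal to x (this handles ties).
        while i < n and a[i] <= x:
            i += 1
        while j < m and b[j] <= x:
            j += 1
        d = max(d, abs(i / n - j / m))
    return d


def drift_detected(training: Sequence[float], inbound: Sequence[float], c_alpha: float = 1.358) -> bool:
    """Reject "same distribution" when D exceeds the asymptotic critical value for alpha = 0.05."""
    n, m = len(training), len(inbound)
    critical = c_alpha * math.sqrt((n + m) / (n * m))
    return ks_statistic(training, inbound) > critical


training_ages = [25, 31, 38, 42, 47, 52, 29, 35, 44, 58]
inbound_ages = [67, 71, 74, 69, 80, 77, 72, 66, 75, 70]
print(drift_detected(training_ages, inbound_ages))
```

The critical value is an asymptotic approximation. With very small windows, an exact KS test or a larger window is the safer choice before triggering retraining.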

Gen AI: Algebra tutor

For the second scenario, imagine that we’ve been provided a generative AI model and want to use it as an algebra tutor. To do this, we might want to verify that the model has good domain-specific knowledge, and to guardrail user interactions so that they stay within that intended domain.

To verify the model’s algebra abilities, we could run evaluations using TrustyAI’s Language Model Evaluation service. This service is based on EleutherAI’s popular upstream lm-evaluation-harness library. It provides a suite of automated evaluations that measure a wide variety of aspects of model performance. These range from broad evaluations of the model’s general knowledge, language comprehension, or susceptibility to racial biases to more targeted evaluations of specific domain knowledge in fields like medicine or physics. In our case, we’ll pick three subsets of the Massive Multitask Language Understanding (MMLU) benchmark: abstract algebra, college mathematics, and high school mathematics. If our model scores well on these examinations, that will give us confidence that it is well-suited to serve as a reliable algebra tutor.
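Multiple-choice benchmarks such as MMLU are commonly scored by asking the model how likely each candidate answer is. A question counts as correct when the highest-likelihood answer matches the reference. The sketch below shows that scoring loop. A hypothetical `loglikelihood` callable stands in for the model, and toy questions stand in for the MMLU subsets. This is not the lm-evaluation-harness or TrustyAI API. To our knowledge, the three subsets are exposed in lm-evaluation-harness itself as the tasks `mmlu_abstract_algebra`, `mmlu_college_mathematics`, and `mmlu_high_school_mathematics`.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence

# Hypothetical interface: log-probability the model assigns to `continuation` given `prompt`.
LogLikelihood = Callable[[str, str], float]


@dataclass
class Question:
    prompt: str
    choices: Sequence[str]
    answer: int  # index of the correct choice


def predict(question: Question, loglikelihood: LogLikelihood) -> int:
    """Pick the choice to which the model assigns the highest log-likelihood."""
    scores = [loglikelihood(question.prompt, choice) for choice in question.choices]
    return max(range(len(scores)), key=lambda k: scores[k])


def accuracy(questions: List[Question], loglikelihood: LogLikelihood) -> float:
    correct = sum(predict(q, loglikelihood) == q.answer for q in questions)
    return correct / len(questions)


def evaluate(tasks: Dict[str, List[Question]], loglikelihood: LogLikelihood) -> Dict[str, float]:
    """Accuracy per task, for example one entry per MMLU subset."""
    return {name: accuracy(questions, loglikelihood) for name, questions in tasks.items()}


def toy_model(prompt: str, continuation: str) -> float:
    """Toy stand-in for a model that always favours the answer "4"."""
    return 0.0 if continuation == "4" else -5.0


tasks = {
    "high_school_mathematics": [Question("What is 2 + 2?", ["3", "4", "5"], answer=1)],
    "abstract_algebra": [Question("What is the order of the group Z_2?", ["2", "4"], answer=0)],
}
print(evaluate(tasks, toy_model))
```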

Once we are satisfied with the model’s performance in the desired domain, we can set up a guardrailed deployment using TrustyAI Guardrails. Guardrails lets us filter the content sent both to and from our models, which limits the interactions between the user and the model to those that we intend. Here, we might apply input and output guardrails that check that the content of the conversation is related to mathematics. Then, as we build out the full application around our model, we can use any guardrail violations as a signal to guide the user back toward the intended topics. This helps prevent unexpected behaviors in unforeseen interaction pathways, which might otherwise expose us to unforeseen liabilities.
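The input and output pattern can be sketched as a wrapper around the model call. The wrapper checks the user’s message before it reaches the model, checks the model’s reply before it reaches the user, and returns a redirect message on any violation. The keyword-and-pattern topic check below is a deliberately crude, hypothetical stand-in for a real topic detector. None of these names come from TrustyAI Guardrails.

```python
import re
from typing import Callable

# Hypothetical topic detector: a keyword list plus a pattern for simple equations.
MATH_TERMS = {
    "algebra", "equation", "solve", "variable", "polynomial", "factor", "simplify",
    "fraction", "linear", "quadratic", "math", "mathematics", "subtract", "multiply", "divide",
}
EQUATION = re.compile(r"\d\s*[-+*/^=]\s*\d|\b[a-z]\s*[=^]\s*\d")

REDIRECT = "I can only help with algebra and other mathematics topics. What would you like to work on?"


def is_math_related(text: str) -> bool:
    lowered = text.lower()
    words = set(re.findall(r"[a-z]+", lowered))
    return bool(words & MATH_TERMS) or EQUATION.search(lowered) is not None


def guarded_chat(user_message: str, model: Callable[[str], str]) -> str:
    if not is_math_related(user_message):
        return REDIRECT  # input guardrail: the request never reaches the model
    reply = model(user_message)
    if not is_math_related(reply):
        return REDIRECT  # output guardrail: an off-topic reply never reaches the user
    return reply


def tutor(message: str) -> str:
    """Stand-in for the deployed model; always returns the same worked answer."""
    return "Subtract 3 from both sides to get 2x = 4, then divide by 2: x = 2."


print(guarded_chat("Solve 2x + 3 = 7", tutor))
print(guarded_chat("What's the best stock to buy right now?", tutor))
```

In a full application, receiving `REDIRECT` is the signal the surrounding code can use to steer the conversation back toward mathematics.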

TrustyAI community projects

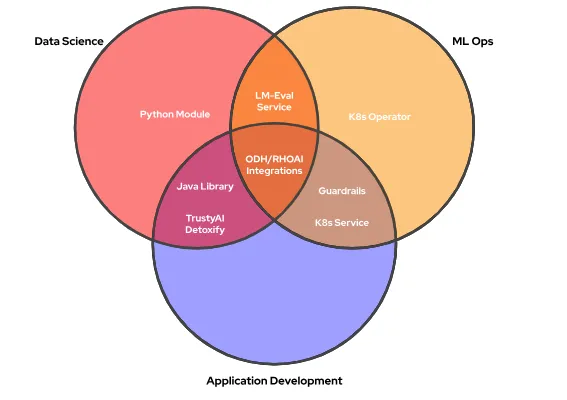

The TrustyAI community provides tools to perform these workflows for a number of different user profiles. For Java developers, we provide the TrustyAI Java library, the oldest active project within our community. Its purpose is to enable a responsible AI workflow within Java applications, which at the time of its initial development made it the only such library within the Java ecosystem. While Java is not a common language for ML work, its nature as a compiled language allows for impressive speed gains compared to the more-typical ML lingua franca, Python.

For data scientists and developers that are using Python, we expose our Java library via the TrustyAI Python library, which fuses the advantages of Java’s speed to the familiarity of Python. Here, TrustyAI’s algorithms are integrated with common data science libraries like Numpy and Pandas. Future work is planned to add native Python algorithms to the library, broadening TrustyAI’s compatibility by integrating with libraries like PyTorch and TensorFlow. One such project is trustyai-detoxify, a module within the TrustyAI Python library that provides guardrails, toxic language detection, and rephrasing capabilities for use with LLMs.

The different features within TrustyAI and how they map to different user profiles.

For enterprise and MLOps use cases, TrustyAI provides the TrustyAI Kubernetes Service and Operator. These serve TrustyAI’s bias, explainability, and drift algorithms within a cloud environment and provide interfaces for the Language Model Evaluation and Guardrails features. Both the service and the operator are integrated into Open Data Hub and Red Hat OpenShift AI to facilitate coordination between model servers and TrustyAI, giving users of both platforms easy access to our responsible AI toolkit. Currently, the TrustyAI Kubernetes Service supports tabular models served with KServe or ModelMesh.

The future of TrustyAI

As the largest contributor to TrustyAI, Red Hat believes that as AI’s influence continues to grow, it’s imperative for a community of diverse contributors, perspectives, and experiences to define responsible AI. As we continue to grow the work of the TrustyAI community, we must also collaborate and integrate with other open source communities, such as Kubeflow and EleutherAI, to foster growth and expansion for all.

The landscape of AI is ever-changing, and TrustyAI needs to continuously evolve to remain impactful. To stay up to date with our latest additions, check out TrustyAI’s community guidelines and roadmaps, ask questions on the discussion board, and join the community Slack channel.

To read more about Red Hat's KubeCon NA 2024 news, visit the Red Hat KubeCon newsroom.

About the author

Rob Geada has worked at Red Hat for 8 years, with a focus on data science, machine learning, and AI responsibility. Rob has a PhD in Computer Science from Newcastle University, where he researched automated design algorithms for convolutional neural networks.
