Back in December, we announced that Windows Container support for Red Hat OpenShift became generally available. This was made possible by the Windows MachineConfig Operator (WMCO), which acts as an entry point for OpenShift customers to run containerized Windows workloads in their OpenShift cluster. This Operator allows users to deploy a Windows worker node as a day 2 task. This gave users the ability to manage their Windows container workloads alongside Linux containers in AWS or Azure.

With OpenShift 4.7, we’ve updated the WMCO to extend functionality and support to vSphere IPI clusters. Using the updated version of the WMCO on OpenShift 4.7 or later, users can now run their Windows container workloads and Linux containers on one platform, vSphere.

In this blog, I will go over how to install and deploy a Windows worker node on OpenShift.

Prerequisites

A full list of prerequisites for installing on vSphere can be found in the official documentation. Please review these before attempting to install OpenShift on vSphere. Specifically, you’ll need the information about vCenter, a user with the right permissions, and two DNS entries. Again, consult the documentation if you have any questions.

Additionally, for Windows containers, you will need to install the OVNKubernetes network plug-in with Hybrid Networking Overlay customizations. It’s important to note that this can only be done at installation time*.

Once you’ve taken a look at the prerequisites, you can install OpenShift to VMware vSphere version 6.7U3 or 7.0 using the full-stack automation, also known as Installer Provisioned Infrastructure or IPI, experience.

* Since you cannot yet migrate between OpenShiftSDN and OVNKubernetes, Windows Containers are supported only on clusters that were installed with OVNKubernetes plus the hybrid networking overlay and then upgraded to 4.7, and on new 4.7 installs.

You must also prepare a Windows Server 2019 “golden image” to use as the base for your Windows worker nodes. This includes installing all the latest updates, installing the Docker runtime, installing and configuring OpenSSH, and installing VMware Tools.

Installing OpenShift With Hybrid Overlay Networking

You must install OpenShift using vSphere IPI with OVN and configure hybrid-overlay networking customizations. These steps are outlined on the official OpenShift documentation site. The Windows MachineConfig Operator will not work unless you install the cluster the way the documentation describes.

Once you have followed the documentation and the installer has finished the installation process, verify that hybrid overlay networking is enabled:

$ oc get network.operator cluster -o jsonpath='{.spec.defaultNetwork}' | jq -r

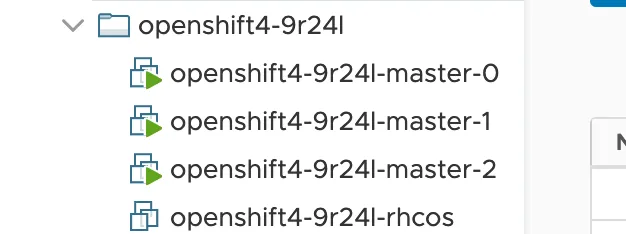
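
If you’d rather script this check than read the output by eye, a minimal sketch might look like the following. The has_hybrid_overlay name is my own, and the sketch assumes the hybrid overlay settings show up under a hybridOverlayConfig key in the output of the command above.

```shell
# Sketch: succeed only if the network config on stdin mentions hybridOverlayConfig.
# has_hybrid_overlay is a hypothetical helper name, not part of oc.
has_hybrid_overlay() {
  grep -q 'hybridOverlayConfig'
}

# Usage against a live cluster (needs oc and a logged-in session):
#   oc get network.operator cluster -o jsonpath='{.spec.defaultNetwork}' \
#     | has_hybrid_overlay && echo "hybrid overlay is enabled"
```

The helper reads from stdin on purpose, so you can try it against saved output without touching a cluster.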

Note that the OpenShift installer creates a folder inside of vSphere with the name of your cluster and a unique identifier.

This is important because we will be placing the Windows Server golden image in this folder.

Creating a Windows Server Golden Image

Before you can deploy a Windows worker, you first need to prepare a VM template to be used by the OpenShift Machine API to build a Windows worker. Moreover, the WMCO uses SSH to install and configure the Windows machine as a worker. Therefore, we’ll first need to create an SSH key for the WMCO to use:

$ ssh-keygen -t rsa -b 4096 -N '' -f ${HOME}/.ssh/windows_node

Once you’ve created the SSH key, you can proceed to prepare your Windows node. To prepare the Windows VM, you’ll need the following:

- Windows Server 2019 LTSC version 10.0.17763.1457 or older.

- The following patch must be installed - KB4565351.

- Open TCP port 10250 on the Windows firewall for container logs.

- SSH installed with key-based authentication as the Administrator user with the keypair you created. This is usually done by adding the public key to the authorized_keys file in the .ssh directory of the Administrator’s home directory. Currently, using the local Administrator account is required and the host must not be joined to a domain.

- Docker runtime installed.

- Any base images pre-pulled into the server. See this matrix for compatibility.

- VMware Tools installed. Note that the C:\ProgramData\VMware\VMware Tools\tools.conf file must contain exclude-nics= to make sure the vNIC generated on the Windows VM by the hybrid overlay won't be ignored.

- The server generalized with sysprep in a way that preserves SSH key-based authentication.

For consistency, name the Windows VM <clustername>-<id>-windows. This is consistent with how the Red Hat Enterprise Linux CoreOS (RHCOS) VM template is named. Save this VM in the same folder as the one your cluster is running in. In the end, you’ll see something like this, below. (I’ve outlined the Windows VM in a red box.)

After you’ve run the sysprep command and shut down the VM, you can leave it as a VM (which is what the RHCOS VM is) or convert it into a template. The WMCO will work with both.

Installing the Windows MachineConfig Operator

The WMCO can be installed via OperatorHub on your cluster. This can easily be done via the OpenShift UI.

Please consult the official documentation for specifics on how to install the Windows MachineConfig Operator. The high-level steps are outlined below:

- From the Administrator perspective, use the left side navigation to go to Operators → OperatorHub.

- In the Filter by keyword box, type Windows Machine Config Operator to filter the catalog, then select the Windows Machine Config Operator tile.

- After reviewing the information, go ahead and click Install.

- On the Install Operator page, select 4.7 as the update channel and leave the Installation Mode and Installed Namespace at their respective defaults. Select either Automatic or Manual for the Approval Strategy.

- Click Install.

After a while, you should see a Pod in the openshift-windows-machine-config-operator namespace:

$ oc get pods -n openshift-windows-machine-config-operator

The WMCO uses SSH to interact with the Windows machine. You will need to load the SSH private key into a secret. This is the same key that you created when you prepared the Windows Server golden image.

Load the private key as a secret into the openshift-windows-machine-config-operator namespace:

$ oc create secret generic cloud-private-key \ |

The key should now be loaded into OpenShift:

$ oc get secret cloud-private-key -n openshift-windows-machine-config-operator
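
The create step can also be wrapped in a small helper. This is only a sketch: create_wmco_key_secret is a name I made up, and it assumes the WMCO expects the key to be stored under the entry name private-key.pem, so please confirm that against the official documentation for your version.

```shell
# Sketch: load the SSH private key for the WMCO into its namespace.
# Assumption: the operator looks for the key under the entry name private-key.pem.
# create_wmco_key_secret is a hypothetical helper name.
create_wmco_key_secret() {
  local key_file="${1:-${HOME}/.ssh/windows_node}"
  oc create secret generic cloud-private-key \
    --from-file=private-key.pem="${key_file}" \
    -n openshift-windows-machine-config-operator
}

# Usage (needs oc and a logged-in session):
#   create_wmco_key_secret "${HOME}/.ssh/windows_node"
```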

Deploying a Windows Machine

Creating a Windows Machine uses the same process as creating a RHCOS Machine using a MachineSet object. To build a MachineSet YAML file, you’ll need the clusterID. You can get this with the following command:

$ oc get -o jsonpath='{.status.infrastructureName}{"\n"}' infrastructure cluster

Using this, and information about my vSphere cluster and folder/VM names, I can build my MachineSet:

apiVersion: machine.openshift.io/v1beta1 |

The full list of options and values available for the MachineSet YAML can be seen by visiting the official documentation page on how to create it. I will go over the important sections.

- Every label key of machine.openshift.io/cluster-api-cluster must have the value of the clusterID, which in this case is openshift4-9r24l.

- The .metadata.name is the name you want your MachineSet to have. Please note that the MachineSet name cannot be more than nine characters long, due to the way machine names are generated in vSphere.

- Every label key of machine.openshift.io/cluster-api-machineset must have the value of the MachineSet name you chose.

- Note that the userDataSecret is set to windows-user-data. This secret is generated by the WMCO for you.

- Note that the template value is set as a full path to the VM you ran sysprep on.

- The workspace section is information about your vSphere cluster OpenShift installation. This can be found in your current MachineSets by running the following: oc get machinesets -n openshift-machine-api -o yaml
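
To tie the points above together, here is a rough sketch of a helper that prints a Windows MachineSet for vSphere. The render_windows_machineset name, the argument order, and the sizing values (diskGiB, memoryMiB, numCPUs) are my own illustrative choices, and the machine.openshift.io/os-id: Windows label is included on the assumption that the WMCO uses it to recognize Windows Machines. Check every field against the official documentation and your existing MachineSets before using it.

```shell
# Sketch: print a Windows MachineSet manifest for vSphere.
# render_windows_machineset is a hypothetical helper; every argument is a
# value you supply for your own environment.
render_windows_machineset() {
  local cluster_id="$1"
  local name="$2"
  local template="$3"
  local network="$4"
  local datacenter="$5"
  local datastore="$6"
  local folder="$7"
  local pool="$8"
  local server="$9"

  # Machine names are derived from the MachineSet name, so enforce the limit.
  if [ "${#name}" -gt 9 ]; then
    echo "MachineSet name '${name}' is longer than nine characters" >&2
    return 1
  fi

  cat <<EOF
apiVersion: machine.openshift.io/v1beta1
kind: MachineSet
metadata:
  labels:
    machine.openshift.io/cluster-api-cluster: ${cluster_id}
  name: ${name}
  namespace: openshift-machine-api
spec:
  replicas: 1
  selector:
    matchLabels:
      machine.openshift.io/cluster-api-cluster: ${cluster_id}
      machine.openshift.io/cluster-api-machineset: ${name}
  template:
    metadata:
      labels:
        machine.openshift.io/cluster-api-cluster: ${cluster_id}
        machine.openshift.io/cluster-api-machine-role: worker
        machine.openshift.io/cluster-api-machine-type: worker
        machine.openshift.io/cluster-api-machineset: ${name}
        machine.openshift.io/os-id: Windows
    spec:
      metadata:
        labels:
          node-role.kubernetes.io/worker: ""
      providerSpec:
        value:
          apiVersion: vsphereprovider.openshift.io/v1beta1
          kind: VSphereMachineProviderSpec
          credentialsSecret:
            name: vsphere-cloud-credentials
          diskGiB: 128
          memoryMiB: 16384
          numCPUs: 4
          numCoresPerSocket: 1
          network:
            devices:
            - networkName: "${network}"
          snapshot: ""
          template: ${template}
          userDataSecret:
            name: windows-user-data
          workspace:
            datacenter: ${datacenter}
            datastore: ${datastore}
            folder: ${folder}
            resourcePool: ${pool}
            server: ${server}
EOF
}
```

You could redirect the output to a file, for example windows-ms.yaml, and review it before applying. The length check simply fails early instead of letting vSphere reject the generated machine names later.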

Once you’ve set up your MachineSet YAML file (I’ve named mine windows-ms.yaml), apply it to your cluster:

$ oc apply -f windows-ms.yaml

Once this YAML is applied, you can inspect the operator logs to see the WMCO create, bootstrap, and install the Windows node:

$ oc logs -f -l name=windows-machine-config-operator -n openshift-windows-machine-config-operator

You can see that this creates a MachineSet for you:

$ oc get machinesets -n openshift-machine-api

$ oc get machines -n openshift-machine-api

After a while, this Machine, winmach-8t6dd, will go from “Provisioning” to “Provisioned”:

$ oc get machine winmach-8t6dd -n openshift-machine-api
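
If you don’t want to keep re-running that command, a polling loop is one option. The sketch below assumes the Machine reports its state in .status.phase; wait_for_machine_phase and its default retry values are hypothetical. Keep in mind that a Machine can move on to a later phase, so pick the phase you wait for accordingly.

```shell
# Sketch: poll a Machine until it reports the wanted phase.
# wait_for_machine_phase is a hypothetical helper name.
wait_for_machine_phase() {
  local machine="$1"
  local want="${2:-Provisioned}"
  local tries="${3:-60}"
  local delay="${4:-30}"
  local phase=""
  local i=0

  while [ "$i" -lt "$tries" ]; do
    # Ask the Machine API for the current phase of this Machine.
    phase="$(oc get machine "$machine" -n openshift-machine-api \
      -o jsonpath='{.status.phase}')"
    if [ "$phase" = "$want" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "machine ${machine} is in phase '${phase}', wanted '${want}'" >&2
  return 1
}

# Usage (needs oc and a logged-in session):
#   wait_for_machine_phase winmach-8t6dd Provisioned
```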

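Once the Machine is provisioned, the WMCO configures it as a worker. As part of that, the WMCO does the following:

- Transfers required binaries to set up the Windows worker.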

- Remotely configures the kubelet.

- Installs and configures the hybrid overlay networking.

- Configures the CNI.

- Sets up and manages the kube-proxy process.

- Approves the CSRs so the node can join the cluster.

Soon the Windows Machine should be configured as an OpenShift worker:

$ oc get nodes -l kubernetes.io/os=windows

If you run an oc describe node winmach-8t6dd you can see more information about the Windows node. Here is a snippet that shows the System Info:

System Info: |

Please note that this host has a Taint. This is to isolate Windows workloads and not let the scheduler try and schedule Linux containers (which would fail anyway) on this host:

$ oc describe node winmach-8t6dd | grep Taint

To log in to this node, I first need to know its IP address. I can obtain the IP address of my Windows node with the following command:

$ oc get node winmach-8t6dd -o jsonpath='{.status.addresses}' | jq -r

In this output, we want the ExternalIP. You can SSH into this Windows worker as the user Administrator with the SSH key you created earlier:

$ ssh -i ${HOME}/.ssh/windows_node Administrator@192.168.1.16 powershell

Windows PowerShell |

Verify that Docker is running:

PS C:\Users\Administrator> docker ps

You can also check that the kubelet, hybrid overlay, and Docker processes are running:

PS C:\Users\Administrator> Get-Process | ?{ $_.ProcessName -match "kube|overlay|docker" }

Go ahead and exit this session by typing exit in the PowerShell session.

Scaling a Windows MachineSet

The paradigm used to interact with the Windows MachineSet is the same as the RHCOS MachineSets. This includes creating, managing, and scaling. To scale the Windows MachineSet, you’d run the following command:

$ oc scale machineset winmach --replicas=2 -n openshift-machine-api

After some time, you should see two Windows nodes in your cluster:

$ oc get nodes -l kubernetes.io/os=windows

You should see two new “winmach” machines as well:

$ oc get machines -n openshift-machine-api \ |
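
Since new Windows nodes take a while to join, you may want to wait for them in a script. Here is a minimal sketch that counts Windows nodes whose STATUS column is exactly Ready and polls until enough of them are up. Both function names and the default retry values are hypothetical.

```shell
# Sketch: count Windows nodes whose STATUS column is exactly "Ready".
count_ready_windows_nodes() {
  oc get nodes -l kubernetes.io/os=windows --no-headers \
    | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Poll until at least the wanted number of Windows nodes are Ready.
wait_for_windows_nodes() {
  local want="$1"
  local tries="${2:-60}"
  local delay="${3:-30}"
  local i=0

  while [ "$i" -lt "$tries" ]; do
    if [ "$(count_ready_windows_nodes)" -ge "$want" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage (needs oc and a logged-in session):
#   wait_for_windows_nodes 2 && echo "both Windows nodes are Ready"
```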

Deploying Sample Workload

With the Windows MachineConfig Operator and Windows worker in place, you can now deploy an application. Here is an example of the application I’m going to deploy:

apiVersion: apps/v1 |

A few things to note here:

- I have Tolerations in place so that this Deployment will be able to be scheduled on the Windows worker node.

- I am using the mcr.microsoft.com/powershell:lts-nanoserver-1909 image. The image is specific to the version of Windows you run. Please consult the compatibility matrix to determine which image is best for your installation.

- The “ContainerAdministrator” value under the securityContext section indicates which user inside the container will run the process. For more information, please see this doc.

- Lastly, note that I am using a nodeSelector of kubernetes.io/os: windows. This will place this Deployment on the Windows node since the node is labeled as such.
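
To make those notes concrete, here is a sketch of just the scheduling-related part of a pod template, printed by a small helper. It assumes the Windows node carries the taint os=Windows:NoSchedule (compare this with the Taint line on your own node), and both render_windows_pod_spec and the container name are placeholders of mine.

```shell
# Sketch: print the scheduling-related part of a pod template for a Windows worker.
# Assumption: the Windows node carries the taint os=Windows:NoSchedule.
# render_windows_pod_spec and the container name are hypothetical.
render_windows_pod_spec() {
  local image="$1"

  cat <<EOF
spec:
  tolerations:
  - key: "os"
    value: "Windows"
    effect: "NoSchedule"
  nodeSelector:
    kubernetes.io/os: windows
  containers:
  - name: windowswebserver
    image: ${image}
    imagePullPolicy: IfNotPresent
    securityContext:
      windowsOptions:
        runAsUserName: "ContainerAdministrator"
EOF
}

# Usage:
#   render_windows_pod_spec mcr.microsoft.com/powershell:lts-nanoserver-1909
```

The toleration lets the pod onto the tainted node, while the nodeSelector keeps it off Linux nodes. You need both for the placement to be reliable.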

To apply this Deployment, first you need to create a namespace. This sample application expects a namespace of windows-workloads:

$ oc new-project windows-workloads

$ oc apply -f sample-winc-app.yaml

Then you should see the Pod running on your Windows node:

$ oc get pods -o wide

Next, expose the Deployment to create a service:

$ oc expose deployment win-webserver --target-port=80 --port=8080

This should have created a service of type ClusterIP:

$ oc get svc

Let’s now create a route by exposing this service:

$ oc expose svc win-webserver

You can now visit this application by viewing the route on your web browser. To get the route, run the following command:

$ oc get route win-webserver -o jsonpath='{.spec.host}{"\n"}'

The page should display the following:

Application Logging

Getting the logging output from a Windows container works the same way as it does for a Linux container. You can use the same command. For example:

$ oc logs -f win-webserver-95584c8c-7dssq -n windows-workloads

If you need to debug further, you can even run an oc rsh into the container to start an interactive PowerShell session:

$ oc -n windows-workloads rsh win-webserver-95584c8c-7dssq pwsh

Notice that I passed pwsh since the default shell for oc rsh is /bin/sh, which is not available in a Windows container. This way you can get an interactive PowerShell session inside the container.

Application Storage

You can use the in-tree vSphere volume plug-in for storage. You must use storage outside the PV/PVC paradigm as the CSI vSphere plugin is not currently supported*.

* Note: CSI-proxy is still in alpha status. More info here.

To use the in-tree volume plug-in, first log in to an ESXi host via SSH and create the storage:

[root@esxi:~] vmkfstools -c 2G /vmfs/volumes/datastore1/myDisk.vmdk

Note that here, you need to specify the path to your datastore. In my case, my datastore is named datastore1. For more information, please see the upstream Kubernetes documentation.

You can specify the volume in your container definition in your deployment or pod. Here is an example of using this in a Pod:

apiVersion: v1 |

This is similar to the container definition inside the deployment of the application we first deployed, but I do want to call out a few things. In .spec.volumes, I am specifying the datastore and the name of the disk. I’m also specifying the fsType as ntfs. Additionally, in .spec.containers.volumeMounts, I am setting the mountPath to c:\Data.
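
As a rough illustration of those fields, the helper below prints a Pod that mounts a vSphere disk. Treat it as a sketch under assumptions: render_storage_pod is a name I made up, the toleration assumes the os=Windows:NoSchedule taint, the Start-Sleep command is only there to keep the container alive, and the volumePath format follows the upstream Kubernetes example, which leaves off the .vmdk extension.

```shell
# Sketch: print a Pod that mounts an in-tree vSphere volume on a Windows worker.
# render_storage_pod is a hypothetical helper; image, datastore, and disk are
# values you supply.
render_storage_pod() {
  local image="$1"
  local datastore="$2"
  local disk="$3"

  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: win-storage
spec:
  tolerations:
  - key: "os"
    value: "Windows"
    effect: "NoSchedule"
  nodeSelector:
    kubernetes.io/os: windows
  containers:
  - name: win-storage
    image: ${image}
    # Placeholder command that keeps the container running.
    command: ["pwsh.exe", "-Command", "Start-Sleep -Seconds 86400"]
    securityContext:
      windowsOptions:
        runAsUserName: "ContainerAdministrator"
    volumeMounts:
    - name: data
      mountPath: 'c:\Data'
  volumes:
  - name: data
    vsphereVolume:
      volumePath: "[${datastore}] ${disk}"
      fsType: ntfs
EOF
}

# Usage:
#   render_storage_pod mcr.microsoft.com/powershell:lts-nanoserver-1909 datastore1 myDisk
```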

Let’s deploy this sample pod:

$ oc apply -f sample-pod.yaml

Once the pod is up and running, you can rsh into the pod to see the storage:

$ oc rsh win-storage pwsh

Here you can now use the storage inside your pod:

PS C:\> cd c:\data

If you SSH into the Windows VM, you can see the disk by running Get-Disk in a PowerShell session. You will see that the 2G volume is mounted on the Windows VM host:

PS C:\Users\Administrator> Get-Disk

Conclusion

This post has covered getting started with deploying Windows nodes to your OpenShift cluster and deploying a sample application to verify functionality. Containers, Kubernetes, and OpenShift have almost exclusively been the domain of Linux since their inception, but many organizations have Windows-only applications which can benefit from containers, too. OpenShift 4.7 with the Windows MachineConfig Operator enables our customers to deploy Windows and Linux containers to the same cluster, simplifying development and deployment processes and consolidating administration to one platform, OpenShift.

I have just scratched the surface of what's possible here. There is a whole world of possibilities opened up with this new feature, including integrating Windows containers into your OpenShift-based CI/CD processes, autoscaling .NET applications based on CPU, memory, and custom metrics, or just containerizing that one, last, stubborn microservice.

For more information, see the official docs, OpenShift TV, and our other blog posts. I also did a stream about this on the OpenShift Ask The Admin show, and you can watch the recording here.

About the author

Christian Hernandez currently leads the Developer Experience team at Codefresh. He has experience in enterprise architecture, DevOps, tech support, advocacy, software engineering, and management. He's passionate about open source and cloud-native architecture. He is an OpenGitOps Maintainer and an Argo Project Marketing SIG member. His current focus has been on Kubernetes, DevOps, and GitOps practices.
