A bare metal cloud is an abstraction layer over pools of dedicated servers with different capabilities (processing, networking, or storage) that can be provisioned and consumed with cloud-like ease and speed. It takes the orchestration and automation of the cloud and applies them to bare metal workloads.

The benefit to end users is direct access to the hardware processing power of individual servers. They can provision workloads without the overhead of a virtualization layer while still provisioning environments with an infrastructure-as-code methodology and keeping tenants and projects separate.

This model of consuming bare metal resources is most effective in industries where virtualization overhead translates into lost productivity, or where virtualization is simply not possible. Examples include silicon manufacturers, OEMs, telcos, render farms, research institutions, financial services, and many more.

Bare Metal Cloud - Top Use Cases:

In this section we'll look at some of the top use cases we see for bare metal cloud.

Data Center-as-a-Service (DCaaS)

This use case describes a highly scalable environment that provides an abstracted way to deploy any workload. Common workloads include hypervisors (such as Red Hat Virtualization), resource-intensive databases, containerization platforms (Kubernetes, OpenShift, etc.), networking technologies, or anything else that benefits from raw bare metal performance. Many of the administrative tasks involved in racking and provisioning servers are consistent and repetitive, which makes them prime candidates for automation and orchestration.

Hardware Benchmarking Cloud

To stay competitive and release products faster, hardware vendors may look for ways to automate validation of the components they manufacture. Components can include a processor, logic board, GPU, network adapter, or even an entire server.

Validating hardware components requires running multiple tests and benchmarks across multiple operating systems, test suites, integration points, and product settings. Because every combination of these dimensions is a separate test case, the resulting test matrix quickly grows beyond what manual quality assurance processes can handle. Bare metal clouds with an automation/orchestration layer can help these manufacturers streamline the process by applying CI/CD methodologies to product validation.
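To make that growth concrete, here is a small Python sketch that enumerates a hypothetical test matrix. The dimensions, their values, and the per-run duration are invented for illustration; the point is that the number of runs is the product of the dimension sizes, so adding one more OS or setting multiplies the total.

```python
from itertools import product

# Hypothetical test dimensions; the values are illustrative placeholders only.
operating_systems = ["RHEL 7", "RHEL 8", "Windows Server 2016", "Ubuntu 18.04"]
test_suites = ["storage", "network", "compute", "power"]
integration_points = ["BIOS", "BMC", "driver"]
settings = ["default", "performance", "power-saving"]

# Every combination of the dimensions is a separate validation run.
matrix = list(product(operating_systems, test_suites, integration_points, settings))
print(len(matrix))  # 144 (4 * 4 * 3 * 3)

HOURS_PER_RUN = 2  # hypothetical duration of one validation run
print(len(matrix) * HOURS_PER_RUN)  # 288
```

Adding a fifth operating system alone would raise the count from 144 to 180 runs, which is the kind of growth that makes automated, bare metal CI/CD pipelines attractive.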

High Performance Clouds (render farms, GPU, AI, ML, research)

This use case covers workloads that require specialized hardware such as GPUs, as well as large research environments where virtualization overhead slows down number crunching. Industries that have been taking advantage of high performance clouds include entertainment (movie studios), pharmaceuticals and research, blockchain, education, law enforcement, telcos, and others.

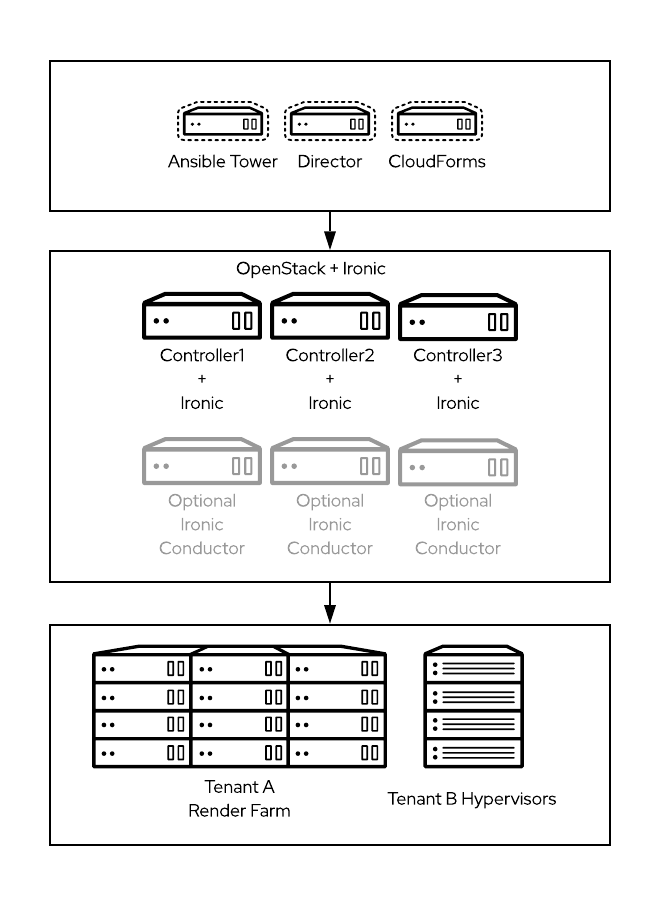

Here is a quick reference architecture to help you get started:

In the proposed reference architecture, the pool of heterogeneous bare metal nodes is managed by a highly available OpenStack control plane with Ironic and, optionally, dedicated Ironic conductors for better scalability. The top layer of the architecture manages the lifecycle of the cloud (director), workload orchestration (Ansible Tower), and compliance policies, chargeback/showback, and the service catalog (Red Hat CloudForms).

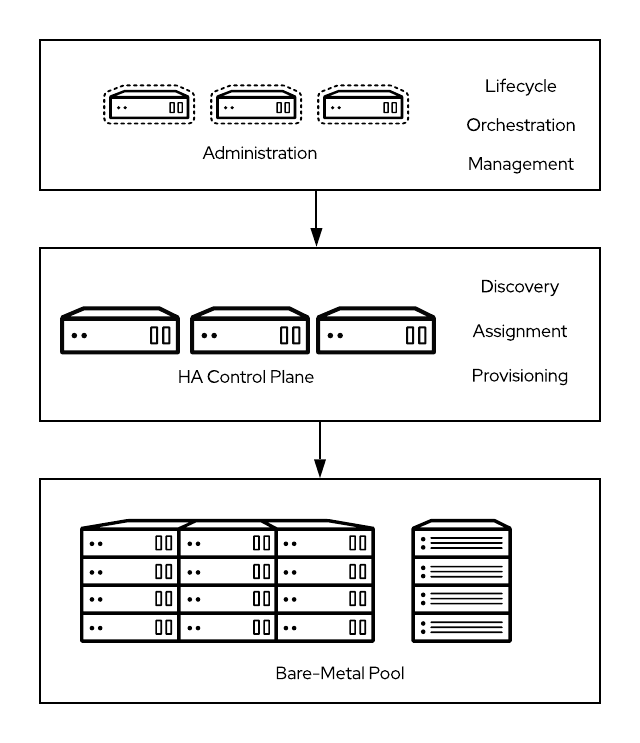

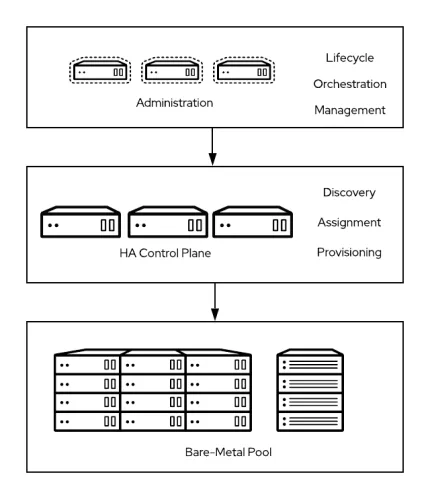

Function Diagram

Red Hat Architecture

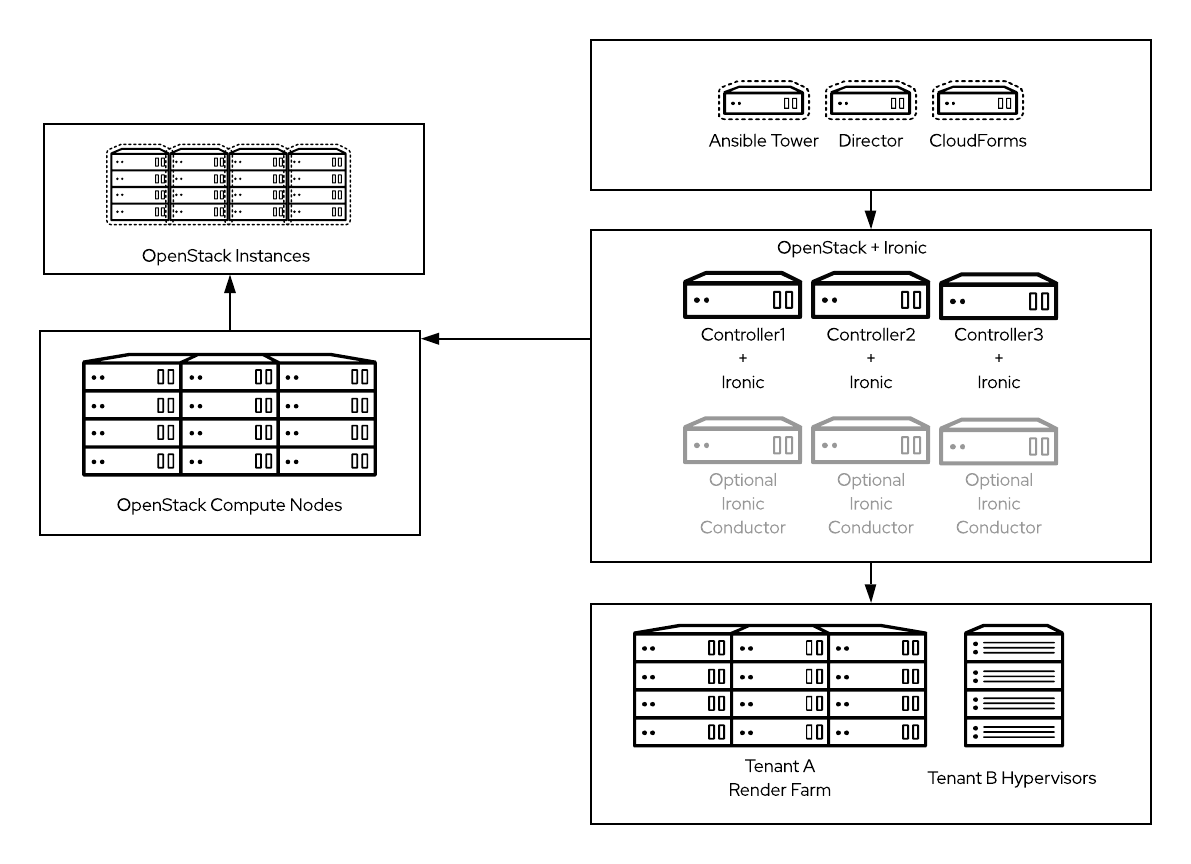

Optional Integration with Standard OpenStack

System Requirements and Execution:

In this section we'll look at some of the system requirements and how we might make them work.

Zero-touch auto discovery & auto deployment

In this example use case, we have deployed the architecture depicted above:

Ironic runs in the overcloud and is configured with a provisioning network that is connected to all of the bare metal nodes. The nodes in the bare metal pool can come from different hardware vendors and can have different network configurations or different GPUs installed. The one configuration that must remain the same is that every node has a NIC on the Ironic provisioning network.

The goal is to design a ruleset that automatically identifies each node based on its hardware specifications and then puts that node into a serviceable state, performing the function it was purchased for, simply by powering the server on.

Let’s assume that as an organization we know:

1. If the server manufacturer is Dell, the admin user and password are delladmin/dellpassword (just an example!).
2. If the server manufacturer is HP, the admin user and password are hpadmin/hppassword (again, an example).
3. If a server is a Dell server that has at least 8 SSD drives, we know that server is supposed to be a Ceph node for our enterprise OpenStack environment (which is a completely separate OpenStack deployment from this purpose-built solution).
4. If a server is a Dell server and not a Ceph server, we know that server is a compute node resource for our enterprise OpenStack environment.
5. If the server is an HP server, we know this server is to be provisioned as a GPU resource node in our render farm for rendering video.
6. All Supermicro servers will have capabilities added to them so that tenants can deploy whatever bare metal workload they like on them. The CPU model, manufacturer, and BIOS version are stored as capabilities and can be associated with specific flavors for specific use cases.
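To illustrate how stored capabilities tie nodes to flavors: Ironic keeps a node's capabilities as a comma-separated `key:value` string in its `capabilities` property, and Nova flavors can reference them through `capabilities:<key>` extra specs. The Python sketch below is a simplified stand-in for Nova's matching that handles plain equality only. The function names, the node's capability string, and the flavor are hypothetical.

```python
from __future__ import annotations


def parse_capabilities(caps: str) -> dict[str, str]:
    """Parse an Ironic-style capabilities string ("k1:v1,k2:v2") into a dict."""
    result: dict[str, str] = {}
    for item in caps.split(","):
        if not item.strip():
            continue  # tolerate empty entries such as a trailing comma
        key, _, value = item.partition(":")
        result[key.strip()] = value.strip()
    return result


def flavor_matches(node_caps: str, extra_specs: dict[str, str]) -> bool:
    """Return True if every 'capabilities:<key>' extra spec equals the node's value.

    Simplified on purpose: Nova's ComputeCapabilitiesFilter also understands
    operators such as '<in>' and 's==', which this sketch does not handle.
    """
    caps = parse_capabilities(node_caps)
    for spec_key, wanted in extra_specs.items():
        scope, _, key = spec_key.partition(":")
        if scope != "capabilities":
            continue  # other extra specs are out of scope for this sketch
        if caps.get(key) != wanted:
            return False
    return True


# Hypothetical Supermicro node after auto-discovery, and a hypothetical flavor.
node_caps = "cpu_model:Intel Xeon Gold 6130,manufacturer:Supermicro,bios_version:3.1,boot_mode:uefi"
uefi_supermicro = {"capabilities:manufacturer": "Supermicro", "capabilities:boot_mode": "uefi"}

print(flavor_matches(node_caps, uefi_supermicro))  # True
print(flavor_matches(node_caps, {"capabilities:manufacturer": "Dell"}))  # False
```

In a real deployment the scheduler performs this matching; the sketch only shows why consistent capability names and values matter when designing flavors.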

Using a “zero-touch provisioning” process, we could automate all of this to the point where the only thing needed is to power on the nodes. To deliver such a solution, the up-front configuration would require the following:

The servers would be racked/stacked and piped to the Ironic PXE network for the undercloud.

Ironic would be configured with introspection rules (handled by its inspector service) to auto-configure the IPMI credentials (numbers 1 and 2, above):

```json
[
  {
    "description": "Set IPMI credentials for Dell",
    "conditions": [
      {"op": "eq", "field": "data://auto_discovered", "value": true},
      {"op": "eq", "field": "data://inventory.system_vendor.manufacturer", "value": "Dell"}
    ],
    "actions": [
      {"action": "set-attribute", "path": "driver_info/ipmi_username", "value": "delladmin"},
      {"action": "set-attribute", "path": "driver_info/ipmi_password", "value": "dellpassword"},
      {"action": "set-attribute", "path": "driver_info/ipmi_address", "value": "{data[inventory][bmc_address]}"}
    ]
  },
  {
    "description": "Set IPMI credentials for HP",
    "conditions": [
      {"op": "eq", "field": "data://auto_discovered", "value": true},
      {"op": "eq", "field": "data://inventory.system_vendor.manufacturer", "value": "HP"}
    ],
    "actions": [
      {"action": "set-attribute", "path": "driver_info/ipmi_username", "value": "hpadmin"},
      {"action": "set-attribute", "path": "driver_info/ipmi_password", "value": "hppassword"},
      {"action": "set-attribute", "path": "driver_info/ipmi_address", "value": "{data[inventory][bmc_address]}"}
    ]
  }
]
```
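Because the per-vendor credential rules differ only in the vendor name and credentials, they can be generated from a single table instead of being maintained by hand. The Python sketch below assumes a hypothetical `VENDOR_CREDENTIALS` mapping (in practice the secrets would come from a vault, not source code) and produces JSON in the same shape as the rules above. The resulting file could then be loaded with `openstack baremetal introspection rule import <file>`.

```python
import json

# Hypothetical vendor -> (username, password) table mirroring the example credentials above.
VENDOR_CREDENTIALS = {
    "Dell": ("delladmin", "dellpassword"),
    "HP": ("hpadmin", "hppassword"),
}


def ipmi_rule(vendor: str, username: str, password: str) -> dict:
    """Build one introspection rule that sets IPMI credentials for a vendor."""
    return {
        "description": f"Set IPMI credentials for {vendor}",
        "conditions": [
            {"op": "eq", "field": "data://auto_discovered", "value": True},
            {"op": "eq", "field": "data://inventory.system_vendor.manufacturer", "value": vendor},
        ],
        "actions": [
            {"action": "set-attribute", "path": "driver_info/ipmi_username", "value": username},
            {"action": "set-attribute", "path": "driver_info/ipmi_password", "value": password},
            # Plain string, not an f-string: the braces are filled in later from introspection data.
            {"action": "set-attribute", "path": "driver_info/ipmi_address",
             "value": "{data[inventory][bmc_address]}"},
        ],
    }


rules = [ipmi_rule(vendor, user, pw) for vendor, (user, pw) in VENDOR_CREDENTIALS.items()]
rules_json = json.dumps(rules, indent=2)
print(rules_json)
```

Adding a new vendor then becomes a one-line change to the table.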

Additionally, we would have another rule in place to store capabilities for all servers so that they can be associated with flavors in our overcloud (numbers 5 and 6, above):

```json
[
  {
    "description": "set basic capabilities, names, ramdisks and bootmode",
    "conditions": [
      {"op": "eq", "field": "data://auto_discovered", "value": true}
    ],
    "actions": [
      {"action": "set-capability", "name": "cpu_model", "value": "{data[inventory][cpu][model_name]}"},
      {"action": "set-capability", "name": "manufacturer", "value": "{data[inventory][system_vendor][manufacturer]}"},
      {"action": "set-capability", "name": "bios_version", "value": "{data[extra][firmware][bios][version]}"},
      {"action": "set-capability", "name": "boot_mode", "value": "uefi"},
      {"action": "set-capability", "name": "boot_option", "value": "local"},
      {"action": "set-attribute", "path": "name", "value": "{data[extra][system][motherboard][vendor]}-{data[extra][system][motherboard][name]:.9}-{data[inventory][bmc_address]}"},
      {"action": "set-attribute", "path": "driver_info/deploy_kernel", "value": "4ea0514e-681b-4582-99ad-77adfc1a47d1"},
      {"action": "set-attribute", "path": "driver_info/deploy_ramdisk", "value": "5c4b7077-b41a-4c73-84f5-95490dd33e1f"}
    ]
  }
]
```
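The `{data[...]}` placeholders in these rules are filled in from the introspection data. As we understand it, ironic-inspector renders them with Python string formatting, passing the introspection data as `data`. That means the `:.9` in the node name template is an ordinary format spec that truncates the motherboard name to nine characters. The sketch below reproduces that rendering locally with made-up introspection data; the `extra` keys assume extra hardware data collection is enabled.

```python
# Hypothetical introspection data, trimmed to the fields the rule references.
data = {
    "inventory": {
        "bmc_address": "10.0.0.15",
        "cpu": {"model_name": "Intel(R) Xeon(R) Gold 6130 CPU @ 2.10GHz"},
        "system_vendor": {"manufacturer": "Supermicro"},
    },
    "extra": {
        "system": {"motherboard": {"vendor": "Supermicro", "name": "X11DPi-NT-Dual"}},
        "firmware": {"bios": {"version": "3.1"}},
    },
}

name_template = (
    "{data[extra][system][motherboard][vendor]}-"
    "{data[extra][system][motherboard][name]:.9}-"  # ":.9" keeps at most 9 characters
    "{data[inventory][bmc_address]}"
)

node_name = name_template.format(data=data)
cpu_model = "{data[inventory][cpu][model_name]}".format(data=data)

print(node_name)  # Supermicro-X11DPi-NT-10.0.0.15
print(cpu_model)  # Intel(R) Xeon(R) Gold 6130 CPU @ 2.10GHz
```

Testing templates this way before importing the rules catches typos in key paths, which would otherwise only surface when a real node is discovered.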

Finally, let’s set up a scheduled Ansible Tower job in the administrative cluster to monitor the OpenStack Ironic database for enrolled nodes and provision them based on their capabilities.

The full process of provisioning would look like this:

1. The node is powered on and sends a DHCP request [our “one-touch”].
2. Ironic in the overcloud PXE boots the node.
3. The inspector gathers all of the data from the node and, based on the ruleset configured above, automatically sets the credentials for the node, giving Ironic power control.
4. For all of the nodes, OpenStack adds capabilities to the Ironic entry; these are associated with specific flavors that tenants use in the overcloud to deploy specific workloads.
5. A scheduled job executing from Tower in the administrative cluster looks for bare metal nodes in an “enroll” state and takes action when one is found:
   - Check the server vendor. If it is HP, deploy the render farm image for the render farm tenant to the node, using the render farm flavor, which relies on the capabilities set during auto-discovery.
   - Check the server vendor. If it is Dell:
     - Query the introspection data to determine switch/port information from LLDP data.
     - Query the introspection data to determine the disk types and count of the server.
     - Move the server from Ironic in the overcloud to Ironic in director.
     - Scale the overcloud out based on the disk configuration (Ceph or Compute).
   - Ignore all other vendors.

An architecture like the one described above can be configured and scaled to automate many aspects of the datacenter.
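The decision logic of the scheduled Tower job can be sketched in Python. This is a minimal sketch under several assumptions. Nodes and their introspection inventories are passed in as plain dicts; in practice they might be fetched from Ironic and ironic-inspector, for example with `openstack baremetal introspection data save`. The action labels are hypothetical. The LLDP switch/port lookup, the move to director, and the actual deployments are left out. SSDs are counted as disks whose inventory entry reports `rotational: false`.

```python
from __future__ import annotations

from dataclasses import dataclass

# From the organizational rules above: a Dell server with at least 8 SSDs is a Ceph node.
CEPH_MIN_SSDS = 8


@dataclass
class Decision:
    node: str
    action: str  # hypothetical action label consumed by later playbooks


def count_ssds(inventory: dict) -> int:
    """Count disks that the introspection inventory reports as non-rotational."""
    return sum(1 for disk in inventory.get("disks", []) if disk.get("rotational") is False)


def decide(node_name: str, inventory: dict) -> Decision:
    vendor = inventory.get("system_vendor", {}).get("manufacturer", "")
    # DMI vendor strings often carry suffixes (e.g. "Dell Inc."), so match on prefix.
    if vendor.startswith("HP"):
        return Decision(node_name, "deploy-render-farm")
    if vendor.startswith("Dell"):
        role = "ceph" if count_ssds(inventory) >= CEPH_MIN_SSDS else "compute"
        return Decision(node_name, f"move-to-director-and-scale-{role}")
    return Decision(node_name, "ignore")  # all other vendors are ignored


def plan(nodes: list[dict]) -> list[Decision]:
    """Only act on nodes that are still in the 'enroll' provision state."""
    return [decide(n["name"], n["inventory"]) for n in nodes if n["provision_state"] == "enroll"]


nodes = [
    {"name": "node-a", "provision_state": "enroll",
     "inventory": {"system_vendor": {"manufacturer": "Dell Inc."},
                   "disks": [{"name": f"/dev/sd{c}", "rotational": False} for c in "abcdefgh"]}},
    {"name": "node-b", "provision_state": "enroll",
     "inventory": {"system_vendor": {"manufacturer": "HP"}, "disks": []}},
    {"name": "node-c", "provision_state": "available",
     "inventory": {"system_vendor": {"manufacturer": "Dell Inc."}, "disks": []}},
]

for d in plan(nodes):
    print(d.node, d.action)
# node-a move-to-director-and-scale-ceph
# node-b deploy-render-farm
```

Keeping the decision as a pure function of the inventory makes it easy to test before wiring it into the scheduled job.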

** The above architecture could use director as the “zero-touch provisioning” node; however, this is not recommended because director lacks HA and scaling capabilities.

Multi-tenancy

Multi-tenancy in the bare metal cloud allows individual nodes to be separated by VLAN for different projects. For example, in the hardware benchmarking use case, one QA team could test a distributed NoSQL database cluster on VLAN X, while a hardware vendor partner's QA team validates the functionality of their operating system on another set of nodes isolated on VLAN Y.

In the same fashion, in the high performance use case, a movie studio might assign a different pool of nodes to each motion picture in production at the same time. This model also allows unused nodes to be reclaimed or dynamically reassigned to another project.

In this model, multi-tenancy is provided by the networking-ansible integration, which uses the ML2 networking-ansible driver based on Ansible Networking. Together with OpenStack Neutron, networking-ansible can control the switchport VLANs of individual nodes. This functionality is generally available starting in Red Hat OpenStack Platform 13.
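The per-node VLAN bookkeeping behind this model can be illustrated with a small Python sketch. It is a toy model only, not the API of networking-ansible or Neutron. The `SwitchportVlanMap` class, the VLAN IDs, and the idea of an idle VLAN for unassigned nodes are all hypothetical. It shows a node being assigned to a project's VLAN, reclaimed, and reassigned to another project.

```python
from __future__ import annotations


class SwitchportVlanMap:
    """Toy model of per-node switchport VLAN assignment for tenant isolation.

    Hypothetical: this only tracks which VLAN each node's switchport should
    carry; in the real integration, Neutron and networking-ansible push the
    change to the physical switch.
    """

    def __init__(self, project_vlans: dict[str, int], idle_vlan: int) -> None:
        self.project_vlans = project_vlans
        self.idle_vlan = idle_vlan
        self.port_vlan: dict[str, int] = {}

    def assign(self, node: str, project: str) -> int:
        vlan = self.project_vlans[project]  # raises KeyError for an unknown project
        self.port_vlan[node] = vlan
        return vlan

    def reclaim(self, node: str) -> int:
        # Returning a node to the pool moves its port back to the idle VLAN.
        self.port_vlan[node] = self.idle_vlan
        return self.idle_vlan

    def nodes_in(self, project: str) -> list[str]:
        vlan = self.project_vlans[project]
        return sorted(n for n, v in self.port_vlan.items() if v == vlan)


vlans = SwitchportVlanMap({"qa-nosql": 100, "partner-os": 200}, idle_vlan=10)
vlans.assign("node-1", "qa-nosql")
vlans.assign("node-2", "partner-os")
vlans.reclaim("node-1")
vlans.assign("node-1", "partner-os")  # dynamically reassigned to another project
print(vlans.nodes_in("partner-os"))  # ['node-1', 'node-2']
```

Because isolation is enforced at the switchport, a node carries only one project's VLAN at a time, which is what allows nodes to move between projects safely.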

Image Building

OpenStack Ironic generally uses the same image lifecycle and methodologies for bare metal instances as it does for virtual machine instances. Both Linux-based and Windows-based operating system images are supported. There are two notable differences the cloud operator needs to take into consideration when building images for bare metal:

- Hardware drivers (NICs, storage, video)
- Boot mode (UEFI, Legacy)

Today, virtual machines don’t require special drivers, since the hardware is abstracted by virtio devices (KVM), and the only supported boot mode for VMs is Legacy.

Images can be built in a couple of ways:

- Manually: designate a single bare metal node to act as a libvirt-based hypervisor (with or without UEFI support, depending on the requirements), install the required operating system with the required hardware drivers in a “shell VM”, and then capture and export the image as a qcow2 or raw disk file.
- Automatically: use Red Hat provided or third-party image building tools, for example diskimage-builder, Packer, or windows-openstack-imaging-tools.

Summary and Conclusion

Leveraging OpenStack to provide bare metal capabilities within the datacenter can improve efficiency and time-to-delivery in many areas of the enterprise. Understanding where and when to use it is critical for a successful implementation. As a recap, we have covered several use cases where bare metal provisioning makes sense:

- Datacenter as a Service
- Hardware Benchmarking
- High performance workloads

OpenStack using Ironic enables the creation of a bare metal cloud to provision and consume these resources with cloud-like ease and speed. If your enterprise is looking to perform this level of automation within your datacenter or would like to discuss other areas where you think bare metal provisioning can improve your business workflow, please reach out as we would love to discuss these opportunities with you!
