Differences between LoRA and QLoRA

Low-rank adaptation (LoRA) and quantized low-rank adaptation (QLoRA) are techniques for training AI models. More specifically, both are forms of parameter-efficient fine-tuning (PEFT), an approach that has become popular because it uses fewer resources than other ways of training large language models (LLMs).

Both LoRA and QLoRA make it easier to fine-tune LLMs, but they differ in how they handle the model and how they use memory to reach the desired result.

How LoRA and QLoRA differ from traditional fine-tuning

LLMs are complex models made up of a very large number of parameters, in some cases billions. The parameters hold what the model learned during training. More parameters generally mean a greater capacity to store information and, broadly speaking, a more capable model.

Traditional fine-tuning updates every one of those parameters in order to adapt the LLM. That can mean adjusting billions of parameters, which takes a great deal of time and money.

Updating every parameter can also lead to "overfitting," a term for an AI model that fits its training data too closely: it learns noise or unhelpful detail along with the useful patterns, and then performs worse on anything it was not trained on.

Imagine a teacher and a class. The students have studied math all year. Right before the exam, the teacher stresses the importance of long division. During the exam, many students are so preoccupied with long division that they neglect the key equations needed for other, equally important questions. This is what overfitting can do to an LLM during traditional fine-tuning.

Beyond the overfitting problem, traditional fine-tuning is also expensive in terms of resources.

QLoRA and LoRA are fine-tuning techniques that make this process more efficient. Instead of training all of the parameters, they work with the model's weight matrices and train only a small set of additional parameters needed to learn the new information.

To return to our metaphor, these techniques can introduce new topics efficiently without pulling the model's attention away from the other topics on the exam.


How LoRA works

LoRA trains the AI model on new data using a separate, much smaller set of parameters.

Rather than training the whole model and all of its pretrained weights, LoRA sets those weights aside, or freezes them, and trains a smaller set of parameters in their place. These are called low-rank adaptation matrices, which is where the name LoRA comes from.

They are called "low-rank" because each one is built from two thin matrices whose shared inner dimension, the rank, is small, so together they hold far fewer weights than the matrix they adapt. Once trained, they are combined with the original parameters and act as a single matrix, which makes fine-tuning far more efficient.
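
To give a sense of the savings, here is a minimal sketch that counts weights. It assumes the usual formulation in which a d x k weight matrix is adapted by two factors, B (d x r) and A (r x k). The layer size and rank below are hypothetical values chosen only for illustration.

```python
def full_params(d: int, k: int) -> int:
    """Number of weights in a dense d x k matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Number of trainable weights in the factors B (d x r) and A (r x k)."""
    return r * (d + k)


# Hypothetical layer size and rank, not taken from any specific model.
d, k, r = 4096, 4096, 8

full = full_params(d, k)
lora = lora_params(d, k, r)

print(f"full matrix:     {full:,} weights")
print(f"LoRA factors:    {lora:,} weights")
print(f"trainable share: {lora / full:.2%}")
```

With these assumed numbers, the two factors hold well under 1% of the weights in the matrix they adapt.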

To keep things simple, think of LoRA as a single row or column that is added to the matrix.

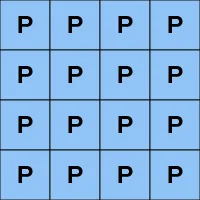

Imagine that this is the full parameter matrix that needs to be trained:

Training every weight in the matrix takes a lot of time, money, and memory. And when you finish, you may still need to train the model further, having already spent a large amount of resources along the way.

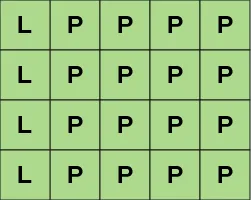

This column represents a low-rank weight:

Once the new low-rank parameters have been trained, the single row or column is added to the original matrix. This applies the training to the full set of parameters.

The AI model can now run with the newly fine-tuned weights.

Training the low-rank weights takes less time, less memory, and less money. Once training is done, what was learned can be applied within the larger matrix without taking up any additional memory.
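
The merge step can be sketched in a few lines of plain Python. This is an illustration under assumptions, not a reference implementation: the matrices are tiny, the values are made up, and the helper functions `matmul` and `add` are hypothetical names introduced here. It shows that folding the trained factors into the frozen matrix gives the same output as keeping them separate, while the merged matrix keeps its original shape.

```python
def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]


def add(X, Y):
    """Add two matrices of the same shape element by element."""
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]


# Frozen pretrained weights (3 x 3); values are made up for the example.
W = [[1.0, 0.0, 2.0],
     [0.0, 1.0, 0.0],
     [3.0, 0.0, 1.0]]

# Rank-1 adapter: B is 3 x 1 and A is 1 x 3, so B @ A is 3 x 3.
B = [[0.5], [0.0], [-1.0]]
A = [[1.0, 2.0, 0.0]]

x = [[1.0], [1.0], [1.0]]  # input as a column vector

# During training: base output plus the adapter's contribution.
y_separate = add(matmul(W, x), matmul(B, matmul(A, x)))

# After training: fold the adapter into the original matrix once.
W_merged = add(W, matmul(B, A))
y_merged = matmul(W_merged, x)

print(y_separate == y_merged)            # same result either way
print(len(W_merged), len(W_merged[0]))   # merged matrix is still 3 x 3
```

Because the merged matrix has the same dimensions as the original, running the model afterwards needs no more memory than before fine-tuning.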

Advantages of LoRA

LoRA makes it possible to fine-tune a model in less time, with fewer resources, and with less effort. Its advantages include:

- fewer parameters to train;

- lower risk of overfitting;

- faster training;

- lower memory use;

- flexible adjustments (training can be applied to some parts of the model and not to others).
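
The last point, applying training to some parts of the model and not others, can be illustrated with a small sketch. Everything here is hypothetical: the layer names, their shapes, the rank, and the choice of which layers to adapt are assumptions made for the example and do not describe any real model.

```python
# Hypothetical layers as name -> (rows, cols); not taken from any real model.
layers = {
    "attention.query": (1024, 1024),
    "attention.key": (1024, 1024),
    "attention.value": (1024, 1024),
    "mlp.up": (4096, 1024),
    "mlp.down": (1024, 4096),
}

# Assumed choice: adapt only two of the five layers.
targets = {"attention.query", "attention.value"}
rank = 8


def trainable_weights(layers, targets, rank):
    """Count adapter weights, rank * (rows + cols), for targeted layers only."""
    return sum(
        rank * (rows + cols)
        for name, (rows, cols) in layers.items()
        if name in targets
    )


total = sum(rows * cols for rows, cols in layers.values())
trainable = trainable_weights(layers, targets, rank)

print(f"frozen weights:    {total:,}")
print(f"trainable weights: {trainable:,}")
```

Layers left out of `targets` stay frozen and contribute no trainable weights at all.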

How QLoRA works

QLoRA is an extension of LoRA. It works in a similar way, with one additional advantage: it uses less memory.

The "Q" in QLoRA stands for "quantized." In this context, quantizing a model means compressing very precise parameters (numbers with many decimal places, which take up a lot of memory) into smaller, coarser ones (fewer decimal places and less memory).

The goal is to fine-tune a portion of the model using the memory of a single graphics processing unit (GPU). To do that, QLoRA uses 4-bit NormalFloat (NF4), a data type introduced with QLoRA, to quantize the pretrained weight matrices so that they take up even less memory than they do under LoRA. Compressing each parameter into a smaller, more manageable value can cut the memory needed by up to a factor of four.
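
The factor of four follows from simple arithmetic if the weights start out as 16-bit values. The sketch below assumes a hypothetical model with 7 billion parameters and counts only the memory for the weights themselves. It ignores quantization constants, adapter weights, activations, and optimizer state, so real figures will differ.

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Memory needed to store the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


num_params = 7_000_000_000  # hypothetical model size

fp16_gb = weight_memory_gb(num_params, 16)  # 16-bit storage
nf4_gb = weight_memory_gb(num_params, 4)    # 4-bit storage

print(f"16-bit weights: {fp16_gb:.1f} GB")
print(f"4-bit weights:  {nf4_gb:.1f} GB")
print(f"reduction:      {fp16_gb / nf4_gb:.0f}x")
```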

Once the model has been quantized, its smaller size makes it much easier to fine-tune.

Imagine that these are the parameters of the original model:

Of the twelve parameters, three are green, six are blue, two are yellow, and one is pink. Quantizing the model compresses it into a representation of the original.

After quantization, the sample consists of one green parameter, two blue, and one yellow.

The process carries a risk: some data may be so small that it is lost in compression. The pink parameter, for example, is missing because it made up too small a share of the data to carry over into the compressed version.
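
A small numeric example shows the same effect. Note the substitution: this sketch uses plain uniform quantization with a single shared scale, not the NF4 data type that QLoRA uses, because the simpler scheme is easier to follow. The function names and weight values are hypothetical.

```python
def quantize_absmax(values, bits=4):
    """Map floats to signed integer codes using one shared scale."""
    qmax = 2 ** (bits - 1) - 1                    # 7 for 4 bits
    scale = max(abs(v) for v in values) / qmax    # largest value maps to qmax
    codes = [round(v / scale) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Turn integer codes back into approximate floats."""
    return [c * scale for c in codes]


# Made-up weights; the last one is much smaller than the rest.
weights = [0.70, -0.33, 0.21, 0.02]

codes, scale = quantize_absmax(weights)
restored = dequantize(codes, scale)

print(codes)         # [7, -3, 2, 0]
print(restored[-1])  # 0.0, the smallest weight did not survive
```

The smallest weight rounds to the code 0 and comes back as exactly zero, much like the pink parameter that disappears from the compressed sample.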

In this simplified picture, twelve parameters are reduced to four. In a real model the number of parameters does not change. Instead, each of the billions of parameters is stored with fewer bits, which is what makes the model small enough to fine-tune on a single GPU.

Ideally, any data that is lost can be recovered from the original parameters when the newly trained matrix is added back to the original matrices, without the model becoming any less accurate. That outcome is not guaranteed, however.

The technique combines high-performance computing with memory-efficient storage. As a result, the model can still deliver a high degree of accuracy with limited resources.
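
One way to read this combination: the frozen weights are kept in a compact form, and they are expanded to higher precision only at the moment they are used, while the small adapter matrices stay in higher precision throughout. The sketch below illustrates that idea under loud assumptions. It uses uniform integer codes with one scale instead of NF4, ordinary Python floats instead of GPU data types, and made-up values. The `forward` function is a hypothetical name.

```python
# Frozen base weights, stored compactly as small integer codes plus one scale.
codes = [[7, -3],
         [2, 0]]
scale = 0.1

# Adapter factors kept as ordinary floats; only these would be trained.
B = [[0.5], [-0.25]]   # 2 x 1
A = [[1.0, 2.0]]       # 1 x 2


def forward(x):
    """Compute dequant(W) @ x + B @ (A @ x) for a length-2 input vector."""
    # Expand the stored codes to floats only when they are needed.
    W = [[c * scale for c in row] for row in codes]
    base = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    # Adapter path: project down to the rank, then back up.
    ax = [sum(a * xi for a, xi in zip(row, x)) for row in A]
    delta = [sum(b * z for b, z in zip(row, ax)) for row in B]
    return [b + d for b, d in zip(base, delta)]


y = forward([1.0, 1.0])
print(y)
```

The base weights never change in this sketch. Only `A` and `B` would receive updates during fine-tuning.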

Advantages of QLoRA

QLoRA focuses on keeping memory requirements low. Like LoRA, it prioritizes efficiency, which makes fine-tuning faster and easier. Its advantages include:

- lower memory requirements than LoRA;

- help in reducing the risk of overfitting;

- high levels of accuracy;

- fast, lightweight fine-tuning.

How LoRA and QLoRA differ

LoRA is an efficient fine-tuning technique that can be used on its own. QLoRA is an extension of it that adds further techniques to improve on LoRA's efficiency and that also needs far less memory.

If you are unsure which technique fits your needs, consider how much memory and what other resources you have available. If memory is tight, for example, QLoRA will be easier to run.

How Red Hat can help

Red Hat® AI is a portfolio of products and services that can help your business at any stage of its AI journey, whether you are just getting started or ready to scale across the hybrid cloud.

With small, purpose-built models and straightforward customization techniques, it offers the flexibility to develop and deploy in any environment.