With generative AI capturing the imagination of users worldwide, enterprises are looking at infusing AI into their products. IBM watsonx is an enterprise-focused artificial intelligence (AI) and data platform.

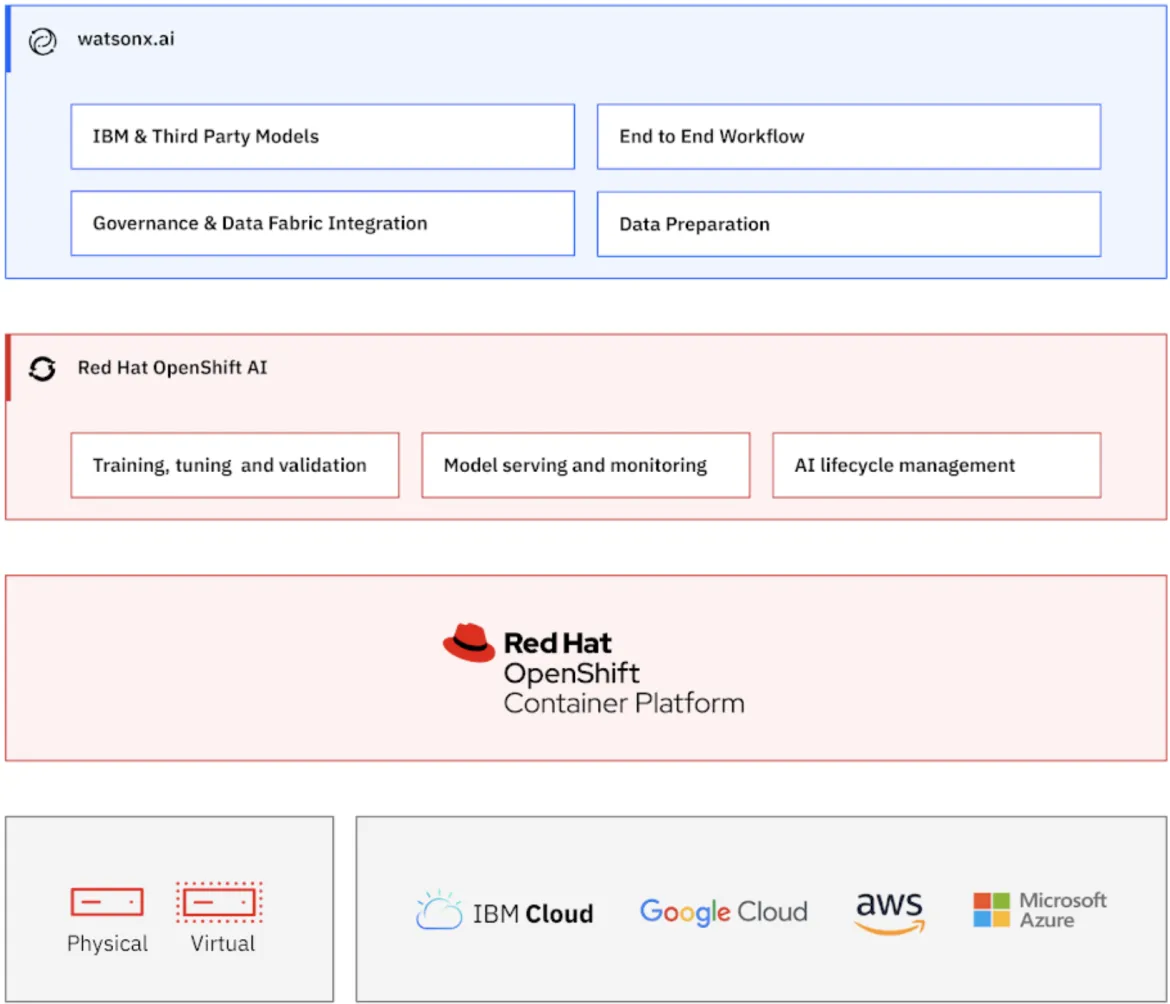

The watsonx platform enables customers to scale and accelerate the adoption and impact of AI. It combines a full technology stack for AI, trusted data and governance with flexibility across cloud and on-premises environments, because it is built on top of Red Hat OpenShift. The watsonx platform has three core components:

- watsonx.ai: An enterprise-grade AI studio that helps AI builders innovate with all the APIs, tools, models and runtimes to simplify and scale the development and deployment of AI applications

- watsonx.data: A fit-for-purpose data store that is open, hybrid and governed, built on an open lakehouse architecture to scale analytics and AI workloads with all your data

- watsonx.governance: An end-to-end toolkit for AI governance across the entire AI lifecycle. It accelerates responsible, transparent, and explainable AI for generative AI and ML models and workflows, and supports organizational accountability for risk and investment decisions

Benefits of OpenShift AI

OpenShift AI provides a unified platform for data scientists, application developers and IT administrators for training, serving, monitoring and managing the lifecycle of AI/ML models and applications. It simplifies the iterative process of integrating models into software development processes, production rollout, monitoring, retraining and redeployment.

OpenShift AI leverages the hybrid cloud capabilities of OpenShift, allowing it to be deployed in a hybrid configuration. This means you can deploy your AI platform closer to your data and overcome the struggles of data gravity. Does personally identifiable information (PII) force your organization to store some data on-premises? OpenShift AI can be deployed on-premises in an air-gapped or disconnected configuration, allowing you to build your models where needed while keeping your data secure. Once training is complete, your model can be served on-premises, in the cloud, or at the edge.

watsonx.ai leverages the AI accelerator enhancements in OpenShift AI, which build on Linux kernel solutions developed in conjunction with the top AI accelerator companies. Thanks to work by Nvidia, AMD, and Intel, a watsonx.ai workload can make efficient use of the underlying accelerator hardware.

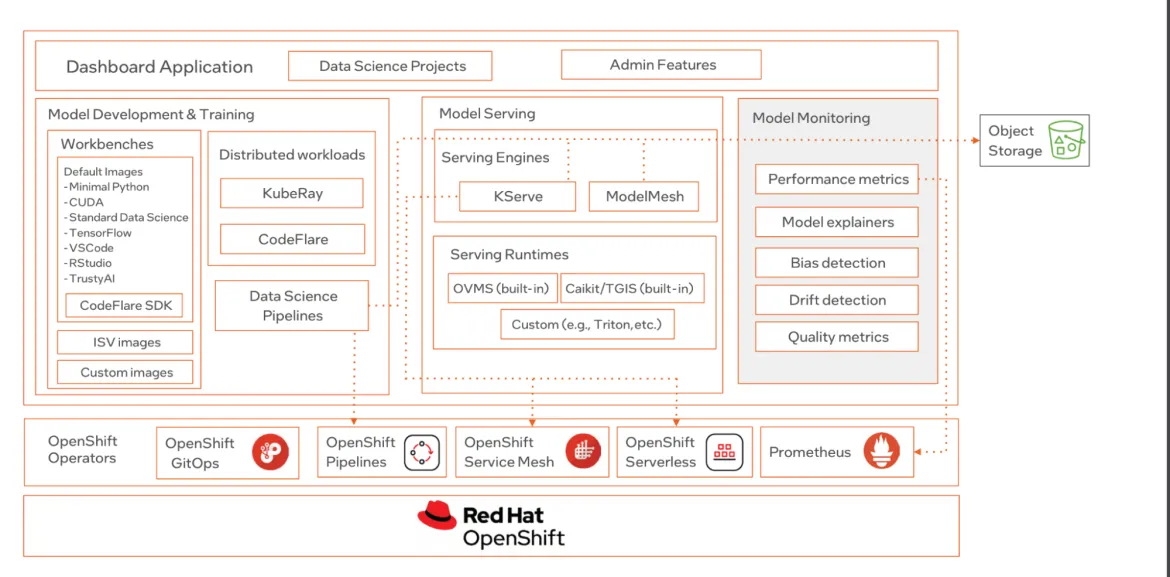

The graphic below explains how OpenShift AI supports a full AI model lifecycle, and provides a detailed view of the technologies used for each key feature.

Workbench

You can interact with your data and train models within a notebook IDE powered by Jupyter Notebook, RStudio or Visual Studio Code. These workbenches come with a variety of popular data science libraries, such as PyTorch or TensorFlow. Need to add your own libraries? No problem. Within OpenShift AI, you can customize the image used for your workbench and install Python libraries or Linux packages within your image. These workbenches can interact with different data storage locations (like S3) and take advantage of distributed training libraries such as CodeFlare and KubeRay.
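
As a rough illustration of how a workbench notebook might connect to S3-compatible storage, the sketch below gathers connection settings from environment variables and maps them to the keyword arguments that `boto3.client` accepts. The environment variable names and all example values are assumptions made for this sketch; check the variables that your own data connection actually exposes.

```python
import os

# Assumed environment variable names (not taken from this article);
# each maps to a keyword argument accepted by boto3.client.
ENV_TO_KWARG = {
    "AWS_S3_ENDPOINT": "endpoint_url",
    "AWS_ACCESS_KEY_ID": "aws_access_key_id",
    "AWS_SECRET_ACCESS_KEY": "aws_secret_access_key",
    "AWS_DEFAULT_REGION": "region_name",
}


def s3_client_kwargs(env=None):
    """Collect S3 settings that are present and non-empty in the environment."""
    env = os.environ if env is None else env
    return {
        kwarg: env[name]
        for name, kwarg in ENV_TO_KWARG.items()
        if env.get(name)
    }


# Made-up values so the sketch runs without any real credentials.
example_env = {
    "AWS_S3_ENDPOINT": "https://s3.example.com",
    "AWS_ACCESS_KEY_ID": "demo-key",
    "AWS_SECRET_ACCESS_KEY": "demo-secret",
}
kwargs = s3_client_kwargs(example_env)
print(sorted(kwargs))

# In a workbench image that includes boto3, the result could be used as:
#   import boto3
#   s3 = boto3.client("s3", **kwargs)
```

Keeping credentials in the environment rather than in the notebook itself means the same notebook can run unchanged against on-premises or cloud object storage.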

KServe

A variety of different model runtimes are available within OpenShift AI, powered by the model server KServe. This allows you to bring the runtime you need without being locked into a specific runtime environment. The custom foundational model approach (in which you bring your own foundational model) of OpenShift allows you to serve a variety of predictive and generative AI model types. This means your OpenShift AI cluster can manage and serve any model, allowing it to function as your organization's AI platform. The custom foundational model approach has become a core component of watsonx.ai V2, and it's the KServe model server on OpenShift AI that powers it.
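
To make model serving more concrete, here is a minimal sketch of a client request that follows the KServe V1 inference protocol, which accepts a POST to `/v1/models/<name>:predict` with an `instances` list. The host name, model name, and input values are hypothetical, and generative runtimes may expose a different API, so treat this as an illustration for a predictive model only.

```python
import json
import urllib.request


def build_predict_request(base_url, model_name, instances):
    """Build (but do not send) a KServe V1 protocol prediction request."""
    url = f"{base_url.rstrip('/')}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical endpoint and model name, for illustration only.
req = build_predict_request(
    "https://demo-model.example.com/", "demo-model", [[1.0, 2.0, 3.0]]
)
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))

# Sending the request needs a reachable inference endpoint:
#   with urllib.request.urlopen(req) as resp:
#       predictions = json.load(resp)["predictions"]
```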

Monitoring and security

OpenShift AI leverages much of the existing functionality of the base OpenShift platform. This includes the monitoring capabilities of the open source Prometheus tool and the role-based access control (RBAC) rules that limit users to the services designated to them. These features have been extended to include the ability to monitor the quality and drift of the models running on OpenShift AI.
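
For readers who want to pull such metrics programmatically, the following sketch builds an instant query URL for the Prometheus HTTP API (`/api/v1/query`) and parses the JSON shape that API returns for an instant vector. The Prometheus address, the metric name, and the sample response are made up for illustration; on OpenShift, a real request would also need a bearer token with suitable permissions.

```python
import json
from urllib.parse import urlencode


def instant_query_url(prometheus_url, promql):
    """Return the URL for a Prometheus instant query."""
    return f"{prometheus_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def parse_instant_vector(payload):
    """Turn an instant-vector response into (labels, value) pairs."""
    data = json.loads(payload)
    if data.get("status") != "success":
        raise ValueError(f"query failed: {data.get('error', 'unknown error')}")
    # Each sample carries its labels and a [timestamp, "value"] pair.
    return [(s["metric"], float(s["value"][1])) for s in data["data"]["result"]]


# Hypothetical metric name and Prometheus address.
promql = "sum(rate(http_requests_total[5m]))"
url = instant_query_url("https://prometheus.example.com", promql)
print(url)

# A hand-written response in the format the API returns.
sample_payload = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"model": "demo-model"}, "value": [1700000000.0, "12.5"]}
        ],
    },
})
samples = parse_instant_vector(sample_payload)
print(samples)
```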

Benefits of watsonx.ai

IBM watsonx.ai is an enterprise-grade AI studio for AI builders that helps simplify and scale the development of AI applications with easy-to-use tools and system prompts for building, deploying, and monitoring AI applications. It provides AI developers and model builders with tools, templates, and frameworks to streamline the application development process using high-quality data across the AI application lifecycle.

The watsonx.ai components include:

- Foundational models: Choose from IBM-developed Granite models, open source and third-party models, or bring your own custom foundation model

- Prompt Lab: Experiment with foundation models and build prompts

- Tuning Studio: Tune your foundation models with labeled data

- Data Science and MLOps: Build machine learning models automatically with model training, development, visual modeling and synthetic data generation

Foundational models

Watsonx.ai offers a selection of models: IBM-developed Granite models, open source models, third-party models such as those from Mistral AI and Meta's Llama family, and custom foundation models that you bring yourself.

Granite is IBM’s flagship brand of both open and proprietary large language model (LLM) foundation models, spanning multiple modalities. IBM has released a family of core Granite Code, Time Series, Language and GeoSpatial models as open source software. These are available on Hugging Face under a permissive Apache 2.0 license that enables broad, unencumbered commercial usage. All Granite models are trained on carefully curated data, with industry-leading levels of transparency about the data that went into them. Watsonx.ai offers the ability to bring your own foundational models and deploy them using these instructions.

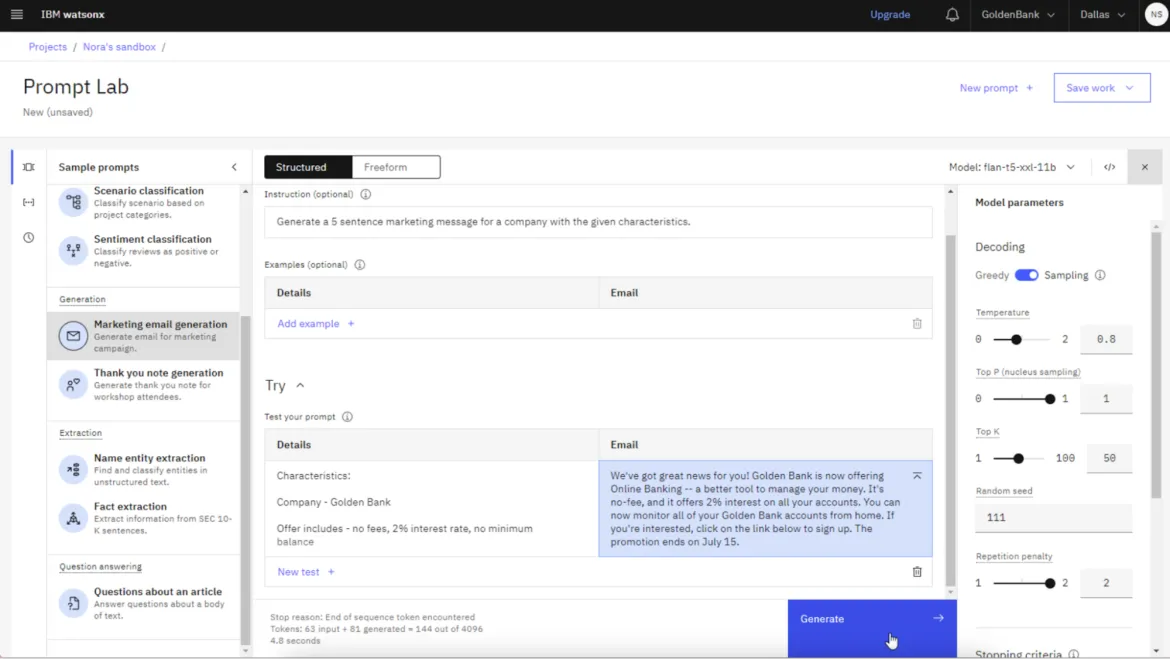

Prompt Lab

Prompt Lab is a key feature of watsonx.ai that allows AI builders to develop effective prompts for foundation models using prompt engineering techniques. Within the Prompt Lab, users can interact with foundation models in the prompt editor using the Chat, Freeform or Structured mode. These multiple options allow you to craft the best model configurations to support a range of Natural Language Processing (NLP) type tasks including question answering, content generation and summarization, text classification and extraction.
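
The idea behind a structured prompt (an instruction, a few worked examples, then the new input) can be sketched in a few lines of Python. This is only an illustration of the prompt engineering technique; the `Input:`/`Output:` layout and the example texts are assumptions, not the format Prompt Lab produces internally.

```python
def build_structured_prompt(instruction, examples, new_input):
    """Assemble a few-shot prompt: instruction, labeled examples, new input."""
    lines = [instruction.strip(), ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    # End with an open "Output:" so the model completes the label.
    lines += [f"Input: {new_input}", "Output:"]
    return "\n".join(lines)


prompt = build_structured_prompt(
    "Classify the sentiment of each message as positive or negative.",
    [
        ("The upgrade went smoothly.", "positive"),
        ("The service was down all morning.", "negative"),
    ],
    "Support resolved my ticket within an hour.",
)
print(prompt)
```

The resulting string is what would be sent to a foundation model for a text classification task; swapping the instruction and examples adapts the same pattern to extraction or summarization.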

Tuning Studio

Watsonx.ai Tuning Studio helps users customize and optimize foundation models for specific business needs. Using techniques like prompt tuning, you can tune your foundation models with labeled data for better performance and accuracy.
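
Labeled data for tuning is usually a set of input and output pairs. The sketch below writes such pairs as JSON Lines, one example per line. The `input` and `output` field names and the sample records are assumptions for this illustration; confirm the exact schema against the Tuning Studio documentation before preparing a real dataset.

```python
import io
import json


def write_tuning_examples(pairs, stream):
    """Write (input, output) pairs to a stream as one JSON object per line."""
    for text, label in pairs:
        stream.write(json.dumps({"input": text, "output": label}) + "\n")


# Made-up labeled examples for a classification task.
pairs = [
    ("The delivery arrived two weeks late.", "complaint"),
    ("Thanks, the upgrade went smoothly.", "praise"),
]

buffer = io.StringIO()  # stands in for a file opened with open(path, "w")
write_tuning_examples(pairs, buffer)
jsonl_text = buffer.getvalue()
print(jsonl_text)
```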

Data Science and MLOps

IBM watsonx.ai provides all the tools, pipelines, and runtimes that a business needs to support building ML models automatically. You can automate your entire AI model lifecycle, from development to deployment, with connections to a variety of application programming interfaces (API), software development kits (SDK) and libraries.

Install watsonx.ai on OpenShift

The OpenShift AI repository has detailed instructions for a quick installation of watsonx.ai on OpenShift AI for a proof-of-concept demonstration. You can have a model such as one from Meta's Llama 3 series, Mistral AI, or IBM's Granite family, or another commercial, open source, or custom model, up and running on watsonx.ai on OpenShift AI.

Sample application leveraging watsonx.ai

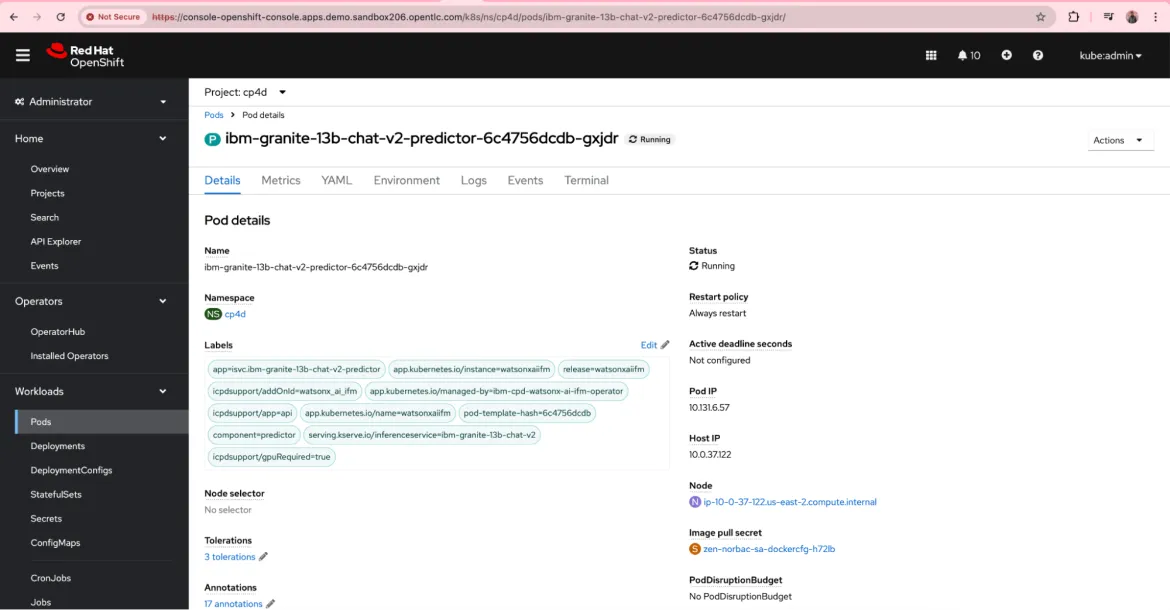

Retrieval-augmented generation (RAG) is an AI framework for improving the quality of LLM-generated responses by grounding the model on external sources of knowledge that supplement the LLM's internal representation of information. You can see a sample application that uses RAG in the example repository contributed on GitHub by Ruslan Magana Vsevolodovna of IBM. In our sample, we used the IBM granite-13b-chat-v2 foundation model, but you can use other foundation models in watsonx.ai (such as Meta's llama-3-70b-instruct or Mistral AI's Mistral Large 2).

The foundation model can run on Red Hat OpenShift, or can be accessed using the SaaS option. For our demonstration, we ran an instance of PostgreSQL with the pgvector extension on OpenShift and used it as a vector store. One approach to integrating large language models into your applications is to use LangChain.
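
To show what a vector store does during retrieval, here is a toy, in-memory sketch that ranks stored texts by cosine similarity to a query embedding. It only stands in for the kind of similarity search pgvector performs inside PostgreSQL; the three-dimensional vectors are hand-written placeholders, whereas a real application would obtain embeddings from an embedding model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, store, k=2):
    """Return the k stored texts most similar to the query vector."""
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


# (text, embedding) pairs with made-up embeddings.
store = [
    ("A vector database stores embeddings.", [0.9, 0.1, 0.0]),
    ("OpenShift runs containers.", [0.0, 0.2, 0.9]),
    ("pgvector adds vector search to PostgreSQL.", [0.8, 0.3, 0.1]),
]

query_vec = [1.0, 0.2, 0.0]  # placeholder embedding of the user's question
hits = top_k(query_vec, store)
print(hits)
```

The texts returned by this step are what a RAG application passes to the LLM as grounding context.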

Here's an example response to a RAG query:

```python
from langchain.chains import RetrievalQA

# watsonx_granite (the LLM) and db (the vector store) are created
# earlier in the sample application.
qa = RetrievalQA.from_chain_type(
    llm=watsonx_granite,
    chain_type="stuff",
    retriever=db.as_retriever(),
)

query = "What is a vector database?"
qa.run(query)
```

```
"A vector database is a database that can store vectors (fixed-length lists of numbers) along with other data items."
```
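
The `chain_type="stuff"` setting means that all retrieved documents are placed ("stuffed") into a single prompt together with the question. The sketch below shows that idea in plain Python. The template wording here is invented for illustration and is not the template LangChain actually uses.

```python
def stuff_prompt(question, documents):
    """Place every retrieved document into one prompt alongside the question."""
    context = "\n\n".join(documents)
    return (
        "Use the following context to answer the question.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical retrieved passages.
retrieved = [
    "A vector database stores embeddings.",
    "pgvector adds vector search to PostgreSQL.",
]
rag_prompt = stuff_prompt("What is a vector database?", retrieved)
print(rag_prompt)
```

This approach is simple and works well when the retrieved passages fit comfortably within the model's context window.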

Learn more about OpenShift AI and download solution patterns here. If you're interested in learning more about watsonx.ai, you can try watsonx.ai for free in a trial or demo experience.

This post was written with the help of Ruslan Magana Vsevolodovna (IBM).

References

- https://www.redhat.com/en/technologies/cloud-computing/openshift/openshift-ai

- https://www.redhat.com/en/blog/the-power-of-ai-is-open

- https://www.ibm.com/products/watsonx-ai

- https://github.com/rh-aiservices-bu/llm-on-openshift/blob/main/pgvector_deployment/README.md

- https://research.ibm.com/blog/retrieval-augmented-generation-RAG

- https://newsletter.nocode.ai/p/guide-retrieval-augmented-generation

- https://newsletter.nocode.ai/p/tutorial-chat-documents

- https://cdrdv2-public.intel.com/821840/OpenShift-AI-Sol-Brief-V3.pdf

About the authors

With his experience of being a customer of OpenShift as well as prior experience of working on IBM software, Santosh helps customers with their Hybrid Cloud adoption journey.

Christopher Nuland is a cloud architect for Red Hat services. He helps organizations with their cloud-native migrations, with a focus on the Red Hat OpenShift product.
